Problem statement: Implement a back propagation algorithm using any programming language

- 1. Structure of the program

- 2. Data Structures

- 2.1. class Neuron

- 2.2. class Layer

- 2.3. class NeuralNetwork

- 3. The Algorithm

- 3.1. The Weight Matrix

- 3.2. The Forward Pass

- 3.3. The Backward Pass

- 3.4. The Training

- 4. How to run

The program is written in Python. Python has numerous supporting libraries such as 'NumPy' and 'Pandas', which make many mathematical operations efficient and avoid explicit loops.

The program is designed so that you need to make as few changes as possible to customize the neural network to your needs.

You can define your own network structure in JSON format (some sample files are provided): specify the number of layers, the number of neurons in each layer, and the activation function of your choice, and the neural network will be built and initialized accordingly. All you need to do is pass the file path of the network-structure JSON file when creating the network.
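As a rough illustration, a network-structure file could be read as shown below. The key names ('learning_rate', 'dataset_path', 'layers', 'type', 'num_units', 'activation') and the dataset path are assumptions made for this sketch; the sample files shipped with the project define the actual schema.

```python
import json

# Hypothetical configuration: the key names and the path are illustrative
# assumptions, not the project's real schema.
config_text = """
{
  "learning_rate": 0.1,
  "dataset_path": "data/example.csv",
  "layers": [
    {"type": "i", "num_units": 4},
    {"type": "h", "num_units": 5, "activation": "sigmoid"},
    {"type": "o", "num_units": 3, "activation": "sigmoid"}
  ]
}
"""

config = json.loads(config_text)

# Collect the size of each layer in order, from input to output.
layer_sizes = [layer["num_units"] for layer in config["layers"]]
layer_types = [layer["type"] for layer in config["layers"]]
print(layer_sizes, layer_types)
```

In a real run, the text would come from a file opened with the path passed to the network constructor rather than from an inline string.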

The code has three important modules:

- BackProp.py: All the algorithms, data structures, class definitions are present here

- Runner.py: A module to run the algorithm

- activation_functions.py: A module containing various different activation function definitions, e.g., sigmoid(), tanh(), relu(), etc.
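A minimal sketch of what such an activation module might contain is given below. The function names follow the ones listed above, but the bodies and the 'ACTIVATIONS' lookup table are assumptions for illustration, not the project's actual code.

```python
import numpy as np

def sigmoid(x):
    # Logistic function: squashes any real value into (0, 1).
    return 1.0 / (1.0 + np.exp(-x))

def tanh(x):
    # Hyperbolic tangent: squashes any real value into (-1, 1).
    return np.tanh(x)

def relu(x):
    # Rectified linear unit: zero for negative inputs, identity otherwise.
    return np.maximum(0.0, x)

# Hypothetical lookup table mapping a name from the JSON file to a function.
ACTIVATIONS = {"sigmoid": sigmoid, "tanh": tanh, "relu": relu}
```

A name-to-function table like this is one way to let the JSON file select an activation by name.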

Now let's look at the data structures and class definitions.

The three important data structures created for the program are 'Neuron', 'Layer', and 'NeuralNetwork'.

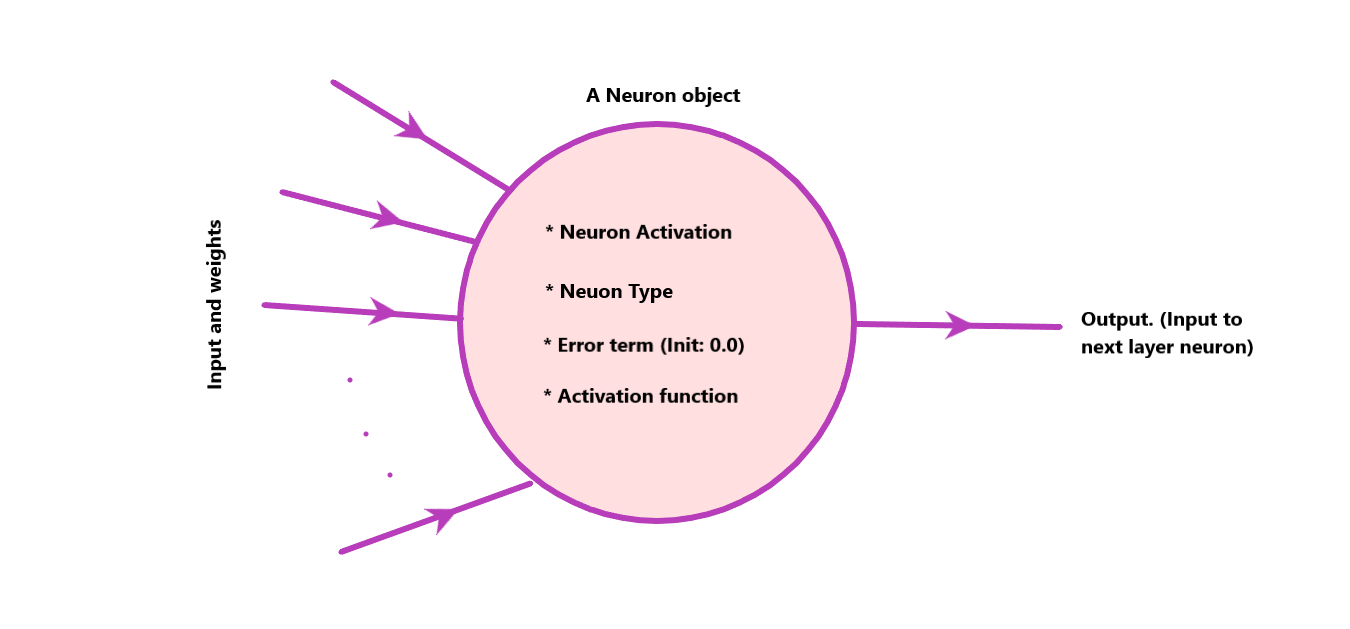

Figure 1. The Neuron object

A Neuron is the basic unit of every network layer. It is a simple unit that is connected to all the neurons of the previous layer and of the next layer through weighted edges. It has the following attributes: the activation value (the value obtained after applying the activation function to the weighted sum), the activation function (the function applied to the weighted sum of inputs and corresponding weights), the error term (the value of the error term 'delta'), and the neuron type (input-layer neuron or other). A neuron has the following methods:

- activate_neuron(input_vector, weights, bias_value): Depending on the type of neuron (input, hidden, or output), it calculates the weighted sum of 'input_vector' and 'weights' (using np.dot()) and applies the activation function, e.g. sigmoid(), to the result. The final result is stored in the neuron's 'activation' attribute.

- Setters and Getters
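The description above can be sketched roughly as follows. The attribute names and the pass-through behaviour for input neurons are assumptions made for this illustration; the actual class in 'BackProp.py' may differ.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

class Neuron:
    def __init__(self, activation_function=sigmoid, is_input=False):
        self.activation_function = activation_function
        self.is_input = is_input   # neuron type: input-layer neuron or other
        self.activation = 0.0      # value after applying the activation function
        self.error_term = 0.0      # the 'delta' used in the backward pass

    def activate_neuron(self, input_vector, weights=None, bias_value=0.0):
        if self.is_input:
            # Assumption: an input neuron simply passes its input value through.
            self.activation = float(input_vector)
        else:
            # Weighted sum of inputs and weights, then the activation function.
            weighted_sum = np.dot(input_vector, weights) + bias_value
            self.activation = float(self.activation_function(weighted_sum))
        return self.activation

# Usage: the weighted sum is 1.0*0.5 + 2.0*(-0.25) = 0.0, and sigmoid(0.0) = 0.5.
hidden = Neuron()
result = hidden.activate_neuron(np.array([1.0, 2.0]), np.array([0.5, -0.25]), 0.0)
```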

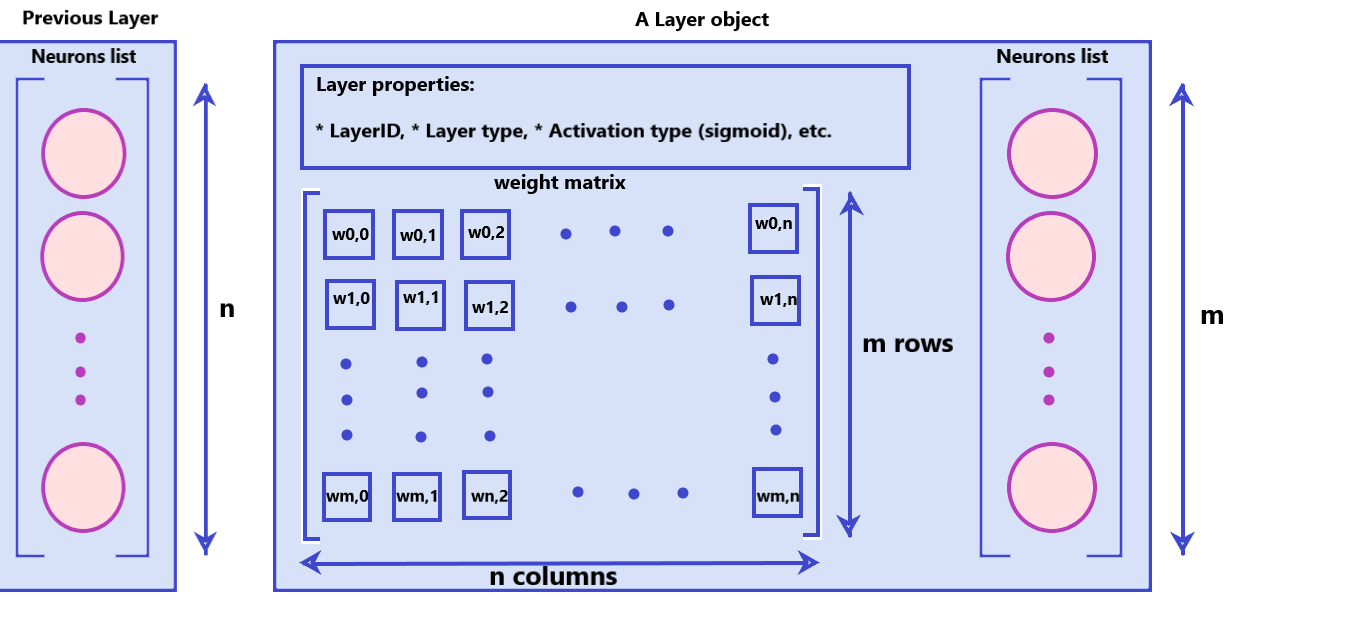

A 'Layer' object is a collection of 'Neuron' objects, weight matrix between current layer and 'previous' layer, and various static layer attributes. Following are the 'Layer' attributes:

- layer_type: Type of layer. Input, Hidden or Output ('i', 'h', 'o' respectively).

- num_units: No. of neurons in the layer.

- prev_layer_neurons: No. of neurons in previous layer (used to initialize weight matrix).

- neurons: List of Neurons for this layer

- weight_matrix: The weight matrix between current layer object and previous layer object.

- Other static attributes like layer_ID, etc.

A layer has crucial auxiliary functions that are used in forward and backward propagation. The important layer methods are:

- get_neuron_activations(): Returns a 1D numpy vector with all the activation values of the neurons.

- get_neuron_error_terms(): Returns a 1D numpy vector with all the error term values of the neurons.

- get_weights_for_neuron(index): Gives a vector of weights connected to the particular neuron at position 'index' in the list of neurons.

- calculate_error_terms(is_output, resource): Calculates and sets the error term of every neuron in the layer, depending on the value of 'is_output'. If 'is_output' is True, the error terms are calculated using the output-layer formula; otherwise the hidden-layer formula is used. 'resource' is the packet of data needed to calculate the error term values.

- update_weights(X, DELTA, learning_rate): Updates the weight matrix (used in backward pass). 'X' is the input vector to the layer and 'DELTA' is the vector of error terms.

- Other setters and getters.
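The error-term and weight-update steps can be sketched as plain functions, as below. This assumes sigmoid units and the standard formulas from Mitchell's algorithm (output units: o(1 - o)(t - o); hidden units: o(1 - o) times the weighted sum of the next layer's error terms). The function names and the omission of bias handling are simplifications for illustration, not the project's actual methods.

```python
import numpy as np

def output_error_terms(outputs, targets):
    # delta_k = o_k * (1 - o_k) * (t_k - o_k) for every output unit k.
    return outputs * (1.0 - outputs) * (targets - outputs)

def hidden_error_terms(activations, next_weights, next_deltas):
    # next_weights has shape (units in next layer, units in this layer),
    # so its transpose gathers, for each unit here, the weights leaving it.
    return activations * (1.0 - activations) * (next_weights.T @ next_deltas)

def update_weights(weight_matrix, X, DELTA, learning_rate):
    # w_ji <- w_ji + learning_rate * delta_j * x_i, for all j and i at once.
    return weight_matrix + learning_rate * np.outer(DELTA, X)

delta_out = output_error_terms(np.array([0.5]), np.array([1.0]))
delta_hid = hidden_error_terms(np.array([0.5, 0.5]), np.array([[2.0, -4.0]]), delta_out)
new_W = update_weights(np.zeros((1, 2)), np.array([1.0, 2.0]), delta_out, 0.1)
```

Using np.outer() updates the whole matrix in one step instead of looping over individual weights.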

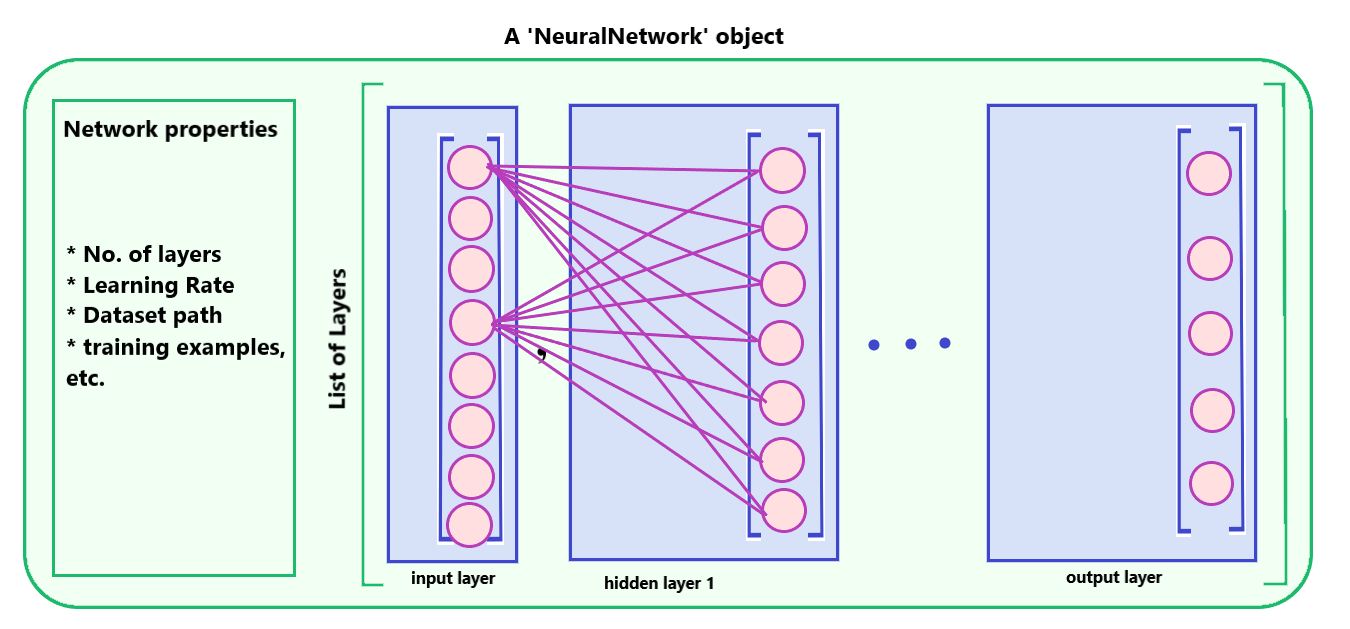

NeuralNetwork is the final structure, consisting of the list of layers. The entire training process takes place here. The network is constructed from the JSON file supplied when an instance of this class is created. It is initialized by building a list of layers according to the provided configuration and then setting the appropriate network properties. The dataset is also loaded during object construction, from the path supplied in the JSON file. All you need to do is call the train_network() method. The methods are described as follows:

- forward_pass(input_vector): The forward pass of the backpropagation algorithm.

- backward_pass(target_output_vector): The backward pass of the back-propagation algorithm.

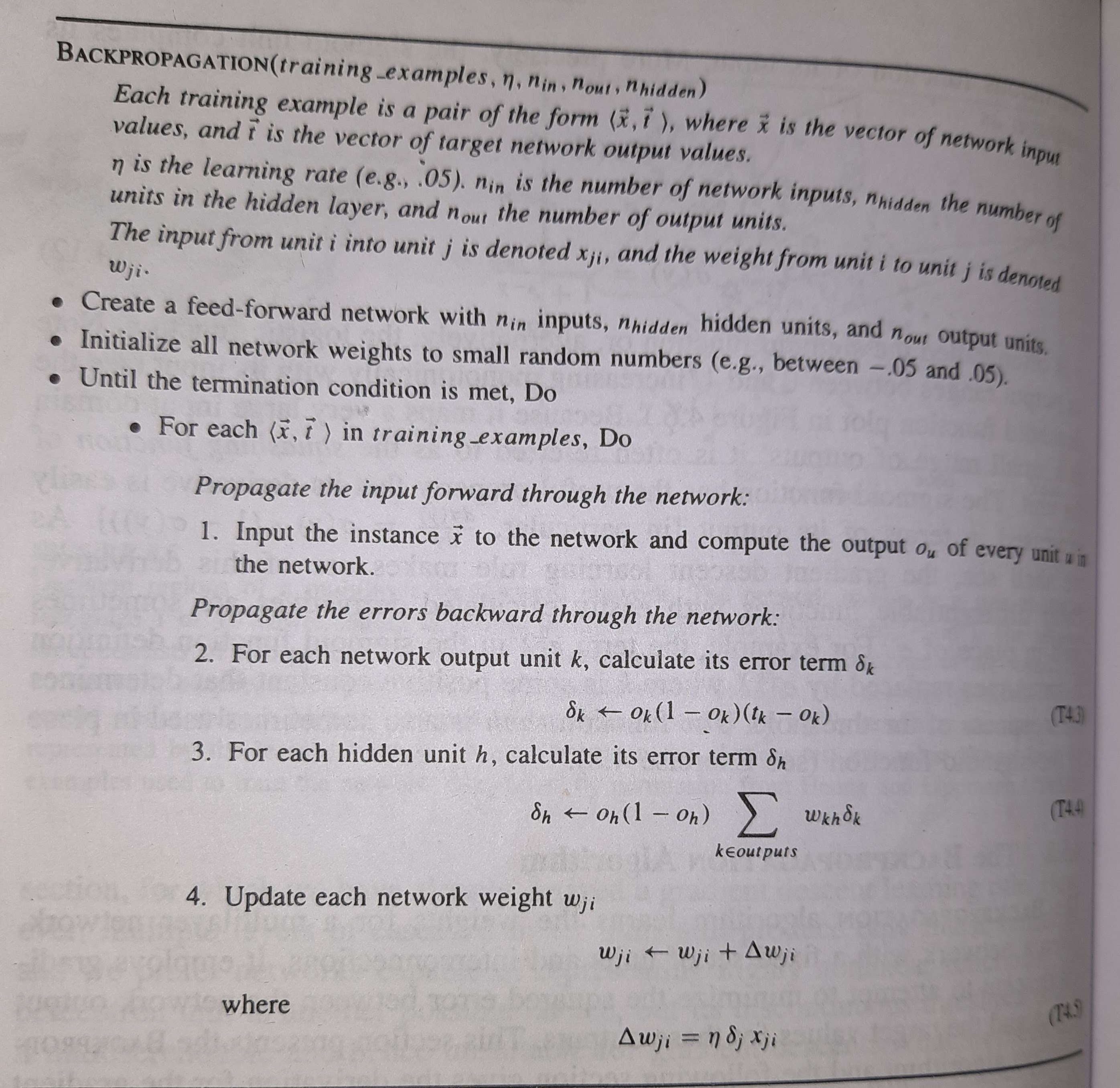

- train_network(): The stochastic gradient descent version of backpropagation algorithm (Referred from Machine Learning - Tom Mitchell 4th ed.)

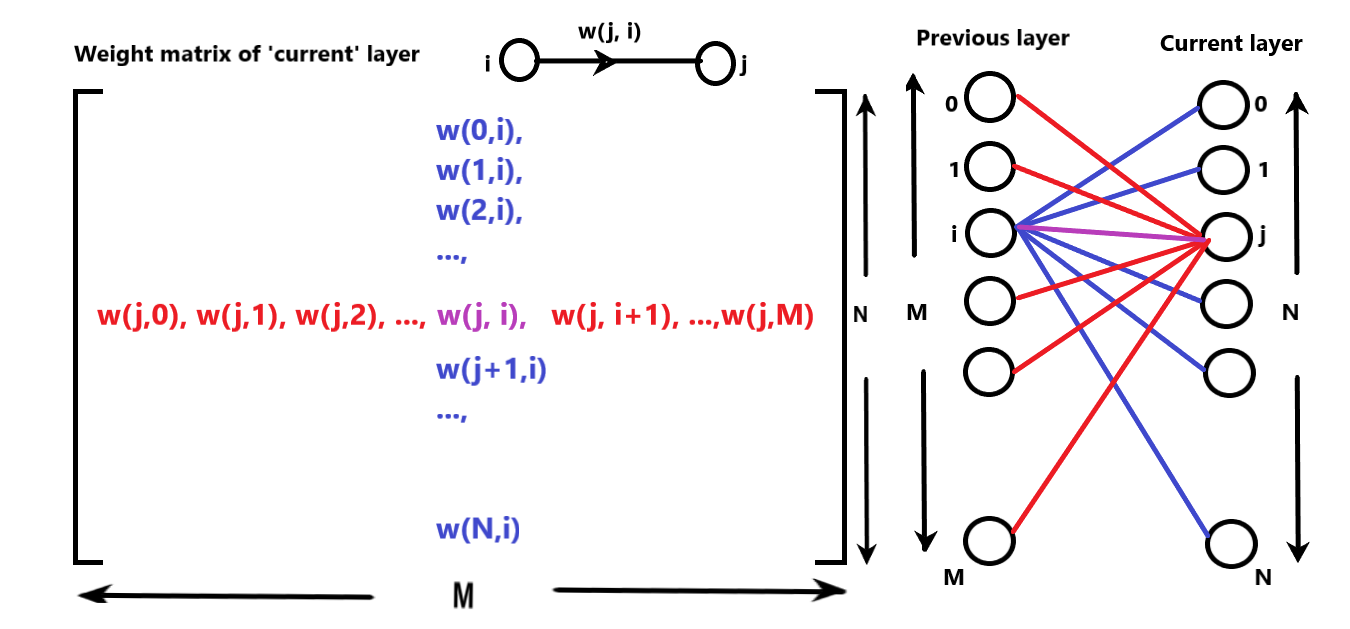

The weight matrix is one of the most fundamental structures in this algorithm. The notation follows the original algorithm in Machine Learning by Tom Mitchell. 'W(j, i)' is the weight on the edge directed from neuron 'i' of the previous layer to neuron 'j' of the current layer. The following illustration clarifies this.

Figure 5. The structure of a weight matrix

Consider all the weights as directed from the previous layer to the current layer.

The kth 'row' of the weight matrix is the vector of all the weights from the previous layer that are connected to the kth neuron in the current layer (in red).

The lth 'column' of the weight matrix is the vector of the weights from the lth neuron of the previous layer to all the neurons in the current layer (in blue).
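The row and column convention can be checked with a small NumPy example. The layer sizes and the weight values here are arbitrary and chosen only so that each entry is easy to recognise.

```python
import numpy as np

prev_layer_neurons = 3   # neurons in the previous layer (columns)
num_units = 2            # neurons in the current layer (rows)

# W[j, i] is the weight from neuron i of the previous layer
# to neuron j of the current layer.
W = np.arange(6, dtype=float).reshape(num_units, prev_layer_neurons)
# W is [[0, 1, 2],
#       [3, 4, 5]]

row_k = W[1, :]   # all weights entering neuron 1 of the current layer
col_l = W[:, 2]   # all weights leaving neuron 2 of the previous layer
```

With this layout, the weighted sums of a whole layer are a single matrix-vector product of 'W' with the previous layer's activations.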

The forward pass is the phase of the algorithm in which the input signals propagate from the input layer to the output layer. At every neuron, the activation value is calculated by taking the weighted sum of the inputs with the corresponding weights and applying the activation function to the result. The activations calculated at the output layer are then used to compute the error.

The forward_pass(input_vector) method:

The activations are calculated for every layer in the network, starting from the input layer. The input vector is applied to the input layer, and from the next layer onwards the weighted sum is calculated for every neuron, using that neuron's 'row' of the weight matrix. These activations are collected in a temporary vector 'temp', which becomes the input to the next layer. This process continues until the last layer.

After the forward pass, the error is calculated at the output layer. The layer just before 'this' layer is responsible for the error at 'this' layer, so the error is distributed among the neurons of the previous layer according to their weights. This gives the error for the previous layer, which in turn is due to the layer before it. In this way the error signals are propagated backwards, from the output layer towards the input layer.
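A vectorised sketch of this loop is given below. It works on plain weight matrices and bias vectors rather than on the 'Layer' and 'Neuron' objects, and it assumes sigmoid activations throughout; both are simplifications for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def forward_pass(input_vector, weight_matrices, biases):
    # 'temp' holds the activations of the layer just computed and is
    # the input to the next layer.
    temp = np.asarray(input_vector, dtype=float)
    activations = [temp]              # input-layer activations are the inputs
    for W, b in zip(weight_matrices, biases):
        temp = sigmoid(W @ temp + b)  # row k of W produces neuron k's sum
        activations.append(temp)
    return activations

# Usage on a 3-2-1 network with all-zero weights: every unit outputs 0.5.
weights = [np.zeros((2, 3)), np.zeros((1, 2))]
biases = [np.zeros(2), np.zeros(1)]
acts = forward_pass([1.0, 2.0, 3.0], weights, biases)
```

Returning the activations of every layer, not just the last one, keeps the values the backward pass needs.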

The backward_pass(target_output_vector) method:

For all the layers, going from the output layer back towards the input layer, the error terms are calculated first, according to the formulae in the algorithm. Only after all the error terms have been calculated are the weight matrices of all the layers updated. (Read the code for better insight.)
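The two phases (all error terms first, then all weight updates) can be sketched as follows. The sketch assumes sigmoid units, omits biases, and takes a list 'activations' holding the input vector followed by each layer's outputs. These choices are assumptions for illustration, not the signature of the project's backward_pass().

```python
import numpy as np

def backward_pass(activations, weight_matrices, target_output_vector, learning_rate):
    target = np.asarray(target_output_vector, dtype=float)

    # Phase 1: error terms, from the output layer backwards.
    out = activations[-1]
    deltas = [out * (1.0 - out) * (target - out)]
    for idx in range(len(weight_matrices) - 1, 0, -1):
        a = activations[idx]
        # weight_matrices[idx] connects layer idx to layer idx + 1.
        deltas.insert(0, a * (1.0 - a) * (weight_matrices[idx].T @ deltas[0]))

    # Phase 2: update every weight matrix using the old weights' error terms.
    return [W + learning_rate * np.outer(d, x)
            for W, d, x in zip(weight_matrices, deltas, activations[:-1])]

# Usage on a 2-1-1 network with hand-picked activations.
acts = [np.array([1.0, 0.0]), np.array([0.5]), np.array([0.5])]
old = [np.zeros((1, 2)), np.array([[2.0]])]
new = backward_pass(acts, old, [1.0], learning_rate=1.0)
```

Computing every error term before touching any weight ensures the hidden-layer error terms are based on the weights used in the forward pass.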

Each training example is a tuple <'input_vector', 'target_output_vector'>. The 'input_vector' is given to forward_pass() and the 'target_output_vector' to backward_pass(), and this procedure is repeated for every example in the training dataset.

- Configure your network in a JSON file (refer to one of the sample files provided in the 'Network_structures' directory). Add the path of the dataset (an absolute path is recommended, but a path relative to the directory containing 'Runner.py' is also acceptable). Keeping the data files in the 'data' directory makes things less messy.
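The structure of this loop can be sketched as below. The 'epochs' parameter and passing the two passes in as callables are assumptions made to keep the example self-contained; the project's train_network() is a method of 'NeuralNetwork' and may use a different stopping condition.

```python
def train_network(examples, forward_pass, backward_pass, epochs=1):
    # Stochastic gradient descent: the weights are adjusted after every
    # single example rather than once per pass over the dataset.
    for _ in range(epochs):
        for input_vector, target_output_vector in examples:
            forward_pass(input_vector)
            backward_pass(target_output_vector)

# Usage with stand-in callables that only record the order of the calls.
log = []
examples = [("x1", "t1"), ("x2", "t2")]
train_network(examples,
              forward_pass=lambda x: log.append(("forward", x)),
              backward_pass=lambda t: log.append(("backward", t)),
              epochs=2)
```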

- Open a terminal and run:

  python Runner.py

- There is an additional module, 'dataset_creator.py', for creating a dataset. It creates a random block of 1's and 0's with the specified dimensions. The Iris dataset is also included.
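A random block of 1's and 0's of a given size can be generated in a few lines with NumPy. The function name and its parameters below are hypothetical; they are not taken from 'dataset_creator.py'.

```python
import numpy as np

def create_random_binary_dataset(num_rows, num_columns, seed=None):
    # Hypothetical helper: returns a num_rows x num_columns array whose
    # entries are drawn uniformly from {0, 1}.
    rng = np.random.default_rng(seed)
    return rng.integers(0, 2, size=(num_rows, num_columns))

data = create_random_binary_dataset(5, 4, seed=0)
```

Passing a fixed 'seed' makes the generated block reproducible between runs.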

If you find any discrepancies, kindly suggest how to rectify them. Thank you.