CitySurfaces is a framework that combines active learning and semantic segmentation to locate, delineate, and classify sidewalk paving materials from street-level images. It adopts a recent high-performing semantic segmentation model (Tao et al., 2020) that uses hierarchical multi-scale attention combined with object-contextual representations.

The framework was presented in our paper published in the journal Sustainable Cities and Society (a preprint is also available on arXiv).

CitySurfaces: City-scale semantic segmentation of sidewalk materials

Maryam Hosseini, Fabio Miranda, Jianzhe Lin, Claudio T. Silva

Sustainable Cities and Society, 2022

@article{HOSSEINI2022103630,

title = {CitySurfaces: City-scale semantic segmentation of sidewalk materials},

journal = {Sustainable Cities and Society},

volume = {79},

pages = {103630},

year = {2022},

issn = {2210-6707},

doi = {https://doi.org/10.1016/j.scs.2021.103630},

url = {https://www.sciencedirect.com/science/article/pii/S2210670721008933},

author = {Maryam Hosseini and Fabio Miranda and Jianzhe Lin and Claudio T. Silva},

keywords = {Sustainable built environment, Surface materials, Urban heat island, Semantic segmentation, Sidewalk assessment, Urban analytics, Computer vision}

}

You can use our pre-trained model to run inference on your own street-level images. Our extended model can classify eight classes of paving materials.

The team includes:

- Maryam Hosseini (Rutgers University / New York University)

- Fabio Miranda (University of Illinois at Chicago)

- Jianzhe Lin (New York University)

- Cláudio T. Silva (New York University)

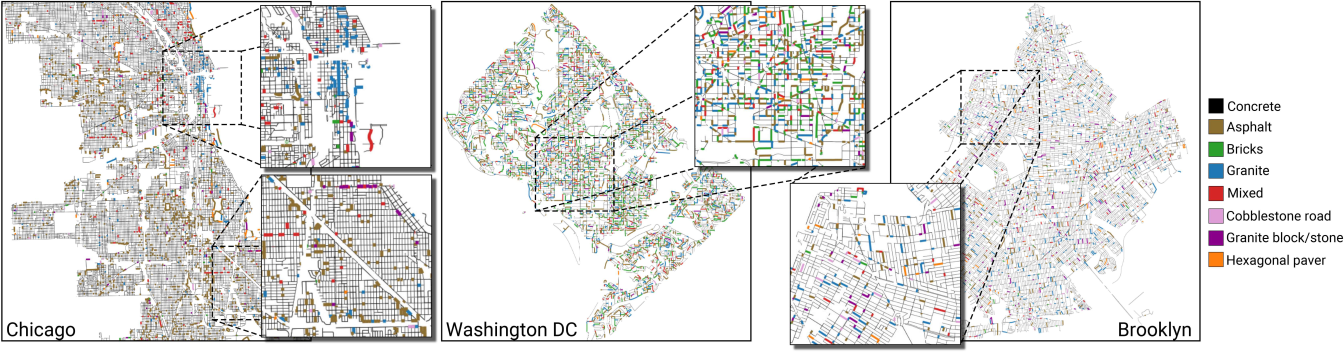

New weights from our updated model, trained on more cities (now including DC, Chicago, and Philadelphia), are available on our Google Drive.

The framework is based on NVIDIA Semantic Segmentation. The code has been tested with PyTorch 1.7 and Python 3.9. You can use ./Dockerfile to build an image.
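If you are not using the Docker image, it may help to compare your local environment against the tested versions before running anything. The snippet below is a minimal sketch, not part of the repository: the helper names `installed_torch_version` and `matches_tested` are hypothetical, and it only compares leading version components against the versions stated above.

```python
import sys
from importlib import metadata

# Versions this README states the code was tested with.
TESTED_PYTHON = (3, 9)
TESTED_TORCH = (1, 7)


def installed_torch_version():
    """Return the installed torch version string, or None if torch is absent."""
    try:
        return metadata.version("torch")
    except metadata.PackageNotFoundError:
        return None


def matches_tested(version, tested):
    """True if the leading components of `version` equal the `tested` tuple."""
    # Drop a local build suffix such as "+cu110" before splitting.
    parts = version.split("+")[0].split(".")
    try:
        leading = tuple(int(p) for p in parts[: len(tested)])
    except ValueError:
        return False
    return leading == tested


if __name__ == "__main__":
    py = ".".join(str(n) for n in sys.version_info[:3])
    print(f"Python {py}: matches tested version = {matches_tested(py, TESTED_PYTHON)}")
    torch_version = installed_torch_version()
    if torch_version is None:
        print("PyTorch is not installed")
    else:
        ok = matches_tested(torch_version, TESTED_TORCH)
        print(f"PyTorch {torch_version}: matches tested version = {ok}")
```

A mismatch does not necessarily mean the code will fail; it only means you are outside the configuration the code was tested with.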

Follow the instructions below to segment your own images. Most of the steps follow NVIDIA's original instructions, with modifications to the weights and dataset names.

- Create a directory where you can keep large files.

> mkdir <large_asset_dir>

- Update `__C.ASSETS_PATH` in `config.py` to point at that directory.

> __C.ASSETS_PATH=<large_asset_dir>

- Download our pretrained weights from Google Drive. Weights should be under `<large_asset_dir>/seg_weights`.
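The directory layout described in these steps can be sketched in Python as follows. This is an illustrative sketch under stated assumptions: `prepare_asset_dir` and `list_weight_files` are hypothetical helpers, not part of the repository, and the demo uses a temporary directory and a made-up file name in place of your real `<large_asset_dir>` and the downloaded weights.

```python
import tempfile
from pathlib import Path


def prepare_asset_dir(large_asset_dir):
    """Create <large_asset_dir>/seg_weights if needed and return its path."""
    weights_dir = Path(large_asset_dir) / "seg_weights"
    weights_dir.mkdir(parents=True, exist_ok=True)
    return weights_dir


def list_weight_files(weights_dir):
    """Return the sorted names of the files found in the weights directory."""
    return sorted(p.name for p in Path(weights_dir).iterdir() if p.is_file())


if __name__ == "__main__":
    # A temporary directory stands in for <large_asset_dir> in this demo.
    with tempfile.TemporaryDirectory() as tmp:
        weights_dir = prepare_asset_dir(Path(tmp) / "large_assets")
        # Placeholder file standing in for the weights downloaded from Google Drive.
        (weights_dir / "example_weights.pth").write_bytes(b"")
        print("Weights directory:", weights_dir)
        print("Files found:", list_weight_files(weights_dir))
```

An empty list from `list_weight_files` would suggest that the weights were saved somewhere other than the `seg_weights` subdirectory.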

The instructions below make use of a tool called runx, which we find useful to help automate experiment running and summarization. For more information about this tool, please see runx.

In general, you can either use the runx-style command lines shown below or call `python train.py <args ...>` directly.

Update `inference-citysurfaces.yml` in the `scripts` directory with the path to the folder of images you want to run inference on.
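Before pointing the configuration at a folder, you may want to confirm that the folder exists and actually contains images. The sketch below is one way to do that. It does not read or modify the YAML file, since its keys are not described here; `find_images` is a hypothetical helper, and the extension set is an assumption you should adjust to your data.

```python
import tempfile
from pathlib import Path

# Assumed set of image extensions; adjust to match your own files.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def find_images(folder):
    """Return the sorted image files directly inside `folder`."""
    folder = Path(folder)
    if not folder.is_dir():
        raise NotADirectoryError(f"{folder} is not a directory")
    return sorted(
        p for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS
    )


if __name__ == "__main__":
    # A temporary folder with placeholder files stands in for your image folder.
    with tempfile.TemporaryDirectory() as tmp:
        for name in ("street_001.jpg", "street_002.PNG", "notes.txt"):
            (Path(tmp) / name).write_bytes(b"")
        images = find_images(tmp)
        print(f"Found {len(images)} image(s):", [p.name for p in images])
```

The check is non-recursive by design, so images in nested subfolders are not counted.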

Run

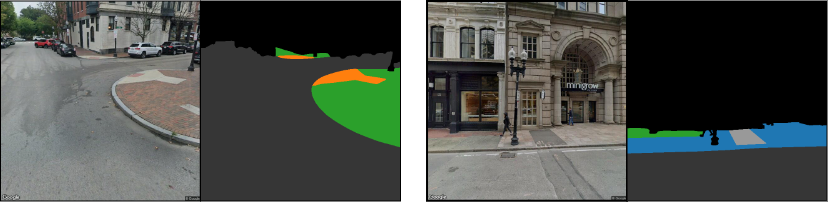

> python -m runx.runx scripts/inference-citysurfaces.yml -iThe results should look like the below examples, where you have your input image and segmentation mask, side by side.