Hierarchical Dialogue Understanding with Special Tokens and Turn-level Attention, ICLR 2023, Tiny Papers

https://arxiv.org/abs/2305.00262

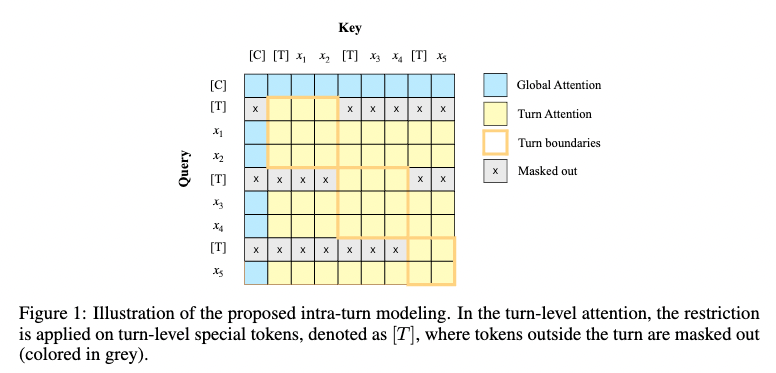

This is the official code repository for our ICLR 2023 Tiny Paper. In this paper, we propose a simple but effective hierarchical dialogue understanding model, HiDialog. We first insert multiple special tokens into a dialogue and propose turn-level attention to learn turn embeddings hierarchically. A heterogeneous graph module then refines the learned embeddings.
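The idea of special tokens plus turn-level attention can be illustrated with a small, dependency-free sketch. This is not the repository's implementation: the `[TURN]` marker, the function names, and the exact masking rule (each special token attends only to positions in its own turn, ordinary tokens attend everywhere) are assumptions made for illustration.

```python
from typing import List, Tuple

# Hypothetical marker; the repository may use a different special token.
TURN_TOKEN = "[TURN]"


def insert_turn_tokens(turns: List[List[str]]) -> Tuple[List[str], List[int]]:
    """Flatten a dialogue, placing one special token in front of every turn.

    Returns the flat token list and, for each position, the index of its turn.
    """
    tokens: List[str] = []
    turn_ids: List[int] = []
    for turn_index, turn in enumerate(turns):
        tokens.append(TURN_TOKEN)
        turn_ids.append(turn_index)
        for word in turn:
            tokens.append(word)
            turn_ids.append(turn_index)
    return tokens, turn_ids


def turn_level_mask(tokens: List[str], turn_ids: List[int]) -> List[List[bool]]:
    """Build a mask where mask[i][j] says whether position i may attend to j.

    Assumed rule: a special token sees only its own turn; other tokens see all.
    """
    size = len(tokens)
    mask: List[List[bool]] = []
    for i in range(size):
        if tokens[i] == TURN_TOKEN:
            row = [turn_ids[i] == turn_ids[j] for j in range(size)]
        else:
            row = [True] * size
        mask.append(row)
    return mask


dialogue = [["Speaker1:", "hi"], ["Speaker2:", "hello", "there"]]
tokens, turn_ids = insert_turn_tokens(dialogue)
mask = turn_level_mask(tokens, turn_ids)
```

In a real model, a mask like this would be passed to the attention layers so that the hidden state at each special-token position summarizes a single turn.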

Our experiments were conducted with the following core packages:

- PyTorch 1.11.0

- CUDA 11.6

- dgl-cuda11.3 0.8.2

- sklearn
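To compare a local environment against the versions listed above, a short check along these lines may help. It is only a sketch: the helper name `report_versions` is hypothetical, and it assumes the packages are importable as `torch`, `dgl`, and `sklearn`.

```python
import importlib
import importlib.util


def report_versions(module_names):
    """Map each module name to its version string, or None if it is not installed."""
    versions = {}
    for name in module_names:
        if importlib.util.find_spec(name) is None:
            versions[name] = None
            continue
        try:
            module = importlib.import_module(name)
        except Exception:
            # Installed but not importable, e.g. a CUDA or binary mismatch.
            versions[name] = "import failed"
            continue
        versions[name] = getattr(module, "__version__", "unknown")
    return versions
```

Calling `report_versions(["torch", "dgl", "sklearn"])` returns a dictionary that can be compared by eye with the list above. The CUDA version PyTorch was built against is available separately as `torch.version.cuda`.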

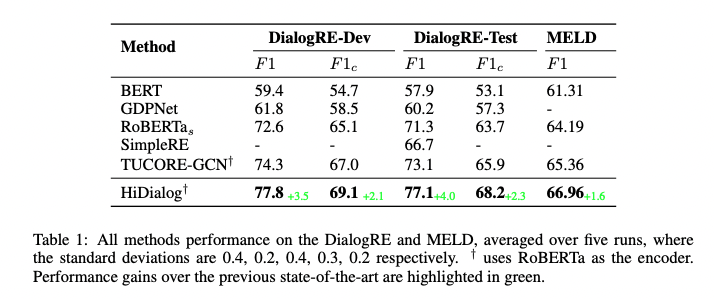

Main Results

Reproducibility

To reproduce the training process for our main experiments on DialogRE:

- download RoBERTa and unzip it to `pre-trained_model/RoBERTa/`

- download `config.json`, `merges.txt` and `vocab.json` from here, and put them in `pre-trained_model/RoBERTa/`

- download DialogRE

- copy the `*.json` files into `datasets/DialogRE`

- run `bash dialogre.sh`
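Before launching training, it can be useful to confirm that the files named in the steps above are where the scripts expect them. The sketch below checks only the paths mentioned in this README. The function name `check_layout` is hypothetical, and the model weight file is not checked because its name is not stated here.

```python
from pathlib import Path

# Tokenizer and config files named in the setup steps.
ROBERTA_FILES = ("config.json", "merges.txt", "vocab.json")


def check_layout(root, dataset):
    """Return a list of problems; an empty list means the layout looks complete."""
    root = Path(root)
    problems = []
    model_dir = root / "pre-trained_model" / "RoBERTa"
    for file_name in ROBERTA_FILES:
        if not (model_dir / file_name).is_file():
            problems.append(f"missing {model_dir / file_name}")
    data_dir = root / "datasets" / dataset
    if not data_dir.is_dir() or not any(data_dir.glob("*.json")):
        problems.append(f"no *.json files in {data_dir}")
    return problems
```

For example, `check_layout(".", "DialogRE")` run from the repository root lists anything still missing. Passing a different dataset directory name checks that dataset's folder in the same way.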

To reproduce the training process for our main experiments on MELD:

- download RoBERTa and unzip it to `pre-trained_model/RoBERTa/`

- download `config.json`, `merges.txt` and `vocab.json` from here, and put them in `pre-trained_model/RoBERTa/`

- download MELD

- copy the `*.json` files into `datasets/MELD`

- run `python MELD.py`

- run `bash meld.sh`

This project grew out of a course project at NUS [Course Page]. The code repository is based on the following projects:

- ACL-20, "Dialogue-Based Relation Extraction" [github]

- AAAI-21, "GDPNet: Refining Latent Multi-View Graph for Relation Extraction" [github]

- EMNLP-21, "Graph Based Network with Contextualized Representations of Turns in Dialogue" [github]

Thanks for their amazing work.

@Article{liu2023hierarchical,
  author  = {Xiao Liu and Jian Zhang and Heng Zhang and Fuzhao Xue and Yang You},
  title   = {Hierarchical Dialogue Understanding with Special Tokens and Turn-level Attention},
  journal = {arXiv preprint arXiv:2305.00262},
  year    = {2023},
}