This is a collection of research papers on Reinforcement Learning with Human Feedback (RLHF). The repository will be continuously updated to track the frontier of RLHF.

Welcome to follow and star!

The idea of RLHF is to use methods from reinforcement learning to directly optimize a language model with human feedback. RLHF has made it possible to begin aligning a model trained on a general corpus of text data with complex human values.

- RLHF for Large Language Models (LLMs)

- RLHF for Video Games (e.g. Atari)

(The following section was automatically generated by ChatGPT)

RLHF typically refers to "Reinforcement Learning with Human Feedback". Reinforcement Learning (RL) is a type of machine learning that involves training an agent to make decisions based on feedback from its environment. In RLHF, the agent also receives feedback from humans in the form of ratings or evaluations of its actions, which can help it learn more quickly and accurately.

RLHF is an active research area in artificial intelligence, with applications in fields such as robotics, gaming, and personalized recommendation systems. It seeks to address the challenges of RL in scenarios where the agent has limited access to feedback from the environment and requires human input to improve its performance.
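As an illustration of how human evaluations can become a learning signal, the sketch below fits a small reward model to pairwise preferences. It is a minimal example under stated assumptions, not a method prescribed by this list: it assumes feedback arrives as (preferred, rejected) pairs, a linear reward over hand-made feature vectors, and a Bradley-Terry preference likelihood. The names `reward`, `fit_reward_model`, and `toy_preferences` are hypothetical.

```python
import math
import random


def reward(weights, features):
    """Linear reward: dot product of weights and features (an assumption)."""
    return sum(w * f for w, f in zip(weights, features))


def fit_reward_model(preferences, dim, lr=0.1, epochs=200, seed=0):
    """Fit weights so that preferred items score higher than rejected ones.

    preferences: list of (preferred_features, rejected_features) pairs.
    """
    rng = random.Random(seed)
    weights = [0.0] * dim
    data = list(preferences)
    for _ in range(epochs):
        rng.shuffle(data)
        for preferred, rejected in data:
            margin = reward(weights, preferred) - reward(weights, rejected)
            # Probability that `preferred` wins under the Bradley-Terry model.
            p = 1.0 / (1.0 + math.exp(-margin))
            # Stochastic gradient ascent on the log-likelihood log(p).
            for i in range(dim):
                weights[i] += lr * (1.0 - p) * (preferred[i] - rejected[i])
    return weights


# Toy data: a hypothetical annotator who prefers a larger first feature.
toy_preferences = [
    ([1.0, 0.2], [0.0, 0.9]),
    ([0.8, 0.5], [0.1, 0.4]),
    ([0.9, 0.1], [0.3, 0.8]),
]
learned_weights = fit_reward_model(toy_preferences, dim=2)
```

In a full RLHF pipeline the learned reward would then be optimized by an RL algorithm; here it only ranks the toy items.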

Reinforcement Learning with Human Feedback (RLHF) is a rapidly developing area of research in artificial intelligence, and there are several advanced techniques that have been developed to improve the performance of RLHF systems. Here are some examples:

- Inverse Reinforcement Learning (IRL): IRL is a technique that allows the agent to learn a reward function from human feedback, rather than relying on pre-defined reward functions. This makes it possible for the agent to learn from more complex feedback signals, such as demonstrations of desired behavior.

- Apprenticeship Learning: Apprenticeship learning is a technique that combines IRL with supervised learning to enable the agent to learn from both human feedback and expert demonstrations. This can help the agent learn more quickly and effectively, as it is able to learn from both positive and negative feedback.

- Interactive Machine Learning (IML): IML is a technique that involves active interaction between the agent and the human expert, allowing the expert to provide feedback on the agent's actions in real-time. This can help the agent learn more quickly and efficiently, as it can receive feedback on its actions at each step of the learning process.

- Human-in-the-Loop Reinforcement Learning (HITLRL): HITLRL is a technique that involves integrating human feedback into the RL process at multiple levels, such as reward shaping, action selection, and policy optimization. This can help to improve the efficiency and effectiveness of the RLHF system by taking advantage of the strengths of both humans and machines.
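To make the reward-shaping idea from the list above concrete, here is a small sketch of tabular Q-learning in which a human signal is added to the environment reward. Everything in it is an assumption for illustration: the five-state chain environment, the `simulated_human_feedback` function standing in for a real trainer, and the `feedback_weight` parameter are all hypothetical, and the simple additive shaping shown is only one of several ways human feedback can enter the RL loop.

```python
import random

N_STATES = 5          # states 0..4; state 4 is the goal
ACTIONS = (-1, +1)    # move left or move right


def env_step(state, action):
    """Toy chain environment: reward 1.0 only on reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def simulated_human_feedback(state, action):
    """Stand-in for a human trainer who approves moves toward the goal."""
    return 1.0 if action == +1 else -1.0


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2,
          feedback_weight=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _ in range(50):  # cap the episode length
            # Epsilon-greedy action selection.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state, env_reward, done = env_step(state, action)
            # Reward shaping: add weighted human feedback to the env reward.
            feedback = simulated_human_feedback(state, action)
            shaped = env_reward + feedback_weight * feedback
            best_next = 0.0 if done else max(
                q[(next_state, a)] for a in ACTIONS)
            # Standard Q-learning update on the shaped reward.
            q[(state, action)] += alpha * (
                shaped + gamma * best_next - q[(state, action)])
            state = next_state
            if done:
                break
    return q


q_table = train()
greedy_policy = [max(ACTIONS, key=lambda a: q_table[(s, a)])
                 for s in range(N_STATES - 1)]
```

The greedy policy read from `q_table` lists the chosen action for each non-goal state; with this toy feedback it should favor moving right toward the goal.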

Here are some examples of Reinforcement Learning with Human Feedback (RLHF):

- Game Playing: In game playing, human feedback can help the agent learn strategies and tactics that are effective in different game scenarios. For example, in the popular game of Go, human experts can provide feedback to the agent on its moves, helping it improve its gameplay and decision-making.

- Personalized Recommendation Systems: In recommendation systems, human feedback can help the agent learn the preferences of individual users, making it possible to provide personalized recommendations. For example, the agent could use feedback from users on recommended products to learn which features are most important to them.

- Robotics: In robotics, human feedback can help the agent learn how to interact with the physical environment in a safe and efficient manner. For example, a robot could learn to navigate a new environment more quickly with feedback from a human operator on the best path to take or which objects to avoid.

- Education: In education, human feedback can help the agent learn how to teach students more effectively. For example, an AI-based tutor could use feedback from teachers on which teaching strategies work best with different students, helping to personalize the learning experience.

format:

- [title](paper link) [links]

- author1, author2, and author3...

- publisher

- keyword

- code

- experiment environments and datasets

-

- OpenAI

- Keyword: A large-scale, multimodal model, Transformer-based model, Fine-tuned using RLHF

- Code: official

- Dataset: DROP, WinoGrande, HellaSwag, ARC, HumanEval, GSM8K, MMLU, TruthfulQA

- Better Aligning Text-to-Image Models with Human Preference

- Xiaoshi Wu, Keqiang Sun, Feng Zhu, Rui Zhao, Hongsheng Li

- Keyword: Diffusion Model, Text-to-Image, Aesthetic

- Code: official

- Few-shot Preference Learning for Human-in-the-Loop RL

- Joey Hejna, Dorsa Sadigh

- Keyword: Preference Learning, Interactive Learning, Multi-task Learning, Expanding the pool of available data by viewing human-in-the-loop RL

- Code: official

- Visual ChatGPT: Talking, Drawing and Editing with Visual Foundation Models

- Chenfei Wu, Shengming Yin, Weizhen Qi, Xiaodong Wang, Zecheng Tang, Nan Duan

- Keyword: Visual Foundation Models, Visual ChatGPT

- Code: official

- Pretraining Language Models with Human Preferences (PHF)

- Tomasz Korbak, Kejian Shi, Angelica Chen, Rasika Bhalerao, Christopher L. Buckley, Jason Phang, Samuel R. Bowman, Ethan Perez

- Keyword: Pretraining, offline RL, Decision transformer

- Code: official

- Aligning Language Models with Preferences through f-divergence Minimization (f-DPG)

- Dongyoung Go, Tomasz Korbak, Germán Kruszewski, Jos Rozen, Nahyeon Ryu, Marc Dymetman

- Keyword: f-divergence, RL with KL penalties

- Is Reinforcement Learning (Not) for Natural Language Processing?: Benchmarks, Baselines, and Building Blocks for Natural Language Policy Optimization (NLPO)

- Rajkumar Ramamurthy, Prithviraj Ammanabrolu, Kianté Brantley, Jack Hessel, Rafet Sifa, Christian Bauckhage, Hannaneh Hajishirzi, Yejin Choi

- Keyword: Optimizing language generators with RL, Benchmark, Performant RL algorithm

- Code: official

- Dataset: IMDB, CommonGen, CNN Daily Mail, ToTTo, WMT-16 (en-de), NarrativeQA, DailyDialog

- Scaling Laws for Reward Model Overoptimization

- Leo Gao, John Schulman, Jacob Hilton

- Keyword: Gold reward model used to train proxy reward model, Dataset size, Policy parameter size, BoN, PPO

- Improving alignment of dialogue agents via targeted human judgements (Sparrow)

- Amelia Glaese, Nat McAleese, Maja Trębacz, et al.

- Keyword: Information-seeking dialogue agent, Break down the good dialogue into natural language rules, DPC, Interact with the model to elicit violation of a specific rule (Adversarial Probing)

- Dataset: Natural Questions, ELI5, QuALITY, TriviaQA, WinoBias, BBQ

- Red Teaming Language Models to Reduce Harms: Methods, Scaling Behaviors, and Lessons Learned

- Deep Ganguli, Liane Lovitt, Jackson Kernion, et al.

- Keyword: Red team language model, Investigate scaling behaviors, Red teaming dataset

- Code: official

- Dynamic Planning in Open-Ended Dialogue using Reinforcement Learning

- Deborah Cohen, Moonkyung Ryu, Yinlam Chow, Orgad Keller, Ido Greenberg, Avinatan Hassidim, Michael Fink, Yossi Matias, Idan Szpektor, Craig Boutilier, Gal Elidan

- Keyword: Real-time, Open-ended dialogue system, Pairs the succinct embedding of the conversation state by language models, CAQL, CQL, BERT

- Quark: Controllable Text Generation with Reinforced Unlearning

- Ximing Lu, Sean Welleck, Jack Hessel, Liwei Jiang, Lianhui Qin, Peter West, Prithviraj Ammanabrolu, Yejin Choi

- Keyword: Fine-tuning the language model on signals of what not to do, Decision Transformer, LLM tuning with PPO

- Code: official

- Dataset: WRITINGPROMPTS, SST-2, WIKITEXT-103

- Training a Helpful and Harmless Assistant with Reinforcement Learning from Human Feedback

- Yuntao Bai, Andy Jones, Kamal Ndousse, et al.

- Keyword: Harmless assistants, Online mode, Robustness of RLHF training, OOD detection.

- Code: official

- Dataset: TriviaQA, HellaSwag, ARC, OpenBookQA, LAMBADA, HumanEval, MMLU, TruthfulQA

- Teaching language models to support answers with verified quotes (GopherCite)

- Jacob Menick, Maja Trebacz, Vladimir Mikulik, John Aslanides, Francis Song, Martin Chadwick, Mia Glaese, Susannah Young, Lucy Campbell-Gillingham, Geoffrey Irving, Nat McAleese

- Keyword: Generate answers that cite specific evidence, Abstain from answering when unsure

- Dataset: Natural Questions, ELI5, QuALITY, TruthfulQA

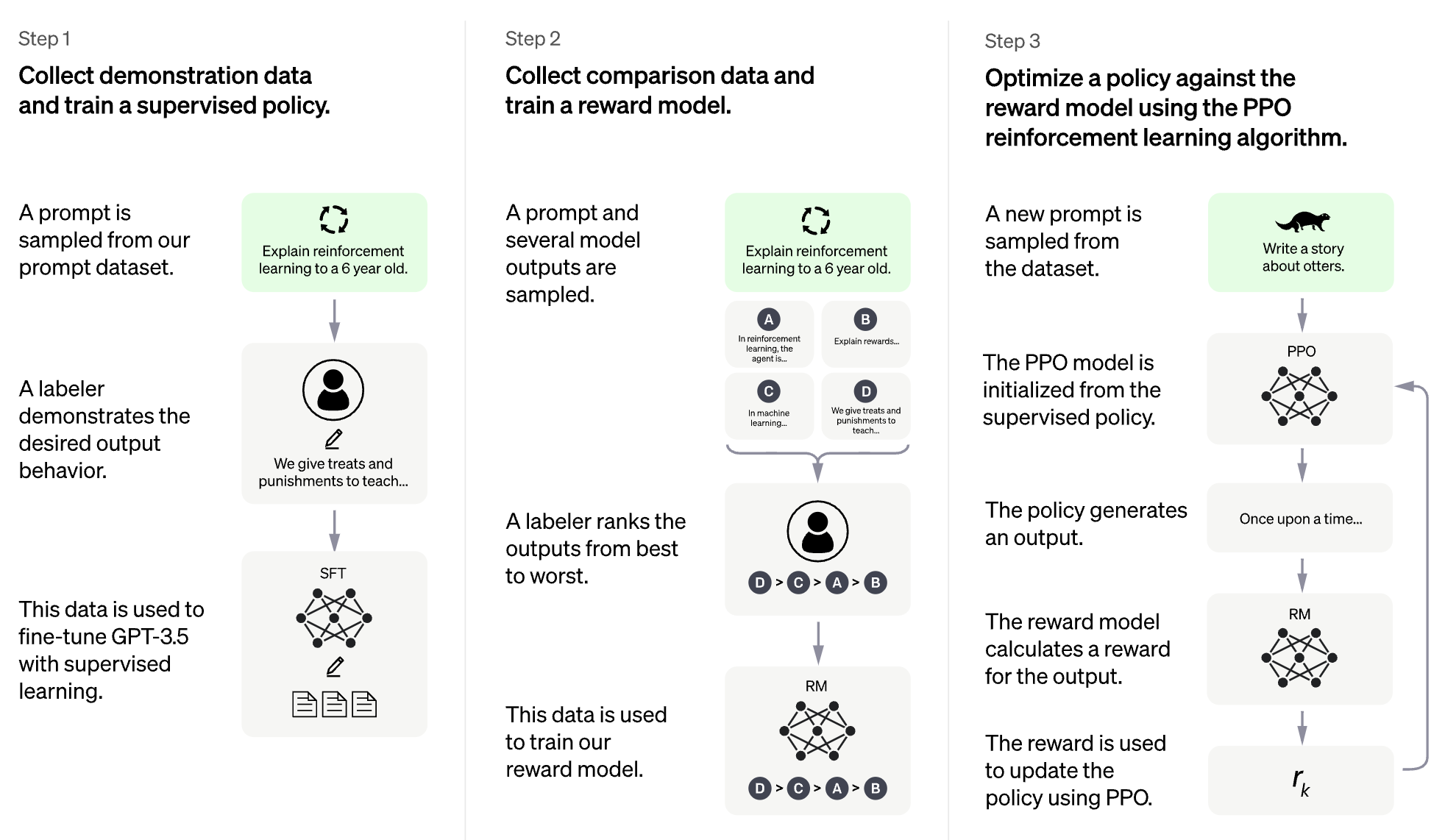

- Training language models to follow instructions with human feedback (InstructGPT)

- Long Ouyang, Jeff Wu, Xu Jiang, et al.

- Keyword: Large Language Model, Align Language Model with Human Intent

- Code: official

- Dataset: TruthfulQA, RealToxicityPrompts

- Constitutional AI: Harmlessness from AI Feedback

- Yuntao Bai, Saurav Kadavath, Sandipan Kundu, Amanda Askell, Jackson Kernion, et al.

- Keyword: RL from AI Feedback (RLAIF), Training a harmless AI assistant through self-improvement, Chain-of-thought style, Control AI behavior more precisely

- Code: official

- Discovering Language Model Behaviors with Model-Written Evaluations

- Ethan Perez, Sam Ringer, Kamilė Lukošiūtė, Karina Nguyen, Edwin Chen, et al.

- Keyword: Automatically generate evaluations with LMs, More RLHF makes LMs worse, LM-written evaluations are high-quality

- Code: official

- Dataset: BBQ, Winogender Schemas

- Non-Markovian Reward Modelling from Trajectory Labels via Interpretable Multiple Instance Learning

- Joseph Early, Tom Bewley, Christine Evers, Sarvapali Ramchurn

- Keyword: Reward Modelling (RLHF), Non-Markovian, Multiple Instance Learning, Interpretability

- Code: official

- WebGPT: Browser-assisted question-answering with human feedback (WebGPT)

- Reiichiro Nakano, Jacob Hilton, Suchir Balaji, et al.

- Keyword: Model searches the web and provides references, Imitation learning, BC, Long-form questions

- Dataset: ELI5, TriviaQA, TruthfulQA

- Recursively Summarizing Books with Human Feedback

- Jeff Wu, Long Ouyang, Daniel M. Ziegler, Nisan Stiennon, Ryan Lowe, Jan Leike, Paul Christiano

- Keyword: Model trained on smaller tasks to assist humans in evaluating broader tasks, BC

- Dataset: Booksum, NarrativeQA

- Revisiting the Weaknesses of Reinforcement Learning for Neural Machine Translation

- Learning to summarize from human feedback

- Fine-Tuning Language Models from Human Preferences

- Scalable agent alignment via reward modeling: a research direction

- Jan Leike, David Krueger, Tom Everitt, Miljan Martic, Vishal Maini, Shane Legg

- Keyword: Agent alignment problem, Learn reward from interaction, Optimize reward with RL, Recursive reward modeling

- Code: official

- Env: Atari

- Reward learning from human preferences and demonstrations in Atari

- Borja Ibarz, Jan Leike, Tobias Pohlen, Geoffrey Irving, Shane Legg, Dario Amodei

- Keyword: Expert demonstrations, Trajectory preferences, Reward hacking problem, Noise in human labels

- Code: official

- Env: Atari

- Deep TAMER: Interactive Agent Shaping in High-Dimensional State Spaces

- Garrett Warnell, Nicholas Waytowich, Vernon Lawhern, Peter Stone

- Keyword: High-dimensional state, Leverage the input of a human trainer

- Code: third party

- Env: Atari

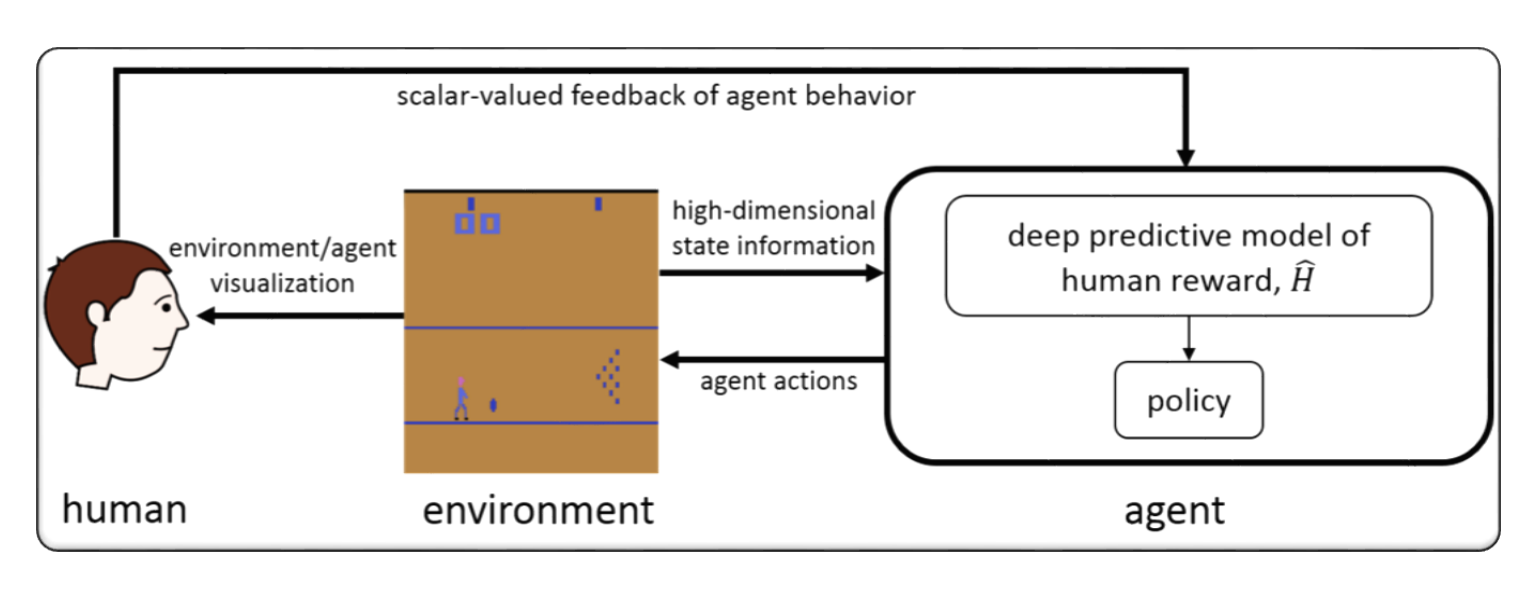

- Deep reinforcement learning from human preferences

- Paul Christiano, Jan Leike, Tom B. Brown, Miljan Martic, Shane Legg, Dario Amodei

- Keyword: Explore goals defined by human preferences between pairs of trajectory segments, Learn behaviors more complex than the human feedback itself

- Code: official

- Env: Atari, MuJoCo

- Interactive Learning from Policy-Dependent Human Feedback

- James MacGlashan, Mark K Ho, Robert Loftin, Bei Peng, Guan Wang, David Roberts, Matthew E. Taylor, Michael L. Littman

- Keyword: Decision is influenced by current policy rather than human feedback, Learn from policy-dependent feedback that converges to a local optimum

format:

- [title](codebase link) [links]

- author1, author2, and author3...

- keyword

- experiment environments, datasets or tasks

- PaLM + RLHF - Pytorch

- Phil Wang, Yachine Zahidi, Ikko Eltociear Ashimine, Eric Alcaide

- Keyword: Transformers, PaLM architecture

- Dataset: enwik8

- lm-human-preferences

- following-instructions-human-feedback

- Long Ouyang, Jeff Wu, Xu Jiang, et al.

- Keyword: Large Language Model, Align Language Model with Human Intent

- Dataset: TruthfulQA, RealToxicityPrompts

- Transformer Reinforcement Learning (TRL)

- Leandro von Werra, Younes Belkada, Lewis Tunstall, et al.

- Keyword: Train LLM with RL, PPO, Transformer

- Task: IMDB sentiment

- Transformer Reinforcement Learning X (TRLX)

- Jonathan Tow, Leandro von Werra, et al.

- Keyword: Distributed training framework, T5-based language models, Train LLM with RL, PPO, ILQL

- Task: Fine tuning LLM with RL using provided reward function or reward-labeled dataset

- RL4LMs (A modular RL library to fine-tune language models to human preferences)

- Rajkumar Ramamurthy, Prithviraj Ammanabrolu, Kianté Brantley, Jack Hessel, Rafet Sifa, Christian Bauckhage, Hannaneh Hajishirzi, Yejin Choi

- Keyword: Optimizing language generators with RL, Benchmark, Performant RL algorithm

- Dataset: IMDB, CommonGen, CNN Daily Mail, ToTTo, WMT-16 (en-de), NarrativeQA, DailyDialog

- HH-RLHF

- Ben Mann, Deep Ganguli

- Keyword: Human preference dataset, Red teaming data, machine-written

- Task: Open-source dataset for human preference data about helpfulness and harmlessness

- LaMDA-rlhf-pytorch

- Phil Wang

- Keyword: LaMDA, Attention-mechanism

- Task: Open-source pre-training implementation of Google's LaMDA research paper in PyTorch

- TextRL

- Eric Lam

- Keyword: huggingface's transformer

- Task: Text generation

- Env: PFRL, gym

- minRLHF

- Thomfoster

- Keyword: PPO, Minimal library

- Task: educational purposes

- Stanford Human Preferences Dataset (SHP)

- Kawin Ethayarajh, Heidi Zhang, Yizhong Wang, Dan Jurafsky

- Keyword: Naturally occurring and human-written dataset, 18 different subject areas

- Task: Intended to be used for training RLHF reward models

- PromptSource

- Stephen H. Bach, Victor Sanh, Zheng-Xin Yong et al.

- Keyword: Prompted English datasets, Mapping a data example into natural language

- Task: Toolkit for creating, sharing and using natural language prompts

- Structured Knowledge Grounding (SKG) Resources Collections

- Tianbao Xie, Chen Henry Wu, Peng Shi et al.

- Keyword: Structured Knowledge Grounding

- Task: Collection of datasets related to structured knowledge grounding

- The Flan Collection

- Shayne Longpre, Le Hou, Tu Vu, et al.

- Task: Collection compiles datasets from Flan 2021, P3, Super-Natural Instructions

- [OpenAI] ChatGPT: Optimizing Language Models for Dialogue

- [Hugging Face] Illustrating Reinforcement Learning from Human Feedback (RLHF)

- [ZhiHu] The Road to AGI: Technical Essentials of Large Language Models (LLM) (original title: 通向AGI之路:大型语言模型 (LLM) 技术精要)

- [W&B Fully Connected] Understanding Reinforcement Learning from Human Feedback (RLHF)

- [Deepmind] Learning through human feedback

Our purpose is to make this repo even better. If you are interested in contributing, please refer to HERE for instructions on how to contribute.

Awesome RLHF is released under the Apache 2.0 license.