The official repository of MedPLIB: Towards a Multimodal Large Language Model with Pixel-Level Insight for Biomedicine.

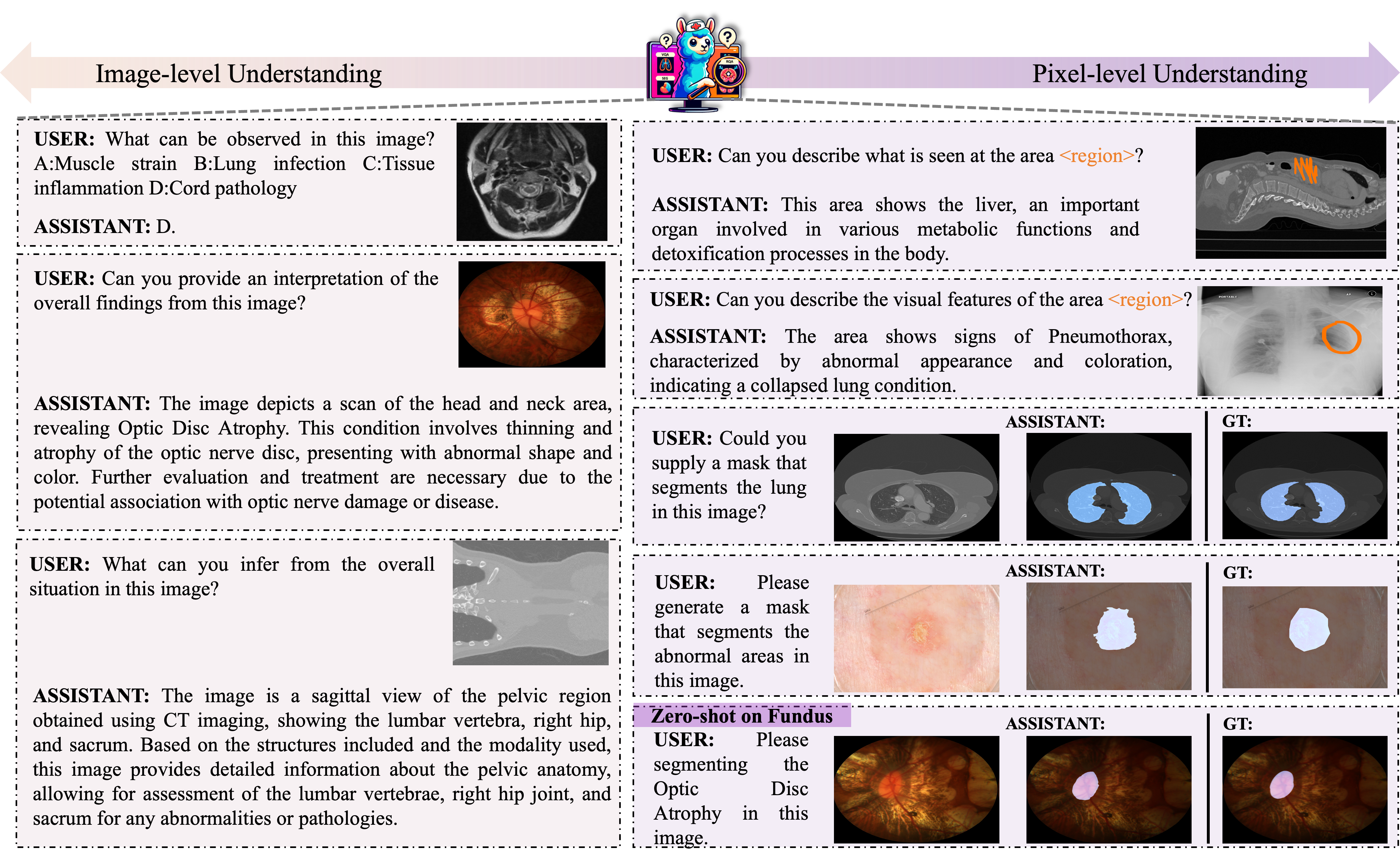

MedPLIB shows excellent performance in pixel-level understanding in the biomedical field.

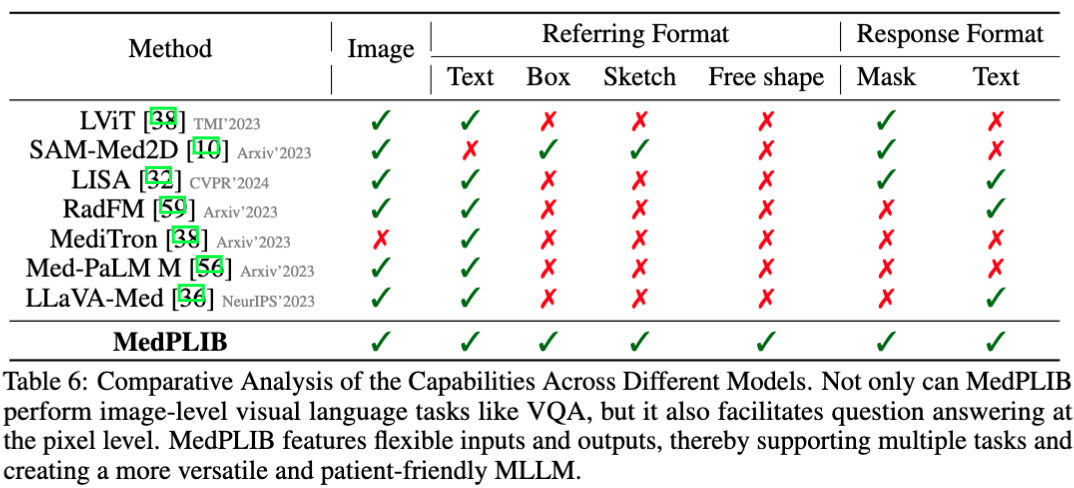

- ✨ MedPLIB is a biomedical MLLM with a broad range of abilities that supports multiple imaging modalities. It handles image-level vision-language tasks such as VQA and also answers questions at the pixel level.

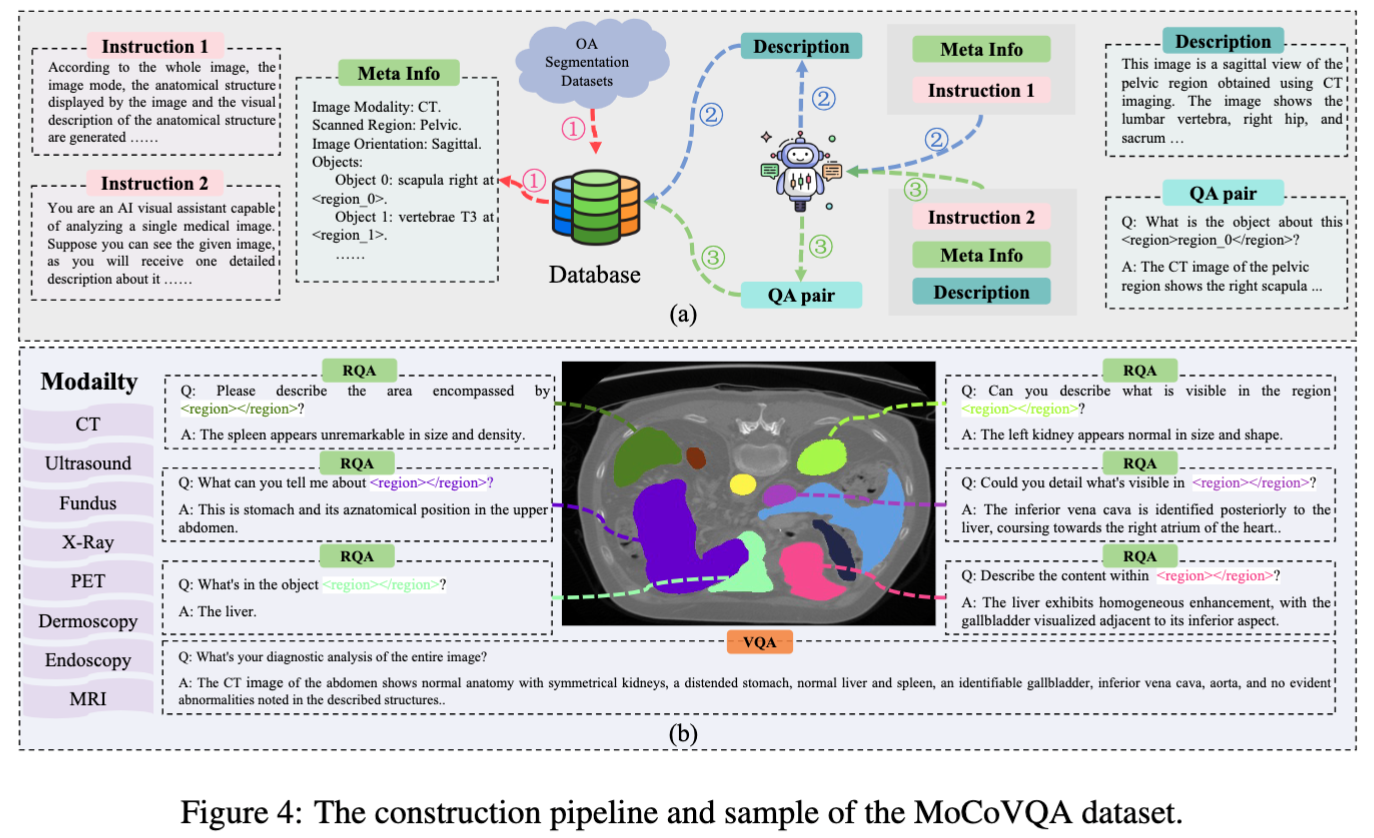

- ✨ We construct the MeCoVQA dataset. It spans 8 modalities and contains 310k pairs in total for complex medical imaging question answering and image region understanding.

- 2024-07-30: We release the readme.md

We recommend trying our web demo, which includes all the features currently supported by MedPLIB. To run the demo, you first need to download or train MedPLIB so that the checkpoints are available locally. Then run the following commands one by one.

```bash
# launch the server controller
python -m model.serve.controller --host 0.0.0.0 --port 64000

# launch the web server
python -m model.serve.gradio_web_server --controller http://localhost:64000 --model-list-mode reload --add_region_feature --port 64001

# launch the model worker
CUDA_VISIBLE_DEVICES=0 python -m model.serve.model_worker --host localhost --controller http://localhost:64000 --port 64002 --worker http://localhost:64002 --model-path /path/to/the/medplib_checkpoints --add_region_feature --device_map cuda
```

- Region VQA:

- Pixel grounding:

- VQA:
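The three demo commands above have to be started in order (controller, web server, model worker). As a convenience, they could be wrapped in a small Python launcher. The following is only a sketch, not part of the repository: the function names `demo_commands` and `launch_demo` and the pause between launches (`delay_s`) are assumptions made for illustration, while the module names, ports, and flags are copied from the commands above.

```python
import os
import shlex
import subprocess
import time

CONTROLLER_URL = "http://localhost:64000"         # port taken from the demo commands
CHECKPOINTS = "/path/to/the/medplib_checkpoints"  # placeholder, replace with a local path


def demo_commands(checkpoints: str = CHECKPOINTS) -> list[list[str]]:
    """Return the three demo commands as argument lists, in launch order."""
    controller = "python -m model.serve.controller --host 0.0.0.0 --port 64000"
    web_server = (
        "python -m model.serve.gradio_web_server "
        f"--controller {CONTROLLER_URL} --model-list-mode reload "
        "--add_region_feature --port 64001"
    )
    worker = (
        "python -m model.serve.model_worker --host localhost "
        f"--controller {CONTROLLER_URL} --port 64002 "
        "--worker http://localhost:64002 "
        f"--model-path {shlex.quote(checkpoints)} "
        "--add_region_feature --device_map cuda"
    )
    return [shlex.split(cmd) for cmd in (controller, web_server, worker)]


def launch_demo(checkpoints: str = CHECKPOINTS, gpu: str = "0", delay_s: float = 5.0):
    """Start controller, web server, and worker in order; return the Popen handles."""
    controller, web_server, worker = demo_commands(checkpoints)
    # Only the worker gets CUDA_VISIBLE_DEVICES, mirroring the shell command.
    worker_env = {**os.environ, "CUDA_VISIBLE_DEVICES": gpu}
    procs = []
    for argv, env in ((controller, None), (web_server, None), (worker, worker_env)):
        procs.append(subprocess.Popen(argv, env=env))
        time.sleep(delay_s)  # assumed pause so each service can start; not a documented requirement
    return procs
```

Calling `launch_demo("/path/to/the/medplib_checkpoints")` from the repository root would start all three processes; calling `terminate()` on each returned handle stops them again.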

- Clone this repository and navigate to MedPLIB folder

```bash
git clone https://github.com/ShawnHuang497/MedPLIB
cd MedPLIB
```

- Install Package

```bash
conda create -n medplib python=3.10 -y
conda activate medplib
pip install --upgrade pip
pip install -r requirements.txt
```

- Install additional packages for training cases

```bash
pip install ninja==1.11.1.1
pip install flash-attn==2.5.2 --no-build-isolation
```

On the way...
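To confirm that the environment matches the installation steps, a quick check of the Python version and the two pinned training packages can help before starting a long run. The snippet below is a minimal sketch using only the standard library. The helper names `installed_version` and `check_environment` are hypothetical, and the pins are copied from the `pip install` commands above.

```python
import sys
from importlib import metadata

# Pins copied from the installation commands; the helpers themselves are illustrative.
PINNED = {"ninja": "1.11.1.1", "flash-attn": "2.5.2"}


def installed_version(package: str):
    """Return the installed version string, or None when the package is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def check_environment(pins=PINNED, python=(3, 10)):
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    if tuple(sys.version_info[:2]) != tuple(python):
        problems.append(
            f"Python {python[0]}.{python[1]} expected, "
            f"found {sys.version_info[0]}.{sys.version_info[1]}"
        )
    for name, wanted in pins.items():
        found = installed_version(name)
        if found != wanted:
            problems.append(f"{name}: expected {wanted}, found {found or 'not installed'}")
    return problems
```

Running `print(check_environment())` inside the `medplib` conda environment lists any mismatches. A different version does not necessarily break training, so treat the output as a hint rather than a hard requirement.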

We perform pre-training stage I to obtain the projector checkpoints. Please obtain the llava_med_alignment_500k dataset by following LLaVA-Med, and then follow the LLaVA-v1.5 usage tutorial to pretrain.

```bash
sh scripts/train_stage2.sh
sh scripts/train_stage3.sh
sh scripts/train_stage4.sh
```

```bash
TRANSFORMERS_OFFLINE=1 deepspeed --include=localhost:1 --master_port=64995 model/eval/vqa_infer.py \
    --version="/path/to/the/medplib_checkpoints" \
    --vision_tower='/path/to/the/clip-vit-large-patch14-336' \
    --answer_type='open' \
    --val_data_path='/path/to/the/pixel_grounding_json_file' \
    --image_folder='/path/to/the/SAMed2D_v1' \
    --vision_pretrained="/path/to/the/sam-med2d_b.pth" \
    --eval_seg \
    --moe_enable \
    --region_fea_adapter \
    # --vis_mask \
```

Run inference to generate the prediction jsonl file.

```bash
sh model/eval/infer_parallel_bird.sh
```

Calculate the metrics.

```bash
python model/eval/cal_metric.py \
    --pred="/path/to/the/jsonl_file"
```

We thank the following works for providing inspiration and part of the code: LISA, MoE-LLaVA, LLaVA, SAM-Med2D, SAM and SEEM.

The data, code, and model checkpoints are intended to be used solely for (I) future research on visual-language processing and (II) reproducibility of the experimental results reported in the reference paper. The data, code, and model checkpoints are not intended to be used in clinical care or for any clinical decision-making purposes.

The primary intended use is to support AI researchers reproducing and building on top of this work. MedPLIB and its associated models should be helpful for exploring various biomedical pixel grounding and visual question answering (VQA) research questions.

Any deployed use case of the model --- commercial or otherwise --- is out of scope. Although we evaluated the models using a broad set of publicly-available research benchmarks, the models and evaluations are intended for research use only and not intended for deployed use cases.

- The majority of this project is released under the Apache 2.0 license as found in the LICENSE file.

- The service is a research preview intended for non-commercial use only, subject to the model License of LLaMA, Terms of Use of the data generated by OpenAI, and Terms of Use of SAM-Med2D-20M. Please contact us if you find any potential violation.

If you find our paper and code useful in your research, please consider giving a star and citation.