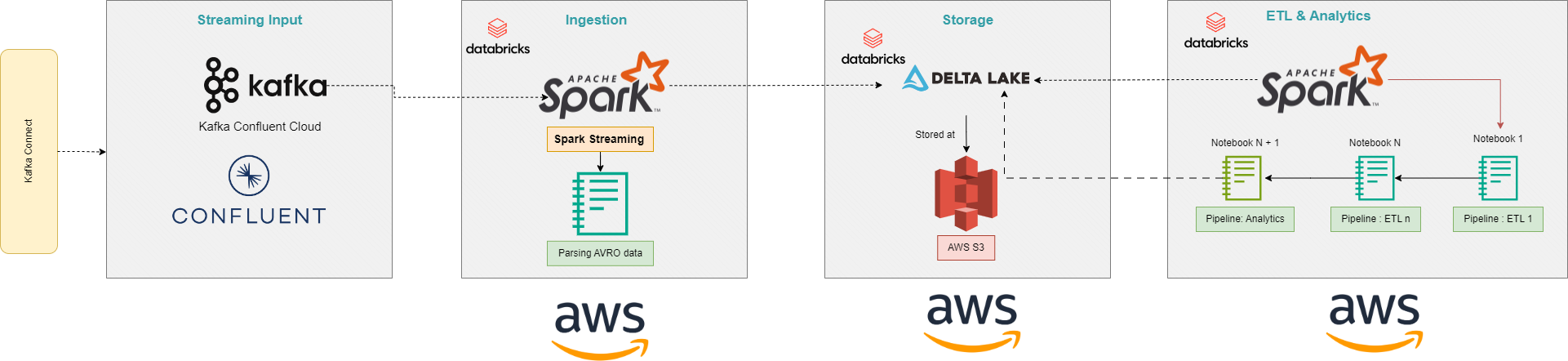

A project that streams real-time orders and applies ETL pipelines and analytics using Databricks and AWS.

More details to be included later...


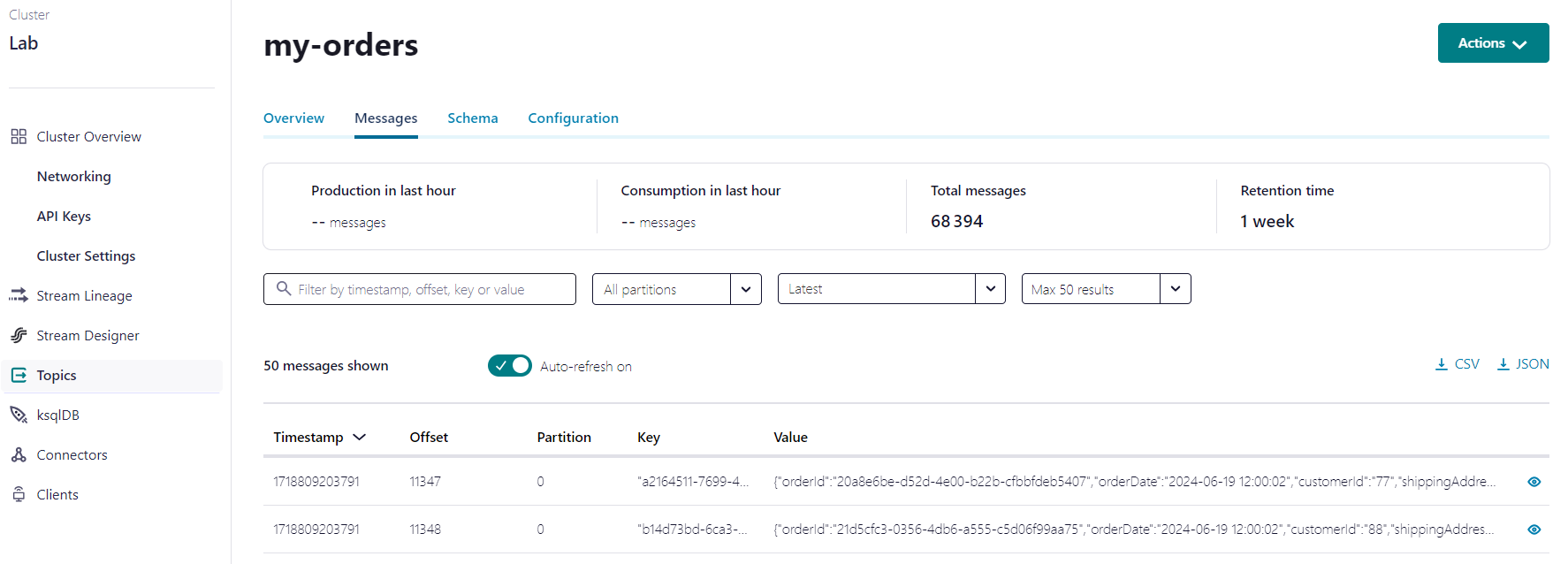

Stream orders from Kafka: Simulate a real-time stream of orders from an e-commerce platform (Confluent Kafka). ✅


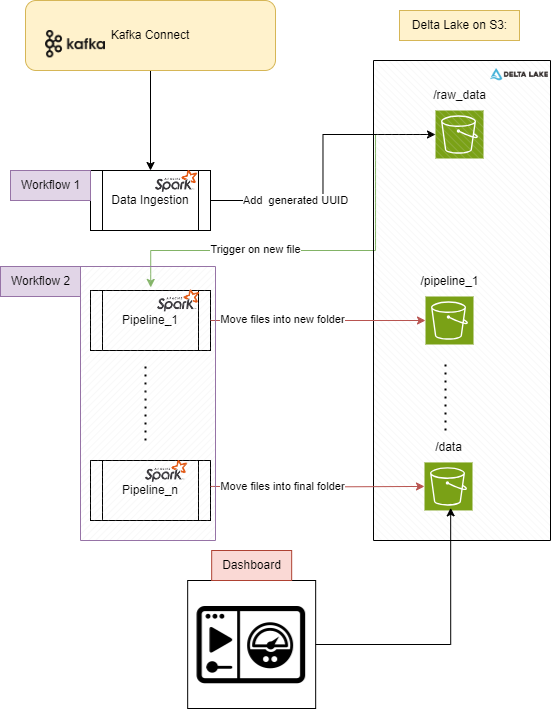

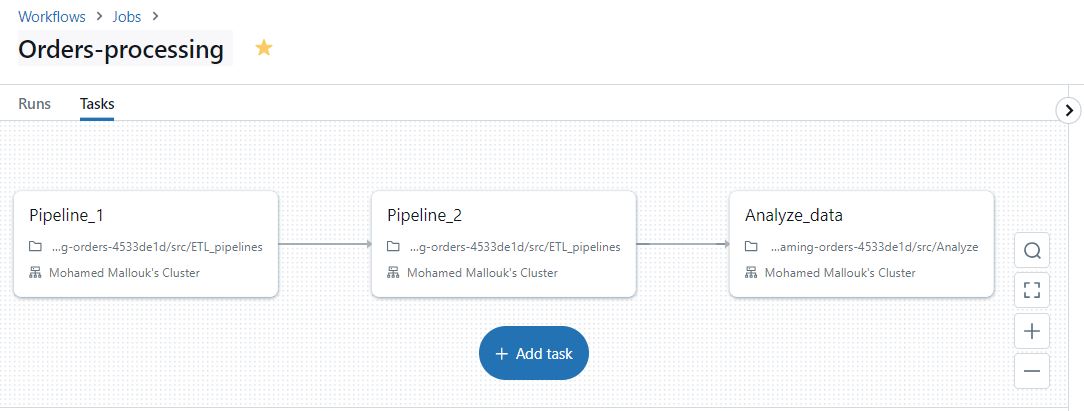

Streaming ingestion & processing: Create Spark jobs that read from the Kafka stream, apply ETL pipelines, and write the data into a Delta Lake (Databricks Workflows + AWS S3). ✅

Analytics pipelines: The final step of any workflow triggered by newly received orders applies the analysis tasks listed below: ✅

- Develop fraud detection rules that flag suspicious orders based on patterns such as high-value orders, frequent orders from the same customer, etc. 💡

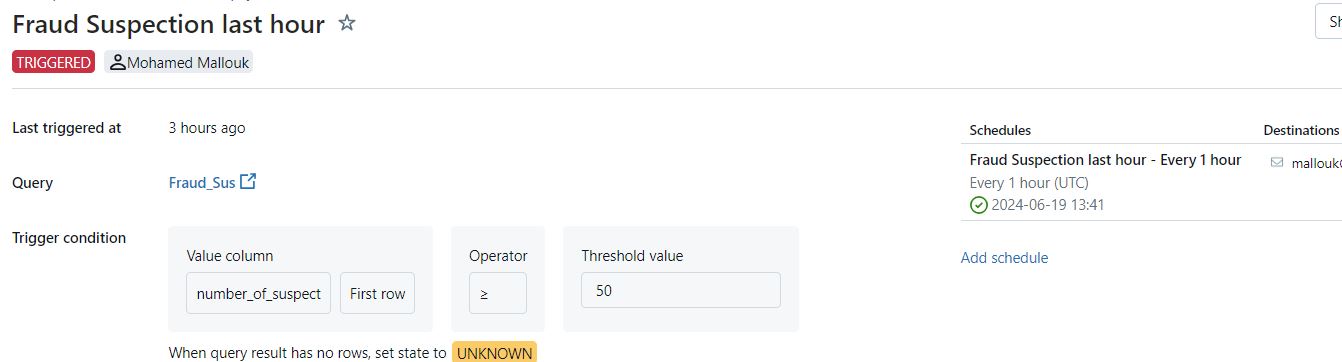

- Alerting: Set up alerts for clients flagged as suspicious using Databricks alerts (email only). 🚨
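The two fraud patterns named above (high-value orders and frequent orders from the same customer) can be sketched in plain Python. This is only an illustration of the rule logic, not the Spark job used in the project: the thresholds, the window length, and the order keys (`order_id`, `customer_id`, `amount`, `timestamp`) are all assumptions.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical thresholds, chosen only for illustration.
HIGH_VALUE_THRESHOLD = 1000.0
MAX_ORDERS_PER_WINDOW = 3
WINDOW = timedelta(minutes=10)


def flag_suspicious(orders):
    """Return (order_id, reason) pairs for orders that match a rule.

    Each order is a dict with hypothetical keys:
    order_id, customer_id, amount, timestamp (datetime).
    """
    flagged = []
    recent = defaultdict(list)  # customer_id -> timestamps inside the window

    for order in sorted(orders, key=lambda o: o["timestamp"]):
        # Rule 1: high-value order.
        if order["amount"] > HIGH_VALUE_THRESHOLD:
            flagged.append((order["order_id"], "high_value"))

        # Rule 2: too many orders from the same customer in a short window.
        history = recent[order["customer_id"]]
        history.append(order["timestamp"])
        cutoff = order["timestamp"] - WINDOW
        history[:] = [t for t in history if t >= cutoff]
        if len(history) > MAX_ORDERS_PER_WINDOW:
            flagged.append((order["order_id"], "frequent_orders"))

    return flagged


start = datetime(2024, 1, 1, 12, 0)
sample_orders = [
    {"order_id": "o1", "customer_id": "c1", "amount": 50.0, "timestamp": start},
    {"order_id": "o2", "customer_id": "c1", "amount": 20.0, "timestamp": start + timedelta(minutes=1)},
    {"order_id": "o3", "customer_id": "c1", "amount": 30.0, "timestamp": start + timedelta(minutes=2)},
    {"order_id": "o4", "customer_id": "c1", "amount": 10.0, "timestamp": start + timedelta(minutes=3)},
    {"order_id": "o5", "customer_id": "c2", "amount": 2500.0, "timestamp": start + timedelta(minutes=4)},
]
flags = flag_suspicious(sample_orders)
print(flags)
```

Here `o4` is flagged because it is the fourth order from `c1` within ten minutes, and `o5` is flagged for its amount. The flagged list is the kind of result an email alert could be built on.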

Visualization: This is not the main objective of the project, but we'll create a quick dashboard with some analytical statistics using Databricks dashboards. 👀

Additional challenges: As this is a learning project, I decided to add some challenging use cases that simulate real-life problems, as listed below: 🤓

Data Schema Evolution: Handle changes in the data schema over time, such as new fields being added to the order data or field types changing, and ensure backward and forward compatibility in the streaming pipeline. 🔄

- I added a new field called "message" to the topic and updated the producer code to include it. On the consumer side, I enabled the `mergeSchema` option so that the Delta table accepts the evolved schema. To keep backward compatibility, I gave the new field a default value.
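The default-value idea can be shown with a minimal plain-Python sketch. It is not the project's producer or Spark code: the field name "message" comes from the note above, while the empty-string default and the other order keys are assumptions.

```python
import json

# Default applied when an older producer omits the field.
# The field name "message" comes from the text; the default value is an assumption.
FIELD_DEFAULTS = {"message": ""}


def parse_order(raw):
    """Parse a JSON order and fill in fields that older messages do not carry."""
    record = json.loads(raw)
    for field, default in FIELD_DEFAULTS.items():
        record.setdefault(field, default)
    return record


# An order written before the schema change, and one written after it.
old_message = '{"order_id": "o1", "amount": 42.5}'
new_message = '{"order_id": "o2", "amount": 10.0, "message": "gift wrap"}'

old_record = parse_order(old_message)
new_record = parse_order(new_message)
print(old_record)
print(new_record)
```

Both records end up with the same set of keys, so downstream code does not need to know which producer version wrote them.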

Exactly-Once Processing: Implement exactly-once processing semantics to ensure that each order is processed exactly once, even in the case of failures (avoids duplication).
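One common way to get this effect is to make the write idempotent, so that replaying a batch after a failure changes nothing. The sketch below illustrates that idea in plain Python under simplifying assumptions (a single partition with increasing offsets, and a hypothetical `IdempotentSink` class). It is not a description of how this project or Spark implements it.

```python
class IdempotentSink:
    """Stores each order at most once, keyed by its order id."""

    def __init__(self):
        self.rows = {}         # order_id -> order
        self.last_offset = -1  # highest offset already applied

    def write_batch(self, batch):
        """Apply a batch of (offset, order) pairs, skipping anything already seen."""
        for offset, order in batch:
            if offset <= self.last_offset:
                continue  # replayed after a failure: already applied
            # setdefault keeps the first copy if the same order id arrives twice.
            self.rows.setdefault(order["order_id"], order)
            self.last_offset = offset


sink = IdempotentSink()
batch = [
    (0, {"order_id": "o1", "amount": 10.0}),
    (1, {"order_id": "o2", "amount": 5.0}),
]
sink.write_batch(batch)
sink.write_batch(batch)  # simulated retry of the same batch after a crash
sink.write_batch([(2, {"order_id": "o2", "amount": 5.0})])  # duplicate sent by the producer
print(len(sink.rows), sink.last_offset)
```

The offset check covers replays of the same data, and the key check covers a producer that sends the same order twice under a new offset.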


Stateful Stream Processing: Use stateful transformations to keep track of state across events. For example, maintain a running total of orders per customer or product category.

- Implemented two live counters in statefull_streaming.ipynb.
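To show what "keeping state across events" means, here is a minimal plain-Python sketch of two running counters. The counters chosen (orders per customer, amount per category) follow the examples in the description, but the class name and order keys are assumptions, and this is not the code from the notebook.

```python
from collections import defaultdict


class RunningTotals:
    """Keeps counters that survive from one event to the next."""

    def __init__(self):
        self.orders_per_customer = defaultdict(int)
        self.amount_per_category = defaultdict(float)

    def update(self, order):
        """Fold one order into the running state."""
        self.orders_per_customer[order["customer_id"]] += 1
        self.amount_per_category[order["category"]] += order["amount"]


totals = RunningTotals()
events = [
    {"customer_id": "c1", "category": "books", "amount": 10.0},
    {"customer_id": "c2", "category": "toys", "amount": 5.5},
    {"customer_id": "c1", "category": "books", "amount": 4.5},
]
for event in events:
    totals.update(event)

print(dict(totals.orders_per_customer))
print(dict(totals.amount_per_category))
```

In a streaming job the same state would have to be stored and recovered by the engine rather than held in a local object.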


Scalability and Performance Optimization: Optimize your Spark streaming jobs for performance and scalability. This includes tuning Spark configurations, partitioning data effectively, and minimizing data shuffles.

- Optimizations were applied throughout the code, for example using built-in functions, broadcast functions...

- Scalability: Added a dateOrder field and changed the previous one to Datetime.

- Used the bronze, silver, and gold table concept instead of saving the data directly to an S3 path.
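A date-only field is useful as a partition key because many orders share the same value. The plain-Python sketch below derives such a field from a timestamp and groups orders by it. The `orderDatetime` name for the original timestamp field is an assumption, and the grouping only imitates what partitioned storage would do.

```python
from collections import defaultdict
from datetime import datetime


def add_date_order(order):
    """Parse the timestamp and derive a date-only partition key."""
    ts = datetime.fromisoformat(order["orderDatetime"])  # hypothetical field name
    return {**order, "orderDatetime": ts, "dateOrder": ts.date().isoformat()}


def partition_by_date(orders):
    """Group order ids by their dateOrder value."""
    partitions = defaultdict(list)
    for order in map(add_date_order, orders):
        partitions[order["dateOrder"]].append(order["order_id"])
    return dict(partitions)


partitions = partition_by_date([
    {"order_id": "o1", "orderDatetime": "2024-01-01T09:30:00"},
    {"order_id": "o2", "orderDatetime": "2024-01-01T18:05:00"},
    {"order_id": "o3", "orderDatetime": "2024-01-02T00:10:00"},
])
print(partitions)
```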


Data Quality Monitoring: Implement real-time data quality checks and monitoring. Alert if data quality issues such as missing values, duplicates, or outliers are detected.

- Implemented within the statefull_streaming notebook.
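The three kinds of issues mentioned (missing values, duplicates, outliers) can be illustrated with a small plain-Python check. This is a sketch under assumptions, not the notebook's implementation: the required fields, the median-based outlier rule, and the factor of 10 are all chosen for the example.

```python
from statistics import median

# Hypothetical list of fields every order is expected to carry.
REQUIRED_FIELDS = ("order_id", "customer_id", "amount")


def check_quality(orders, outlier_factor=10.0):
    """Return (index, issue) pairs for missing values, duplicates, and outliers."""
    issues = []
    seen_ids = set()
    amounts = [o["amount"] for o in orders if o.get("amount") is not None]
    typical = median(amounts) if amounts else None

    for index, order in enumerate(orders):
        # Missing values: any required field absent or None.
        missing = [f for f in REQUIRED_FIELDS if order.get(f) is None]
        if missing:
            issues.append((index, "missing:" + ",".join(missing)))

        # Duplicates: an order id that was already seen.
        order_id = order.get("order_id")
        if order_id is not None:
            if order_id in seen_ids:
                issues.append((index, "duplicate"))
            seen_ids.add(order_id)

        # Outliers: amount far above the median of the batch.
        amount = order.get("amount")
        if amount is not None and typical and amount > outlier_factor * typical:
            issues.append((index, "outlier"))

    return issues


batch = [
    {"order_id": "o1", "customer_id": "c1", "amount": 20.0},
    {"order_id": "o2", "customer_id": None, "amount": 25.0},
    {"order_id": "o1", "customer_id": "c2", "amount": 30.0},
    {"order_id": "o3", "customer_id": "c3", "amount": 5000.0},
]
issues = check_quality(batch)
print(issues)
```

A non-empty result is what would trigger an alert.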


Data Enrichment: Enrich your streaming data with additional context from external data sources. For example, enrich order data with customer demographics or product details from a database.

- Implemented Enums to generate additional information, plus GeoPy to get location information.
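As a rough illustration of Enum-based enrichment, the sketch below maps a raw field value to an Enum member and attaches extra attributes to the order. The `PaymentMethod` Enum, the risk labels, and the order keys are all hypothetical. The GeoPy lookup is left out because it needs network access.

```python
from enum import Enum


class PaymentMethod(Enum):
    """Hypothetical Enum used as a small reference table."""
    CARD = "card"
    PAYPAL = "paypal"
    CASH_ON_DELIVERY = "cash_on_delivery"


# Hypothetical extra context attached to each Enum member.
PAYMENT_RISK = {
    PaymentMethod.CARD: "low",
    PaymentMethod.PAYPAL: "medium",
    PaymentMethod.CASH_ON_DELIVERY: "high",
}


def enrich(order):
    """Return a copy of the order with fields derived from its payment method."""
    method = PaymentMethod(order["payment_method"])  # raises ValueError if unknown
    return {
        **order,
        "payment_label": method.name,
        "payment_risk": PAYMENT_RISK[method],
    }


enriched = enrich({"order_id": "o1", "payment_method": "paypal"})
print(enriched)
```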


Real-Time Analytics and Reporting: Build real-time analytics and reporting dashboards using tools like Databricks SQL, Power BI, or Tableau. Visualize key metrics such as order volume, sales trends, and customer behavior.

- Databricks dashboard based on Seaborn and PyPlot, reading from a real-time Delta Lake.
