This repository contains the implementation of DL-ROM: Deep Learning for Reduced Order Modelling and Efficient Temporal Evolution of Fluid Simulations.

Reduced Order Modelling (ROM) creates low-order, computationally inexpensive representations of higher-order dynamical systems. DL-ROM (Deep Learning - Reduced Order Modelling) uses neural networks to build non-linear projections to reduced-order states. It predicts future time steps of a simulation efficiently with 3D Autoencoder and 3D U-Net architectures, without ground-truth supervision and without solving the expensive Navier-Stokes equations. This yields substantial computational savings while maintaining accuracy.

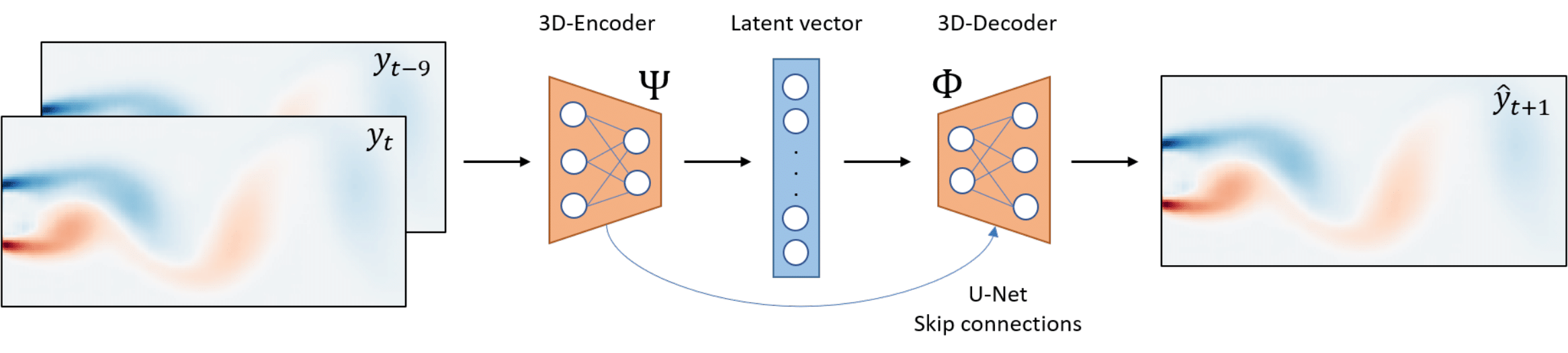

Framework for the transient Reduced Order Model (DL-ROM). Ten snapshots of previously solved CFD data are stacked and used as the model input. A 3D encoder reduces the high-dimensional CFD data to a reduced-order latent vector, which a 3D decoder then deconvolves to produce the high-dimensional CFD prediction at timestep t+1.
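The data flow in this caption can be sketched in NumPy as below. This is a minimal illustration under stated assumptions, not the repository's code: each snapshot is assumed to be a 2D field of shape `(H, W)`, and `make_windows`, `rollout` and `extrapolate_linearly` are hypothetical names. `extrapolate_linearly` is a trivial stand-in for the trained 3D autoencoder/U-Net, used only so the example runs. What the sketch illustrates is the windowing (10 past snapshots in, the snapshot at t+1 out) and the feedback loop used for in-the-loop prediction with `--simulate`, where each prediction becomes the newest input frame.

```python
import numpy as np

WINDOW = 10  # past snapshots stacked per input, as in the figure caption


def make_windows(snapshots: np.ndarray, window: int = WINDOW):
    """Turn a (T, H, W) sequence into stacked inputs and t+1 targets.

    Input i stacks snapshots i .. i+window-1; its target is snapshot i+window.
    Inputs get a channel axis, giving shape (T - window, 1, window, H, W),
    a layout suited to 3D convolutions. Targets have shape (T - window, H, W).
    """
    num_pairs = snapshots.shape[0] - window
    if num_pairs < 1:
        raise ValueError("need more than `window` snapshots")
    inputs = np.stack([snapshots[i:i + window] for i in range(num_pairs)])
    targets = snapshots[window:]
    return inputs[:, np.newaxis], targets


def extrapolate_linearly(x: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for the trained network (NOT DL-ROM itself).

    Takes a (1, 1, window, H, W) stack and returns an (H, W) guess for the
    next snapshot by linear extrapolation from the last two frames.
    """
    last, previous = x[0, 0, -1], x[0, 0, -2]
    return last + (last - previous)


def rollout(predict_next, history: np.ndarray, steps: int, window: int = WINDOW):
    """In-the-loop prediction: each output is fed back as the newest input frame.

    `history` must contain at least `window` known snapshots of shape (H, W).
    """
    if history.shape[0] < window:
        raise ValueError("history shorter than window")
    frames = list(history[-window:])
    predictions = []
    for _ in range(steps):
        # Only the most recent `window` frames are used, so the oldest one
        # drops out each time a new prediction is appended.
        stack = np.stack(frames[-window:])[np.newaxis, np.newaxis]
        next_frame = predict_next(stack)
        predictions.append(next_frame)
        frames.append(next_frame)
    return np.stack(predictions)


# Toy field that grows linearly in time, so linear extrapolation is exact.
field = np.arange(30, dtype=float)[:, None, None] * np.ones((1, 8, 8))
inputs, targets = make_windows(field)
print(inputs.shape, targets.shape)  # (20, 1, 10, 8, 8) (20, 8, 8)
predicted = rollout(extrapolate_linearly, field[:WINDOW], steps=20)
print(np.abs(predicted - field[WINDOW:]).max())  # 0.0
```

Because the toy field changes linearly in time, this rollout reproduces the ground truth exactly. With a learned model, errors generally accumulate as predictions are fed back in, which is what a per-timestep error plot makes visible.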

Clone the repository:

git clone https://github.com/Shen-Ming-Hong/DL-ROM.git

cd DL-ROM/

Download the 5 datasets used to evaluate the DL-ROM model and place them in the data folder.

The data can be downloaded from Google Drive. The Google Colab steps below fetch a Google Drive folder into the data directory with gdown.

Usage: test_benchmark.py [-h] [-N NUM_EPOCHS] [-B BATCH_SIZE] [-d_set {SST,2d_plate,2d_cylinder_CFD,2d_sq_cyl,channel_flow}] [--train] [--transfer] [--test] [-test_epoch EPOCH] [--simulate]

Arguments:

-h, --help Show this help message and exit

-N NUM_EPOCHS Number of epochs for model training

-B BATCH_SIZE The batch size of the dataset (default: 16)

-d_set DATASET_NAME Name of the dataset to train, test, or simulate with

--train Run DL-ROM in training mode

--transfer Use pretrained weights to speed up model convergence (must be used with --train flag)

--test Run DL-ROM in testing mode

-test_epoch EPOCH Use the weights saved at the epoch number given by EPOCH

--simulate Run DL-ROM in simulation mode (used for in-the-loop prediction of future simulation timesteps)

Link 👉 Google Colab

# Clone the DL-ROM GitHub repository

!git clone https://github.com/Shen-Ming-Hong/DL-ROM.git

# Change directory to the cloned DL-ROM directory

%cd DL-ROM/

# List the contents of the current directory

!ls

# Download the dataset from the specified Google Drive folder into the 'data' directory

!gdown --folder https://drive.google.com/drive/folders/1JI4jTBM1vE9AjkdxYce0GCDG9tCi5FtQ -O data

# List the contents of the 'data' directory to confirm the download

!ls data

# Change directory to the 'code' folder within the DL-ROM directory

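%cd code/

# Train DL-ROM for 100 epochs with batch size 16 on the 2d_cylinder_CFD dataset

!python main.py -N 100 -B 16 -d_set 2d_cylinder_CFD --train

# Test the model using the weights saved at epoch 100

!python main.py --test -test_epoch 100 -d_set 2d_cylinder_CFD

# Train starting from pretrained weights to speed up convergence

!python main.py --transfer -N 100 -B 16 -d_set 2d_cylinder_CFD --train

# Run in-the-loop simulation using the weights saved at epoch 100

!python main.py --simulate -test_epoch 100 -d_set 2d_cylinder_CFD

Usage: visualize.py [-mode {result, simulate}] [-d_set {SST,2d_plate,2d_cylinder_CFD,2d_sq_cyl,channel_flow}] [-freq] [--MSE] [--train_plot]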

Arguments:

-mode result: Plots the results on the validation set of the selected dataset, saving images at the frequency given by -freq

-mode simulate: Creates an MSE line plot for the validation set of the selected dataset and two animations: one of the prediction and one of the ground truth

-d_set DATASET_NAME: Dataset name (default: 2d_cylinder_CFD)

-freq INT: Frequency of saving images in -mode result (default: 20)

--MSE: Creates a bar plot comparing the MSE achieved by DL-ROM on all supported datasets

--train_plot: Plots the training and validation loss curves over epochs for the selected dataset

Examples:

python visualize.py -mode result -d_set 2d_cylinder_CFD -freq 10

python visualize.py -mode simulate -d_set 2d_cylinder_CFD

Citation:

@misc{pant2021deep,

title={Deep Learning for Reduced Order Modelling and Efficient Temporal Evolution of Fluid Simulations},

author={Pranshu Pant and Ruchit Doshi and Pranav Bahl and Amir Barati Farimani},

year={2021},

eprint={2107.04556},

archivePrefix={arXiv},

primaryClass={physics.flu-dyn}

}