
Shengjie Wang*, Shaohuai Liu*, Weirui Ye*, Jiacheng You, Yang Gao

International Conference on Machine Learning (ICML) 2024, Spotlight

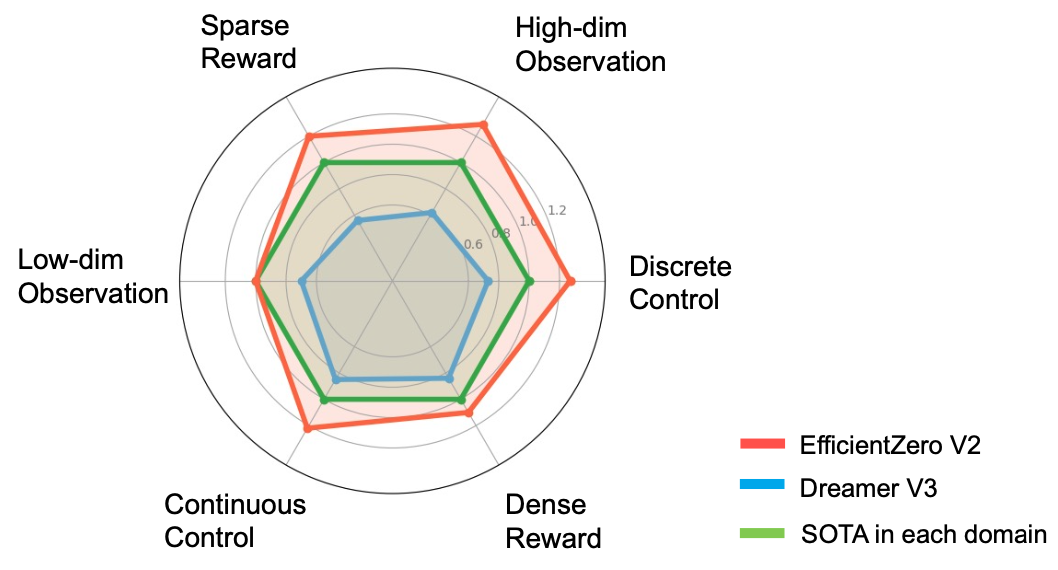

EfficientZero V2 is a general framework for sample-efficient RL. It extends the performance of EfficientZero to multiple domains, covering both continuous and discrete actions as well as visual and low-dimensional inputs.

EfficientZero V2 outperforms the current state of the art (SOTA) by a significant margin across diverse tasks in the limited-data setting. It shows a notable advancement over the prevailing general algorithm, DreamerV3, achieving superior results in 50 of 66 evaluated tasks across benchmarks such as Atari 100k, Proprio Control, and Vision Control.

- [2024-05-30] We have released the code for EfficientZero V2.

See INSTALL.md for installation instructions.

Then, you can run the following command to start training:

```bash
#!/bin/bash
export OMP_NUM_THREADS=1
export CUDA_VISIBLE_DEVICES=0,1
export HYDRA_FULL_ERROR=1

# Port for DDP (uncomment to set)
# export MASTER_PORT='12300'

# Atari
python ez/train.py exp_config=ez/config/exp/atari.yaml

# DMC state
python ez/train.py exp_config=ez/config/exp/dmc_state.yaml

# DMC image
python ez/train.py exp_config=ez/config/exp/dmc_image.yaml
```

Note that if you modify the variable `total_transitions`, you should modify `buffer_size` along with it. Reducing `training_steps` shortens the training time. In general, setting `training_steps` equal to `total_transitions` is sufficient for good performance.
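The relationship between these three variables can be checked before launching a run. The following is a minimal sketch and not part of the repository: `check_budget` is a hypothetical helper, the config is assumed to be available as a flat dict, and the rule that `buffer_size` should be at least `total_transitions` is only an assumption about what modifying them "together" means.

```python
def check_budget(cfg: dict) -> list:
    """Return human-readable warnings for a config dict (hypothetical helper)."""
    warnings = []
    total = cfg["total_transitions"]

    # Assumption: the replay buffer should be able to hold every collected transition.
    if cfg["buffer_size"] < total:
        warnings.append(
            f"buffer_size ({cfg['buffer_size']}) is smaller than total_transitions ({total})"
        )

    # The note above suggests training_steps equal to total_transitions as a default.
    if cfg["training_steps"] != total:
        warnings.append(
            f"training_steps ({cfg['training_steps']}) differs from total_transitions ({total})"
        )
    return warnings


if __name__ == "__main__":
    # Illustrative values only, not taken from the repository's configs.
    cfg = {"total_transitions": 100_000, "buffer_size": 50_000, "training_steps": 100_000}
    for message in check_budget(cfg):
        print("warning:", message)
```

A mismatch in `training_steps` is reported as a warning rather than an error, since reducing it is a legitimate way to trade performance for training time.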

- Add a configuration file in `config/exp/your_env.yaml` and define `agent_name`, etc.
- Implement your own agent in the `agents` directory (you can directly inherit from `base.Agent`).
- Refer to the provided agent implementations for image/state, discrete/continuous setups to implement your own agent accordingly.
- Run the following command:

  ```bash
  python ez/train.py exp_config=ez/config/exp/your_env.yaml
  ```
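The first two steps can be pictured as follows. This is a minimal, self-contained sketch under stated assumptions: the `Agent` class here is a stand-in for the repository's `base.Agent` (whose real constructor and methods are not described in this README), and `YourEnvAgent`, `AGENT_REGISTRY`, and `build_agent` are hypothetical names. The repository's actual mechanism for resolving `agent_name` may differ.

```python
class Agent:
    """Stand-in for base.Agent; the real class defines its own interface."""

    def __init__(self, config: dict):
        self.config = config


class YourEnvAgent(Agent):
    """Hypothetical agent for a new environment."""

    def __init__(self, config: dict):
        super().__init__(config)
        # Environment-specific setup would go here.
        self.name = config["agent_name"]


# Hypothetical lookup from the agent_name config value to an agent class.
AGENT_REGISTRY = {"your_env_agent": YourEnvAgent}


def build_agent(config: dict) -> Agent:
    """Instantiate the agent class selected by config['agent_name']."""
    agent_name = config["agent_name"]
    if agent_name not in AGENT_REGISTRY:
        raise KeyError(f"unknown agent_name: {agent_name!r}")
    return AGENT_REGISTRY[agent_name](config)


if __name__ == "__main__":
    agent = build_agent({"agent_name": "your_env_agent"})
    print(type(agent).__name__)
```

For the real interface a new agent must satisfy, the provided image/state and discrete/continuous agent implementations remain the reference.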

To evaluate, run `bash eval.sh`. You can modify the evaluation settings in `config/config.yaml`.

EfficientZero V2 outperforms or is comparable to SOTA baselines in multiple domains. The results can be found in the `media/img` folder.

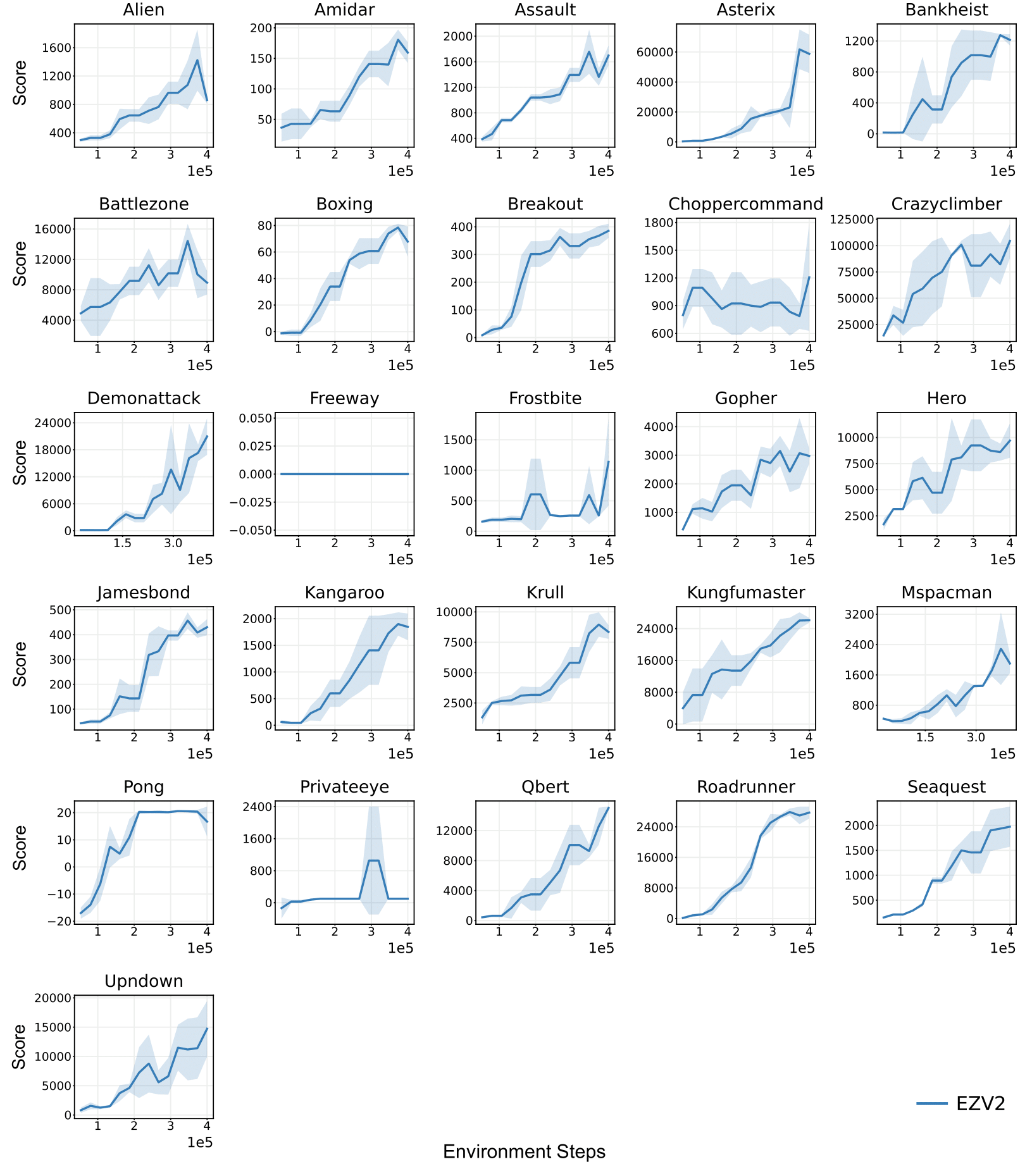

Atari 100k

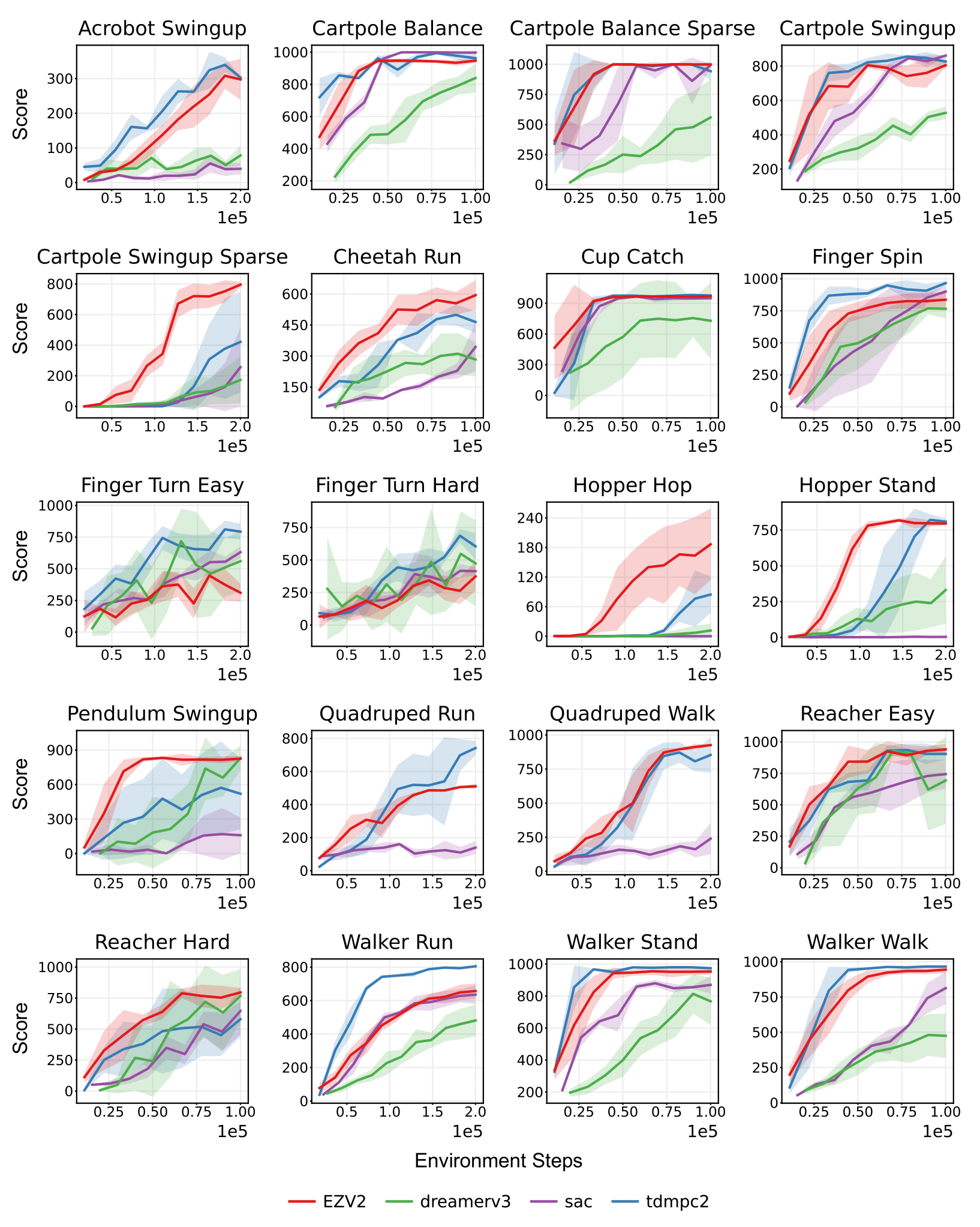

DMC State

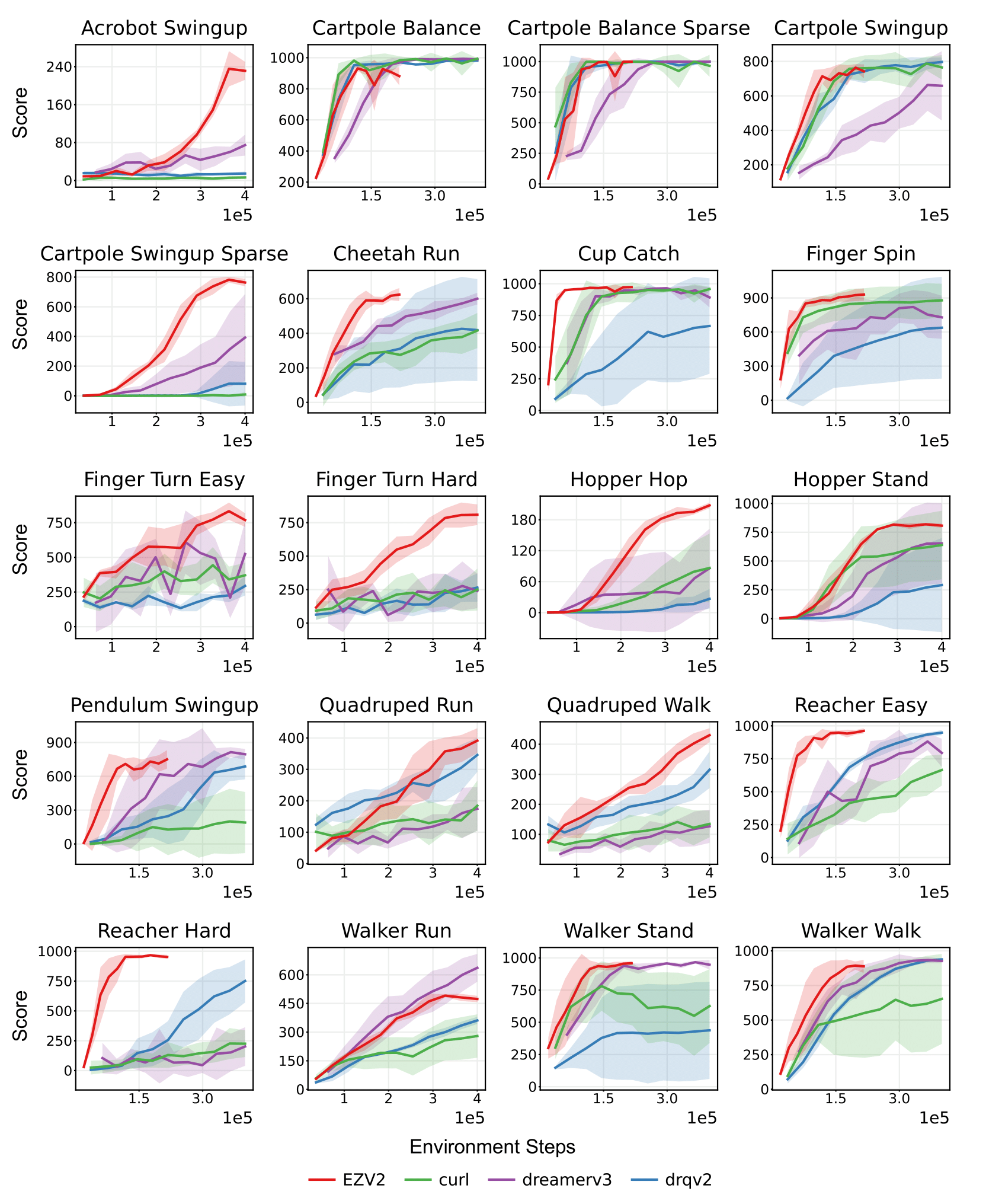

DMC Image

This repository is released under the GPL license. See LICENSE for additional details.

Our code is largely built upon EfficientZero. We thank its authors for their open-source code and their great contributions to the community.

Contact Shengjie Wang, Shaohuai Liu and Weirui Ye if you have any questions or suggestions.

If you find our work useful, please consider citing:

```bibtex
@article{wang2024efficientzero,
  title={EfficientZero V2: Mastering Discrete and Continuous Control with Limited Data},
  author={Wang, Shengjie and Liu, Shaohuai and Ye, Weirui and You, Jiacheng and Gao, Yang},
  journal={arXiv preprint arXiv:2403.00564},
  year={2024}
}
```