This project is a port of Andrej Karpathy's llm.c to Mojo, currently in beta. It is under active development and subject to significant changes. Users should expect to encounter bugs and unfinished features.

- train_gpt2_basic.mojo: Basic port of train_gpt2.c to Mojo, which does not leverage Mojo's capabilities. Beyond the initial commit, we will not provide further updates for the 'train_gpt2_basic' version, except for necessary bug fixes.

- train_gpt2.mojo: Enhanced version that uses Mojo's performance features, such as vectorization and parallelization. Work in progress.
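
As a rough illustration of those two techniques, the sketch below mirrors their loop structure in plain Python. It is not code from this repository: `SIMD_WIDTH`, `dot_chunked`, and `matvec_parallel` are hypothetical names, and Python threads will not give the speedup that real SIMD instructions and multi-core scheduling give in Mojo. The point is only the shape of the loops: the inner dot product advances in fixed-width chunks (the vectorization pattern), and the independent output rows are handed to separate workers (the parallelization pattern).

```python
from concurrent.futures import ThreadPoolExecutor

SIMD_WIDTH = 4  # hypothetical lane count, chosen only for illustration


def dot_chunked(a, b, width=SIMD_WIDTH):
    """Dot product accumulated in fixed-width chunks, shaped like a SIMD loop."""
    lanes = [0.0] * width
    n = len(a)
    main = n - n % width  # largest multiple of `width` that fits in n
    for i in range(0, main, width):
        for lane in range(width):  # one "vector" multiply-add per chunk
            lanes[lane] += a[i + lane] * b[i + lane]
    total = sum(lanes)
    for i in range(main, n):  # scalar tail for the leftover elements
        total += a[i] * b[i]
    return total


def matvec_parallel(weight, x, workers=4):
    """Each output row is independent, so rows can go to separate workers."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot_chunked(row, x), weight))


weight = [
    [1.0, 2.0, 3.0, 4.0, 5.0],
    [0.5, 0.5, 0.5, 0.5, 0.5],
    [0.0, -1.0, 0.0, 1.0, 0.0],
]
x = [1.0, 1.0, 2.0, 2.0, 3.0]
result = matvec_parallel(weight, x)
print(result)
```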

Visit llm.c for a detailed explanation of the original project. To use llm.mojo, follow the essential steps below:

First, install the Python requirements:

`pip install -r requirements.txt`

Use the tinyshakespeare dataset for a quick setup. This dataset is the fastest to download and tokenize. Run the following command to download and prepare the dataset:

`python prepro_tinyshakespeare.py`

(All Python scripts in this repo are from Andrej Karpathy's llm.c repository.)

Alternatively, download and tokenize the larger TinyStories dataset with the following command:

`python prepro_tinystory.py`

Next, download the GPT-2 weights and save them as a checkpoint that can be loaded in Mojo, using the following command:

python train_gpt2.pyTrain your model using the downloaded and tokenized data by running:

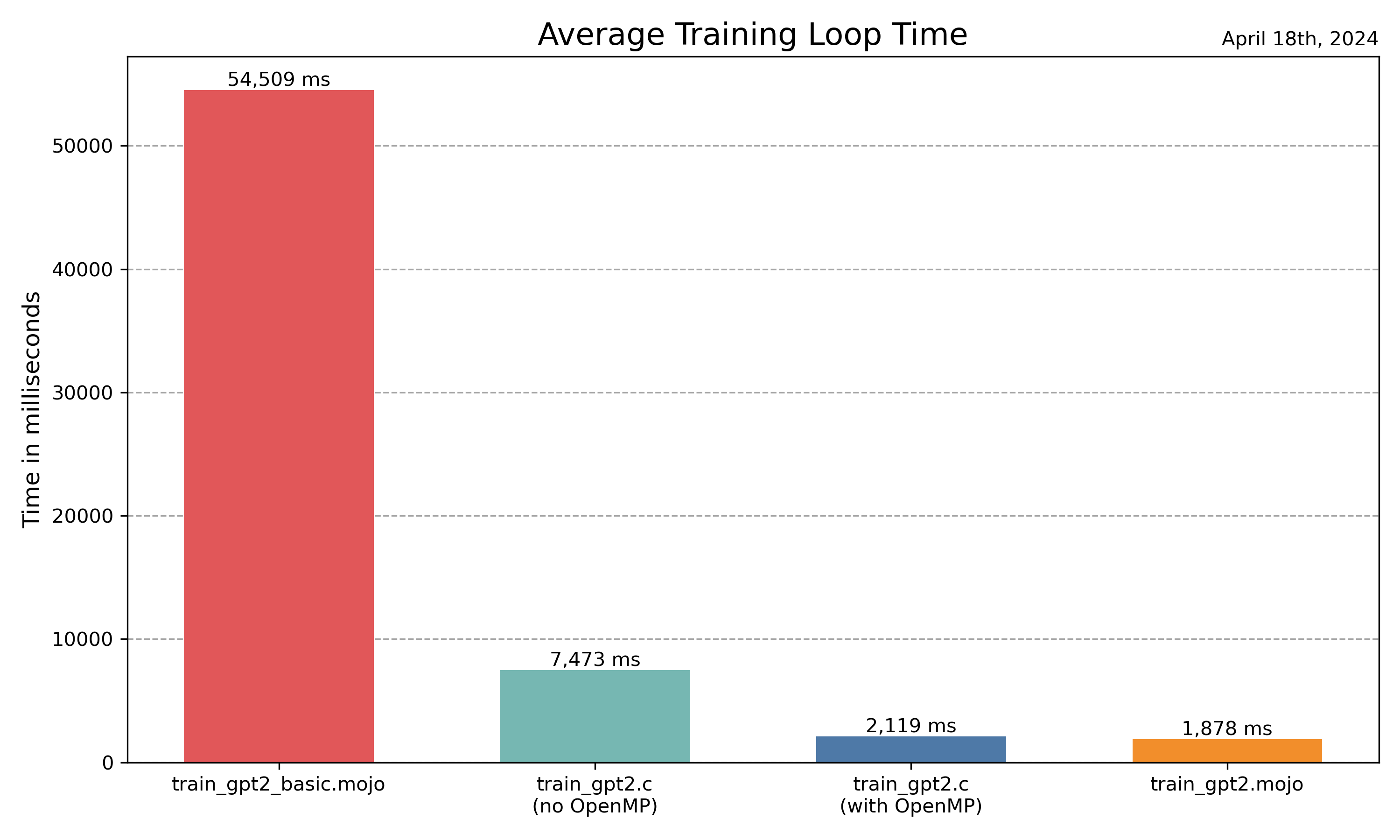

mojo train_gpt.mojoPreliminary benchmark results: (M2 MacBook Pro)

| Implementation | Average Training Loop Time |
|---|---|
| train_gpt2.mojo | 1878 ms |
| train_gpt2.c (with OpenMP) | 2119 ms |
| train_gpt2.c (no OpenMP) | 7473 ms |
| train_gpt2_basic.mojo | 54509 ms |
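
To read the table as relative figures, the short Python sketch below divides each timing by the `train_gpt2.mojo` time. The numbers are copied from the table above. The variable names are illustrative, and the ratios inherit the caveats of the measurements themselves (preliminary, single machine).

```python
# Average training loop times in milliseconds, copied from the table above.
timings_ms = {
    "train_gpt2.mojo": 1878,
    "train_gpt2.c (with OpenMP)": 2119,
    "train_gpt2.c (no OpenMP)": 7473,
    "train_gpt2_basic.mojo": 54509,
}

baseline = timings_ms["train_gpt2.mojo"]

# Ratio of each implementation's loop time to the train_gpt2.mojo loop time.
relative = {name: ms / baseline for name, ms in timings_ms.items()}

for name, ratio in relative.items():
    print(f"{name}: {ratio:.2f}x the train_gpt2.mojo time")
```

On these figures, the OpenMP build of `train_gpt2.c` takes about 1.13x as long per loop as `train_gpt2.mojo`, the build without OpenMP about 3.98x, and `train_gpt2_basic.mojo` about 29x.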

- Implementation Improvement: Enhance `train_gpt2.mojo` to fully exploit Mojo's capabilities, including further optimization for speed and efficiency.

- Port test_gpt2.c to Mojo: Coming soon

- Following Changes of llm.c: Regularly update the Mojo port to align with the latest improvements and changes made to `llm.c`.

- Solid Benchmarks: Develop comprehensive and reliable benchmarks to accurately measure performance improvements and compare them against other implementations.
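
For the benchmarking item, one possible shape for a timing harness is sketched below in Python. It is not the method used for the figures in this README: `average_step_ms` and `dummy_step` are hypothetical names, and a real harness would call one training step of the implementation under test where the dummy workload is. The sketch runs a few untimed warm-up steps first, then averages the wall-clock time of the timed steps.

```python
import time


def average_step_ms(step, steps=10, warmup=2):
    """Average wall-clock time of `step()` in milliseconds, after warm-up runs."""
    for _ in range(warmup):
        step()  # untimed: lets caches and lazy initialization settle
    timings = []
    for _ in range(steps):
        start = time.perf_counter()
        step()
        timings.append((time.perf_counter() - start) * 1000.0)
    return sum(timings) / len(timings)


calls = []


def dummy_step():
    """Stand-in workload; a real benchmark would run one training step here."""
    calls.append(1)
    sum(i * i for i in range(1000))


avg_ms = average_step_ms(dummy_step, steps=5, warmup=1)
print(f"average step time: {avg_ms:.3f} ms over 5 timed steps")
```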

- 2024.04.18
  - Upgraded project status to Beta.
  - Further optimizations of train_gpt2.mojo.
- 2024.04.16
  - Vectorize parameter update
- 2024.04.15
  - Tokenizer added (`train_gpt2.c` update 2024.04.14)
  - Bug fix in `attention_backward`
- 2024.04.13
  - Initial repository setup and first commit.

License: MIT