Read the research paper.

- Noise (e.g. from mini-batch gradient estimates) helps optimizers escape saddle points and shallow local minima

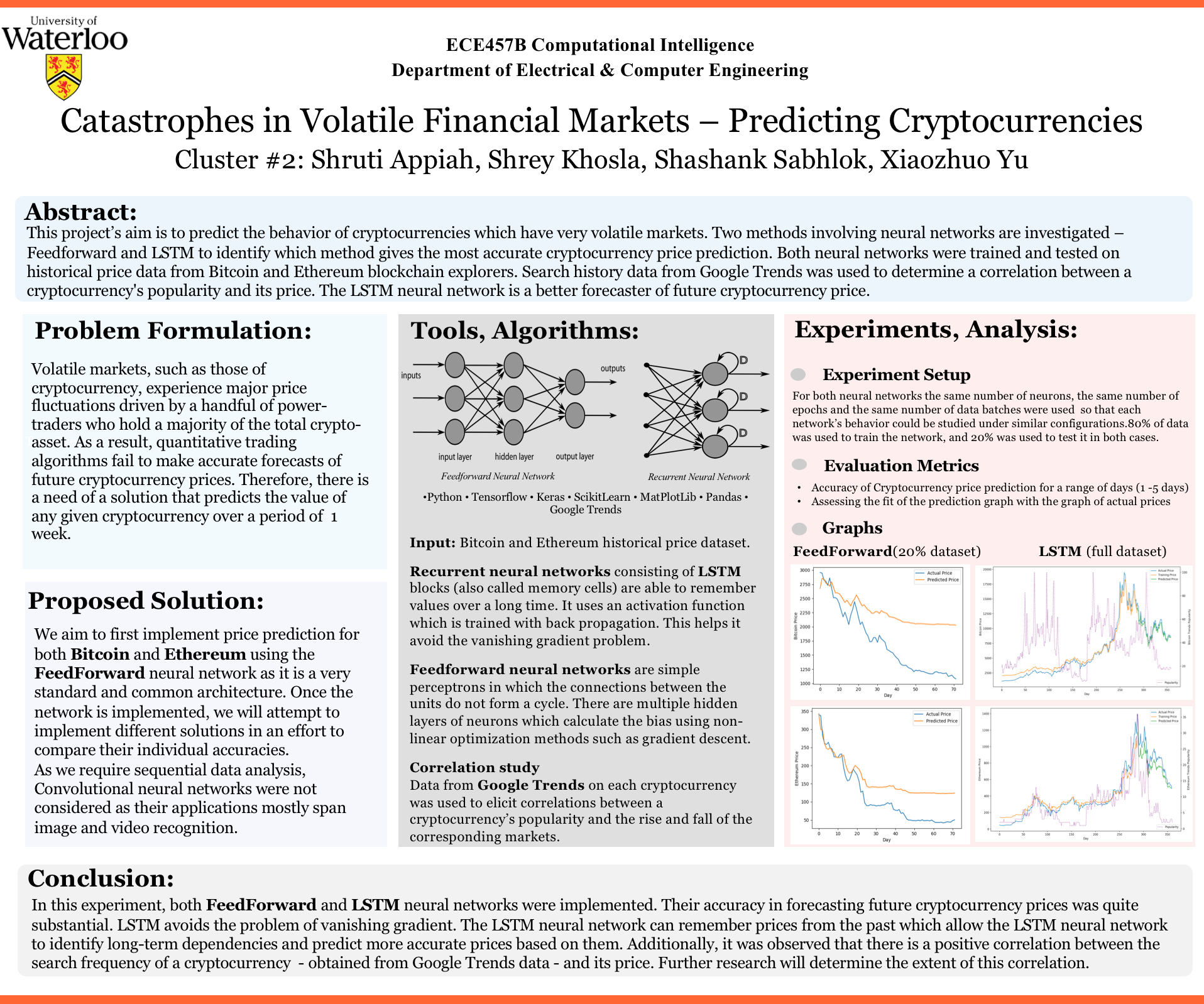
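To illustrate the saddle-point claim, here is a minimal sketch on a hypothetical toy loss `f(x, y) = x**2 + y**4 / 4 - y**2 / 2`, which has a saddle at (0, 0) and minima at (0, 1) and (0, -1). Starting on the ridge y = 0, plain gradient descent never receives a y-gradient and converges to the saddle. Adding small Gaussian noise to each gradient, as a stand-in for mini-batch noise, lets it roll off toward one of the minima. The loss, learning rate, noise level, and the `descend` helper are illustrative assumptions, not anything from the paper.

```python
import random

def grad(x, y):
    # Gradient of the hypothetical toy loss f(x, y) = x**2 + y**4 / 4 - y**2 / 2
    return 2 * x, y ** 3 - y

def descend(x, y, lr=0.1, steps=500, noise_std=0.0, seed=0):
    rng = random.Random(seed)
    for _ in range(steps):
        gx, gy = grad(x, y)
        # Optional Gaussian noise on the gradient (assumed model of stochastic gradients)
        gx += rng.gauss(0.0, noise_std)
        gy += rng.gauss(0.0, noise_std)
        x -= lr * gx
        y -= lr * gy
    return x, y

# Plain gradient descent: y stays exactly 0, so it converges to the saddle point (0, 0)
print(descend(1.0, 0.0))
# Noisy gradient descent: the noise pushes y off the ridge and it settles near y = +1 or -1
print(descend(1.0, 0.0, noise_std=0.01))
```

The noise only has to break the symmetry once; after that the negative curvature along y amplifies the perturbation.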

- LSTM (Long Short-Term Memory) neural networks can capture long-term dependencies. They remember things from the past just like your girlfriend does. Read more about gradient descent (and the vanishing-gradient problem LSTMs were designed to address).

- The Adam optimizer combines ideas from Adaptive Gradient (AdaGrad) and Root Mean Square Propagation (RMSProp).

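- In a distribution, the first-order moment is the mean. The second-order central moment is the variance; the raw (uncentered) second moment is the mean of the squared values, which is what these optimizers track for gradients.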

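- AdaGrad is good at handling sparse gradients. It divides each parameter's learning rate by the square root of the sum of all past squared gradients (a running second-order moment), so rarely updated parameters keep larger steps.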

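- RMSProp also relies on a second-order moment, but it keeps an exponentially decaying average of squared gradients instead of AdaGrad's full sum, so its learning rate does not shrink toward zero. The first-order moment (the mean of the gradients, i.e. momentum) is what Adam adds on top.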

- Combined, the Adam optimizer uses bias-corrected estimates of both moments to set an adaptive, per-parameter step size in each iteration.
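The three update rules can be compared in a minimal scalar sketch. It follows the rules as they are commonly stated (AdaGrad's running sum of squared gradients, RMSProp's decaying average of squared gradients, Adam's two bias-corrected moments) and minimizes a hypothetical toy loss `f(theta) = (theta - 3)**2`. The helper names, the learning rates, and the loss are illustrative assumptions; `beta1 = 0.9`, `beta2 = 0.999` and `eps = 1e-8` are the commonly used Adam defaults.

```python
import math

def adagrad_step(theta, g, state, lr=0.1, eps=1e-8):
    # AdaGrad: accumulate the sum of ALL past squared gradients
    state["sum_sq"] = state.get("sum_sq", 0.0) + g * g
    return theta - lr * g / (math.sqrt(state["sum_sq"]) + eps)

def rmsprop_step(theta, g, state, lr=0.01, rho=0.9, eps=1e-8):
    # RMSProp: exponentially decaying average of squared gradients (old ones fade out)
    state["avg_sq"] = rho * state.get("avg_sq", 0.0) + (1 - rho) * g * g
    return theta - lr * g / (math.sqrt(state["avg_sq"]) + eps)

def adam_step(theta, g, state, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    t = state["t"] = state.get("t", 0) + 1
    # First moment: decaying mean of the gradients (momentum)
    state["m"] = beta1 * state.get("m", 0.0) + (1 - beta1) * g
    # Second moment: decaying mean of squared gradients (uncentered, as in RMSProp)
    state["v"] = beta2 * state.get("v", 0.0) + (1 - beta2) * g * g
    # Bias correction, because m and v are initialized at zero
    m_hat = state["m"] / (1 - beta1 ** t)
    v_hat = state["v"] / (1 - beta2 ** t)
    return theta - lr * m_hat / (math.sqrt(v_hat) + eps)

def minimize(step, theta=5.0, iters=2000):
    # Hypothetical toy loss f(theta) = (theta - 3)**2, whose gradient is 2 * (theta - 3)
    state = {}
    for _ in range(iters):
        theta = step(theta, 2 * (theta - 3.0), state)
    return theta

for step in (adagrad_step, rmsprop_step, adam_step):
    print(step.__name__, round(minimize(step), 3))
```

In this sketch `v` is the uncentered second moment (the mean of squared gradients), not the variance proper. With a constant learning rate, RMSProp tends to hover within roughly one step size of the minimum instead of settling exactly, while the slowly decaying `v` in Adam keeps its effective step small enough for the momentum term to damp out.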

Copyright (c) 2018 Shruti Appiah