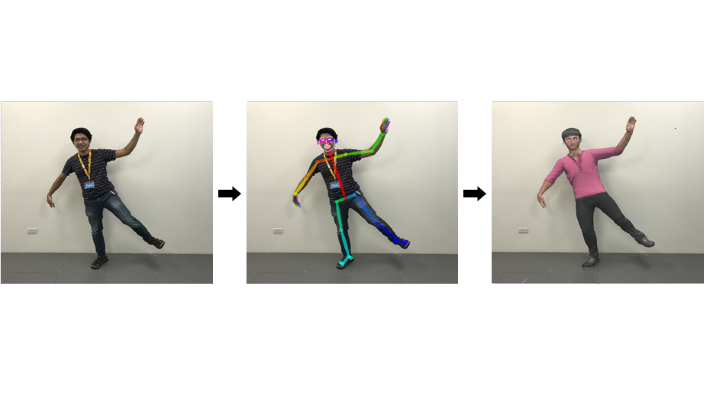

Following the "Everybody Dance Now" topic, we tried to make anime characters move as we move. We provide 3 sets of different Mixamo characters for this image generation task. A generator-discriminator network was built to learn anime image generation from keypoints for each character. The network was trained on pairs of an image and the corresponding keypoints of the character's pose, so that each keypoint set can generate an image of the specific anime character in the same pose. Hope you enjoy teaching these characters to dance!

Data can be downloaded from Kaggle. The images were fetched from Mixamo and resized to 512x512. There are more than 4000 images of each character in different poses. The corresponding keypoints (facial features, joints, ...) were generated by OpenPose:

- 70 facial keypoints

- 25 body keypoints

- 21 left-hand keypoints

- 21 right-hand keypoints

The specific keypoint assignment can be found in the OpenPose doc file.
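As a rough illustration of how these four keypoint groups can be read, here is a minimal sketch. It assumes the keypoint files follow OpenPose's standard JSON output, where each group is a flat list `[x0, y0, c0, x1, y1, c1, ...]` under `people[i]`. The layout of the files in this dataset may differ, and `split_keypoints` and `load_keypoints` are hypothetical helper names, not part of this repository.

```python
import json

# Keypoint counts as listed above. The field names follow OpenPose's standard
# JSON output; that this dataset uses the same names is an assumption.
EXPECTED_COUNTS = {
    "face_keypoints_2d": 70,
    "pose_keypoints_2d": 25,
    "hand_left_keypoints_2d": 21,
    "hand_right_keypoints_2d": 21,
}


def split_keypoints(person):
    """Convert flat [x, y, confidence, ...] lists into (x, y, confidence) triples."""
    result = {}
    for field, count in EXPECTED_COUNTS.items():
        flat = person[field]
        if len(flat) != count * 3:
            raise ValueError(
                f"{field}: expected {count * 3} values, got {len(flat)}"
            )
        # Group every three consecutive values into one keypoint.
        result[field] = [tuple(flat[i:i + 3]) for i in range(0, len(flat), 3)]
    return result


def load_keypoints(json_text):
    """Parse an OpenPose-style JSON string and return the first person's keypoints."""
    data = json.loads(json_text)
    return split_keypoints(data["people"][0])
```

Together the four groups give 137 keypoints per image, each with an x coordinate, a y coordinate, and a confidence score.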

Enter the directory "dance_now/train_scripts/" and run the scripts in the following order:

- 0.make_real_head.py: Crop 64x64 head image from given anime image according to skeleton

- 1.train_bodystick.py: Train stick-to-body generation with multiple GPUs and save the model

- 2.cut_fake_head.py: Crop 64x64 head image from generated anime image according to skeleton

- 3_1.train_face_residue.py: Train the face residue enhancement process from raw generated face to the precise given anime face.
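The head-cropping steps (scripts 0 and 2) can be sketched as follows. This is only an illustration under stated assumptions: it centers the 64x64 window on a single head keypoint (for example the nose, index 0 in OpenPose's BODY_25 layout) and shifts the window to stay inside the 512x512 image. The actual scripts may locate the head differently, and `head_crop_box` is a hypothetical name.

```python
def head_crop_box(head_x, head_y, image_size=512, crop_size=64):
    """Return (left, top, right, bottom) of a crop_size window around the head.

    Assumption: (head_x, head_y) is one head keypoint in pixel coordinates,
    e.g. the nose from the body keypoints.
    """
    half = crop_size // 2
    left = int(round(head_x)) - half
    top = int(round(head_y)) - half
    # Shift the window back inside the image if it would cross a border,
    # so the crop is always exactly crop_size x crop_size.
    left = max(0, min(left, image_size - crop_size))
    top = max(0, min(top, image_size - crop_size))
    return (left, top, left + crop_size, top + crop_size)
```

The returned tuple uses the (left, upper, right, lower) order that Pillow's `Image.crop` expects, so it could be passed directly as `image.crop(head_crop_box(x, y))`. Using the same box for the real and the generated image keeps the two head crops aligned for the face residue training step.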

In the root directory: The pre-trained NN models can be found in Drive. "inference_openpose.py" can be imported with the NN models preloaded, and "try_preload.py" is provided for testing inference.

(The model is described further at https://hackmd.io/@usmile/Bkn9Vz-7P)

Thanks to Taiwan AI Academy for the opportunity to form a GAN study group. Thanks to Eric for inviting all the members to the group. Thanks to all the members: Cloud, Eric, SeanLin, ShuYu, and the TA of the GAN study group.

Everybody Dance Now is a topic on GitHub about transferring one person's movement to another person. Inspired by this project, we propose automatically replacing a person in a photo with a specific anime character in the same pose.

- Chan, C., Ginosar, S., Zhou, T., & Efros, A. A. (2019). Everybody dance now. In Proceedings of the IEEE International Conference on Computer Vision (pp. 5933-5942).

- Isola, P., Zhu, J. Y., Zhou, T., & Efros, A. A. (2017). Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 1125-1134).

- Paszke, A., Gross, S., Massa, F., Lerer, A., Bradbury, J., Chanan, G., … Chintala, S. (2019). PyTorch: An Imperative Style, High-Performance Deep Learning Library. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, & R. Garnett (Eds.), Advances in Neural Information Processing Systems 32 (pp. 8024–8035). Curran Associates, Inc.

- Cao, Z., Hidalgo, G., Simon, T., Wei, S. E., & Sheikh, Y. (2018). OpenPose: realtime multi-person 2D pose estimation using Part Affinity Fields. arXiv preprint arXiv:1812.08008.

- Xu, N., Price, B., Cohen, S., & Huang, T. (2017). Deep image matting. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 2970-2979).

- Chen, L. C., Zhu, Y., Papandreou, G., Schroff, F., & Adam, H. (2018). Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European conference on computer vision (ECCV) (pp. 801-818).

- Wang, T. C., Liu, M. Y., Zhu, J. Y., Tao, A., Kautz, J., & Catanzaro, B. (2018). High-resolution image synthesis and semantic manipulation with conditional gans. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 8798-8807).