Packing Analysis: Packing Is More Appropriate for Large Models or Datasets in Supervised Fine-tuning

This repo is for our paper: Packing Analysis: Packing Is More Appropriate for Large Models or Datasets in Supervised Fine-tuning.

- October 6, 2024 we released our scripts, checkpoints and data.

- October 6, 2024 we released our paper on arXiv.


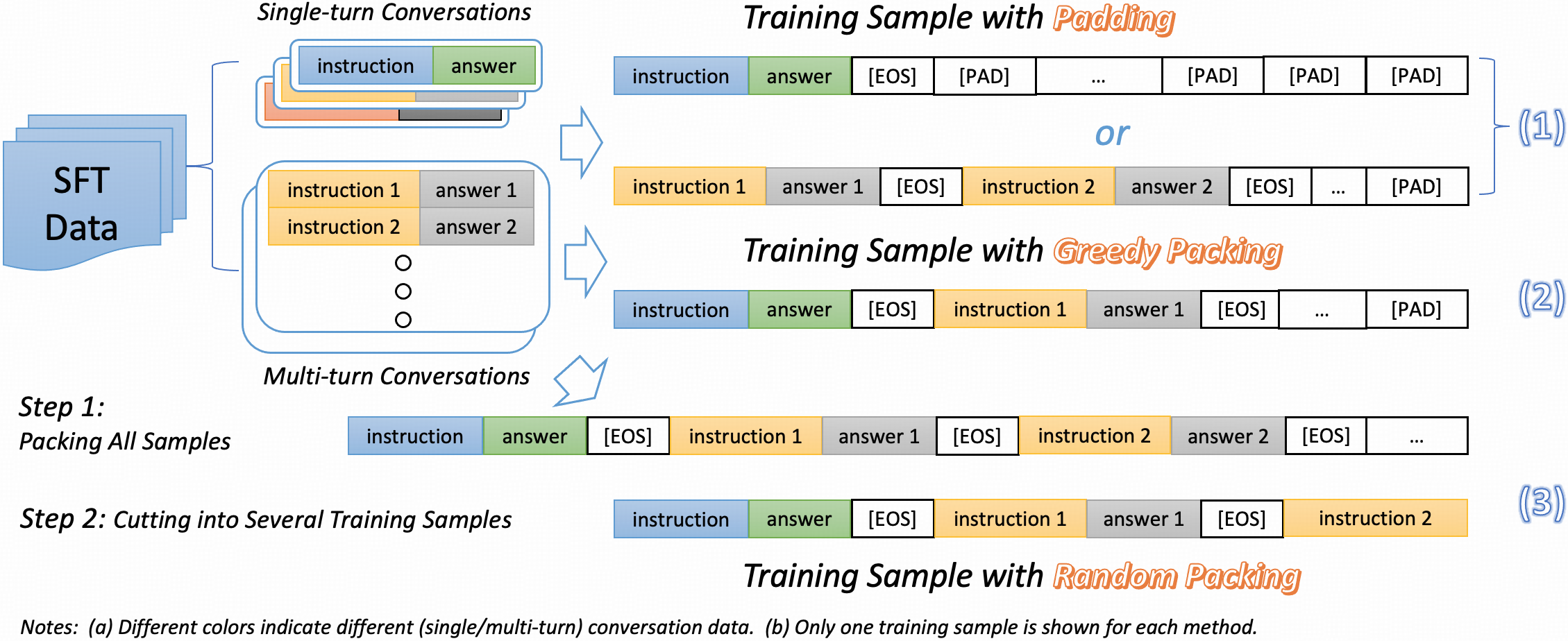

Packing, initially utilized in the pre-training phase, is an optimization technique designed to maximize hardware resource efficiency by combining different training sequences to fit the model's maximum input length. Although it has demonstrated effectiveness during pre-training, there remains a lack of comprehensive analysis for the supervised fine-tuning (SFT) stage on the following points: (1) whether packing can effectively enhance training efficiency while maintaining performance, (2) the suitable size of the model and dataset for fine-tuning with the packing method, and (3) whether packing unrelated or related training samples might cause the model to either excessively disregard or over-rely on the context.

To address these concerns, we provide a thorough analysis of packing during the supervised fine-tuning (SFT) stage. Specifically, we perform extensive comparisons between SFT with padding and SFT with packing, covering SFT datasets ranging from 69K to 1.2M samples and models from 8B to 70B parameters. Our comparisons include knowledge, reasoning, and coding benchmarks, GPT-based evaluations, time efficiency, and other fine-tuning parameters, and lead us to conclude that:

- Models using packing generally perform better on average compared to those using padding across various benchmarks.

- As the model size grows, the performance gap between padding-based and packing-based models on the benchmarks widens.

- Tailoring the packing of specific training samples may result in desired performance on specific benchmarks.

- Compared to padding, the packing method greatly reduces training time, making it possible to fine-tune large models on large datasets.

- Using longer training samples increases the time required for the packing method to process each sample, making it less suitable for training on particularly small datasets.

- In packing mode, the batch size is no longer directly proportional to the learning rate.

- Applying packing to datasets with only single-turn conversations may lead to a significant decrease in performance on few-shot benchmarks.
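The sketch below illustrates, on made-up sample lengths (in tokens), the accounting behind the efficiency and batch-size points above. It is not this repository's code: packing here uses first-fit-decreasing bin packing (an assumption; the paper's Random and Greedy Packing may work differently), padding is modeled as one sample per row padded to max_len, the 2048-token max_len is illustrative, and all function names are hypothetical.

```python
from typing import List


def pad_rows(lengths: List[int], max_len: int) -> List[List[int]]:
    """Padding: one sample per row, truncated to max_len and padded up to it."""
    return [[min(n, max_len)] for n in lengths]


def pack_rows_ffd(lengths: List[int], max_len: int) -> List[List[int]]:
    """Packing via first-fit decreasing: place each sample in the first row with room."""
    rows: List[List[int]] = []
    free: List[int] = []  # remaining token capacity of each row
    for n in sorted((min(n, max_len) for n in lengths), reverse=True):
        for i, cap in enumerate(free):
            if n <= cap:
                rows[i].append(n)
                free[i] -= n
                break
        else:  # no existing row has room, so open a new one
            rows.append([n])
            free.append(max_len - n)
    return rows


def utilization(rows: List[List[int]], max_len: int) -> float:
    """Share of token slots holding real tokens rather than padding."""
    return sum(map(sum, rows)) / (len(rows) * max_len)


def samples_per_step(rows: List[List[int]], rows_per_batch: int) -> List[int]:
    """Number of original samples covered by each batch of `rows_per_batch` rows."""
    counts = [len(r) for r in rows]
    return [sum(counts[i:i + rows_per_batch]) for i in range(0, len(counts), rows_per_batch)]


lengths = [900, 300, 1500, 200, 700, 1800, 400, 100]
for name, rows in (("padding", pad_rows(lengths, 2048)), ("packing", pack_rows_ffd(lengths, 2048))):
    print(name, len(rows), round(utilization(rows, 2048), 2), samples_per_step(rows, 2))
```

With these lengths, padding needs 8 rows at about 36% token utilization, while packing needs 3 rows at about 96%. Batches of two rows cover 2 samples each under padding but 5 and then 3 samples under packing, so a fixed batch size in rows no longer fixes how many samples each update sees.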

Illustrations of our comparisons are as follows:

We use LLaMA-Factory as our codebase. To get started, please first clone this repo and then run:

pip install -e ".[torch,metrics]"

In this part, we offer links to download our training data.

Please cite the original data when using it.

After downloading the data, move the files into the data folder, for example:

mv wildchat_gpt.json ./data

Then, follow the format below to update the file data/dataset_info.json:

"wildchat_gpt": {
  "file_name": "wildchat_gpt.json",
  "formatting": "sharegpt",
  "columns": {
    "messages": "conversations"
  }
}
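If you prefer to script this step, the following minimal sketch adds the same entry to data/dataset_info.json while keeping the datasets already registered there. The helper names are hypothetical, and the sketch assumes the file is a single JSON object keyed by dataset name, as the entry above suggests.

```python
import json
from pathlib import Path


def add_sharegpt_entry(info: dict, name: str, file_name: str) -> dict:
    """Return a copy of `info` with a ShareGPT-format entry named `name` added."""
    updated = dict(info)  # keep any datasets that are already registered
    updated[name] = {
        "file_name": file_name,  # file placed under ./data
        "formatting": "sharegpt",
        "columns": {"messages": "conversations"},  # key holding the dialogue turns
    }
    return updated


def register_dataset(info_path: Path, name: str, file_name: str) -> None:
    """Read dataset_info.json, add the entry, and write the file back."""
    info = json.loads(info_path.read_text(encoding="utf-8"))
    info = add_sharegpt_entry(info, name, file_name)
    info_path.write_text(json.dumps(info, indent=2, ensure_ascii=False) + "\n", encoding="utf-8")
```

Run it from the repository root, e.g. register_dataset(Path("data/dataset_info.json"), "wildchat_gpt", "wildchat_gpt.json"). Note that json.dumps re-indents the whole file when it is written back.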

- Download the backbone LLaMA models (LLaMA-3-8B and LLaMA-3-70B).

- Fill in MODEL, DATANAME and OUTPUTDIR in our provided training scripts:

  - LLaMA-3-8B

    - single node: ./train_scripts/llama_3_8B/single_node.sh

    - multi node: ./train_scripts/llama_3_8B/multi_node.sh

  - LLaMA-3-70B

    - multi node: ./train_scripts/llama_3_70B/multi_node.sh

- Run the training script, for example:

bash ./train_scripts/llama_3_8B/multi_node.sh
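Filling in the three variables can also be scripted. The helper below is hypothetical and assumes, without having checked the scripts, that MODEL, DATANAME and OUTPUTDIR are each assigned on their own line as NAME=value (optionally prefixed with export); adjust the pattern if the scripts are structured differently.

```python
import re
import shlex


def fill_script_vars(script_text: str, values: dict) -> str:
    """Set `NAME=...` (or `export NAME=...`) shell assignments to the given values.

    Hypothetical helper: assumes each variable is assigned on its own line.
    """
    for name, value in values.items():
        pattern = re.compile(
            rf"^([ \t]*(?:export[ \t]+)?{re.escape(name)}=).*$", re.MULTILINE
        )
        # shlex.quote protects values containing spaces or shell metacharacters
        script_text, count = pattern.subn(
            lambda m: m.group(1) + shlex.quote(value), script_text
        )
        if count == 0:
            raise KeyError(f"no '{name}=' assignment found")
    return script_text
```

For example, read train_scripts/llama_3_8B/multi_node.sh, call fill_script_vars with your model path, the dataset name (presumably the key registered in data/dataset_info.json, such as wildchat_gpt) and an output directory, and write the result to a copy of the script before running it.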

Table 1: Results of different size models and datasets on various benchmarks.

| Model | MMLU (5-shot) | GSM8K (4-shot) | MATH (4-shot) | BBH (3-shot) | IFEval (0-shot) | HumanEval (0-shot) | Avg |
| --- | --- | --- | --- | --- | --- | --- | --- |
| WildChat (GPT-4) Dataset, Size: 69K | |||||||
| LLaMA-3-8B | |||||||
| Padding | 63.99 | 58.76 | 14.72 | 60.71 | 56.01 | 43.29 | 49.58 |
| Random Packing | 63.5(-0.49) | 61.18(+2.42) | 15.58(+0.86) | 61.04(+0.33) | 51.57(-4.44) | 43.9(+0.61) | 49.46(-0.12) |
| Greedy Packing | 64.71(+0.72) | 60.88(+2.12) | 15.6(+0.88) | 62.59(+1.88) | 57.12(+1.11) | 42.68(-0.61) | 50.6(+1.02) |
| LLaMA-3-70B | |||||||
| Padding | 73.47 | 79.3 | 28.8 | 78.33 | 51.76 | 57.32 | 61.50 |
| Random Packing | 75.16(+1.69) | 82.38(+3.08) | 31.46(+2.66) | 79.94(+1.61) | 61.00(+9.24) | 65.85(+8.53) | 65.97(+4.47) |
| Greedy Packing | 74.77(+1.3) | 81.61(+2.31) | 32.84(+4.04) | 80.98(+2.65) | 64.33(+12.57) | 60.98(+3.66) | 65.92(+4.42) |
| TULU Dataset, Size: 326K | |||||||
| LLaMA-3-8B | |||||||
| Padding | 62.26 | 57.32 | 14.6 | 60.14 | 41.77 | 44.24 | 46.72 |
| Random Packing | 63.94(+1.68) | 58.83(+1.51) | 13.94(-0.66) | 61.11(+0.97) | 42.51(+0.74) | 45.61(+1.37) | 47.66(+0.94) |
| Greedy Packing | 62.14(-0.12) | 60.8(+3.48) | 14.74(+0.14) | 61.26(+1.12) | 46.40(+4.63) | 44.51(+0.27) | 48.31(+1.59) |
| LLaMA-3-70B | |||||||
| Padding | 73.2 | 81.18 | 29.02 | 78.06 | 47.32 | 62.95 | 61.96 |
| Random Packing | 73.48(+0.28) | 81.73(+0.55) | 29.42(+0.4) | 78.35(+0.29) | 47.29(-0.03) | 60.37(-2.58) | 61.77(-0.19) |
| Greedy Packing | 73.43(+0.23) | 81.2(+0.02) | 30(+0.98) | 77.54(-0.52) | 53.05(+5.73) | 68.9(+5.95) | 64.02(+2.06) |
| WildChat Dataset, Size: 652K | |||||||
| LLaMA-3-8B | |||||||
| Padding | 64.52 | 61.83 | 14.21 | 61.88 | 51.36 | 40.12 | 48.99 |
| Random Packing | 64.46(-0.06) | 62.77(+0.94) | 14.44(+0.23) | 62(+0.12) | 50.28(-1.08) | 40.24(+0.12) | 49.03(+0.04) |
| Greedy Packing | 65.07(+0.55) | 61.41(-0.42) | 15.08(+0.87) | 62.83(+0.95) | 52.68(+1.32) | 48.17(+8.05) | 50.87(+1.88) |
| LLaMA-3-70B | |||||||
| Padding | 74.82 | 79.26 | 29.44 | 76.31 | 52.19 | 63.7 | 62.62 |
| Random Packing | 75.67(+0.85) | 80.1(+0.84) | 30.37(+0.93) | 76.74(+0.43) | 52.43(+0.24) | 65.26(+1.56) | 63.43(+0.81) |
| Greedy Packing | 75.36(+0.54) | 79.45(+0.19) | 31.28(+1.84) | 77.47(+1.16) | 53.60(+1.41) | 64.02(+0.32) | 63.53(+0.91) |
| Open-source 1M Dataset, Size: 1.2M | |||||||
| LLaMA-3-8B | |||||||
| Padding | 63.7 | 77.08 | 27.96 | 63.45 | 48.39 | 45.22 | 54.3 |
| Random Packing | 63.96(+0.26) | 77.26(+0.18) | 28.4(+0.44) | 64.83(+1.38) | 49.54(+1.15) | 45.73(+0.51) | 54.95(+0.65) |
| Greedy Packing | 63.63(-0.07) | 77.48(+0.4) | 28.26(+0.3) | 63.01(-0.44) | 51.57(+3.18) | 46.34(+1.12) | 55.05(+0.75) |
| LLaMA-3-70B | |||||||
| Padding | 74.97 | 85.23 | 41.82 | 78.65 | 54.33 | 61.74 | 66.12 |
| Random Packing | 76.38(+1.41) | 86.14(+0.91) | 42.73(+0.91) | 79.42(+0.77) | 55.9(+1.57) | 62.98(+1.24) | 67.26(+1.14) |
| Greedy Packing | 75.69(+0.72) | 86.88(+1.65) | 42.92(+1.1) | 79.94(+1.29) | 56.82(+2.49) | 62.98(+1.24) | 67.54(+1.42) |
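Each Avg value in Table 1 agrees with the unweighted mean of the six benchmark scores, and each parenthesized number is the difference from the corresponding Padding row. A small check of that arithmetic on one row (the helper name is ours):

```python
from statistics import mean


def avg_and_delta(scores, baseline):
    """Mean of the six benchmark scores (2 d.p.) and its difference from the baseline mean."""
    avg = round(mean(scores), 2)
    return avg, round(avg - round(mean(baseline), 2), 2)


# LLaMA-3-70B, WildChat (GPT-4) 69K: MMLU, GSM8K, MATH, BBH, IFEval, HumanEval
padding = [73.47, 79.3, 28.8, 78.33, 51.76, 57.32]
greedy_packing = [74.77, 81.61, 32.84, 80.98, 64.33, 60.98]
print(avg_and_delta(greedy_packing, padding))  # (65.92, 4.42)
```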

Table 2: Results of different size models and datasets on the WildBench benchmark.

| Model | WildChat (GPT-4), 69K | TULU, 326K | WildChat, 652K | Open-source 1M, 1.2M |
| --- | --- | --- | --- | --- |
| LLaMA-3-8B | ||||
| Padding | 28.86 | 19.11 | 21.06 | 18.38 |
| Random Packing | 27.89(-0.97) | 20.84(+1.73) | 20.73(-0.33) | 20.42(+2.04) |
| Greedy Packing | 29.81(+0.95) | 20.73(+1.62) | 21.34(+0.28) | 21.9(+3.52) |
| LLaMA-3-70B | ||||
| Padding | 37.0 | 22.84 | 30.69 | 34.95 |
| Random Packing | 39.92(+2.92) | 23.93(+1.09) | 30.76(+0.07) | 35.21(+0.26) |
| Greedy Packing | 41.09(+4.09) | 24.46(+1.62) | 31.26(+0.57) | 35.81(+0.86) |

Table 3: The training time of models across various datasets. Values in parentheses are differences relative to the padding method; ↓ marks a column where lower is better and ↑ a column where higher is better.

| Model | Epoch | Total Steps | Total Training Time (s)↓ | Steps per Second↑ | Samples per Second↑ |
| --- | --- | --- | --- | --- | --- |
| WildChat (GPT-4) Dataset, Size: 69K | |||||
| LLaMA-3-8B | |||||
| Padding | 4 | 1964 | 1188.8449 | 0.165 | 21.13 |
| Random Packing | 4 | 728 | 445.28773(-743.55717) | 0.163(-0.002) | 20.934(-0.196) |
| Greedy Packing | 4 | 492 | 308.33346(-880.51144) | 0.16(-0.005) | 20.48(-0.65) |
| LLaMA-3-70B | |||||
| Padding | 3 | 2943 | 9533.42936 | 0.031 | 1.976 |
| Random Packing | 3 | 1092 | 3749.3016(-5784.12776) | 0.029(-0.002) | 1.865(-0.111) |
| Greedy Packing | 3 | 741 | 2573.34781(-6960.08155) | 0.029(-0.002) | 1.84(-0.136) |
| TULU Dataset, Size: 326K | |||||
| LLaMA-3-8B | |||||
| Padding | 4 | 9183 | 4906.59014 | 0.165 | 21.084 |
| Random Packing | 4 | 1928 | 1175.43583(-3731.15431) | 0.164(-0.001) | 20.977(-0.107) |
| Greedy Packing | 4 | 1956 | 1328.12592(-3578.46422) | 0.147(-0.018) | 18.841(-2.243) |
| LLaMA-3-70B | |||||
| Padding | 3 | 13761 | 40735.40051 | 0.034 | 2.162 |
| Random Packing | 3 | 2889 | 9758.68127(-30976.71924) | 0.03(-0.004) | 1.895(-0.267) |
| Greedy Packing | 3 | 2931 | 10313.89593(-30421.50458) | 0.028(-0.006) | 1.82(-0.342) |
| WildChat Dataset, Size: 652K | |||||
| LLaMA-3-8B | |||||
| Padding | 4 | 18340 | 11738.48881 | 0.156 | 20.183 |
| Random Packing | 4 | 5348 | 3422.97918(-8315.50963) | 0.156 | 20.006(-0.177) |
| Greedy Packing | 4 | 4780 | 3124.28736(-8614.20145) | 0.153(-0.003) | 19.58(-0.603) |
| LLaMA-3-70B | |||||
| Padding | 3 | 27510 | 97893.95669 | 0.034 | 2.261 |
| Random Packing | 3 | 8025 | 28904.78592(-68989.17077) | 0.030(-0.004) | 2.083(-0.178) |
| Greedy Packing | 3 | 7170 | 25124.6234(-72769.33329) | 0.029(-0.005) | 1.826(-0.435) |
| Open-source 1M Dataset, Size: 1.2M | |||||
| LLaMA-3-8B | |||||
| Padding | 4 | 33064 | 19918.48664 | 0.168 | 21.413 |
| Random Packing | 4 | 5400 | 3253.07972(-16665.40692) | 0.166(-0.002) | 21.255(-0.158) |
| Greedy Packing | 4 | 5104 | 3175.09395(-16743.39269) | 0.161(-0.007) | 20.571(-0.842) |
| LLaMA-3-70B | |||||
| Padding | 3 | 49596 | 184709.04470 | 0.031 | 2.306 |
| Random Packing | 3 | 8103 | 29893.65963(-154815.38507) | 0.03(-0.001) | 2.193(-0.113) |
| Greedy Packing | 3 | 7653 | 27426.66515(-157282.37955) | 0.028(-0.003) | 1.786(-0.52) |
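The absolute savings in Table 3 are easier to compare across model sizes as a ratio of padding time to packing time. A minimal sketch (the helper name is ours) using two Greedy Packing rows from the table:

```python
def speedup(padding_seconds: float, packing_seconds: float) -> float:
    """Ratio of padding to packing total training time (higher means packing is faster)."""
    return padding_seconds / packing_seconds


# (padding, greedy packing) total training time from Table 3
table3 = {
    "LLaMA-3-8B, WildChat (GPT-4) 69K": (1188.8449, 308.33346),
    "LLaMA-3-70B, Open-source 1M 1.2M": (184709.04470, 27426.66515),
}
for setting, (pad_s, pack_s) in table3.items():
    print(f"{setting}: {speedup(pad_s, pack_s):.2f}x faster")
```

For these two settings this gives speedups of about 3.86x and 6.73x. Because it is a ratio, the result does not depend on the time unit.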

If you have any issues or questions about this repo, feel free to contact shuhewang@student.unimelb.edu.au