| Feature | AutoMQ | Apache Kafka | Confluent | Apache Pulsar | Redpanda | Warpstream |
|---|---|---|---|---|---|---|
| Apache Kafka Compatibility[1] | Native Kafka | Native Kafka | Native Kafka | Non-Kafka | Kafka Protocol | Kafka Protocol |
| Source Code Availability | Yes | Yes | No | Yes | Yes | No |
| Stateless Broker | Yes | No | No | Yes | No | Yes |
| P99 Latency | Single-digit ms | Single-digit ms | Single-digit ms | Single-digit ms | Single-digit ms | > 400 ms |
| Continuous Self-Balancing | Yes | No | Yes | Yes | Yes | Yes |
| Scale in/out | In seconds | In hours/days | In hours | In hours (scale-in); in seconds (scale-out) | In hours / in seconds (Enterprise only) | In seconds |
| Spot Instance Support | Yes | No | No | No | No | Yes |
| Partition Reassignment | In seconds | In hours/days | In hours | In seconds | In hours / in seconds (Enterprise only) | In seconds |
| Component | Broker | Broker, ZooKeeper (non-KRaft) | Broker, ZooKeeper (non-KRaft) | Broker, ZooKeeper, BookKeeper, Proxy (optional) | Broker | Agent, MetadataServer |
| Durability | Guaranteed by S3/EBS[2] | Guaranteed by ISR | Guaranteed by ISR | Guaranteed by BookKeeper | Guaranteed by Raft | Guaranteed by S3 |
| Inter-AZ Networking Fees | No | Yes | Yes | Yes | Yes | No |

[1] The definition of Apache Kafka compatibility used here comes from this blog.

[2] EBS durability: On Azure, GCP, and Alibaba Cloud, regional block storage (the EBS equivalent) replicates across multiple AZs. On AWS, durability is ensured by double writing to EBS and S3 Express One Zone in different AZs.
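The AWS double-write in footnote [2] boils down to one rule: a write is acknowledged only after both copies, in different AZs, are durable. The Java sketch below illustrates that rule with `CompletableFuture.allOf`. The `Store` interface, `MemoryStore`, and `doubleWrite` are hypothetical names invented for this example; they are not AutoMQ or AWS SDK APIs, and AutoMQ's real WAL write path may be structured differently.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;

public class DoubleWriteSketch {
    // Hypothetical storage target, standing in for an EBS volume in one AZ or an
    // S3 Express One Zone bucket in another AZ. Not an AutoMQ or AWS SDK API.
    interface Store {
        CompletableFuture<Void> append(byte[] record);
    }

    // In-memory store for the sketch; `failing` simulates an unavailable AZ.
    static final class MemoryStore implements Store {
        final List<byte[]> records = new ArrayList<>();
        private final boolean failing;

        MemoryStore(boolean failing) {
            this.failing = failing;
        }

        @Override
        public synchronized CompletableFuture<Void> append(byte[] record) {
            if (failing) {
                return CompletableFuture.failedFuture(new IllegalStateException("AZ unavailable"));
            }
            records.add(record);
            return CompletableFuture.completedFuture(null);
        }
    }

    // Issue both writes and complete only when BOTH succeed. If either copy
    // fails, the returned future fails and the record must not be acknowledged.
    static CompletableFuture<Void> doubleWrite(Store first, Store second, byte[] record) {
        return CompletableFuture.allOf(first.append(record), second.append(record));
    }

    public static void main(String[] args) {
        MemoryStore ebs = new MemoryStore(false);
        MemoryStore s3Express = new MemoryStore(false);

        doubleWrite(ebs, s3Express, "r1".getBytes()).join();
        System.out.println(ebs.records.size() + " " + s3Express.records.size()); // prints "1 1"

        CompletableFuture<Void> degraded = doubleWrite(ebs, new MemoryStore(true), "r2".getBytes());
        System.out.println(degraded.isCompletedExceptionally()); // prints "true"
    }
}
```

In the degraded case the first store still holds `r2` even though the write was not acknowledged; a real system has to reconcile such partial writes (for example by retrying), which this sketch leaves out.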

- Cloud Native: Built on cloud services. Every design decision takes the features and billing items of cloud services into account to deliver a low-latency, scalable, reliable, and cost-effective Kafka service on the cloud.

- High Reliability: Leverages cloud service features to offer an RPO of 0 and an RTO in seconds.

  - AWS: Double writes to EBS and S3 Express One Zone in different AZs.

  - GCP: Uses regional SSD and Cloud Storage to provide AZ-level disaster recovery.

  - Azure: Uses zone-redundant storage and Blob Storage to provide AZ-level disaster recovery.

  - Alibaba Cloud: Uses regional disks and OSS to provide AZ-level disaster recovery.

- Serverless:

- Auto Scaling: Watch key metrics of cluster and scale in/out automatically to match your workload and achieve pay-as-you-go.

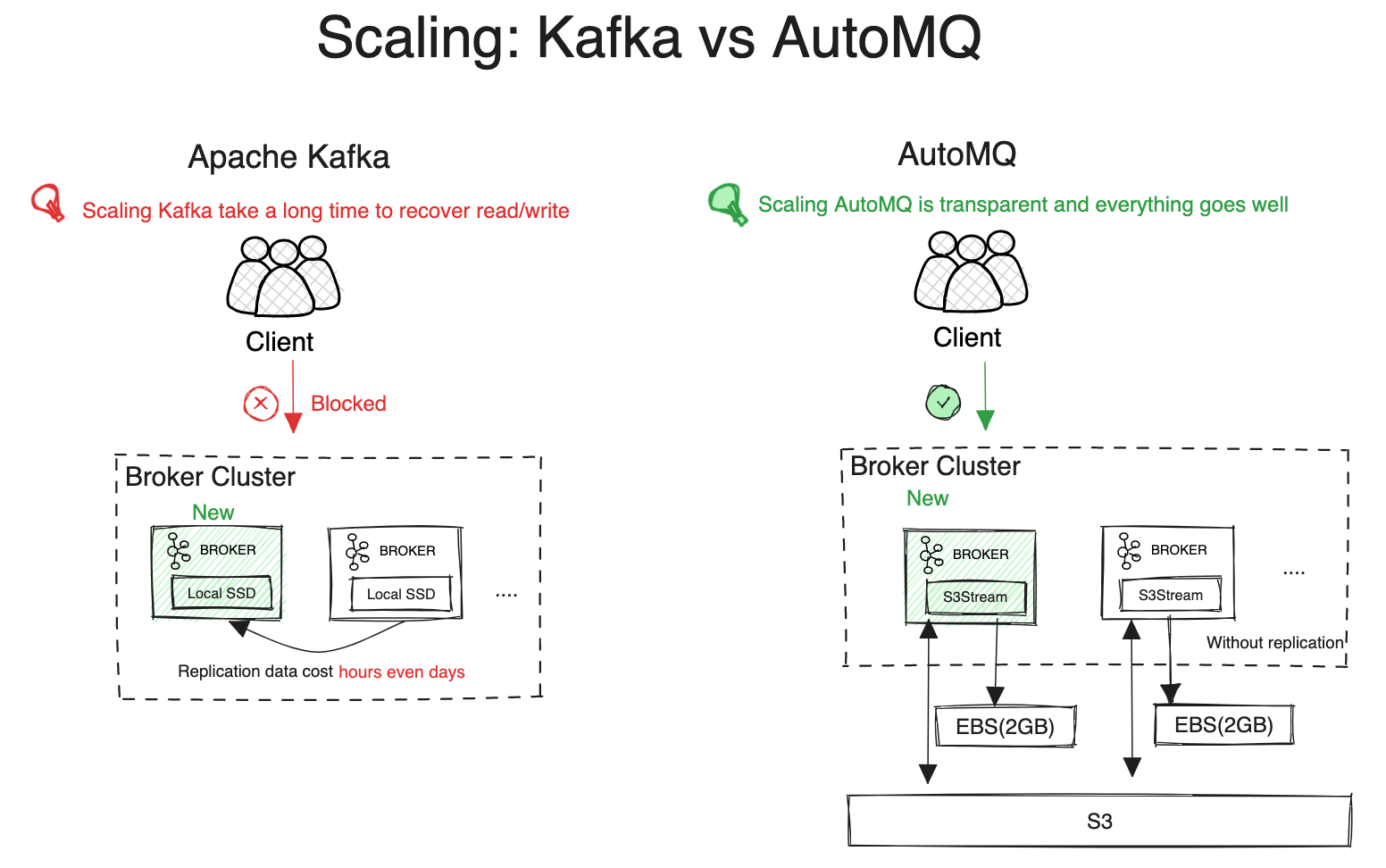

- Scaling in seconds: The computing layer (broker) is stateless and can scale in/out in seconds, which makes AutoMQ truly serverless. Learn more

- Infinite scalable: Use the cloud's object storage as the main storage, never worry about storage capacity.

- Manage-less: Built-in auto-balancer component balance partition and network traffic across brokers automatically. Never worry about partition re-balance. Learn more

- Cost-effective: Using object storage as primary storage, designing around cloud billing items, and making full use of cloud services together make AutoMQ 10x more cost-effective than Apache Kafka. Refer to this report to see how we cut Apache Kafka costs on the cloud by 90%.

- High performance:

  - Low latency: Uses cloud block storage such as AWS EBS as the WAL (Write-Ahead Log) to accelerate writes.

  - High throughput: Uses prefetching, batch processing, and parallelism to achieve high throughput.

  Refer to the AutoMQ Performance White Paper to see how we achieve this.

- A superior alternative to Apache Kafka: 100% compatible with Apache Kafka versions greater than 0.9.x, keeping all of Kafka's strengths while being cheaper and better.
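As a rough illustration of the metric-driven Auto Scaling described above, the Java sketch below derives a target broker count from a single observed metric, ingress throughput. The metric choice, the per-broker capacity, the 75% utilization target, the min/max bounds, and the `desiredBrokers` name are all hypothetical; AutoMQ's actual scaling policy and metrics are not specified in this README. Computing the number is the easy part; the stateless broker design is what lets the cluster act on it in seconds.

```java
public class AutoScaleSketch {
    // Returns how many brokers to run for the observed ingress throughput.
    // All inputs are hypothetical policy knobs, not AutoMQ configuration names.
    static int desiredBrokers(double ingressMBps, double perBrokerMBps,
                              double targetUtilization, int minBrokers, int maxBrokers) {
        if (perBrokerMBps <= 0 || targetUtilization <= 0 || targetUtilization > 1) {
            throw new IllegalArgumentException("invalid capacity or utilization target");
        }
        // Enough brokers that each one stays at or below the target utilization.
        int needed = (int) Math.ceil(ingressMBps / (perBrokerMBps * targetUtilization));
        // Clamp to the bounds: scale out when traffic grows, scale in when it drops.
        return Math.max(minBrokers, Math.min(maxBrokers, needed));
    }

    public static void main(String[] args) {
        // 300 MB/s ingress, 100 MB/s per broker, keep brokers at 75% load.
        System.out.println(desiredBrokers(300, 100, 0.75, 3, 20)); // prints 4
        // Traffic drops to 50 MB/s: scale in, but never below the minimum of 3.
        System.out.println(desiredBrokers(50, 100, 0.75, 3, 20));  // prints 3
    }
}
```

A production policy would typically combine several metrics and smooth them over a time window to avoid flapping between sizes; the sketch omits that.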

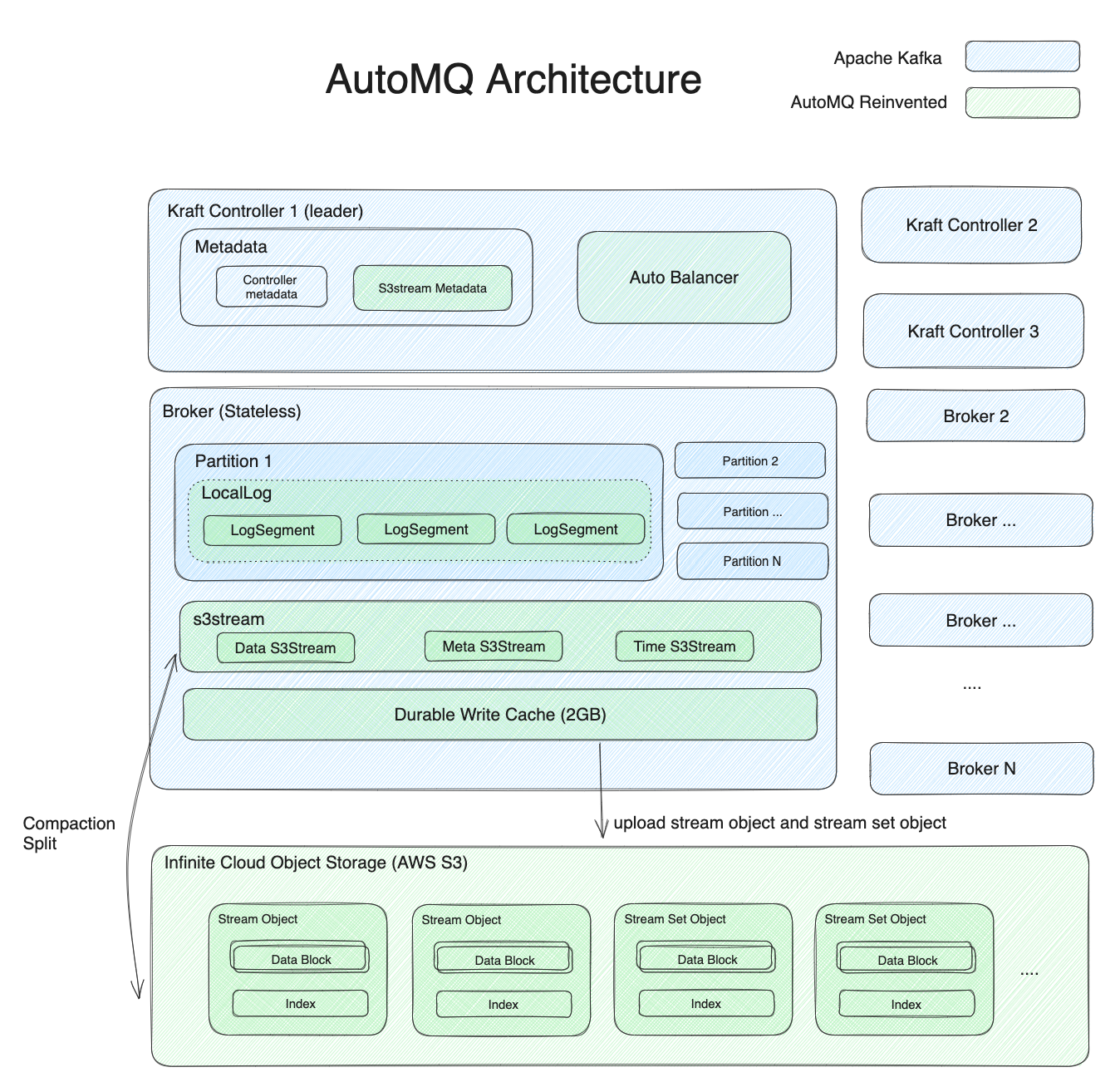

AutoMQ uses Apache Kafka's logSegment as the aspect (in the aspect-oriented programming sense) into which its own features are woven. The architecture includes the following main components:

- S3Stream: A streaming library built on object storage, provided by AutoMQ. It is the core component of AutoMQ and is responsible for reading data from and writing data to object storage. Learn more.

- Stream: An abstraction onto which Apache Kafka's logSegment is mapped. A logSegment's data, index, and other metadata each map to a different type of stream. Learn more

- WAL: AutoMQ uses a small cloud block storage volume, such as AWS EBS, as the WAL (Write-Ahead Log) to accelerate writes. Note that this is not tiered storage, and the AutoMQ broker can be completely decoupled from the WAL. Learn more

- Stream Set Object: Aggregates data from multiple streams into individual segments, significantly reducing object storage API calls and metadata size. Learn more

- Stream Object: Contains data from a single stream. Stream Objects are typically split out when compacting Stream Set Objects, for streams with larger data volumes. Learn more
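To make the Stream Set Object idea concrete, here is a minimal in-memory Java sketch (Java 16+ for records), assuming records buffered from the WAL are grouped by stream ID and flushed together. It packs every stream's bytes into one object plus a per-stream index of stream ID, offset range, byte position, and length, so one object-storage upload replaces one upload per stream. The `IndexEntry` layout, numbering offsets by record count, and every name here are hypothetical and do not reflect AutoMQ's real object format.

```java
import java.io.ByteArrayOutputStream;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class StreamSetObjectSketch {
    // Hypothetical index entry: where one stream's records live inside the object.
    // Offsets here count records, which is an assumption of this sketch.
    record IndexEntry(long streamId, long startOffset, long endOffset, int position, int length) {}

    // Hypothetical Stream Set Object: concatenated data from many streams plus an index.
    record StreamSetObject(byte[] data, List<IndexEntry> index) {
        // Returns the bytes belonging to one stream, or an empty array if absent.
        byte[] read(long streamId) {
            for (IndexEntry e : index) {
                if (e.streamId() == streamId) {
                    return Arrays.copyOfRange(data, e.position(), e.position() + e.length());
                }
            }
            return new byte[0];
        }
    }

    // Packs buffered records from many streams into ONE object, so a single
    // object-storage PUT covers all of them instead of one PUT per stream.
    static StreamSetObject build(Map<Long, List<byte[]>> recordsByStream, Map<Long, Long> nextOffsets) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        List<IndexEntry> index = new ArrayList<>();
        // Iterate in stream-ID order so the index is sorted.
        for (Map.Entry<Long, List<byte[]>> e : new TreeMap<>(recordsByStream).entrySet()) {
            long streamId = e.getKey();
            long start = nextOffsets.getOrDefault(streamId, 0L);
            int position = out.size();
            for (byte[] record : e.getValue()) {
                out.writeBytes(record);
            }
            long end = start + e.getValue().size();
            index.add(new IndexEntry(streamId, start, end, position, out.size() - position));
        }
        return new StreamSetObject(out.toByteArray(), List.copyOf(index));
    }

    public static void main(String[] args) {
        Map<Long, List<byte[]>> batch = Map.of(
                7L, List.of("a1".getBytes(), "a2".getBytes()),
                3L, List.of("b1".getBytes()));
        StreamSetObject obj = build(batch, Map.of(7L, 100L));
        System.out.println(obj.index().size());       // prints 2
        System.out.println(new String(obj.read(7L))); // prints a1a2
    }
}
```

When one stream carries a large share of the data, compaction can move that stream's range into its own Stream Object; this sketch leaves compaction out.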

```shell
curl https://download.automq.com/install.sh | sh
```

This is the easiest way to run AutoMQ. You can try features such as Partition Reassignment in Seconds and Continuous Self-Balancing on your local machine. Learn more

Attention: Local mode mocks object storage locally and is not a production-ready deployment. Use it only for demos and testing.

You can also deploy AutoMQ manually on the cloud using the released tgz files. Supported clouds currently include AWS, Alibaba Cloud (Aliyun), Tencent Cloud, Huawei Cloud, and Baidu Cloud. Learn more

You can join the following groups or channels to discuss or ask questions about AutoMQ:

- Ask questions or report bugs via GitHub Issues

- Discuss AutoMQ or Kafka in the WeChat group

If you've found a problem with AutoMQ, please open a GitHub issue. To contribute to AutoMQ, please see the Code of Conduct and the Contributing Guide. We have a list of good first issues to help you get started, gain experience, and get familiar with our contribution process.

AutoMQ is released under Business Source License 1.1. When contributing to AutoMQ, you can find the relevant license header in each file.
