Shuwei Shi¹, Wenbo Li², Yuechen Zhang², Jingwen He², Biao Gong³, Yinqiang Zheng‡¹

¹The University of Tokyo, ²The Chinese University of Hong Kong, ³Ant Group

‡Corresponding author

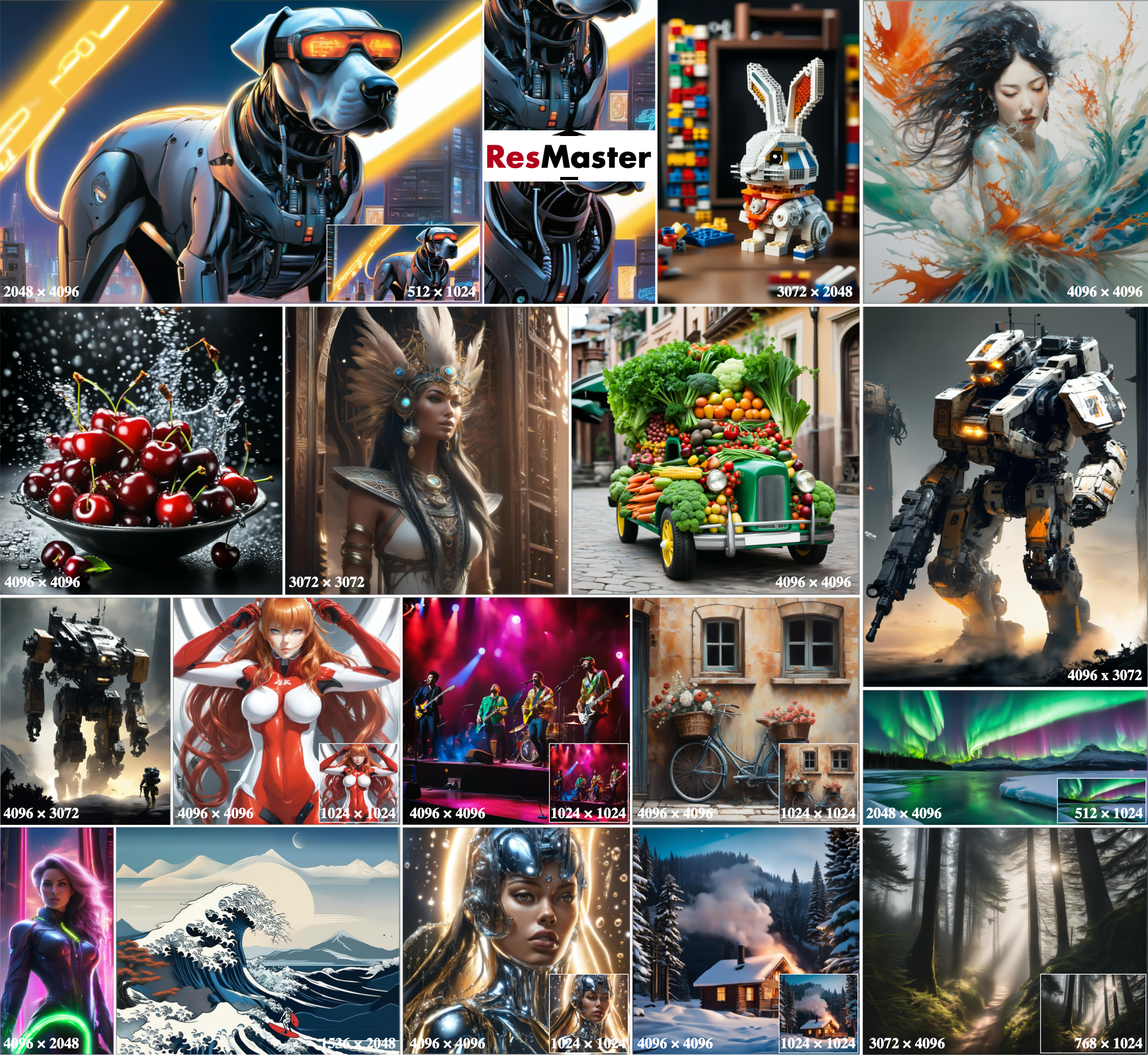

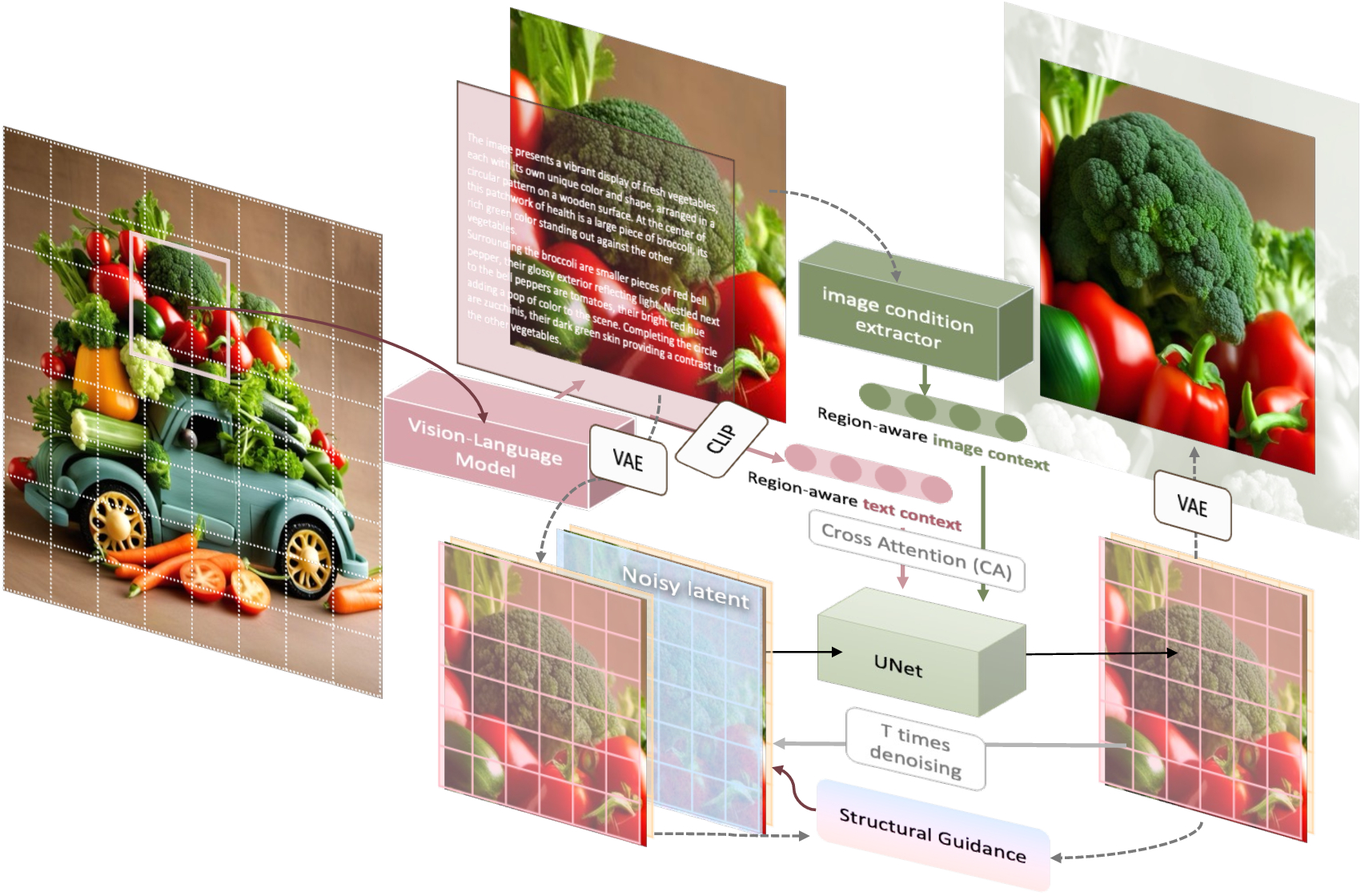

Diffusion models excel at producing high-quality images; however, scaling to higher resolutions, such as 4K, often results in over-smoothed content, structural distortions, and repetitive patterns. To address this, we introduce ResMaster, a novel, training-free method that enables resolution-limited diffusion models to generate high-quality images beyond their resolution limits. Specifically, ResMaster uses a low-resolution reference image created by a pre-trained diffusion model to provide structural and fine-grained guidance for generating high-resolution images patch by patch. To ensure a coherent global structure, ResMaster aligns the low-frequency components of high-resolution patches with the low-resolution reference at each denoising step. For fine-grained guidance, it incorporates tailored image prompts based on the low-resolution reference and enriched textual prompts produced by a vision-language model. This approach can significantly mitigate local pattern distortions and improve detail refinement. Extensive experiments validate that ResMaster sets a new benchmark for high-resolution image generation and demonstrates promising efficiency.

ResMaster employs Structural and Fine-Grained Guidance to ensure structural integrity and enhance detail generation. Specifically, ResMaster swaps in the low-frequency components of the low-resolution image generated at each sampling step to maintain global structural coherence in higher-resolution outputs. Additionally, to mitigate repetitive patterns and increase detail accuracy, we employ localized fine-grained guidance using condensed image prompts and enriched textual descriptions. The image prompts, derived from the generated low-resolution counterparts, contain critical semantic and structural information. Meanwhile, the detailed textual prompts produced by a pre-trained vision-language model (VLM) help generate more complex and accurate patterns.
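The low-frequency swapping idea can be illustrated with a minimal NumPy sketch. It is not taken from the ResMaster code: the function name `low_frequency_swap`, the radial FFT mask, and the `cutoff` value are all assumptions chosen for illustration, and the sketch works on a single 2-D array rather than on diffusion latents inside a sampling loop.

```python
import numpy as np


def low_frequency_swap(high_res, reference, cutoff=0.1):
    """Replace the low-frequency content of `high_res` with that of `reference`.

    Hypothetical helper for illustration only. Both inputs are 2-D arrays of
    the same shape (the reference is assumed to be already upsampled).
    `cutoff` is a radius in cycles per pixel; frequencies at or below it are
    taken from `reference`, the rest from `high_res`.
    """
    if high_res.shape != reference.shape:
        raise ValueError("high_res and reference must have the same shape")

    h, w = high_res.shape
    # Frequency coordinates of each FFT bin, in cycles per pixel.
    fy = np.fft.fftfreq(h)[:, None]
    fx = np.fft.fftfreq(w)[None, :]
    low_pass = np.sqrt(fx**2 + fy**2) <= cutoff

    f_high = np.fft.fft2(high_res)
    f_ref = np.fft.fft2(reference)

    # Low frequencies come from the reference, high frequencies stay as they were.
    mixed = np.where(low_pass, f_ref, f_high)

    # The mask is symmetric in frequency, so the result is real up to rounding.
    return np.fft.ifft2(mixed).real
```

With a small `cutoff`, only the coarse layout of the reference is transferred and the fine detail of the high-resolution input is left untouched; raising `cutoff` pulls the output closer to the reference.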

- 2024.6.25 - 🛳️ This repo is released.

@misc{shi2024resmaster,
  title={ResMaster: Mastering High-Resolution Image Generation via Structural and Fine-Grained Guidance},
  author={Shuwei Shi and Wenbo Li and Yuechen Zhang and Jingwen He and Biao Gong and Yinqiang Zheng},
  year={2024},
  eprint={2406.16476},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}