This repository is the official implementation of "A Comparative Study on Machine Learning Algorithms for Knowledge Discovery."

🚀 Update: The paper has been accepted at the 17th International Conference on Control, Automation, Robotics and Vision (ICARCV 2022).

A. Requirements

B. Dataset

C. Baselines

D. Benchmark

E. Results

F. Citation

Overview: The paper summarizes key research works in symbolic regression and performs a comparative study to understand the strengths and limitations of each method. Finally, we highlight the challenges facing current methods and future research directions for applying machine learning to knowledge discovery.

- Install miniconda to manage experiments' dependencies.

To install requirements:

conda env create -f environment.yml

-

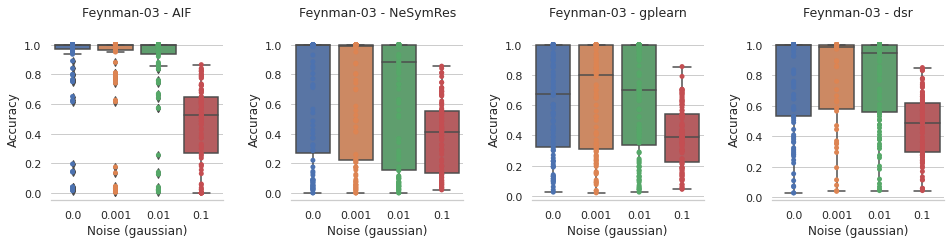

Feynman-03: All equations with up to

3 input variablesfrom the AI-Feynman dataset were sampled. The resulting dataset contained 52 equations. -

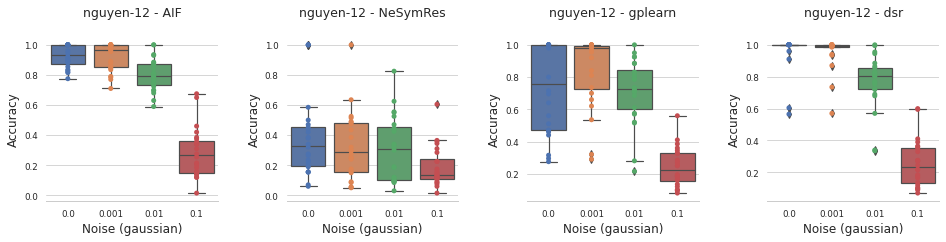

Nguyen-12: The datasets consisted of 12 equations with a maximum of

2 input variables. It is important to highlight that few equations contain terms such asx^6andx^5which were included to test the methods’ ability to understand high-frequency terms.
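To make the dataset description concrete, here is a minimal sketch of how noisy samples for a single equation could be drawn. It is not the repository's data pipeline: the polynomial `target`, the sampling range, the seed, and the noise model (Gaussian noise scaled by the RMS of the clean targets) are all assumptions chosen for illustration.

```python
import random


def target(x: float) -> float:
    """Illustrative polynomial with x^6 and x^5 terms (assumed, not read from the repo)."""
    return x**6 + x**5 + x**4 + x**3 + x**2 + x


def sample_dataset(num_points: int, noise: float, low: float = -1.0,
                   high: float = 1.0, seed: int = 0):
    """Draw num_points inputs uniformly from [low, high] and add Gaussian noise to the targets."""
    if num_points <= 0:
        raise ValueError("num_points must be positive")
    rng = random.Random(seed)
    xs = [rng.uniform(low, high) for _ in range(num_points)]
    ys_clean = [target(x) for x in xs]
    # Assumed noise model: standard deviation = noise * RMS of the clean targets.
    rms = (sum(y * y for y in ys_clean) / num_points) ** 0.5
    ys = [y + rng.gauss(0.0, noise * rms) for y in ys_clean]
    return xs, ys


xs, ys = sample_dataset(num_points=1000, noise=0.1)
```

With `noise=0.0` the function returns the clean targets, which is a convenient sanity check before running any model on the data.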

- Genetic programming (GPL): The Python library gplearn was used to perform genetic programming (GP).

- Deep symbolic regression (DSR): An autoregressive approach that uses reinforcement learning to search the space of symbolic expressions. The open-source implementation of Petersen et al. with default parameters was used for the benchmark tasks.

- AIFeynman (AIF): A heuristic-based search approach that exploits recurring patterns in symbolic formulas describing natural phenomena. A custom wrapper was written around the open-source Python package, AIFeynman, for the evaluation.

- Neural Symbolic Regression that Scales (NeSymRes): A symbolic language modelling approach pretrained on a large distribution of equations. The model pretrained on 100 million equations was used for the benchmark.
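All four baselines search over candidate symbolic expressions and score them against data. The sketch below illustrates only that shared idea; it does not reproduce the internals of gplearn, DSR, AIFeynman, or NeSymRes. The nested-tuple representation, the operator names, and the example candidates are hypothetical.

```python
import math

# Operators available to candidate expressions (an illustrative choice).
BINARY_OPS = {
    "add": lambda a, b: a + b,
    "sub": lambda a, b: a - b,
    "mul": lambda a, b: a * b,
}
UNARY_OPS = {"sin": math.sin, "cos": math.cos}


def evaluate(expr, env):
    """Evaluate a nested-tuple expression for one assignment of variables."""
    if isinstance(expr, str):            # variable name such as "x"
        return env[expr]
    if isinstance(expr, (int, float)):   # numeric constant
        return float(expr)
    op, *args = expr
    values = [evaluate(arg, env) for arg in args]
    if op in BINARY_OPS:
        return BINARY_OPS[op](*values)
    return UNARY_OPS[op](*values)


def mean_squared_error(expr, rows, targets):
    """Score a candidate expression against observed targets."""
    errors = [(evaluate(expr, row) - t) ** 2 for row, t in zip(rows, targets)]
    return sum(errors) / len(errors)


# Toy data generated from x*x + x on a small grid.
rows = [{"x": i / 10} for i in range(-10, 11)]
targets = [r["x"] * r["x"] + r["x"] for r in rows]

exact = ("add", ("mul", "x", "x"), "x")   # x*x + x
wrong = ("mul", "x", 2.0)                 # 2x
best = min([wrong, exact], key=lambda e: mean_squared_error(e, rows, targets))
```

The methods differ mainly in how they propose candidates (evolution, a reinforcement-learned policy, physics-inspired heuristics, or a pretrained sequence model), not in this scoring step.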

The baseline models can be benchmarked using the following command and arguments:

- Models: gpl, dsr, aif, nesymres

- Datasets: feynman, nguyen

make <DATASET-NAME>-<MODEL-NAME> noise=<NOISE-LEVEL> num_points=<NUM-POINTS>

For example, to run the benchmark for the gpl model on the feynman dataset with noise=0.1 and num_points=1000, run:

make feynman-gpl noise=0.1 num_points=1000
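To run several configurations in one go, the make invocations can be generated programmatically. The following is a minimal sketch that only builds the command lines from the dataset and model names listed above; the helper names `make_command` and `sweep` and the example noise levels are hypothetical and not part of the repository.

```python
from itertools import product

DATASETS = ("feynman", "nguyen")
MODELS = ("gpl", "dsr", "aif", "nesymres")


def make_command(dataset: str, model: str, noise: float, num_points: int) -> list:
    """Build the argument list for one benchmark run, e.g. make feynman-gpl noise=0.1 ..."""
    if dataset not in DATASETS:
        raise ValueError(f"unknown dataset: {dataset}")
    if model not in MODELS:
        raise ValueError(f"unknown model: {model}")
    return ["make", f"{dataset}-{model}", f"noise={noise}", f"num_points={num_points}"]


def sweep(noise_levels, num_points: int) -> list:
    """Enumerate every dataset/model/noise combination."""
    return [
        make_command(dataset, model, noise, num_points)
        for dataset, model, noise in product(DATASETS, MODELS, noise_levels)
    ]


# Example noise levels are illustrative only.
commands = sweep(noise_levels=[0.0, 0.1], num_points=1000)
```

Each entry in `commands` can be passed to `subprocess.run(cmd, check=True)` from the repository root to launch the corresponding run.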

Figure 1: Effect of noise on accuracy in Feynman-03 dataset

Figure 2: Effect of noise on accuracy in Nguyen-12 dataset

Other results can be found in the results and discussion section of the paper.

If you find this work useful in your research, please consider citing:

@inproceedings{,
  title={A Comparative Study on Machine Learning Algorithms for Knowledge Discovery},
  author={Siddesh Sambasivam Suseela and Yang Feng and Kezhi Mao},
  booktitle={17th International Conference on Control, Automation, Robotics and Vision (ICARCV 2022)},
  year={2022},
  organization={Nanyang Technological University}
}

For any questions, please contact Siddesh Sambasivam Suseela (siddeshsambasivam.official@gmail.com).