TransferAttack is a PyTorch framework for boosting adversarial transferability in image classification.

Delving into Adversarial Transferability on Image Classification: A Review, Benchmark and Evaluation will be released soon.

We also release a list of papers about transfer-based attacks here.

TransferAttack serves several purposes, such as:

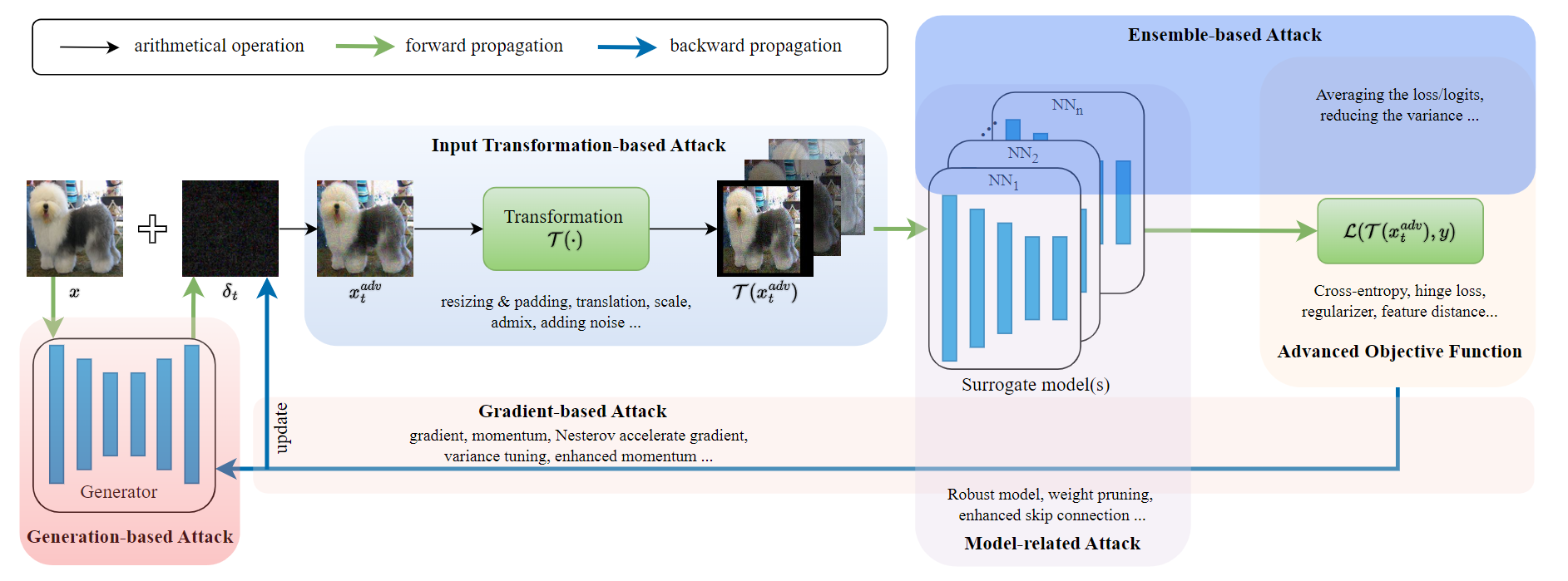

- A benchmark for evaluating new transfer-based attacks: TransferAttack categorizes existing transfer-based attacks into several types and evaluates them fairly under the same setting.

- Evaluate the robustness of deep models: TransferAttack provides a plug-and-play interface to verify the robustness of models, such as CNNs and ViTs.

- A summary of transfer-based attacks: TransferAttack reviews numerous transfer-based attacks, making it easy for practitioners to get the whole picture of transfer-based attacks.

- Python >= 3.6

- PyTorch >= 1.12.1

- Torchvision >= 0.13.1

- timm >= 0.6.12

```bash
pip install -r requirements.txt
```

We randomly sample 1,000 images from the ImageNet validation set, one image per category, such that each image is correctly classified by the adopted models. (For some categories, no single image is correctly classified by all the models; in that case, we select the image that is correctly classified by the majority of models.) Download the data from … into `/path/to/data`. Then you can execute the attack as follows:

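```bash
# Generate adversarial examples with MI-FGSM on a ResNet-18 surrogate
python main.py --input_dir ./path/to/data --output_dir adv_data/mifgsm/resnet18 --attack mifgsm --model=resnet18

# Evaluate the generated adversarial examples
python main.py --input_dir ./path/to/data --output_dir adv_data/mifgsm/resnet18 --eval
```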
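The image-selection rule described above (one image per category, correctly classified by all models or, failing that, by the majority) can be sketched as follows. This is a minimal illustration, not the script used to build the released data: the function name `select_images` and the input format (each candidate carries the labels predicted by every model) are hypothetical, and categories with no majority-correct image are simply skipped here.

```python
from collections import defaultdict


def select_images(candidates, num_models):
    """Pick one image per category following the selection rule above.

    candidates: iterable of (image_id, true_label, predictions), where
        predictions holds one predicted label per model (hypothetical format).
    Returns a dict mapping each category to the chosen image_id.
    """
    # Group candidates by category, scoring each image by how many models
    # classify it correctly.
    by_label = defaultdict(list)
    for image_id, label, preds in candidates:
        if len(preds) != num_models:
            raise ValueError("each candidate needs one prediction per model")
        n_correct = sum(p == label for p in preds)
        by_label[label].append((n_correct, image_id))

    selected = {}
    for label, scored in by_label.items():
        # An image correctly classified by all models has the maximum score;
        # otherwise fall back to the best-scoring image (first one on ties).
        best_correct, best_id = max(scored, key=lambda t: t[0])
        # Keep it only if a strict majority of models classify it correctly.
        if best_correct * 2 > num_models:
            selected[label] = best_id
    return selected
```

For example, with three models, an image predicted correctly by all three wins over one predicted correctly by two, and a category whose best image is correct for only one model is left out.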

| Category | Attack | Main Idea |
|---|---|---|
| Gradient-based | FGSM (Goodfellow et al., 2015) | Add a small perturbation in the direction of the gradient |
| | I-FGSM (Kurakin et al., 2015) | Iterative version of FGSM |
| | MI-FGSM (Dong et al., 2018) | Integrate the momentum term into I-FGSM |
| | NI-FGSM (Lin et al., 2020) | Integrate Nesterov's accelerated gradient into I-FGSM |
| | PI-FGSM (Gao et al., 2020) | Reuse the cut noise and apply a heuristic projection strategy to generate patch-wise noise |
| | VMI-FGSM (Wang et al., 2021) | Variance tuning MI-FGSM |
| | VNI-FGSM (Wang et al., 2021) | Variance tuning NI-FGSM |
| | EMI-FGSM (Wang et al., 2021) | Accumulate the gradients of several data points linearly sampled in the direction of the previous gradient |
| | I-FGS²M (Zhang et al., 2021) | Assign staircase weights to each interval of the gradient |
| | VA-I-FGSM (Zhang et al., 2022) | Adopt a larger step size and auxiliary gradients from other categories |
| | AI-FGTM (Zou et al., 2022) | Adopt Adam to adjust the step size and momentum using the tanh function |
| | RAP (Qin et al., 2022) | Inject the worst-case perturbation when calculating the gradient |
| | GI-FGSM (Wang et al., 2022) | Use global momentum initialization to better stabilize the update direction |
| | PC-I-FGSM (Wan et al., 2023) | Gradient prediction-correction on MI-FGSM |
| | IE-FGSM (Peng et al., 2023) | Integrate an anticipatory data point to stabilize the update direction |
| | DTA (Yang et al., 2023) | Calculate the gradient on several examples using a small step size |
| | GRA (Zhu et al., 2023) | Correct the gradient using the average gradient of several data points sampled in the neighborhood and adjust the update gradient with a decay indicator |
| | PGN (Ge et al., 2023) | Penalize the gradient norm on the original loss function |
| | SMI-FGRM (Han et al., 2023) | Substitute the sign function with data rescaling and use the depth-first sampling technique to stabilize the update direction |
| | MIG (Ma et al., 2023) | Utilize integrated gradients to steer the generation of adversarial perturbations |
| Input transformation-based | DIM (Xie et al., 2019) | Randomly resize and pad the input sample |
| | TIM (Dong et al., 2019) | Adopt a Gaussian kernel to smooth the gradient before updating the perturbation |
| | SIM (Lin et al., 2020) | Calculate the average gradient of several scaled images |
| | ATTA (Wu et al., 2021) | Train an adversarial transformation network to perform the input transformation |
| | DEM (Zou et al., 2020) | Calculate the average gradient of several DIM-transformed images |
| | Admix (Wang et al., 2021) | Mix up the images from other categories |
| | SSM (Long et al., 2022) | Randomly scale images and add noise in the frequency domain |
| | AITL (Yuan et al., 2022) | Select the most effective combination of image transformations specific to the input image |
| | MaskBlock (Fan et al., 2022) | Calculate the average gradients of multiple randomly block-level masked images |
| | SIA (Wang et al., 2023) | Split the image into blocks and apply various transformations to each block |
| | STM (Ge et al., 2023) | Transform the image using a style transfer network |
| | LPM (Wei et al., 2023) | Mask the image with learnable patch-wise masks |
| | BSR (Wang et al., 2023) | Randomly shuffle and rotate the image blocks |
| | USMM (Wang et al., 2023) | Apply a uniform scale and a mix mask from an image of a different category to the input image |
| | DeCowA (Lin et al., 2024) | Augment input examples via elastic deformation to obtain rich local details of the augmented inputs |
| | L2T (Zhu et al., 2024) | Optimize the input-transformation trajectory along the adversarial iteration |
| Advanced objective | TAP (Zhou et al., 2018) | Maximize the difference of feature maps between the benign sample and the adversarial example and smooth the perturbation |
| | ILA (Huang et al., 2019) | Enlarge the similarity of feature difference between the original adversarial example and the benign sample |
| | ATA (Wu et al., 2020) | Add a regularizer on the difference between attention maps of the benign sample and the adversarial example |
| | YAILA (Li et al., 2020) | Establish a linear map between intermediate-level discrepancies and classification loss |
| | FIA (Wang et al., 2021) | Minimize a weighted feature map in the intermediate layer |
| | TRAP (Wang et al., 2021) | Utilize affine transformations and a reference feature map |
| | NAA (Zhang et al., 2022) | Compute the feature importance of each neuron with decomposition on integral |
| | RPA (Zhang et al., 2022) | Calculate the weight matrix in FIA on randomly patch-wise masked images |
| | TAIG (Huang et al., 2022) | Adopt the integrated gradient to update the perturbation |
| | FMAA (He et al., 2022) | Utilize momentum to calculate the weight matrix in FIA |
| | Fuzziness_Tuned (Yang et al., 2023) | Fuzzify the logits vector using the confidence scaling mechanism and the temperature scaling mechanism |
| | ILPD (Li et al., 2023) | Decay the intermediate-level perturbation from the benign features by mixing the features of benign samples and adversarial examples |
| | IR (Wang et al., 2021) | Introduce the interaction regularizer into the objective function to minimize the interaction for better transferability |
| | DANAA (Jin et al., 2023) | Utilize an adversarial non-linear path to compute the feature importance of each neuron by decomposing the integral |
| Model-related | Ghost (Li et al., 2020) | Densely apply dropout and random scaling on the skip connection to generate several ghost networks to average the gradient |
| | SGM (Wu et al., 2021) | Utilize more gradients from the skip connections in the residual blocks |
| | IAA (Zhu et al., 2022) | Replace ReLU with Softplus and decrease the weight of the residual module |
| | DSM (Yang et al., 2022) | Train surrogate models in a knowledge distillation manner and adopt CutMix on the input |
| | MTA (Qin et al., 2023) | Train a meta-surrogate model (MSM) whose adversarial examples can maximize the loss on a single or a set of pre-trained surrogate models |
| | MUP (Yang et al., 2023) | Mask unimportant parameters of surrogate models |
| | BPA (Wang et al., 2023) | Recover the truncated gradient of non-linear layers |
| | DHF (Wang et al., 2023) | Mix up the features of current examples and benign samples and randomly replace the features with their means |
| | PNA-PatchOut (Wei et al., 2021) | Ignore the gradient of attention and randomly drop patches among the perturbation |
| | SAPR (Zhou et al., 2022) | Randomly permute input tokens at each attention layer |
| | TGR (Zhang et al., 2023) | Scale the gradient and mask the maximum or minimum gradient magnitude |
| | SETR (Naseer et al., 2022) | Ensemble and refine classifiers after each transformer block |
| | AGS (Wang et al., 2024) | Train surrogate models with adversary-centric contrastive learning and adversarial invariant learning |
| Ensemble-based | Ens (Liu et al., 2017) | Generate the adversarial examples using multiple models |
| | SVRE (Xiong et al., 2020) | Use the stochastic variance reduced gradient to update the adversarial example |
| | LGV (Gubri et al., 2022) | Ensemble multiple weight sets from a few additional training epochs with a constant and high learning rate |
| | MBA (Li et al., 2023) | Maximize the average prediction loss on several models obtained by a single run of fine-tuning the surrogate model using Bayes optimization |
| | CWA (Chen et al., 2023) | Define the common weakness of an ensemble of models as the solution that is at the flat landscape and close to the models' local optima |
| | AdaEA (Chen et al., 2023) | Adjust the weight of each surrogate model in the ensemble attack using an adjustment strategy and reduce conflicts between surrogate models by reducing the disparity of their gradients |
| Generation-based | LTP (Nakka et al., 2021) | Introduce a loss function based on mid-level features to learn an effective, transferable perturbation generator |
| | ADA (Kim et al., 2022) | Utilize a generator to stochastically perturb shared salient features across models to avoid poor local optima and explore the search space thoroughly |
| | CDTP (Naseer et al., 2019) | Train a generative model on datasets from different domains to learn domain-invariant perturbations |
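To make the gradient-based family in the table above concrete, here is a minimal NumPy sketch of the MI-FGSM update: accumulate a momentum of L1-normalized gradients, step along its sign, and project back into the L∞ ball. It is not TransferAttack's implementation. The toy linear softmax classifier standing in for a PyTorch surrogate, the helper names, and the defaults `eps=16/255`, `steps=10`, `decay=1.0` are assumptions chosen for illustration.

```python
import numpy as np


def softmax(z):
    z = z - z.max()  # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()


def ce_grad_linear(x, y, W, b):
    """Gradient of the cross-entropy loss w.r.t. the input x for a toy
    linear classifier logits = W @ x + b (hypothetical stand-in surrogate)."""
    p = softmax(W @ x + b)
    p[y] -= 1.0       # dL/dlogits = softmax(z) - onehot(y)
    return W.T @ p    # chain rule through the linear layer


def mi_fgsm(x, y, W, b, eps=16 / 255, steps=10, decay=1.0):
    """Untargeted MI-FGSM on the toy model; returns the adversarial input."""
    alpha = eps / steps          # per-step size
    delta = np.zeros_like(x)     # perturbation
    g = np.zeros_like(x)         # momentum buffer
    for _ in range(steps):
        grad = ce_grad_linear(x + delta, y, W, b)
        # Momentum accumulation with an L1-normalized gradient
        g = decay * g + grad / (np.abs(grad).sum() + 1e-12)
        # Ascend the loss along the sign of the accumulated gradient
        delta = delta + alpha * np.sign(g)
        # Project back into the L_inf ball and the valid pixel range [0, 1]
        delta = np.clip(delta, -eps, eps)
        delta = np.clip(x + delta, 0.0, 1.0) - x
    return x + delta
```

With a real surrogate, `ce_grad_linear` would be replaced by the gradient of the model's loss obtained through autograd; setting `decay=0.0` reduces the loop to plain I-FGSM.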

| Category | Attack | Main Idea |
|---|---|---|
| Input transformation-based | ODI (Byun et al., 2022) | Diverse inputs based on 3D objects |
| | SU (Wei et al., 2023) | Optimize adversarial perturbation on the original and cropped images by minimizing prediction error and maximizing their feature similarity |
| | IDAA (Liu et al., 2024) | Design local mixup to randomly mix a group of transformed adversarial images, strengthening the input diversity |
| Advanced objective | PoTrip (Li et al., 2020) | Introduce the Poincaré distance as the similarity metric to make the magnitude of the gradient self-adaptive |
| | Logit (Zhao et al., 2021) | Replace the cross-entropy loss with the logit loss |
| | CFM (Byun et al., 2023) | Stochastically mix feature maps of adversarial examples with clean feature maps of benign images |
| | Logit-Margin (Weng et al., 2023) | Downscale the logits using a temperature factor and an adaptive margin |
| | FFT (Zeng et al., 2023) | Fine-tune a crafted adversarial example in the feature space |
| Generation-based | TTP (Naseer et al., 2021) | Train a generative model to generate adversarial examples, of which both the global distribution and the local neighborhood structure in the latent feature space are matched with the target class |
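The Logit row above replaces cross-entropy with the logit loss for targeted attacks. One motivation for this choice is that the targeted cross-entropy gradient shrinks as the target probability approaches 1, whereas the gradient of the target logit does not. The sketch below illustrates that effect on a toy linear classifier; the model and the function names are assumptions for illustration, not TransferAttack code.

```python
import numpy as np


def softmax(z):
    z = z - z.max()  # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()


def targeted_ce_grad(x, t, W, b):
    """Gradient w.r.t. x of log p_t (the negative targeted cross-entropy)
    for toy logits = W @ x + b: the direction that raises the target class."""
    p = softmax(W @ x + b)
    onehot = np.zeros_like(p)
    onehot[t] = 1.0
    return W.T @ (onehot - p)


def logit_loss_grad(x, t, W, b):
    """Gradient w.r.t. x of the logit loss z_t, i.e. the target-class logit.
    For a linear model this is the constant row W[t]."""
    return W[t].copy()
```

As the input moves toward a confident target prediction, the norm of `targeted_ce_grad` collapses toward zero because `onehot - p` vanishes, while `logit_loss_grad` keeps its magnitude, so later iterations still receive a useful update signal.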

To thoroughly evaluate existing attacks, we include various popular models, covering both CNNs (ResNet-18, ResNet-101, ResNeXt-50, DenseNet-121) and ViTs (ViT, PiT, Visformer, Swin). We also adopt four defense methods: AT, HGD, RS and NRP. The defense models can be downloaded from Google Drive or Huggingface.

Note: We adopt

| Category | Attack | ResNet-18 | ResNet-101 | ResNeXt-50 | DenseNet-121 | ViT | PiT | Visformer | Swin | AT | HGD | RS | NRP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Gradient-based | FGSM | 96.1 | 33.5 | 36.8 | 60.2 | 15.0 | 17.8 | 26.4 | 32.7 | 33.4 | 25.9 | 22.9 | 29.7 |
| | I-FGSM | 100.0 | 14.9 | 18.6 | 42.9 | 4.9 | 10.0 | 14.6 | 21.7 | 30.3 | 8.8 | 20.0 | 13.7 |
| | MI-FGSM | 100.0 | 42.9 | 46.3 | 73.9 | 17.2 | 23.8 | 33.7 | 42.5 | 33.1 | 32.0 | 22.4 | 26.5 |
| | NI-FGSM | 100.0 | 43.8 | 47.2 | 77.0 | 16.6 | 21.5 | 33.3 | 43.2 | 33.0 | 33.2 | 22.5 | 27.3 |
| | PI-FGSM | 100.0 | 37.9 | 46.3 | 72.7 | 14.4 | 17.7 | 27.2 | 37.9 | 37.2 | 37.6 | 31.9 | 36.1 |
| | VMI-FGSM | 100.0 | 62.0 | 64.9 | 88.9 | 28.2 | 39.4 | 53.2 | 58.6 | 36.0 | 53.8 | 26.1 | 40.8 |
| | VNI-FGSM | 100.0 | 62.2 | 64.8 | 89.8 | 26.3 | 35.9 | 52.5 | 56.3 | 34.6 | 50.2 | 25.0 | 38.2 |
| | EMI-FGSM | 100.0 | 57.0 | 59.0 | 89.0 | 21.2 | 28.9 | 44.6 | 52.2 | 35.0 | 43.2 | 24.9 | 32.6 |
| | I-FGS²M | 100.0 | 22.7 | 27.0 | 54.5 | 9.0 | 12.1 | 20.1 | 28.9 | 30.8 | 16.2 | 20.2 | 16.6 |
| | VA-I-FGSM | 100.0 | 17.7 | 22.4 | 46.9 | 7.2 | 11.2 | 15.0 | 22.7 | 30.3 | 12.7 | 20.1 | 19.2 |
| | AI-FGTM | 100.0 | 36.2 | 39.6 | 69.5 | 13.9 | 20.1 | 29.7 | 37.3 | 32.0 | 26.9 | 21.7 | 23.5 |
| | RAP | 100.0 | 51.8 | 58.5 | 87.5 | 21.1 | 26.9 | 43.1 | 49.3 | 32.4 | 39.7 | 22.8 | 31.0 |
| | GI-FGSM | 100.0 | 48.0 | 53.6 | 81.7 | 17.8 | 24.9 | 38.3 | 45.4 | 34.0 | 36.9 | 23.7 | 31.2 |
| | PC-I-FGSM | 100.0 | 42.8 | 46.8 | 74.5 | 17.1 | 23.6 | 33.4 | 42.8 | 32.9 | 32.1 | 22.9 | 29.3 |
| | DTA | 100.0 | 50.6 | 54.8 | 82.5 | 18.1 | 26.0 | 40.2 | 44.8 | 33.0 | 40.6 | 23.1 | 29.2 |
| | GRA | 100.0 | 67.9 | 70.0 | 93.9 | 30.3 | 39.3 | 54.5 | 64.2 | 40.8 | 61.0 | 35.1 | 54.8 |
| | PGN | 100.0 | 69.3 | 73.3 | 94.7 | 32.7 | 42.9 | 56.0 | 66.5 | 40.5 | 63.3 | 34.9 | 56.9 |
| | IE-FGSM | 100.0 | 51.1 | 54.5 | 83.9 | 19.0 | 28.4 | 40.1 | 47.2 | 33.2 | 39.9 | 22.8 | 28.9 |
| | SMI-FGRM | 99.8 | 40.2 | 44.5 | 77.1 | 14.0 | 21.0 | 30.7 | 43.9 | 36.6 | 31.6 | 26.0 | 30.5 |
| | MIG | 100.0 | 54.3 | 58.0 | 87.2 | 22.9 | 31.3 | 44.3 | 53.5 | 37.5 | 47.7 | 26.5 | 39.8 |
| Input transformation-based | DIM | 100.0 | 62.7 | 67.3 | 90.3 | 29.5 | 37.1 | 53.7 | 58.7 | 36.5 | 58.5 | 24.9 | 36.0 |
| | TIM | 100.0 | 37.2 | 45.0 | 71.8 | 15.5 | 19.6 | 29.3 | 39.1 | 37.4 | 35.2 | 32.5 | 37.4 |
| | SIM | 100.0 | 59.9 | 63.1 | 89.9 | 24.8 | 34.1 | 51.0 | 53.9 | 36.1 | 52.0 | 25.1 | 38.2 |
| | ATTA | 100.0 | 46.6 | 50.3 | 79.4 | 17.5 | 26.3 | 37.3 | 45.3 | 33.8 | 38.1 | 22.8 | 30.4 |
| | DEM | 100.0 | 76.4 | 78.8 | 97.3 | 39.9 | 45.6 | 66.0 | 67.0 | 38.6 | 78.6 | 30.5 | 47.3 |
| | Admix | 100.0 | 68.2 | 71.8 | 95.1 | 30.0 | 38.6 | 56.1 | 60.5 | 37.6 | 60.1 | 27.6 | 44.2 |
| | SSM | 99.9 | 70.5 | 73.8 | 93.5 | 30.4 | 39.4 | 54.5 | 63.3 | 37.2 | 62.1 | 29.2 | 50.9 |
| | AITL | 99.5 | 78.9 | 82.4 | 96.3 | 46.4 | 51.4 | 68.1 | 71.1 | 41.8 | 79.7 | 32.9 | 53.1 |
| | MaskBlock | 100.0 | 49.2 | 51.4 | 78.6 | 18.0 | 25.1 | 38.1 | 45.6 | 33.9 | 36.8 | 22.9 | 30.5 |
| | SIA | 100.0 | 87.5 | 90.5 | 99.1 | 43.5 | 57.8 | 77.5 | 78.0 | 39.2 | 81.4 | 28.8 | 51.9 |
| | STM | 100.0 | 75.4 | 77.2 | 96.1 | 35.7 | 45.2 | 61.5 | 68.1 | 40.9 | 70.7 | 32.5 | 58.8 |
| | LPM | 95.9 | 11.1 | 12.2 | 28.9 | 4.6 | 6.8 | 11.3 | 17.2 | 29.6 | 5.5 | 19.2 | 18.7 |
| | BSR | 100.0 | 85.4 | 87.9 | 99.1 | 42.9 | 56.9 | 74.6 | 77.0 | 38.6 | 80.1 | 27.3 | 48.1 |
| | USMM | 100.0 | 74.0 | 78.1 | 96.4 | 33.7 | 45.3 | 62.8 | 64.8 | 40.0 | 66.1 | 29.4 | 50.8 |
| | DeCowA | 100.0 | 84.8 | 87.7 | 98.6 | 53.6 | 64.0 | 79.5 | 79.7 | 43.6 | 85.7 | 35.2 | 56.0 |
| | L2T | 100.0 | 88.4 | 89.9 | 98.8 | 50.7 | 64.2 | 79.6 | 79.7 | 43.0 | 86.7 | 32.9 | 60.6 |
| Advanced objective | TAP | 100.0 | 38.5 | 42.4 | 72.0 | 14.3 | 17.9 | 28.5 | 34.2 | 31.6 | 28.9 | 20.8 | 25.9 |
| | ATA | 100.0 | 16.4 | 19.6 | 41.8 | 5.9 | 8.9 | 14.4 | 21.4 | 30.4 | 10.0 | 20.5 | 15.7 |
| | ILA | 100.0 | 45.6 | 51.9 | 77.8 | 15.2 | 21.6 | 35.3 | 44.4 | 32.0 | 31.5 | 20.1 | 22.9 |
| | YAILA | 51.5 | 26.2 | 28.5 | 49.0 | 6.7 | 11.4 | 16.5 | 25.7 | 29.3 | 13.4 | 18.8 | 14.7 |
| | FIA | 99.5 | 31.0 | 36.4 | 65.3 | 10.2 | 16.3 | 24.4 | 35.3 | 31.4 | 18.9 | 21.1 | 19.9 |
| | TRAP | 96.9 | 63.2 | 66.7 | 85.1 | 23.6 | 33.3 | 52.8 | 56.5 | 33.0 | 56.8 | 20.6 | 26.2 |
| | NAA | 99.5 | 56.5 | 58.9 | 80.8 | 23.9 | 33.9 | 46.8 | 54.5 | 34.8 | 44.2 | 23.9 | 36.8 |
| | RPA | 100.0 | 62.5 | 68.7 | 91.6 | 23.7 | 34.2 | 49.6 | 57.0 | 35.8 | 56.3 | 26.7 | 39.1 |
| | TAIG | 100.0 | 26.0 | 29.1 | 62.0 | 8.4 | 14.1 | 21.8 | 32.4 | 32.3 | 18.3 | 20.9 | 18.2 |
| | FMAA | 100.0 | 39.5 | 44.6 | 80.3 | 11.1 | 20.1 | 29.4 | 41.2 | 32.4 | 25.9 | 21.3 | 22.3 |
| | Fuzziness_Tuned | 100.0 | 39.9 | 46.5 | 75.3 | 15.6 | 21.2 | 31.5 | 38.9 | 33.1 | 29.9 | 27.6 | 22.8 |
| | ILPD | 70.6 | 68.0 | 68.0 | 72.0 | 31.8 | 46.1 | 52.6 | 55.9 | 33.8 | 50.7 | 24.0 | 50.0 |
| | IR | 100.0 | 42.0 | 45.3 | 74.0 | 16.7 | 23.4 | 33.4 | 40.9 | 40.8 | 32.2 | 28.0 | 22.8 |
| | DANAA | 100.0 | 59.6 | 63.8 | 90.4 | 17.3 | 26.4 | 44.7 | 49.8 | 34.8 | 44.9 | 23.4 | 32.4 |
| Model-related | Ghost | 64.4 | 93.9 | 63.1 | 66.9 | 19.1 | 29.7 | 39.5 | 42.3 | 31.2 | 36.1 | 21.2 | 54.7 |
| | SGM | 100.0 | 48.4 | 50.9 | 78.5 | 20.1 | 28.7 | 39.7 | 48.3 | 34.9 | 37.5 | 24.2 | 30.9 |
| | IAA | 100.0 | 44.2 | 50.6 | 85.1 | 12.8 | 19.6 | 32.8 | 40.4 | 33.3 | 29.4 | 22.0 | 26.0 |
| | DSM | 98.9 | 60.4 | 66.3 | 91.9 | 23.8 | 33.8 | 49.3 | 56.2 | 34.7 | 48.7 | 24.3 | 34.1 |
| | MTA | 82.4 | 44.2 | 46.8 | 74.9 | 12.6 | 17.9 | 31.7 | 41.0 | 30.4 | 34.5 | 19.1 | 19.2 |
| | MUP | 100.0 | 50.7 | 51.0 | 81.2 | 18.5 | 26.3 | 37.4 | 43.3 | 33.8 | 37.1 | 22.7 | 29.6 |
| | BPA | 100.0 | 60.1 | 65.6 | 89.6 | 24.2 | 34.2 | 51.2 | 58.2 | 35.2 | 50.6 | 26.0 | 37.4 |
| | DHF | 100.0 | 70.4 | 72.1 | 92.3 | 31.5 | 43.4 | 59.8 | 61.9 | 35.9 | 59.8 | 25.5 | 40.2 |
| | PNA-PatchOut | 47.5 | 34.3 | 36.5 | 45.8 | 81.3 | 39.1 | 40.9 | 53.0 | 31.7 | 29.0 | 22.5 | 27.1 |
| | SAPR | 66.4 | 50.3 | 53.2 | 65.6 | 96.7 | 57.5 | 60.4 | 75.4 | 35.4 | 41.8 | 24.8 | 31.9 |
| | TGR | 70.8 | 48.1 | 52.6 | 68.2 | 98.3 | 56.0 | 61.8 | 73.4 | 36.6 | 43.5 | 28.0 | 36.9 |
| | SETR | 72.6 | 36.6 | 43.4 | 64.5 | 54.3 | 33.6 | 43.5 | 68.8 | 36.5 | 31.6 | 25.5 | 50.7 |
| | AGS | 86.1 | 55.8 | 60.3 | 81.6 | 29.0 | 22.0 | 46.7 | 46.1 | 37.8 | 62.2 | 27.4 | 39.4 |
| Ensemble-based | ENS | 100.0 | 91.7 | 92.5 | 100.0 | 38.7 | 53.0 | 66.6 | 66.4 | 33.5 | 67.8 | 24.7 | 56.1 |
| | SVRE | 100.0 | 97.7 | 98.0 | 100.0 | 40.6 | 54.4 | 69.9 | 69.5 | 33.8 | 74.9 | 24.1 | 59.7 |
| | LGV | 97.7 | 69.5 | 69.4 | 93.6 | 23.1 | 29.2 | 43.7 | 51.5 | 34.5 | 52.9 | 24.5 | 37.3 |
| | MBA | 100.0 | 96.0 | 95.2 | 99.8 | 41.9 | 51.8 | 75.1 | 76.8 | 39.5 | 86.1 | 28.7 | 52.1 |
| | CWA | 99.7 | 98.3 | 99.1 | 100.0 | 33.9 | 47.7 | 66.4 | 65.0 | 35.8 | 69.4 | 24.9 | 68.9 |
| | AdaEA | 100.0 | 91.9 | 92.7 | 100.0 | 39.4 | 52.4 | 67.3 | 67.0 | 33.9 | 69.6 | 24.3 | 58.0 |
| Generation-based | LTP | 99.1 | 98.7 | 98.7 | 99.5 | 45.1 | 69.4 | 92.1 | 90.2 | 31.7 | 96.5 | 21.6 | 29.7 |
| | ADA | 69.9 | 47.5 | 45.2 | 63.6 | 8.5 | 11.2 | 31.7 | 29.4 | 29.4 | 37.0 | 20.6 | 16.3 |
| | CDTP | 72.8 | 29.9 | 39.8 | 64.6 | 10.5 | 18.7 | 37.4 | 35.7 | 32.6 | 34.8 | 20.7 | 48.7 |
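Each cell above holds one number per attack and target model. Assuming these numbers are attack success rates in percent (an untargeted attack succeeds when the target model misclassifies the adversarial example; a targeted attack succeeds when the model predicts the chosen target label), the metric can be sketched as below. The function `attack_success_rate` and its inputs are hypothetical; this is not the code behind `main.py --eval`.

```python
from typing import Optional, Sequence


def attack_success_rate(preds: Sequence[int], labels: Sequence[int],
                        targets: Optional[Sequence[int]] = None) -> float:
    """Percentage of adversarial examples that fool a model (assumed metric).

    Untargeted (targets is None): success when the prediction differs from
    the true label. Targeted: success when the prediction equals the target.
    """
    if len(preds) != len(labels):
        raise ValueError("preds and labels must have the same length")
    if len(preds) == 0:
        raise ValueError("need at least one example")
    if targets is None:
        hits = sum(p != y for p, y in zip(preds, labels))
    else:
        if len(targets) != len(preds):
            raise ValueError("targets and preds must have the same length")
        hits = sum(p == t for p, t in zip(preds, targets))
    return 100.0 * hits / len(preds)
```

For instance, `attack_success_rate([1, 2, 3, 4], [1, 0, 0, 4])` counts two misclassified examples out of four.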

Note: We adopt labels.csv.

| Category | Attack | ResNet-18 | ResNet-101 | ResNeXt-50 | DenseNet-121 | ViT | PiT | Visformer | Swin | AT | HGD | RS | NRP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Input transformation-based | ODI | 98.9 | 38.6 | 45.5 | 67.0 | 9.4 | 13.9 | 29.5 | 19.9 | 0.1 | 41.4 | 0.0 | 1.0 |
| | SU | 99.2 | 7.2 | 8.0 | 19.7 | 0.1 | 0.6 | 2.1 | 1.8 | 0.1 | 2.1 | 0.0 | 0.2 |
| | IDAA | 87.1 | 2.6 | 3.0 | 13.0 | 1.3 | 1.8 | 2.1 | 3.3 | 0.4 | 1.5 | 0.0 | 0.1 |
| Advanced objective | PoTrip | 100.0 | 3.2 | 5.1 | 15.7 | 0.1 | 0.3 | 1.3 | 1.1 | 0.0 | 3.0 | 0.0 | 0.2 |
| | Logit | 99.0 | 13.5 | 18.5 | 38.5 | 1.9 | 2.9 | 8.3 | 3.8 | 0.1 | 14.4 | 0.0 | 0.3 |
| | CFM | 98.3 | 39.6 | 44.8 | 66.1 | 9.6 | 11.4 | 26.6 | 18.9 | 0.2 | 37.6 | 0.0 | 1.6 |
| | Logit-Margin | 100.0 | 13.6 | 19.1 | 42.8 | 1.8 | 3.3 | 8.4 | 4.4 | 0.0 | 14.0 | 0.0 | 0.2 |
| | FFT | 99.8 | 17.5 | 21.6 | 45.1 | 1.3 | 2.8 | 10.3 | 6.6 | 0.1 | 13.2 | 0.0 | 0.4 |
| Generation-based | TTP | 96.2 | 19.6 | 27.4 | 62.4 | 3.2 | 4.3 | 19.5 | 5.3 | 0.0 | 0.0 | 0.3 | 4.1 |

| Xiaosen Wang | Zeyuan Yin | Zeliang Zhang | Kunyu Wang | Zhijin Ge | Yuyang Luo |
|---|---|---|---|---|---|

We thank all the researchers who contributed to or checked the methods. See contributors for details.

We are trying to include more transfer-based attacks. We welcome suggestions and contributions! Submit an issue or pull request and we will try our best to respond in a timely manner.