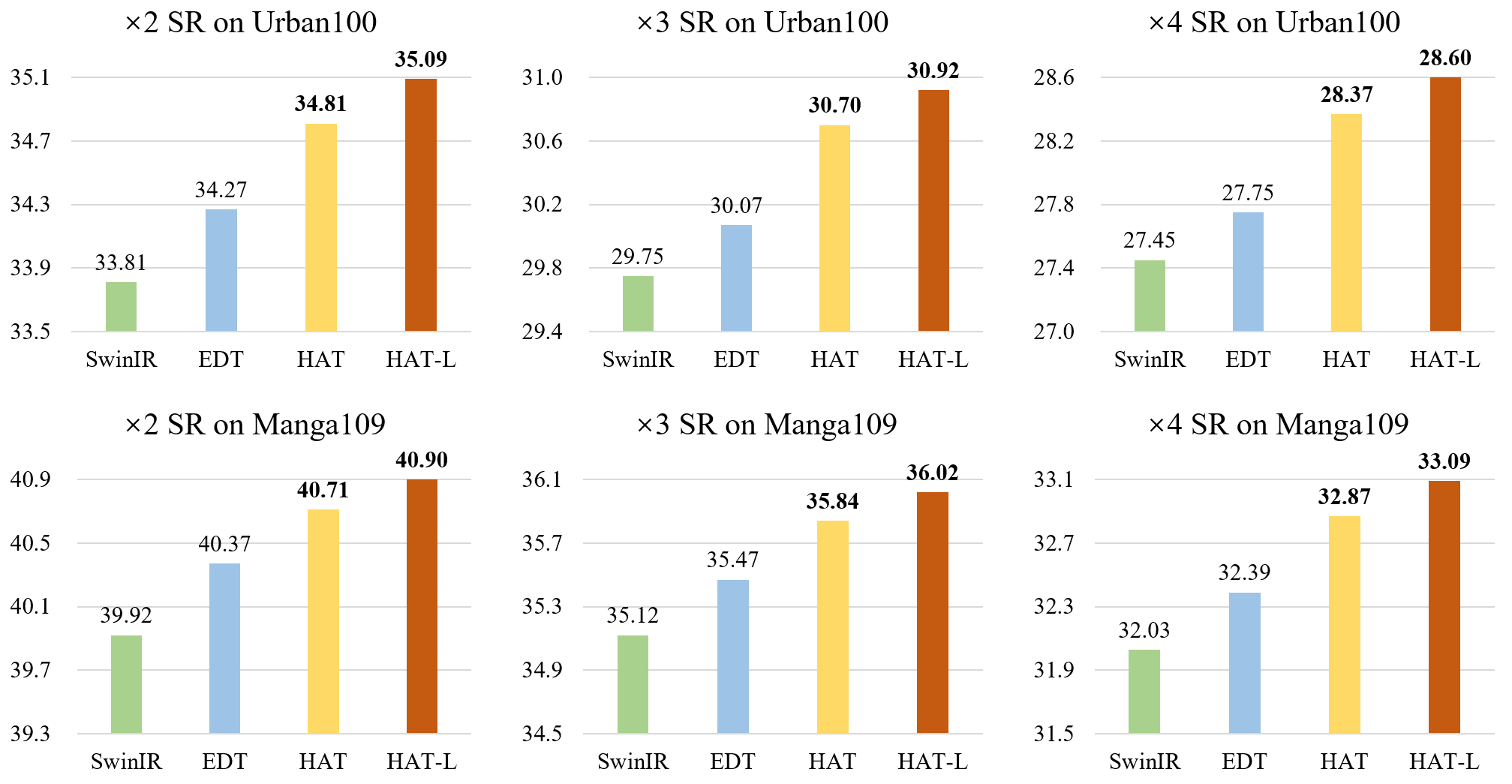

HAT: Activating More Pixels in Image Super-Resolution Transformer (arXiv:2205.04437)

Xiangyu Chen, Xintao Wang, Jiantao Zhou and Chao Dong

@article{chen2022activating,
  title={Activating More Pixels in Image Super-Resolution Transformer},
  author={Chen, Xiangyu and Wang, Xintao and Zhou, Jiantao and Dong, Chao},
  journal={arXiv preprint arXiv:2205.04437},
  year={2022}
}

pip install -r requirements.txt

python setup.py develop

- Refer to ./options/test for the configuration file of the model to be tested, and prepare the testing data and pretrained model.
- The pretrained models are available at Google Drive or Baidu Netdisk (access code: qyrl).

- Then run the following command (taking HAT_SRx4_ImageNet-pretrain.pth as an example):

python hat/test.py -opt options/test/HAT_SRx4_ImageNet-pretrain.yml

The testing results will be saved in the ./results folder.
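The example above pairs the checkpoint HAT_SRx4_ImageNet-pretrain.pth with the option file options/test/HAT_SRx4_ImageNet-pretrain.yml. The following is a minimal sketch of scripting that step. It assumes every test option file is named after its checkpoint; that naming convention and the helper name build_test_command are hypothetical, so check the actual file names in ./options/test before relying on it.

```python
from pathlib import Path
from typing import List


def build_test_command(pretrained_pth: str, options_dir: str = "options/test") -> List[str]:
    """Return the argv for hat/test.py, guessing the option file from the checkpoint name.

    Hypothetical convention: `<stem>.pth` is tested with `<options_dir>/<stem>.yml`.
    """
    # Path(...).stem drops both the directory and the ".pth" suffix.
    opt_file = Path(options_dir) / (Path(pretrained_pth).stem + ".yml")
    return ["python", "hat/test.py", "-opt", opt_file.as_posix()]
```

The returned list can be passed to subprocess.run(cmd, check=True) from the repository root. The option file itself still needs to reference the location of your pretrained model and testing data.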

Note that a tile mode is also provided for testing with limited GPU memory. You can adjust the tile-mode settings in your custom testing option file by referring to ./options/test/HAT_tile_example.yml.
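Tiling trades speed for memory: the image is split into tiles, each tile is super-resolved separately, and the outputs are stitched back together. Below is a minimal pure-Python sketch of that idea, not the repository's implementation. The parameters tile_size and tile_pad are illustrative assumptions (consult HAT_tile_example.yml for the real option names), images are plain 2D lists, and nearest-neighbour upsampling stands in for the network so the example runs without PyTorch.

```python
from typing import Callable, List

Image = List[List[float]]  # rows of pixel values (single channel, for simplicity)


def upscale_nearest(img: Image, scale: int) -> Image:
    """Stand-in for the SR network: nearest-neighbour upsampling."""
    return [
        [img[y // scale][x // scale] for x in range(len(img[0]) * scale)]
        for y in range(len(img) * scale)
    ]


def tiled_inference(
    img: Image,
    model: Callable[[Image], Image],
    scale: int,
    tile_size: int,
    tile_pad: int,
) -> Image:
    """Super-resolve `img` tile by tile so only one padded tile is processed at a time."""
    h, w = len(img), len(img[0])
    out = [[0.0] * (w * scale) for _ in range(h * scale)]
    for y0 in range(0, h, tile_size):
        for x0 in range(0, w, tile_size):
            y1, x1 = min(y0 + tile_size, h), min(x0 + tile_size, w)
            # Enlarge the tile by `tile_pad` pixels of context, clamped to the image.
            py0, py1 = max(y0 - tile_pad, 0), min(y1 + tile_pad, h)
            px0, px1 = max(x0 - tile_pad, 0), min(x1 + tile_pad, w)
            sr = model([row[px0:px1] for row in img[py0:py1]])
            # Keep only the output pixels that belong to the unpadded tile.
            oy, ox = (y0 - py0) * scale, (x0 - px0) * scale
            width = (x1 - x0) * scale
            for dy in range((y1 - y0) * scale):
                out[y0 * scale + dy][x0 * scale:x0 * scale + width] = sr[oy + dy][ox:ox + width]
    return out
```

Each tile is enlarged by tile_pad pixels of context before inference and only its central region is kept, which reduces seams at tile borders for a real network whose receptive field spans more than one pixel. With the nearest-neighbour stand-in the tiled result matches full-image inference exactly; with an actual SR model the two may differ slightly near tile borders.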

- Refer to ./options/train for the configuration file of the model to be trained.
- For preparing the training data, refer to this page. The ImageNet dataset can be downloaded from the official website.

- The training command looks like this (using 8 GPUs):

CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 python -m torch.distributed.launch --nproc_per_node=8 --master_port=4321 hat/train.py -opt options/train/train_HAT_SRx2_from_scratch.yml --launcher pytorch

- Note that the default batch size per GPU is 4, which requires about 20 GB of memory on each GPU.
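When fewer than eight GPUs are available, CUDA_VISIBLE_DEVICES and --nproc_per_node have to be changed together. The sketch below rebuilds the command above for a given GPU count and computes the resulting global batch size, assuming the per-GPU batch size stays at its default of 4. The helper names build_train_command and global_batch_size are hypothetical.

```python
import shlex


def build_train_command(num_gpus: int, opt: str, master_port: int = 4321) -> str:
    """Rebuild the distributed training command for `num_gpus` GPUs (hypothetical helper)."""
    # Expose GPUs 0..num_gpus-1 and start one process per visible GPU.
    devices = ",".join(str(i) for i in range(num_gpus))
    args = [
        "python", "-m", "torch.distributed.launch",
        f"--nproc_per_node={num_gpus}",
        f"--master_port={master_port}",
        "hat/train.py", "-opt", opt, "--launcher", "pytorch",
    ]
    # shlex.join quotes any argument that would need quoting in a POSIX shell.
    return f"CUDA_VISIBLE_DEVICES={devices} " + shlex.join(args)


def global_batch_size(num_gpus: int, batch_per_gpu: int = 4) -> int:
    """Images per iteration across all GPUs, assuming the default per-GPU batch of 4."""
    return num_gpus * batch_per_gpu


if __name__ == "__main__":
    # Example: the same from-scratch SRx2 run on 4 GPUs instead of 8.
    print(build_train_command(4, "options/train/train_HAT_SRx2_from_scratch.yml"))
    print(global_batch_size(4))
```

With 8 GPUs the global batch size is 8 × 4 = 32 images per iteration. Using fewer GPUs without editing the configuration reduces it proportionally, which may affect the training results.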

The training logs and weights will be saved in the ./experiments folder.

The inference results on benchmark datasets are available at Google Drive or Baidu Netdisk (access code: 63p5).

If you have any questions, please email chxy95@gmail.com or join the WeChat group of BasicSR to discuss with the authors.