The AWS DeepRacer Virtual Circuit is run every month and uses reinforcement learning to teach a car to successfully navigate a race track.

Each competitor develops a reward function to guide the learning, and these functions can vary widely. One experiment I wanted to try was being overly prescriptive with the car.

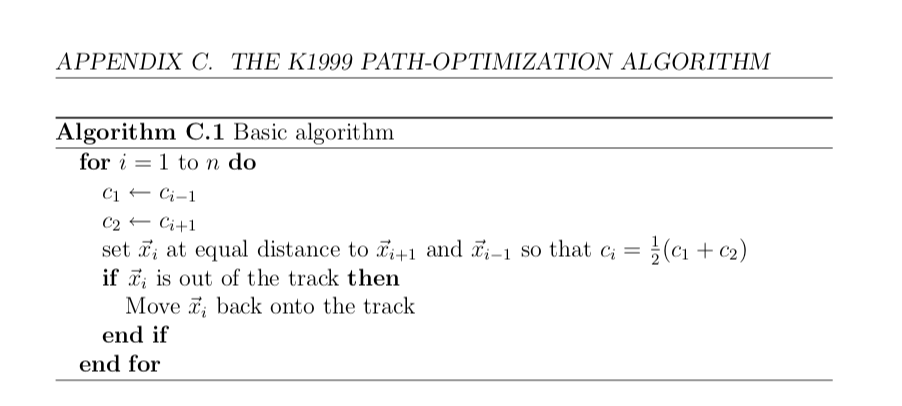

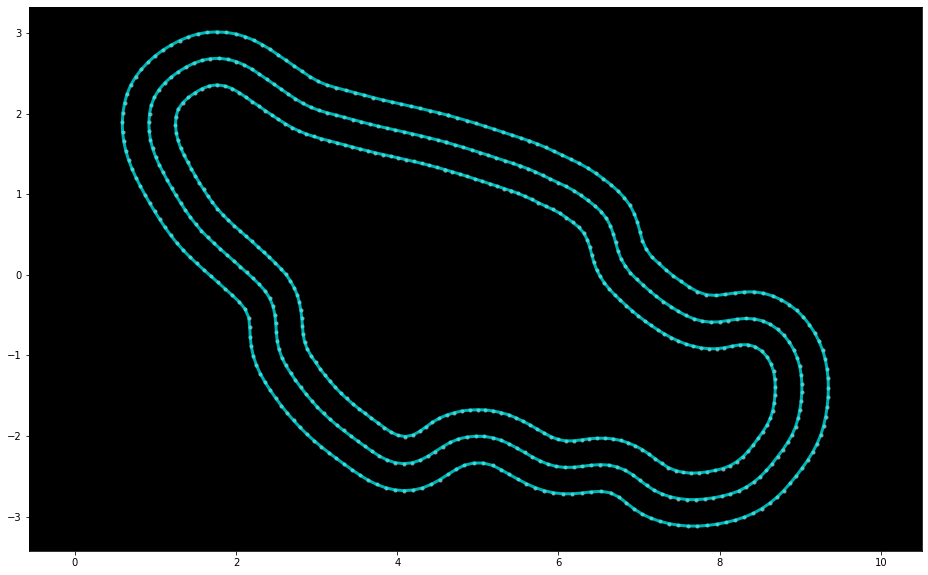

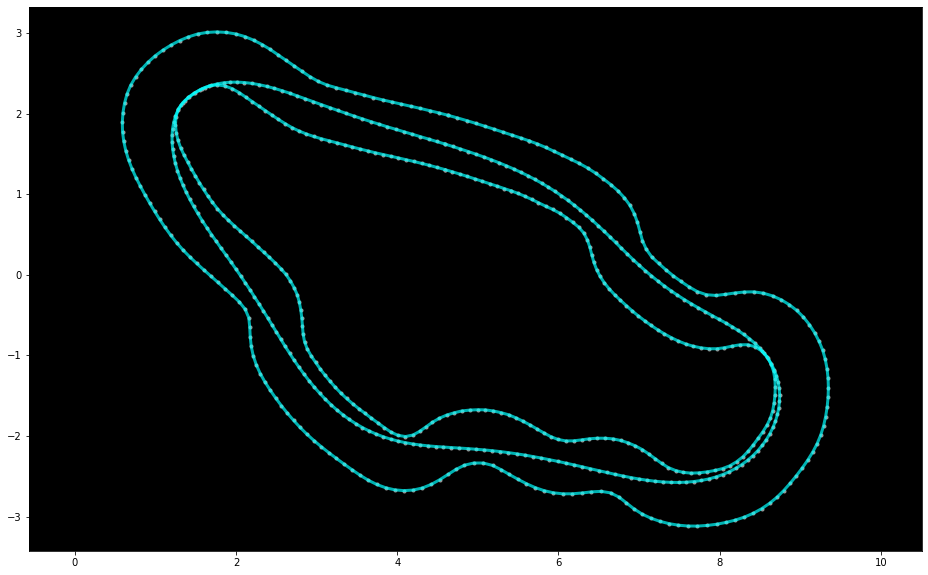

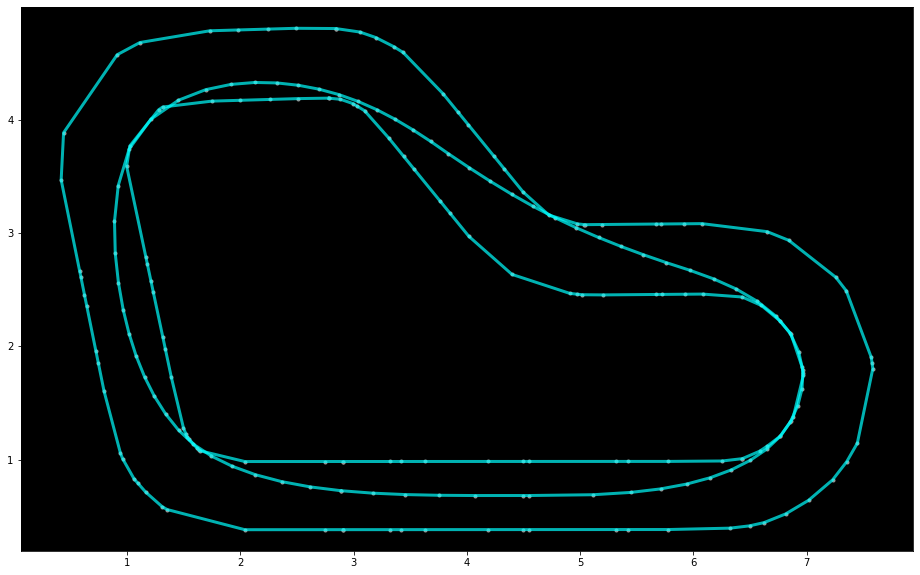

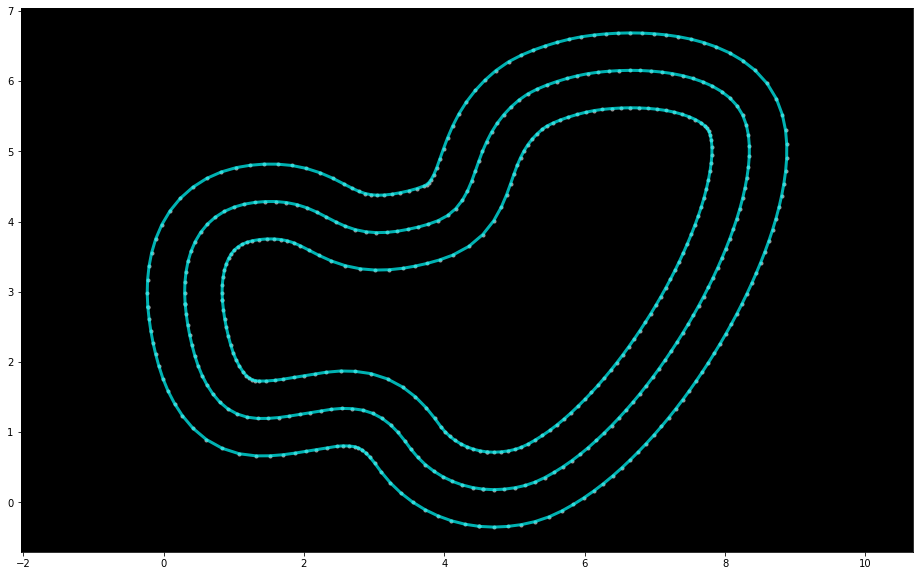

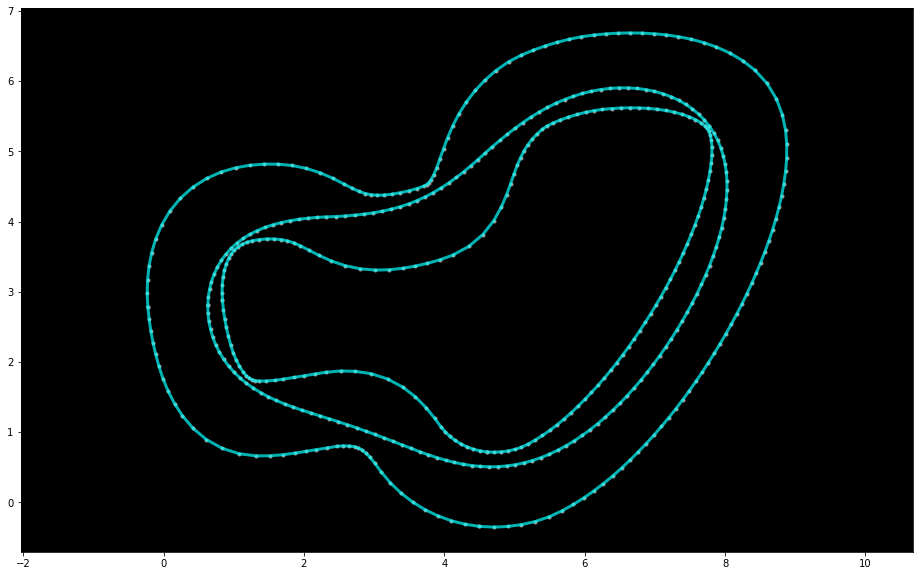

| Original Track | Calculated Race Line | Numpy coordinates |

|---|---|---|

|

|

NumPy: Canada_Training Python Code: Canada_Training.py |

|

|

NumPy: reinvent_base.npy Python Code: reinvent_base.py |

|

|

NumPy: reinvent2019.npy Python Code: reinvent2019.py |
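One way to be prescriptive with race-line coordinates like these is to reward the car for staying close to them. The following is only a rough sketch under stated assumptions: `RACE_LINE` is a made-up placeholder array (not data from the files listed above), the coordinates are assumed to be an `(N, 2)` array of x, y points, and the scoring formula is an illustrative choice rather than the reward function used in this experiment. The `x`, `y`, `track_width`, and `all_wheels_on_track` keys are standard DeepRacer input parameters.

```python
import numpy as np

# Placeholder race line for illustration only. In practice the points would
# come from a precomputed race line, assumed here to be an (N, 2) array.
RACE_LINE = np.array([[0.0, 0.0], [1.0, 0.0], [2.0, 0.5], [3.0, 1.5]])

MIN_REWARD = 1e-3


def reward_function(params):
    """Reward the car for staying close to a precomputed race line."""
    if not params["all_wheels_on_track"]:
        return MIN_REWARD

    car = np.array([params["x"], params["y"]])

    # Distance from the car to the nearest race-line point.
    distance = np.min(np.linalg.norm(RACE_LINE - car, axis=1))

    # Full reward on the line, falling linearly to the minimum
    # at half a track width away from it.
    half_width = 0.5 * params["track_width"]
    return float(max(MIN_REWARD, 1.0 - distance / half_width))
```

A denser race line gives a smoother reward, since the distance is measured to the nearest point rather than to the nearest segment.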

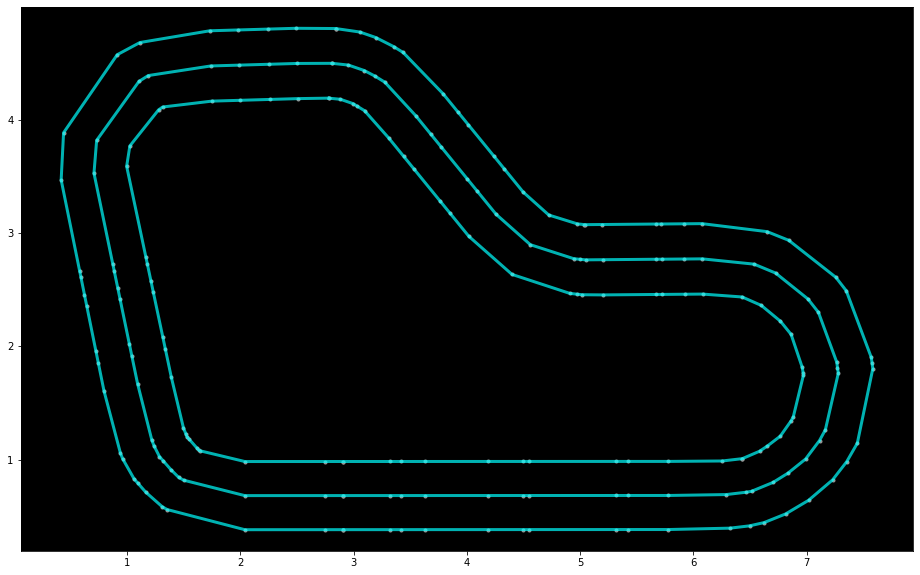

The race line calculation is borrowed directly from Rémi Coulom's PhD thesis.

I wrote the code hastily, but it produced a good enough result, so I stopped improving it. One limitation is that points along the race line never migrate against the direction of curvature, which leaves some room to straighten the race line further.
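To make that limitation concrete, here is a minimal sketch of an update step that behaves this way. It is not the code referenced above, and it rests on several assumptions: the race line is a closed loop of waypoints, curvature is measured with the Menger curvature of three consecutive points, the target curvature for a point is the average of the curvature at its two neighbours, and a circle of radius `max_offset` around each centre-line waypoint stands in for the real track borders. All function and parameter names are hypothetical.

```python
import numpy as np


def menger_curvature(p1, p2, p3):
    """Curvature of the circle through three 2D points (0 if collinear)."""
    p1, p2, p3 = (np.asarray(p, dtype=float) for p in (p1, p2, p3))
    a = np.linalg.norm(p2 - p1)
    b = np.linalg.norm(p3 - p2)
    c = np.linalg.norm(p3 - p1)
    if a * b * c == 0.0:
        return 0.0
    d1, d2 = p2 - p1, p3 - p1
    twice_area = abs(d1[0] * d2[1] - d1[1] * d2[0])
    return 2.0 * twice_area / (a * b * c)


def improve_point(line, center, i, max_offset, search_steps=8):
    """Flatten point i toward the average curvature of its neighbours.

    The search only covers the segment between the current position and
    the midpoint of the two neighbours, so the point can only move toward
    the inside of the local bend, never away from it.
    """
    n = len(line)
    prev2, prev1 = line[(i - 2) % n], line[(i - 1) % n]
    next1, next2 = line[(i + 1) % n], line[(i + 2) % n]
    target = 0.5 * (menger_curvature(prev2, prev1, line[i])
                    + menger_curvature(line[i], next1, next2))

    lo = line[i].copy()         # current position (most curved end)
    hi = 0.5 * (prev1 + next1)  # neighbour midpoint (zero curvature)
    best = lo
    for _ in range(search_steps):
        mid = 0.5 * (lo + hi)
        inside = np.linalg.norm(mid - center[i]) <= max_offset
        if inside and menger_curvature(prev1, mid, next1) >= target:
            best = lo = mid     # still at least as curved as the target
        else:
            hi = mid            # too flat or outside the corridor
    return best


def improve_race_line(center, max_offset, passes=50):
    """Repeatedly apply improve_point to every waypoint of a closed loop."""
    center = np.asarray(center, dtype=float)
    line = center.copy()
    for _ in range(passes):
        for i in range(len(line)):
            line[i] = improve_point(line, center, i, max_offset)
    return line
```

A point that is already flatter than its neighbours is left where it is, because no position in the search range would raise its curvature. Letting such points move outward is where the remaining straightening would come from.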