Introduced in LRVS-Fashion: Extending Visual Search with Referring Instructions

Simon Lepage — Jérémie Mary — David Picard

| Data | Code | Models | Spaces |
|---|---|---|---|
| Full Dataset | Training Code | Categorical Model | LRVS-F Leaderboard |
| Test set | Benchmark Code | Textual Model | Demo |

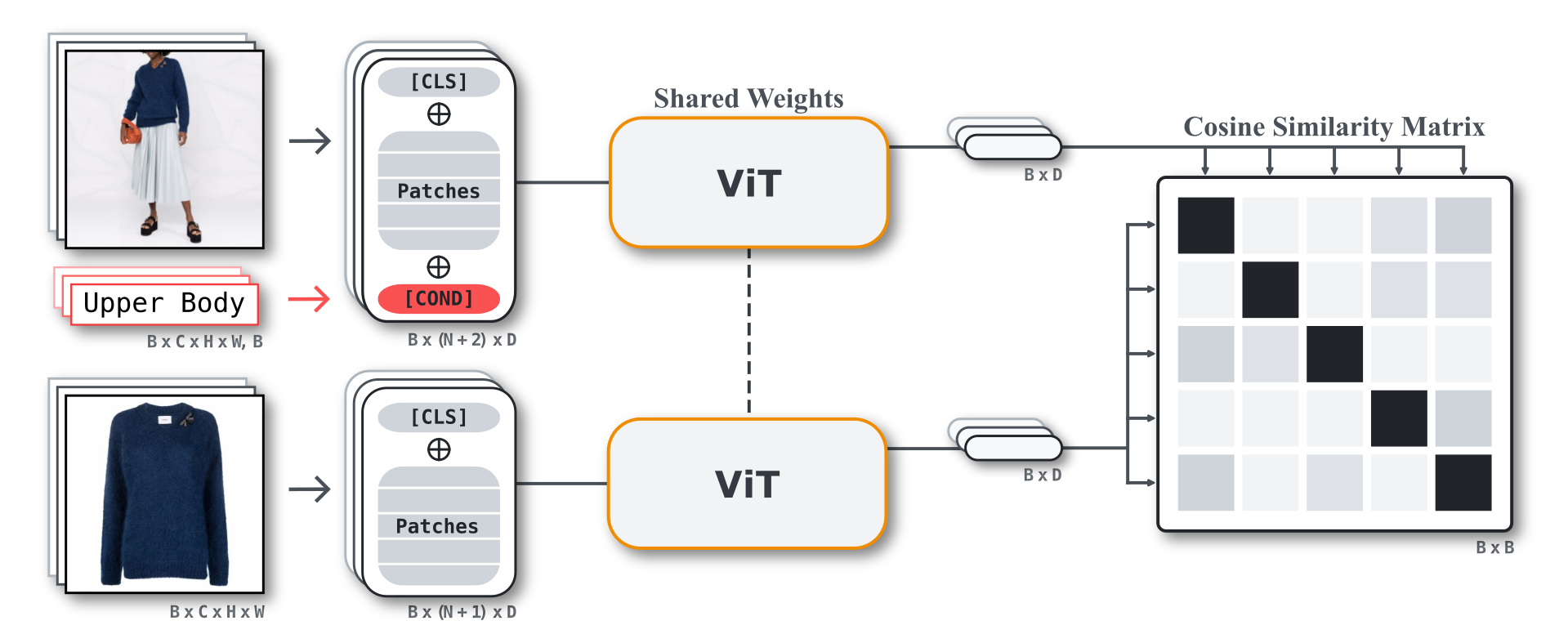

CondViT computes conditional image embeddings, to extract specific features out of complex images. This codebase shows how to train it on LAION — Referred Visual Search — Fashion, with clothing categories. It can easily be modified to use free text embeddings as conditioning, such as BLIP2 captions provided in the dataset.

Categorical CondViT Results :

Textual CondViT Results :


    git clone git@github.com:Simon-Lepage/CondViT-LRVSF.git
    cd CondViT-LRVSF; pip install -e .

Prepare CLIP saves in the `models` folder.

    import clip
    import torch

    # Save only the visual encoder weights of each CLIP model
    torch.save(clip.load("ViT-B/32")[0].visual.state_dict(), "models/CLIP_B32_visual.pth")
    torch.save(clip.load("ViT-B/16")[0].visual.state_dict(), "models/CLIP_B16_visual.pth")

Download LRVS-F

We recommend img2dataset to download the images.

- Training products should be stored in tarfiles in a `TRAIN` folder, as the training uses webdataset. For each product, each image should be stored as `{PRODUCT_ID}.{i}.jpg` and accompanied by a `{PRODUCT_ID}.json` file that maps each index `i` to that image's metadata. This will require reorganising the tarfiles natively produced by img2dataset.

  Example:

      ...
      230537.0.jpg
      230537.1.jpg
      230537.json => {
          "0": {
              "URL": "https://img01.ztat.net/article/LE/22/2G/09/CQ/11/LE222G09C-Q11@6.jpg?imwidth=762",
              "TYPE": "COMPLEX",
              "SPLIT": "train",
              [...]
          },
          "1": {
              "URL": "https://img01.ztat.net/article/LE/22/2G/09/CQ/11/LE222G09C-Q11@10.jpg?imwidth=300&filter=packshot",
              "TYPE": "SIMPLE",
              "SPLIT": "train",
              "CATEGORY": "Lower Body",
              "blip2_caption1": "levi's black jean trousers - skinny fit",
              [ ... ]
          }
      }
      ...

- Validation products should also be stored in `VALID/prods.tar`, following the same format. Validation distractors should be stored in `VALID/dist_{i}.tar` as `{ID}.jpg`, `{ID}.json`. Here the JSON file should directly contain the metadata.

  Example:

      ...
      989760.jpg
      989760.json => {
          "URL": "https://img01.ztat.net/article/spp-media-p1/0dd705f32f9e4895810d291c76de5ea2/1661e4ee07f342dcb168fed3ab81e78e.jpg?imwidth=300&filter=packshot",
          "CATEGORY": "Lower Body",
          "SPLIT": "val_gallery",
          [...]
      }
      ...

- Test data should be stored as `TEST/dist_{i}.parquet` and `TEST/prods.parquet` files. Their index should be `url`, and they should have a single `jpg` column containing the images as bytes.
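The per-product layout described above for the training tarfiles can be sketched with the Python standard library alone. This is a minimal illustration, not the repository's conversion script: the helper names `add_bytes`, `add_product`, and `build_demo_shard` are hypothetical, the image payloads are placeholder bytes rather than real JPEGs, and the metadata is abbreviated from the example.

```python
import io
import json
import tarfile


def add_bytes(tar: tarfile.TarFile, name: str, payload: bytes) -> None:
    """Add an in-memory payload to an open tar archive under `name`."""
    info = tarfile.TarInfo(name=name)
    info.size = len(payload)
    tar.addfile(info, io.BytesIO(payload))


def add_product(tar: tarfile.TarFile, product_id, images, metadata) -> None:
    """Write one product as {PRODUCT_ID}.{i}.jpg files plus {PRODUCT_ID}.json.

    `images` and `metadata` are parallel lists: metadata[i] describes images[i].
    """
    if len(images) != len(metadata):
        raise ValueError("each image needs exactly one metadata entry")
    for i, jpg_bytes in enumerate(images):
        add_bytes(tar, f"{product_id}.{i}.jpg", jpg_bytes)
    # JSON keys are the image indices as strings, as in the example layout.
    meta = {str(i): m for i, m in enumerate(metadata)}
    add_bytes(tar, f"{product_id}.json", json.dumps(meta).encode("utf-8"))


def build_demo_shard() -> bytes:
    """Build a one-product shard in memory (placeholder bytes, not real JPEGs)."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w") as tar:
        add_product(
            tar,
            product_id=230537,
            images=[b"<jpeg bytes 0>", b"<jpeg bytes 1>"],
            metadata=[
                {"TYPE": "COMPLEX", "SPLIT": "train"},
                {"TYPE": "SIMPLE", "SPLIT": "train", "CATEGORY": "Lower Body"},
            ],
        )
    return buffer.getvalue()
```

In practice the image bytes and metadata would come from the img2dataset output, grouped by product. That grouping step is left out here because it depends on how the download was configured.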

Use the following commands to train a model and evaluate it. Additional options can be found in the scripts. Training a ViT-B/32 on 2 Nvidia V100 GPUs should take ~6h.

    python main.py --architecture B32 --batch_size 180 --conditioning --run_name CondViTB32
    python lrvsf/test/embedding.py --save_path ./saves/CondViTB32_*/best_validation_model.pth
    python lrvsf/test/metrics.py --embeddings_folder ./saves/CondViTB32_*/embs/

To cite our work, please use the following BibTeX entry:

    @article{lepage2023lrvsf,
        title={LRVS-Fashion: Extending Visual Search with Referring Instructions},
        author={Lepage, Simon and Mary, Jérémie and Picard, David},
        journal={arXiv:2306.02928},
        year={2023}
    }