This project brings together state-of-the-art approaches that use knowledge distillation for unsupervised anomaly detection. The code is designed to be simple and easy to understand, so that custom modifications are straightforward.

You will need Python 3.10+ and the packages specified in requirements.txt.

Install packages with:

pip install -r requirements.txt

To use the project, you must first edit the config.yaml file, which configures the main elements of the project.

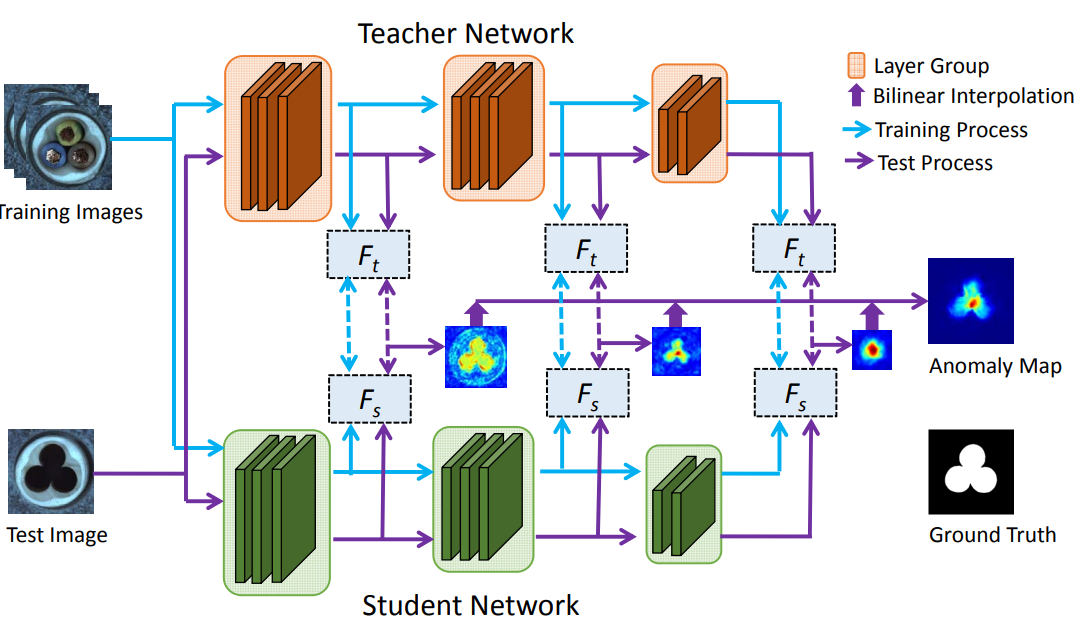

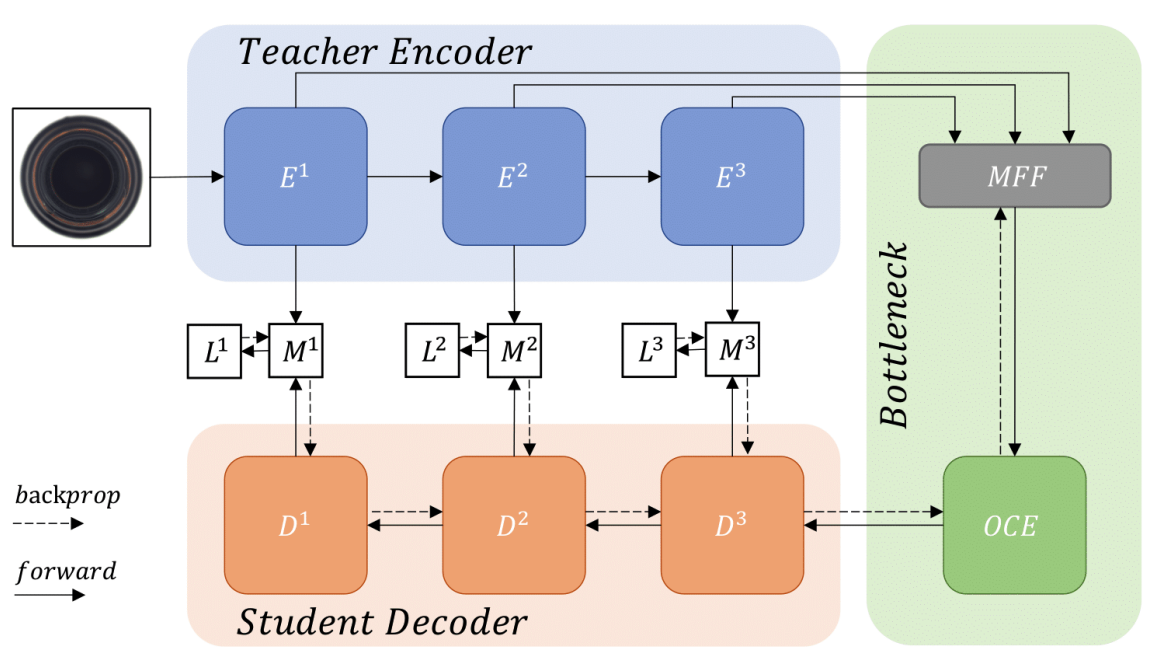

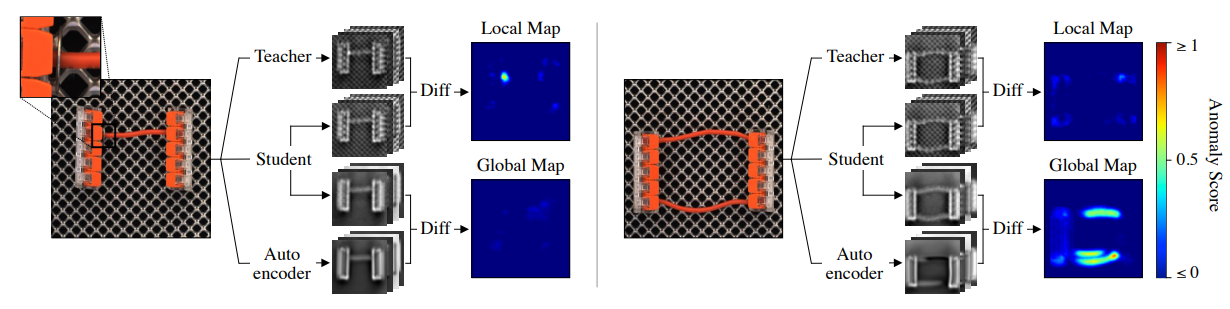

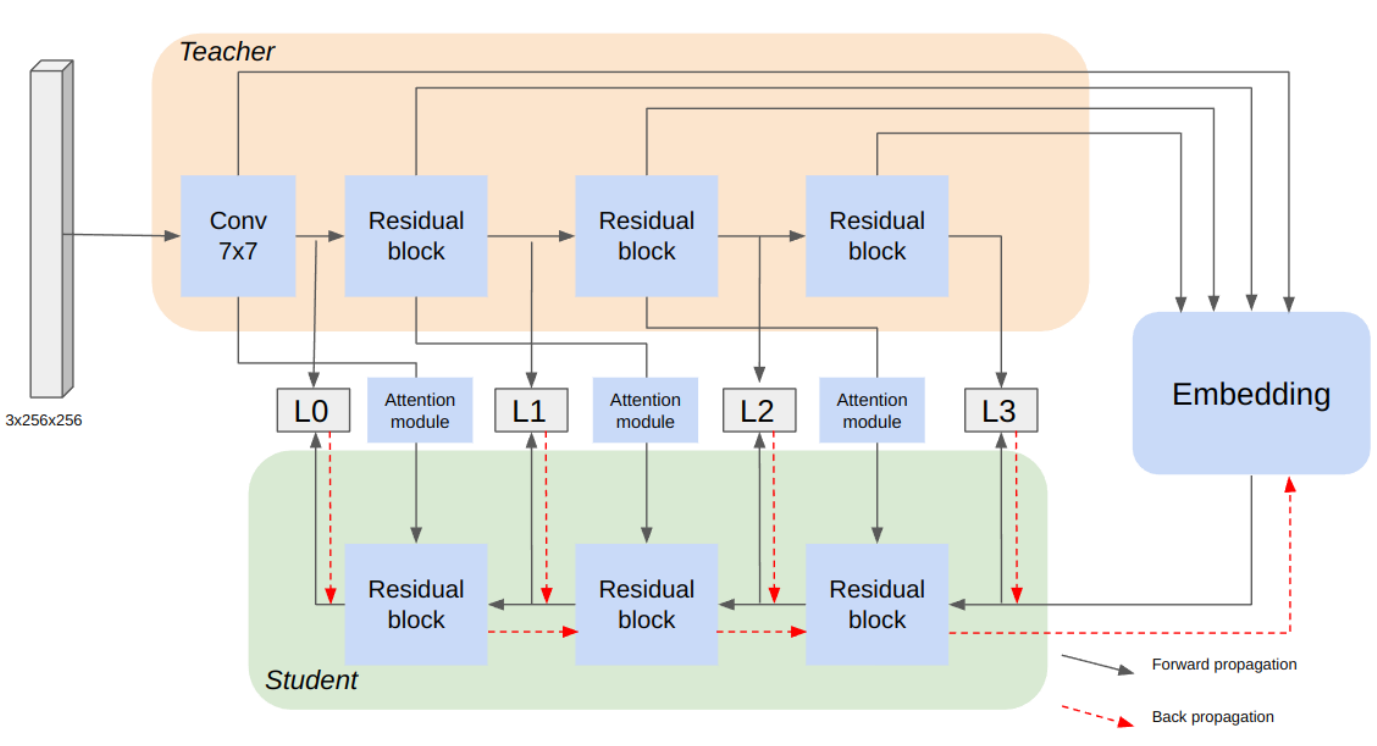

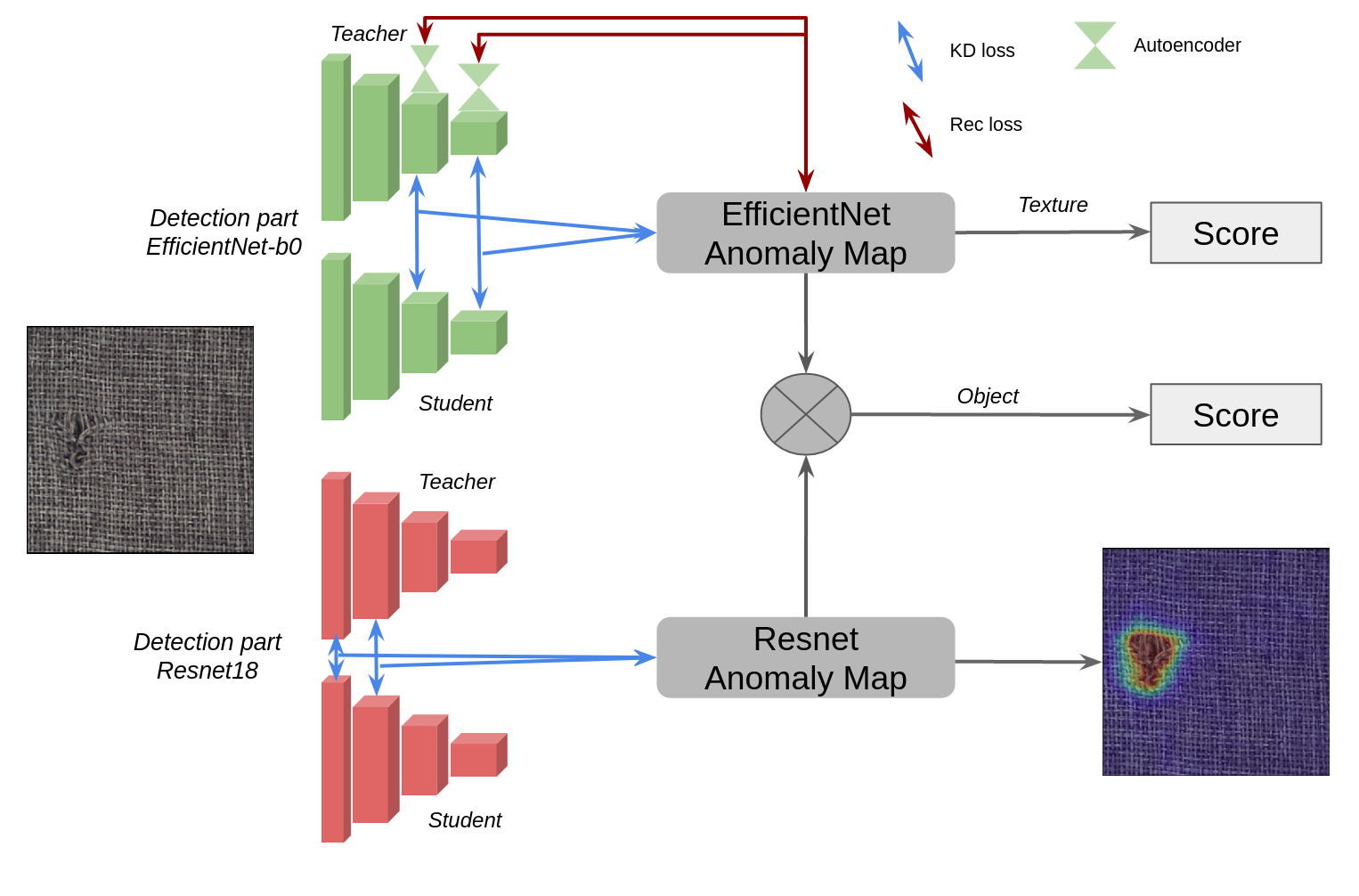

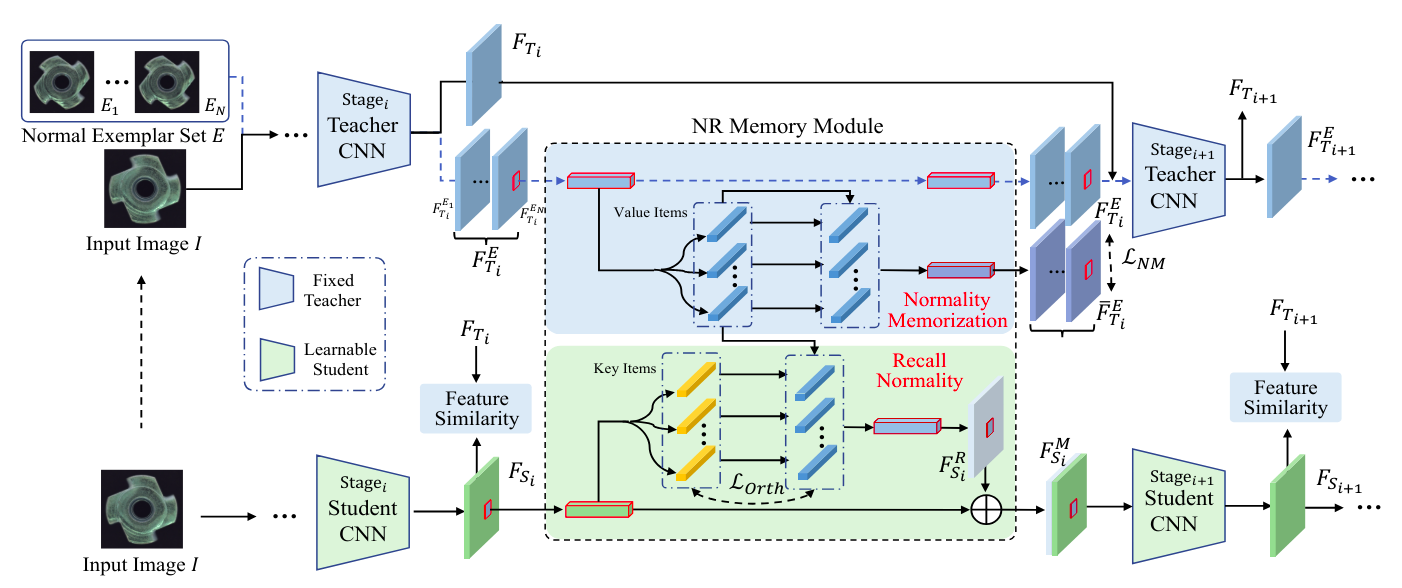

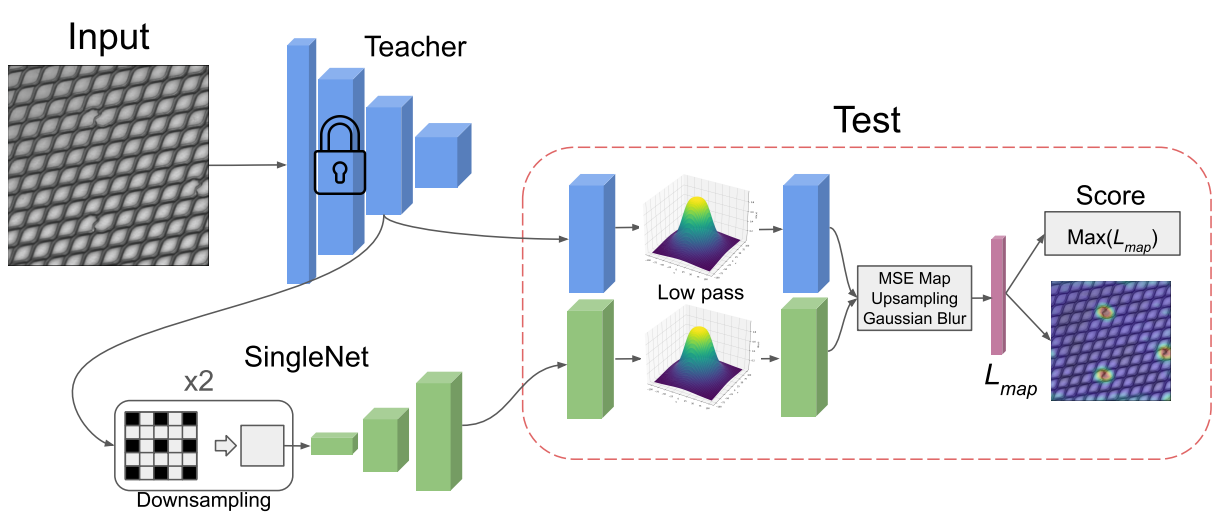

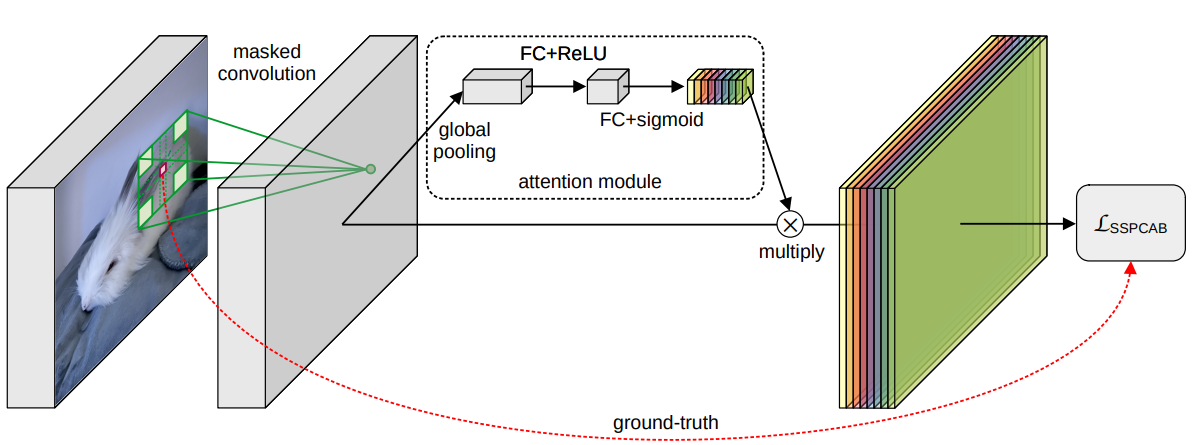

- data_path (STR): The path to the dataset
- distillType (STR): The type of distillation: st for STPM, rd for reverse distillation, ead for EfficientAD, dbfad for distillation-based fabric anomaly detection, mixed for mixedTeacher, rnst/rnrd for remembering normality (forward/backward), sn for singlenet
- backbone (STR): The name of the model backbone (any CNN for st, only resnets and wide resnets for rd, small or medium for ead)
- out_indice (LIST OF INT): The index of the layer used for distillation (only for st)
- obj (STR): The object category
- phase (STR): Either train or test
- save_path (STR): The path to save the model weights
- training_data (YAML LIST): To configure hyperparameters (epochs, batch_size, img_size, crop_size, norm and other parameters)
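As a rough illustration of how these parameters constrain each other, the sketch below checks a configuration dictionary against the rules listed above. It is not part of the repository: `validate_config` and the example values (dataset path, object category, hyperparameters) are hypothetical. The flat key layout, the assumption that out_indice must be present for st, and the assumption that ResNet backbone names contain "resnet" are guesses rather than documented behavior.

```python
# Hypothetical helper, not part of the repository: checks a config dictionary
# against the parameter rules described above.
ALLOWED_DISTILL_TYPES = {"st", "rd", "ead", "dbfad", "mixed", "rnst", "rnrd", "sn"}
ALLOWED_PHASES = {"train", "test"}
REQUIRED_KEYS = (
    "data_path", "distillType", "backbone", "obj",
    "phase", "save_path", "training_data",
)


def validate_config(cfg):
    """Return a list of problems; an empty list means no rule was violated."""
    problems = []

    for key in REQUIRED_KEYS:
        if key not in cfg:
            problems.append(f"missing key: {key}")

    distill_type = cfg.get("distillType")
    if distill_type is not None and distill_type not in ALLOWED_DISTILL_TYPES:
        problems.append(f"unknown distillType: {distill_type}")

    phase = cfg.get("phase")
    if phase is not None and phase not in ALLOWED_PHASES:
        problems.append(f"unknown phase: {phase}")

    backbone = str(cfg.get("backbone", ""))
    if distill_type == "ead" and backbone not in {"small", "medium"}:
        problems.append("ead expects backbone 'small' or 'medium'")
    # Assumption: ResNet and Wide ResNet backbone names contain "resnet".
    if distill_type == "rd" and "resnet" not in backbone.lower():
        problems.append("rd expects a ResNet or Wide ResNet backbone")

    # Assumption: out_indice is required (not just allowed) when distillType is st.
    if distill_type == "st":
        out_indice = cfg.get("out_indice")
        if not isinstance(out_indice, list) or not all(
            isinstance(i, int) for i in out_indice
        ):
            problems.append("st expects out_indice to be a list of integers")

    return problems


# Hypothetical example values; adapt them to your dataset and setup.
example = {
    "data_path": "/path/to/dataset",
    "distillType": "st",
    "backbone": "resnet18",
    "out_indice": [1, 2, 3],
    "obj": "carpet",
    "phase": "train",
    "save_path": "./weights",
    "training_data": {"epochs": 100, "batch_size": 16, "img_size": 256},
}

print(validate_config(example))
```

The example configs in configs/ remain the authoritative reference for the exact layout each distillType expects.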

An example config for each distillType is available in configs/.

Once configured, run the following command to train or test, depending on the phase set in the configuration file:

python3 trainNet.py

You can also visualize the feature map of a given layer. To change the selected layer, edit the Python file directly, then run:

python3 visualization.py

Article1 and Article2

Code inspiration

WACV 2025, article not published yet

This project is licensed under the MIT License.