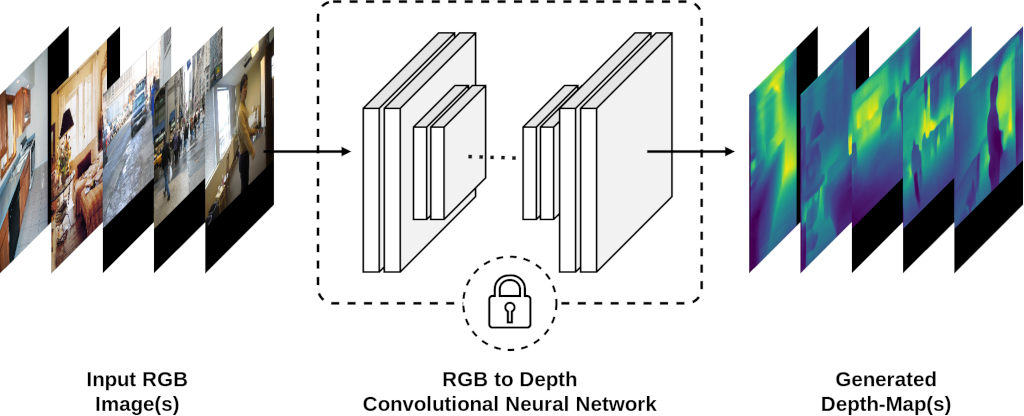

The goal of this repository is to convert the RGB images of the Visual-Genome dataset into depth maps. This code is part of our paper "Improving Visual Relation Detection using Depth Maps" and was used to generate the VG-Depth dataset. For further information, please visit the main repository.

This code is based on FCRN-DepthPrediction, the RGB-to-depth architecture introduced by Laina et al. (2016). The architecture is a fully convolutional neural network built on ResNet-50 and trained end-to-end on data from the NYU Depth Dataset v2.
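
Because the network is fully convolutional, the size of the predicted depth map follows directly from the input size. The sketch below is an illustration, not code from this repository. It assumes the standard ResNet-50 stride pattern (five stride-2 stages with "SAME" padding, so each one rounds up) and the four 2x up-projection blocks described by Laina et al.; the function name `fcrn_output_size` is hypothetical.

```python
import math
from typing import Tuple

# Assumptions taken from the FCRN paper, not from this repository's code:
# ResNet-50 applies five stride-2 stages ("SAME" padding rounds each one up),
# and FCRN then applies four up-projection blocks that each double the size.
NUM_DOWNSAMPLES = 5
NUM_UPPROJECTIONS = 4


def fcrn_output_size(height: int, width: int) -> Tuple[int, int]:
    """Return the (height, width) of the predicted depth map for an input image."""
    def encode(size: int) -> int:
        # Each stride-2 stage halves the size, rounding up.
        for _ in range(NUM_DOWNSAMPLES):
            size = math.ceil(size / 2)
        return size

    scale = 2 ** NUM_UPPROJECTIONS  # total upsampling factor of the decoder
    return encode(height) * scale, encode(width) * scale


# The FCRN paper's NYU Depth v2 setup uses 228x304 (height x width) inputs.
print(fcrn_output_size(228, 304))  # (128, 160)
```

Under these assumptions the depth map comes out at roughly half the input resolution (228x304 becomes 128x160), so a full-resolution depth image would need to be upsampled afterwards.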

The requirements of this project are as follows:

- Python >= 3.6

- TensorFlow

- NumPy

- Pillow
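
A quick way to confirm these requirements are present is sketched below. This helper is not part of the repository; the package-to-module mapping is an assumption (note that Pillow is imported as `PIL`), and `missing_requirements` is a hypothetical name.

```python
import importlib.util
import sys
from typing import Dict, List

# Maps the package names listed above to the module each one installs.
REQUIRED_MODULES: Dict[str, str] = {
    "TensorFlow": "tensorflow",
    "NumPy": "numpy",
    "Pillow": "PIL",
}


def missing_requirements(modules: Dict[str, str]) -> List[str]:
    """Return the names of packages whose top-level module cannot be found."""
    return [name for name, module in modules.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    if sys.version_info < (3, 6):
        sys.exit("Python >= 3.6 is required")
    missing = missing_requirements(REQUIRED_MODULES)
    if missing:
        print("Missing packages: " + ", ".join(missing))
    else:
        print("All requirements found.")
```

`importlib.util.find_spec` only locates a module without importing it, so the check stays fast even when TensorFlow is installed.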

You can run the following script to install the required libraries and download the other dependencies:

```shell
./setup_env.sh
```

This script will perform the following operations:

- Install the required libraries.

- Download the FCRN checkpoint.

- Download the Visual-Genome dataset.

After installing the dependencies, run the conversion by calling `convert.py` with one of the provided JSON configuration files as its argument, for example:

```shell
python3 convert.py json/vg_images/convert_vg_1024.json
```
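
The network predicts floating-point depth values (FCRN is trained on NYU Depth v2, whose depths are in meters). How `convert.py` stores its output is not described here; as one illustrative option, assumed rather than taken from this code, a depth map can be min-max scaled to 8 bits so it can be saved as a grayscale image, for example with Pillow. The function `depth_to_uint8` below is hypothetical.

```python
from typing import List

Depth = List[List[float]]


def depth_to_uint8(depth: Depth) -> List[List[int]]:
    """Min-max scale a 2-D depth map to integers in [0, 255].

    The nearest point maps to 0 and the farthest to 255.
    A constant depth map becomes all zeros.
    """
    values = [v for row in depth for v in row]
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        return [[0 for _ in row] for row in depth]
    return [[round((v - lo) / span * 255) for v in row] for row in depth]


print(depth_to_uint8([[1.0, 3.0], [6.0, 1.0]]))  # [[0, 102], [255, 0]]
```

Per-image scaling keeps relative depth ordering within an image but discards absolute distances, so values are not comparable across images; storing the raw floats instead avoids that trade-off.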