An MXNet implementation of fast style transfer, inspired by:

- https://github.com/lengstrom/fast-style-transfer

- https://github.com/zhaw/neural_style

- https://github.com/dmlc/mxnet/tree/master/example/neural-style

Related papers:

- Johnson et al., Perceptual Losses for Real-Time Style Transfer and Super-Resolution

- Ulyanov et al., Instance Normalization: The Missing Ingredient for Fast Stylization
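Instance normalization, from the Ulyanov paper listed above, normalizes each (sample, channel) feature map by its own spatial mean and variance instead of pooling statistics over the whole batch. The NumPy sketch below illustrates that idea on an NCHW array. It is not taken from this repository, and the optional `gamma`/`beta` scale and shift parameters and the `eps` value are assumptions for illustration.

```python
import numpy as np

def instance_norm(x, gamma=None, beta=None, eps=1e-5):
    """Normalize every (sample, channel) map of an NCHW array independently.

    Illustrative sketch only: gamma/beta are optional per-channel affine
    parameters, and eps is an assumed small constant for numerical stability.
    """
    n, c, h, w = x.shape
    # Statistics are computed over the spatial axes only (H, W),
    # so each image and each channel gets its own mean and variance.
    mean = x.mean(axis=(2, 3), keepdims=True)
    var = x.var(axis=(2, 3), keepdims=True)
    y = (x - mean) / np.sqrt(var + eps)
    if gamma is not None:
        y = y * gamma.reshape(1, c, 1, 1)  # per-channel scale
    if beta is not None:
        y = y + beta.reshape(1, c, 1, 1)   # per-channel shift
    return y
```

For example, `instance_norm(np.random.default_rng(0).random((2, 3, 4, 4)))` returns an array of the same shape in which every 4x4 map has mean close to 0 and variance close to 1, regardless of the other images in the batch.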

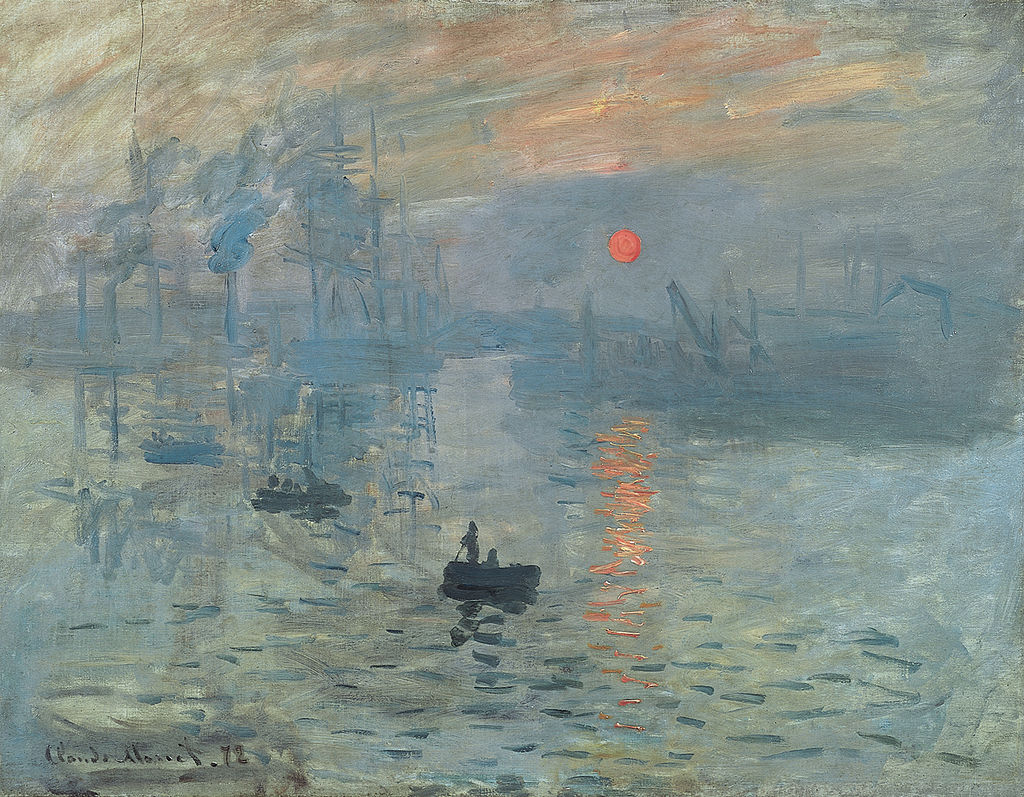

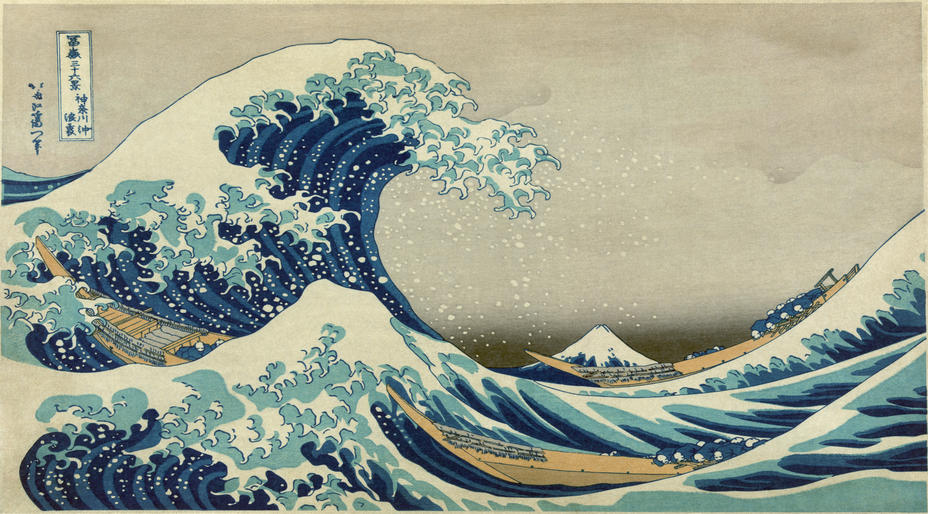

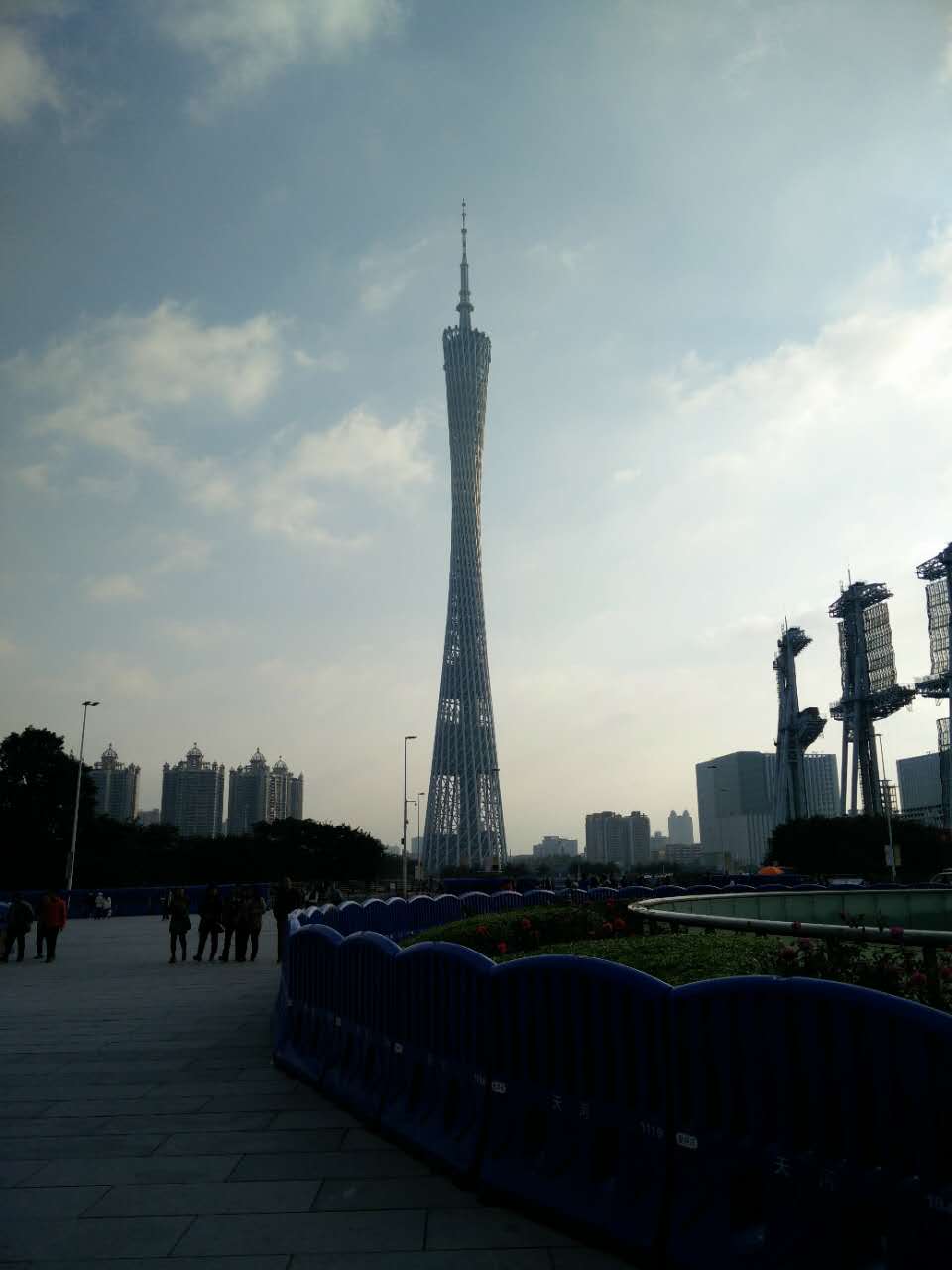

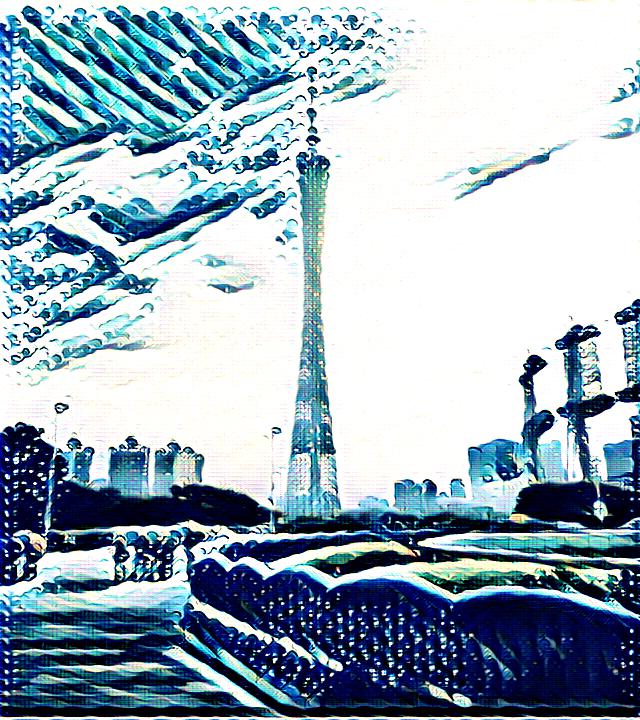

We applied styles from various paintings to a photo of Chicago.

Some pretrained models can be found in the `checkpoints` directory.

Requirements:

- MXNet

- Pretrained VGG19 params file: `vgg19.params`

- Training data, if you want to train your own models. The example models were trained on MSCOCO (about 12GB).

To train your own model:

```bash
python train.py --style-image path/to/style/img.jpg \
    --checkpoint-dir path/to/save/checkpoint \
    --vgg-path path/to/vgg19.params \
    --content-weight 1e2 \
    --style-weight 1e1 \
    --epochs 2 \
    --batch-size 20 \
    --gpu 0
```

For more details, see the help information of `train.py`:

```bash
python train.py -h
```
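The `--content-weight` and `--style-weight` flags in the training command trade off how closely the output keeps the content image's features against how closely it matches the style image's feature statistics. The NumPy sketch below shows a simplified perceptual loss in the spirit of the Johnson et al. paper: a content term on one feature map plus a style term comparing Gram matrices. The function names, the Gram normalization by C*H*W, and the absence of any extra regularization terms are assumptions; `train.py` may compute its losses differently.

```python
import numpy as np

def gram_matrix(feat):
    """Gram matrix of a (C, H, W) feature map, normalized by C*H*W (assumed)."""
    c, h, w = feat.shape
    f = feat.reshape(c, h * w)
    # Channel-by-channel inner products capture which features co-occur.
    return f @ f.T / (c * h * w)

def content_loss(gen_feat, content_feat):
    # Mean squared difference between generated and content feature maps.
    return np.mean((gen_feat - content_feat) ** 2)

def style_loss(gen_feats, style_feats):
    # Sum over layers of the squared Frobenius distance between Gram matrices.
    return sum(np.sum((gram_matrix(g) - gram_matrix(s)) ** 2)
               for g, s in zip(gen_feats, style_feats))

def total_loss(gen_content, content, gen_styles, styles,
               content_weight=1e2, style_weight=1e1):
    # Defaults mirror the example command's --content-weight / --style-weight.
    return (content_weight * content_loss(gen_content, content)
            + style_weight * style_loss(gen_styles, styles))
```

Raising `style_weight` relative to `content_weight` makes the Gram-matrix term dominate, which typically yields stronger stylization at the cost of content fidelity.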

To stylize an image with a trained checkpoint:

```bash
python transform.py --in-path path/to/input/img.jpg \
    --out-path path/dir/to/output \
    --checkpoint path/to/checkpoint/params \
    --resize 720 480 \
    --gpu 0
```

For more details, see the help information of `transform.py`:

```bash
python transform.py -h
```
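Since the example invocation of `transform.py` takes a single input image, stylizing a whole folder means calling it once per file. The sketch below builds those command lines using only the flags shown above. The helper names `build_transform_cmd` and `stylize_directory` are hypothetical, and the meaning of the two `--resize` values is not documented here, so they are passed through unchanged. It defaults to a dry run that only prints the commands.

```python
import shlex
import subprocess
from pathlib import Path

def build_transform_cmd(in_path, out_dir, checkpoint, resize=(720, 480), gpu=0):
    """Hypothetical helper: assemble one transform.py call from the README flags."""
    return [
        "python", "transform.py",
        "--in-path", str(in_path),
        "--out-path", str(out_dir),
        "--checkpoint", str(checkpoint),
        # The two resize values are forwarded as-is; their order is not specified here.
        "--resize", *(str(v) for v in resize),
        "--gpu", str(gpu),
    ]

def stylize_directory(in_dir, out_dir, checkpoint, pattern="*.jpg",
                      dry_run=True, **kwargs):
    """Hypothetical helper: run (or just print) transform.py for each matching file."""
    cmds = [build_transform_cmd(p, out_dir, checkpoint, **kwargs)
            for p in sorted(Path(in_dir).glob(pattern))]
    for cmd in cmds:
        if dry_run:
            print(shlex.join(cmd))          # show what would be executed
        else:
            subprocess.run(cmd, check=True)  # stop on the first failing image
    return cmds
```

For example, `stylize_directory("photos", "stylized", "checkpoints/model.params")` prints one command per `.jpg` in `photos`; pass `dry_run=False` to actually run them.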