NeuralC is a lightweight, standalone C++ library for implementing deep feed-forward (DFF) neural networks. It features:

- Generalized backpropagation algorithm

- A full matrix library
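To give a concrete picture of what backpropagation computes, here is a minimal, dependency-free sketch of one training step for a 2-2-1 network with a leaky ReLU hidden layer, a sigmoid output, and a quadratic cost. It is not NeuralC's implementation: `TinyNet`, the fixed starting weights, the leaky slope of 0.01, and element-wise gradient clipping are all assumptions made for illustration.

```cpp
#include <algorithm>  // std::max, std::min (gradient clipping)
#include <cassert>    // assert (parameter preconditions)
#include <cmath>      // std::exp

// Hypothetical sketch of backpropagation on a 2-2-1 network; not NeuralC code.
// Leaky ReLU with an assumed slope of 0.01 for non-positive inputs.
double lrelu(double x) { return x > 0.0 ? x : 0.01 * x; }
double lrelu_prime(double x) { return x > 0.0 ? 1.0 : 0.01; }
double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

struct TinyNet {
    double w1[2][2] = {{0.5, -0.4}, {0.3, 0.8}};  // w1[j][i]: input i -> hidden j
    double b1[2] = {0.1, -0.1};                     // hidden biases
    double w2[2] = {0.7, -0.6};                     // hidden j -> output
    double b2 = 0.05;                               // output bias

    // Forward pass; keeps hidden pre-activations z1 and activations a1 for backprop.
    double forward(const double x[2], double z1[2], double a1[2]) const {
        for (int j = 0; j < 2; ++j) {
            z1[j] = w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j];
            a1[j] = lrelu(z1[j]);
        }
        return sigmoid(w2[0] * a1[0] + w2[1] * a1[1] + b2);
    }

    // Quadratic cost C = 0.5 * (y - t)^2 for one sample with target t.
    double cost(const double x[2], double t) const {
        double z1[2], a1[2];
        const double y = forward(x, z1, a1);
        return 0.5 * (y - t) * (y - t);
    }

    // Backpropagation: apply the chain rule from the output back to every parameter.
    void backward(const double x[2], double t, double gw1[2][2], double gb1[2],
                  double gw2[2], double &gb2) const {
        double z1[2], a1[2];
        const double y = forward(x, z1, a1);
        const double delta2 = (y - t) * y * (1.0 - y);  // dC/dz2, using sigmoid' = y(1 - y)
        for (int j = 0; j < 2; ++j) {
            gw2[j] = delta2 * a1[j];
            const double delta1 = delta2 * w2[j] * lrelu_prime(z1[j]);  // dC/dz1[j]
            gw1[j][0] = delta1 * x[0];
            gw1[j][1] = delta1 * x[1];
            gb1[j] = delta1;
        }
        gb2 = delta2;
    }

    // One gradient-descent step; each gradient component is clamped to
    // [-clip, clip] (an assumed clipping scheme).
    void step(const double x[2], double t, double lr, double clip) {
        assert(lr > 0.0 && clip > 0.0);
        double gw1[2][2], gb1[2], gw2[2], gb2;
        backward(x, t, gw1, gb1, gw2, gb2);  // all gradients computed before any update
        auto clipped = [clip](double g) { return std::max(-clip, std::min(clip, g)); };
        for (int j = 0; j < 2; ++j) {
            for (int i = 0; i < 2; ++i) w1[j][i] -= lr * clipped(gw1[j][i]);
            b1[j] -= lr * clipped(gb1[j]);
            w2[j] -= lr * clipped(gw2[j]);
        }
        b2 -= lr * clipped(gb2);
    }
};
```

Comparing `backward` against central finite differences of `cost` is a quick way to validate a hand-written backward pass like this one.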

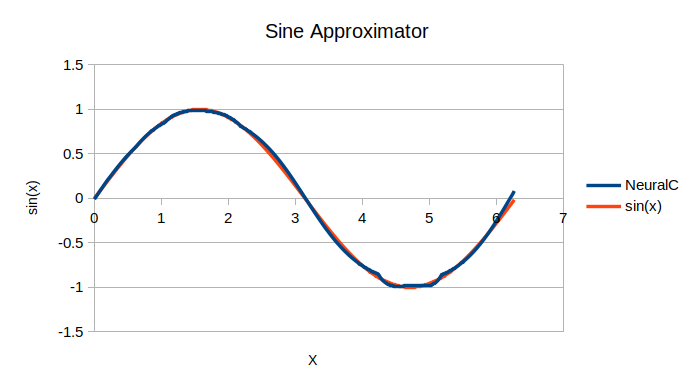

This is a learning exercise hobby project. Here is a visualization of the example sine approximator.

NeuralC requires the GNU CBLAS library for fast matrix multiplication.
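For orientation, CBLAS's `cblas_dgemm` computes the general matrix product `C = alpha * A * B + beta * C`. The triple loop below is a dependency-free sketch of that operation for row-major, non-transposed operands; `gemm_reference` is an illustrative name, not part of NeuralC or CBLAS. It is far slower than CBLAS but handy for checking small results. With CBLAS, the equivalent call would be `cblas_dgemm(CblasRowMajor, CblasNoTrans, CblasNoTrans, m, n, k, alpha, A, k, B, n, beta, C, n)`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Naive reference for C = alpha * A * B + beta * C, where A is m x k, B is k x n,
// and C is m x n, all stored row-major in flat vectors.
void gemm_reference(std::size_t m, std::size_t n, std::size_t k, double alpha,
                    const std::vector<double> &A, const std::vector<double> &B,
                    double beta, std::vector<double> &C) {
    assert(A.size() == m * k && B.size() == k * n && C.size() == m * n);
    for (std::size_t i = 0; i < m; ++i) {
        for (std::size_t j = 0; j < n; ++j) {
            double sum = 0.0;  // dot product of row i of A with column j of B
            for (std::size_t p = 0; p < k; ++p) sum += A[i * k + p] * B[p * n + j];
            C[i * n + j] = alpha * sum + beta * C[i * n + j];
        }
    }
}
```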

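- Include the `neural.h` header:

```cpp
#include <iostream>  // std::cout, used when printing results
#include <vector>    // std::vector, used for the dataset
#include <neural.h>
```

- Create a `NetworkParameters` struct that defines the key hyperparameters of the network, such as the learning rate, the gradient clipping threshold, the cost function, and the individual layers. Each layer is defined by its number of nodes and its activation function.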

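```cpp
neural::NetworkParameters params;
params.learning_rate = 1.0;
params.gradient_clip = 1.5;
params.cost_function = neural::quadratic_cost;

// Each entry is {number of nodes, activation function}.
params.layers = {
    {2, nullptr},          // input layer: 2 nodes, no activation function
    {2, neural::lrelu},    // hidden layer: 2 nodes, leaky ReLU
    {1, neural::sigmoid},  // output layer: 1 node, sigmoid
};
```

- Generate your neural network: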

```cpp
neural::Network network(params);
```

- Load your dataset into a vector of `DataSample` structures, each holding `{input, desired output}`:

```cpp
// XOR truth table: {input, desired output}
std::vector<neural::DataSample> examples = {
    {{1.0, 0.0}, {1.0}},
    {{0.0, 1.0}, {1.0}},
    {{0.0, 0.0}, {0.0}},
    {{1.0, 1.0}, {0.0}},
};
```

- Train your network:

```cpp
int epochs = 1000;
for (int i = 0; i < epochs; i++) {
    network.fit(examples);  // one call per epoch
}
```

- Display the output of the network by evaluating some input:

```cpp
for (auto &ex : examples) {
    neural::Matrix output = network.forward(ex.input);

    // Print the inputs, followed by the network's output
    for (auto &val : ex.input) {
        std::cout << int(val) << " ";
    }
    output.print();
}
```

- Save the network to disk:

```cpp
network.save("xor.net");
```

A saved network binary file can also be loaded from disk by calling `network.load("network_file.net")`. This assumes that the network stored on disk has the same structure as the network it is loaded into.
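The structure requirement for `load` can be illustrated with a hypothetical binary format. The sketch below is not NeuralC's file format; `SketchNet`, `save`, and `load` are illustrative names. It writes the layer sizes ahead of the parameters so that a loader can reject a file whose structure differs from the target network.

```cpp
#include <cassert>
#include <cstdint>
#include <istream>
#include <ostream>
#include <sstream>  // std::stringstream: handy in-memory stream for round-trip tests
#include <vector>

// Hypothetical binary layout, NOT NeuralC's actual file format:
//   [uint64 layer count][uint64 size of each layer][every parameter as a raw double]
// Raw native-endian bytes, so files are only portable between similar machines.
struct SketchNet {
    std::vector<std::uint64_t> layer_sizes;  // e.g. {2, 2, 1}
    std::vector<double> params;              // all weights and biases, flattened
};

void save(const SketchNet &net, std::ostream &out) {
    assert(out.good());
    const std::uint64_t count = net.layer_sizes.size();
    out.write(reinterpret_cast<const char *>(&count), sizeof count);
    out.write(reinterpret_cast<const char *>(net.layer_sizes.data()),
              static_cast<std::streamsize>(count * sizeof(std::uint64_t)));
    out.write(reinterpret_cast<const char *>(net.params.data()),
              static_cast<std::streamsize>(net.params.size() * sizeof(double)));
}

// Loads parameters into an existing network whose structure must match the stored
// one; on a mismatch or read error, returns false and leaves `net` unchanged.
bool load(SketchNet &net, std::istream &in) {
    std::uint64_t count = 0;
    if (!in.read(reinterpret_cast<char *>(&count), sizeof count)) return false;
    if (count != net.layer_sizes.size()) return false;

    std::vector<std::uint64_t> sizes(count);
    in.read(reinterpret_cast<char *>(sizes.data()),
            static_cast<std::streamsize>(count * sizeof(std::uint64_t)));
    if (!in || sizes != net.layer_sizes) return false;

    std::vector<double> params(net.params.size());
    in.read(reinterpret_cast<char *>(params.data()),
            static_cast<std::streamsize>(params.size() * sizeof(double)));
    if (!in) return false;

    net.params = params;
    return true;
}
```

To work with an actual file, pass a `std::ofstream` opened with `std::ios::binary` to `save`, and a `std::ifstream` opened the same way to `load`.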

See the comments in the NeuralC source for more information, and the examples for other features.

To do:

- Reimplement hyperparameters for activation and cost functions

- Implement more activation and cost functions, including those involving vector operations

Code and documentation Copyright (c) 2019-2021 Keith Leonardo

Code is released under the BSD 3-Clause License.