Citekit is an open-source, extensible toolkit designed to facilitate the implementation and evaluation of citation generation methods for Large Language Models (LLMs). It offers a modular framework to standardize citation tasks, enabling reproducible and comparable research while fostering the development of new approaches to improve citation quality.

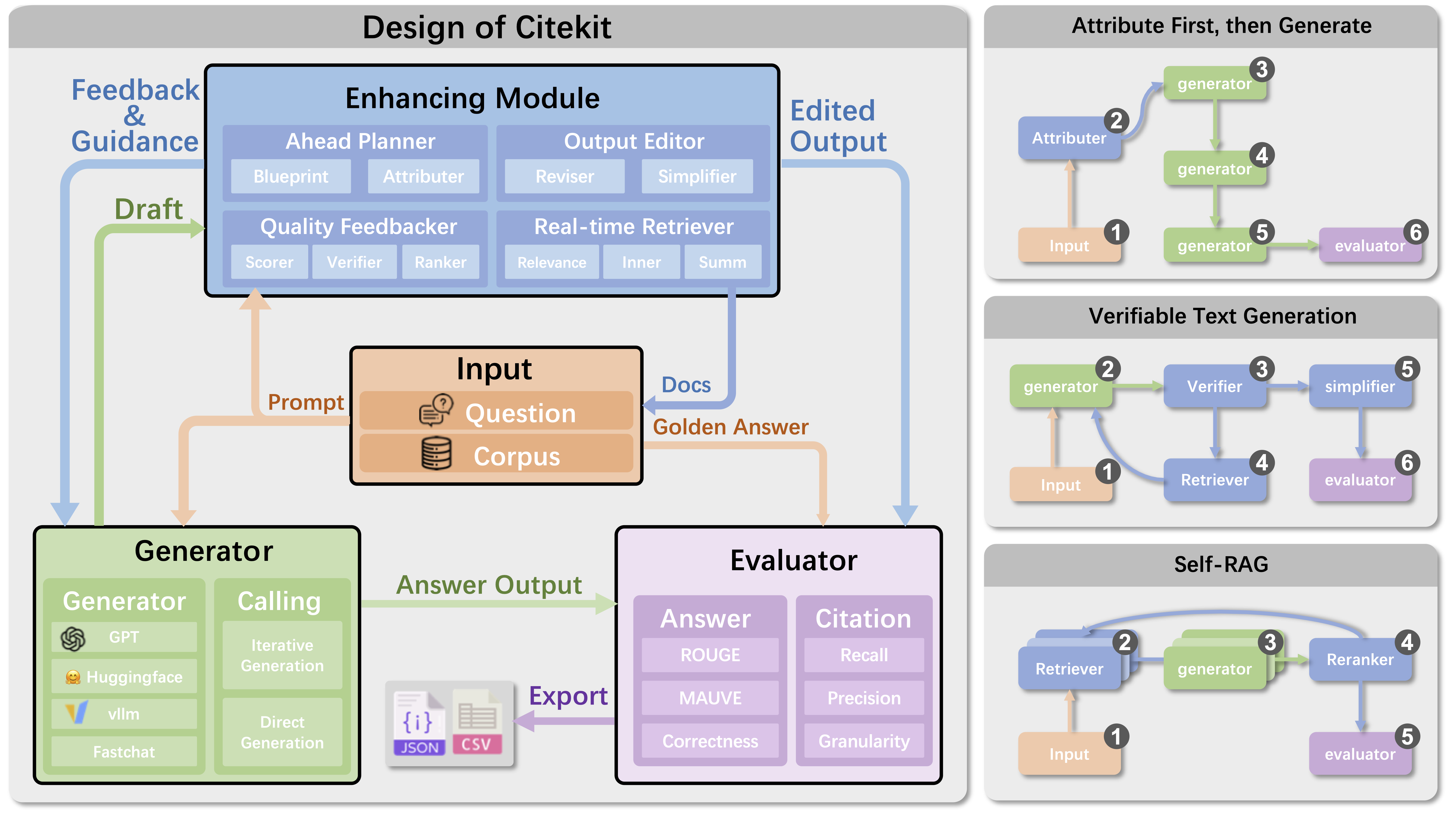

- Modular Design: Citekit is composed of four main modules: INPUT, GENERATION MODULE, ENHANCING MODULE, and EVALUATOR. These modules can be combined to construct pipelines for various citation generation tasks.

- Extensibility: Easily extend Citekit by adding new components or modifying existing ones. The toolkit supports different LLM backends, including Hugging Face models and API-based models such as those from OpenAI.

- Comprehensive Evaluation: Citekit includes predefined metrics for evaluating both answer quality and citation quality, with support for custom metrics.

- Predefined Recipes: The toolkit provides 11 baseline recipes derived from state-of-the-art research, allowing users to quickly implement and compare different citation generation methods.
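The four-module design can be pictured as a chain of stages that pass a shared state forward and are then scored. The following is a minimal, hypothetical sketch in plain Python, not Citekit's API. The names `run_pipeline`, `generate`, `enhance`, `contains_gold`, and `n_citations` are illustrative assumptions. The generator is a stub standing in for an LLM call, and the enhancer simply drops citations to documents that do not exist.

```python
import re
from typing import Callable, Dict, List, Tuple

State = Dict[str, object]
Stage = Callable[[State], State]
Metric = Callable[[State], float]


def generate(state: State) -> State:
    """GENERATION stub: echo the first document and cite documents 1 and 3.

    A real generator would call an LLM; citing [3] simulates a bad citation.
    """
    docs = state["docs"]
    return {**state, "answer": f"{docs[0]} [1][3]"}


def enhance(state: State) -> State:
    """ENHANCING stub: remove citation markers that point to missing documents."""
    n_docs = len(state["docs"])

    def keep_if_valid(match: re.Match) -> str:
        idx = int(match.group(1))
        return match.group(0) if 1 <= idx <= n_docs else ""

    answer = re.sub(r"\[(\d+)\]", keep_if_valid, str(state["answer"]))
    return {**state, "answer": answer}


def contains_gold(state: State) -> float:
    """Custom answer-quality metric: 1.0 if the gold answer appears in the output."""
    return float(str(state["gold"]).lower() in str(state["answer"]).lower())


def n_citations(state: State) -> float:
    """Custom citation metric: number of citation markers left in the answer."""
    return float(len(re.findall(r"\[\d+\]", str(state["answer"]))))


def run_pipeline(stages: List[Stage], inputs: State,
                 metrics: Dict[str, Metric]) -> Tuple[State, Dict[str, float]]:
    """Pass the INPUT through each stage in order, then score it (EVALUATOR)."""
    state = dict(inputs)
    for stage in stages:
        state = stage(state)
    scores = {name: metric(state) for name, metric in metrics.items()}
    return state, scores


if __name__ == "__main__":
    example = {
        "question": "What is the capital of France?",
        "docs": ["Paris is the capital of France.", "France is in Western Europe."],
        "gold": "Paris",
    }
    final, scores = run_pipeline([generate, enhance], example,
                                 {"contains_gold": contains_gold, "n_citations": n_citations})
    print(final["answer"])
    print(scores)
```

Because every stage has the same signature, stages can be reordered, added, or removed without touching the others. This mirrors, in spirit, how Citekit recipes combine modules.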

To install Citekit, clone the repository from GitHub and install the dependencies:

```shell
git clone https://github.com/SjJ1017/Citekit.git
cd Citekit
pip install -r requirements.txt
```

To run an existing pipeline, for example:

```shell
export PYTHONPATH="$PWD"
python methods/ALCE_Vani_Summ_VTG.py --mode text --pr --rouge --qa
```

Some files contain multiple methods; use `--mode` to select the desired one. To enable a predefined metric, pass its name as a flag (for example, `--rouge`). Available metrics include:

- `rouge`, `mauve`, and `length`

- `qa` (for ASQA only), `qampari` (for QAMPARI only), and `claims` (for ELI5 only)

- `pr`: citation precision and recall
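The `pr` metric reports citation precision and recall. The sketch below illustrates what such scores measure, loosely following the definitions popularized by the ALCE benchmark. It is a simplified, hypothetical sketch, not Citekit's implementation.

- Recall credits a statement whose cited passages, taken together, entail it.
- Precision credits each citation of a supported statement unless that citation is irrelevant. A citation is irrelevant when it does not support the statement on its own and the remaining citations already do.

`citation_pr` and `toy_entails` are illustrative names. `toy_entails` is a crude word-overlap stand-in for the NLI model a real evaluator would use.

```python
import re
from typing import Callable, Dict, List, Set, Tuple

Entails = Callable[[str, str], bool]


def _words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def toy_entails(premise: str, hypothesis: str) -> bool:
    """Toy stand-in for NLI: every word of the hypothesis appears in the premise."""
    return _words(hypothesis) <= _words(premise)


def citation_pr(statements: List[Tuple[str, List[int]]],
                passages: Dict[int, str],
                entails: Entails = toy_entails) -> Tuple[float, float]:
    """Return (precision, recall) over (statement, cited passage ids) pairs."""
    recalled = 0
    precise = 0
    total_citations = 0
    for text, cited in statements:
        total_citations += len(cited)
        if not cited:
            continue
        # Recall: do all cited passages together support the statement?
        if not entails(" ".join(passages[i] for i in cited), text):
            continue
        recalled += 1
        for i in cited:
            rest = [j for j in cited if j != i]
            alone = entails(passages[i], text)
            rest_supports = bool(rest) and entails(" ".join(passages[j] for j in rest), text)
            # Irrelevant = unsupported alone AND redundant given the other citations.
            if alone or not rest_supports:
                precise += 1
    recall = recalled / len(statements) if statements else 0.0
    precision = precise / total_citations if total_citations else 0.0
    return precision, recall


if __name__ == "__main__":
    docs = {1: "Paris is the capital of France.",
            2: "The Eiffel Tower is in Paris.",
            3: "Bananas are yellow."}
    output = [("Paris is the capital of France.", [1, 3]),  # [3] is irrelevant
              ("The Eiffel Tower is in Paris.", [2]),
              ("Bananas are blue.", [3])]                    # unsupported
    print(citation_pr(output, docs))
```

In this toy run, two of the three statements are supported and two of the four citations are useful. The example therefore yields a precision of 0.5 and a recall of 2/3.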

Other optional flags:

- `model`: an OpenAI model name or a Hugging Face model path. The default is `gpt-3.5-turbo`; in that case, set `export OPENAI_API_KEY=your_token`.

- `save_path`: the output path for the results.

- `dataset` and `demo`: the dataset file and the demonstration file used for prompts (ASQA by default).

- `ndoc`: the number of documents. Not applicable to methods that do not use a fixed number of documents.

- `shots`: the number of few-shot demonstrations.
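For batch experiments it can help to assemble these invocations programmatically. Below is a hypothetical helper; `build_command`, `run_recipe`, and `KNOWN_METRICS` are not part of Citekit. It spells out only the flags shown above, `--mode` and metric flags such as `--pr`. The optional flags are passed through verbatim in `extra_args`, because their exact command-line spelling should be checked against each script.

```python
import os
import subprocess
import sys
from typing import Dict, List, Optional, Sequence, Tuple

# Metric names listed in this README (some apply to one dataset only).
KNOWN_METRICS = {"rouge", "mauve", "length", "qa", "qampari", "claims", "pr"}


def build_command(script: str, mode: Optional[str], metrics: Sequence[str],
                  extra_args: Sequence[str] = (),
                  repo_root: str = ".") -> Tuple[List[str], Dict[str, str]]:
    """Return (argv, env) for running a recipe script from the repository root."""
    unknown = set(metrics) - KNOWN_METRICS
    if unknown:
        raise ValueError(f"unknown metric(s): {sorted(unknown)}")
    argv = [sys.executable, script]
    if mode is not None:
        argv += ["--mode", mode]
    argv += [f"--{m}" for m in metrics]  # e.g. "pr" -> "--pr"
    argv += list(extra_args)             # optional flags, passed through unchanged
    # Mirror `export PYTHONPATH="$PWD"` so the script can import the package.
    env = dict(os.environ, PYTHONPATH=os.path.abspath(repo_root))
    return argv, env


def run_recipe(argv: List[str], env: Dict[str, str]) -> subprocess.CompletedProcess:
    """Run the command; raises CalledProcessError on a non-zero exit status."""
    return subprocess.run(argv, env=env, check=True)


if __name__ == "__main__":
    argv, env = build_command("methods/ALCE_Vani_Summ_VTG.py", "text",
                              ["pr", "rouge", "qa"])
    print(argv[1:])
    # run_recipe(argv, env)  # needs the repository and, for OpenAI models, OPENAI_API_KEY
```

Validating metric names up front catches typos before an expensive model run starts.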

To construct a pipeline, follow the steps in the `demonstration.ipynb` file or our video on YouTube. A simple example is presented below:

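```python
import json

# FileDataset, Prompt, DefaultEvaluator, AttributingModule, LLM and Sequence are
# Citekit classes; import them from the citekit package as in demonstration.ipynb.

# Load the ASQA dataset and the prompt demonstrations
dataset = FileDataset('data/asqa.json')

with open('prompts/asqa.json', 'r', encoding='utf-8') as file:
    demo = json.load(file)

instruction = demo['INST']

# The template orders the named components; each component is a format string
prompt = Prompt(template='<INST><question><docs><prefix><span>Answer: ',
                components={'INST': '{INST}\n\n',
                            'question': 'Question:{question}\n\n',
                            'docs': '{docs}\n',
                            'span': 'The highlighted spans are: \n{span}\n\n',
                            'prefix': 'Prefix: {prefix}\n\n',
                            })

# Answer-quality metrics to report
evaluator = DefaultEvaluator(criteria=['str_em', 'length', 'rouge'])

# Created here but not used by this one-step pipeline
attributer = AttributingModule(model='gpt-3.5-turbo')

# self_prompt supplies the INST component from the demonstration file
llm = LLM(model='gpt-3.5-turbo', prompt_maker=prompt, self_prompt={'INST': instruction})

# A one-step pipeline: the LLM is the only module in the sequence
pipeline = Sequence(sequence=[llm], head_prompt_maker=prompt, evaluator=evaluator, dataset=dataset)

pipeline.run_on_dataset(datakeys=['question', 'docs'], init_docs='docs')
```

We welcome contributions to improve Citekit. Please submit pull requests or open issues on the GitHub repository.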

This project is licensed under the MIT License - see the LICENSE file for details.