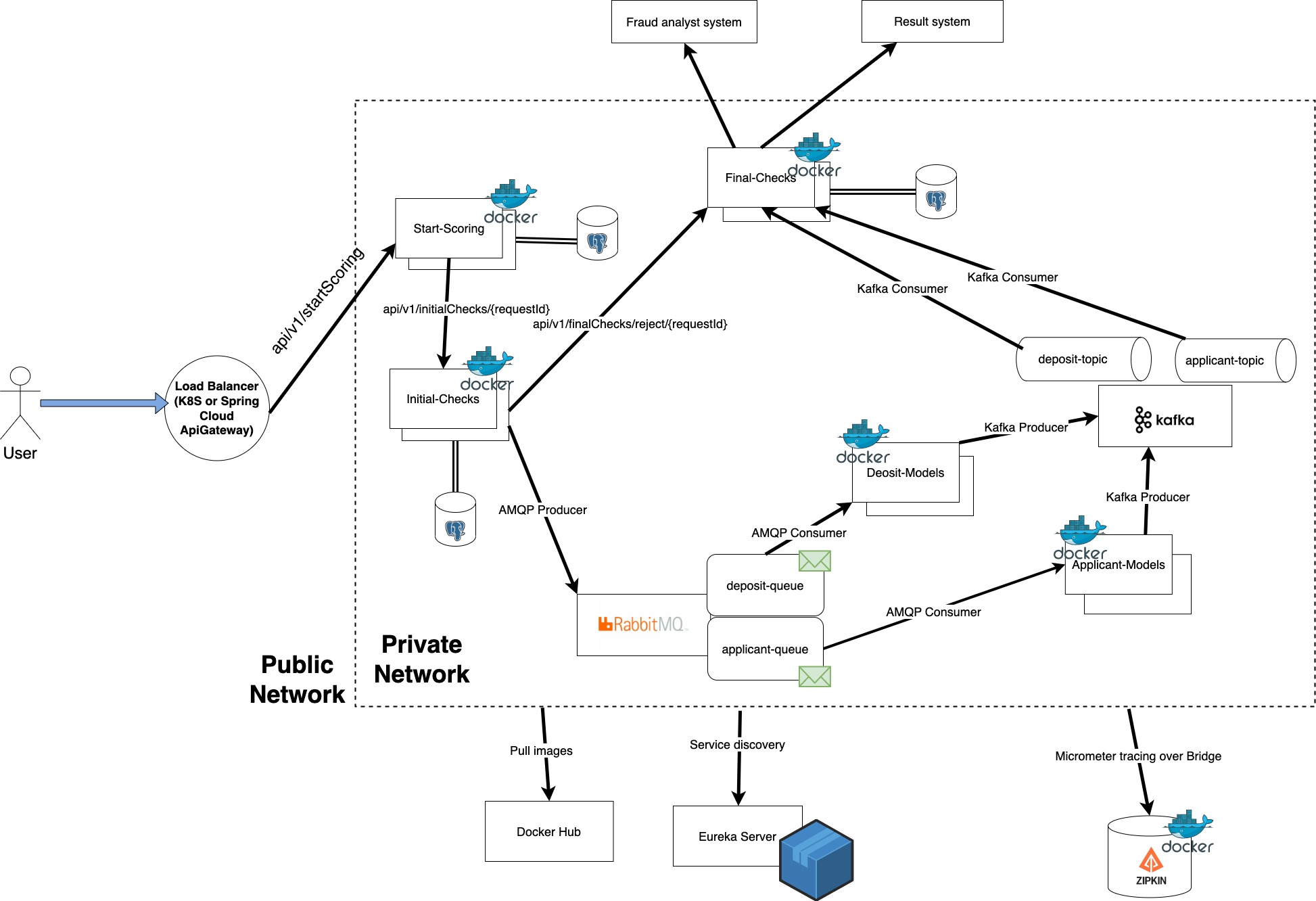

- This system models the overall flow by which banks score and assess clients and deposits. It is not an exact copy of a production system, but a blueprint that can be used as a plan.

- While building it, I simulated some of the integrations that such a process usually involves.

Architecture

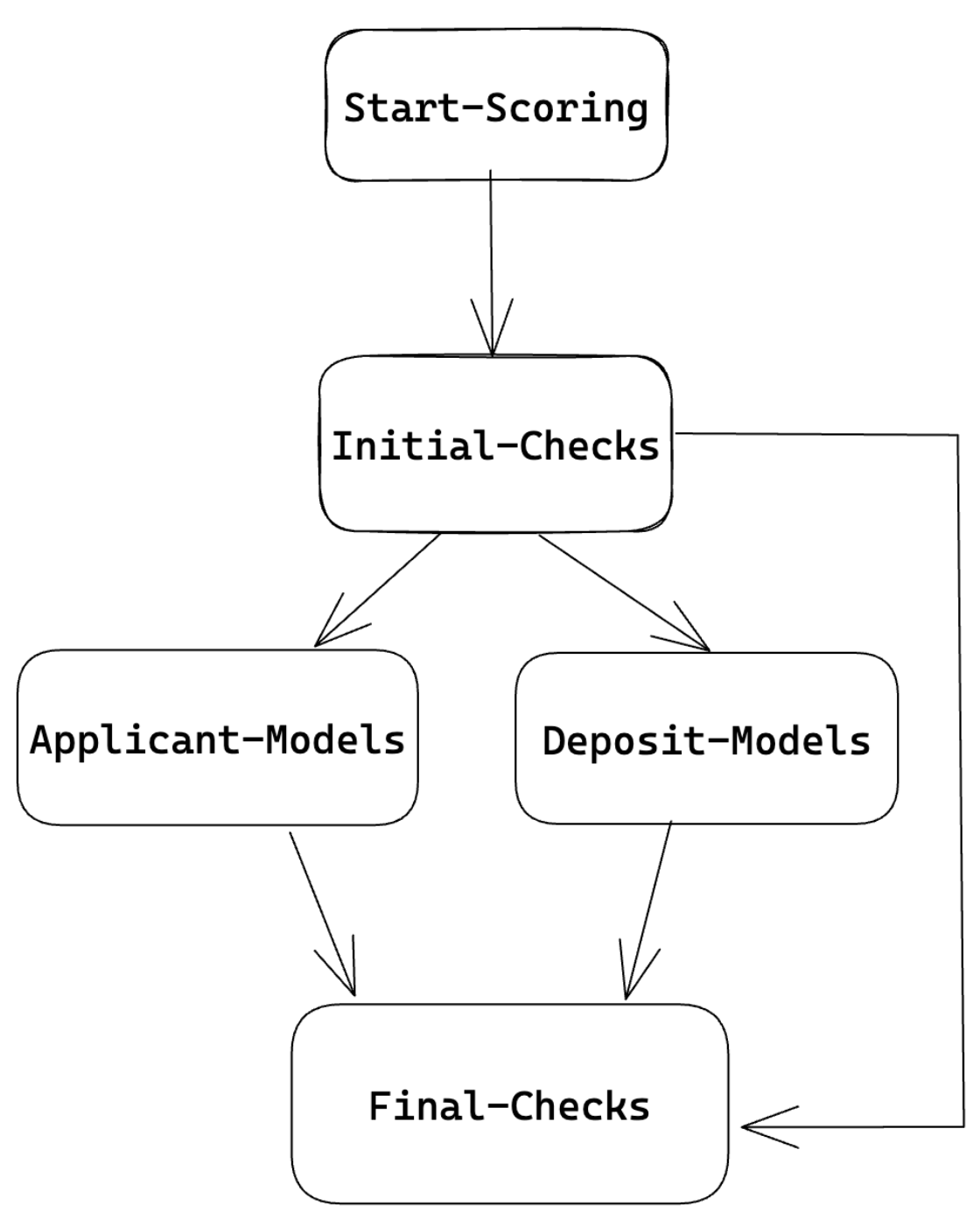

- The system uses a microservices architecture, which lets each service focus on its own domain, in line with Domain-Driven Design (DDD).

- A microservices architecture also helps when some parts of the system should fail fast while others should wait until a dependent service is available again. Such services are also much easier to deploy, either as Docker containers or as pods in Kubernetes (K8s).

Tech stack

- Java 17. Java is used because it is one of the most common languages for such systems, thanks to its compile-time checks and backward compatibility. Version 17 was chosen as the latest LTS release at the time of writing. As usual, I use the `Lombok` library to minimize boilerplate code.

- The project is built with Maven. Following best practice, there is a main `pom.xml` with parent dependencies, and each child `pom` either inherits from the parent `pom` or declares its own dependencies.

  - Some services are used as dependencies in other services' `pom`s. This either separates concerns or reduces code duplication, since the shared code may be used in multiple services.

  - Services that are Spring Boot applications (rather than supporting libraries) configure 3 main plugins:

    - `maven-compiler-plugin` for compiling the code

    - `spring-boot-maven-plugin` for creating a standalone application that can run on its own

    - `jib-maven-plugin` for building Docker images and pushing them to a Docker registry, in this case Docker Hub


- Spring Boot and Spring Cloud are the main frameworks. The former runs the applications, while the latter is used mainly for Feign and the API Gateway (the gateway was added as an extra feature to show how Spring Cloud supports building one).

- RabbitMQ and Kafka are used as message brokers. RabbitMQ, a queue-based broker, decouples the `initial-checks` service from the 2 AI services, `applicant-models` and `deposit-models`. Kafka decouples those 2 AI services from the `final-checks` service.

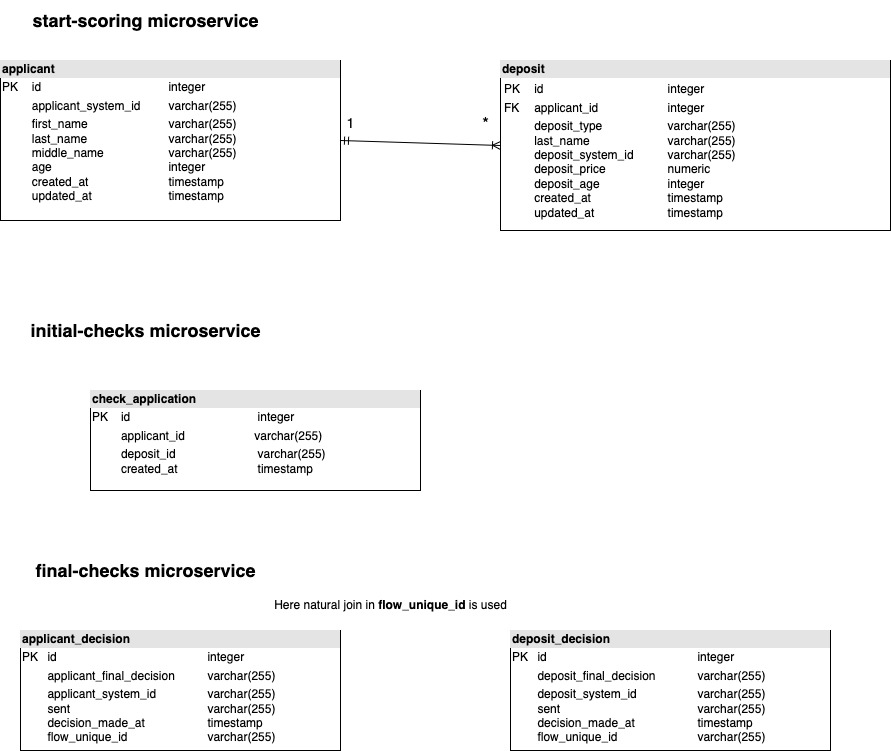

- Each service that needs persistence has its own PostgreSQL database. The database-per-service pattern is used as good practice: no service may use another service's database directly, only through the owning service.

- For data access I use Hibernate, not directly but through Spring Data JPA, with the traditional `@Entity` and `@Repository` annotations.

- Observability is an important factor, so I implemented logs, metrics, and tracing:

  - TODO: logs are to be stored in the database

  - metrics: Spring Boot Actuator

  - tracing: Micrometer Tracing with Brave, and Zipkin for visualization

- `start-scoring`

  - This service accepts requests from the outside world (either through the API Gateway or directly).

  - A request carries 2 objects, an applicant and a deposit. The service saves these 2 entities into 2 tables in its database.

  - It then sends a request to the `initial-checks` service and waits for the response.

  - When the response comes back, the `start-scoring` service updates the last interaction in those 2 tables in every case. There are 2 main outcomes:

    - it returns 200 OK to the user, meaning the data has been accepted and handed over to the Thread Pool Executor

    - an error occurred, and the service catches it and returns the error
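The `start-scoring` steps above can be sketched in plain Java without Spring. This is only an illustration under stated assumptions: `StartScoringSketch`, `InitialChecksClient`, `Response`, the in-memory maps standing in for the 2 tables, and the 500 status used for the error case are all hypothetical and not taken from the project.

```java
import java.time.Instant;
import java.util.HashMap;
import java.util.Map;

// Hypothetical, framework-free sketch of the start-scoring flow.
class StartScoringSketch {

    // Stand-in for the HTTP client that calls initial-checks (assumed name).
    interface InitialChecksClient {
        void submit(long applicantId, long depositId); // throws RuntimeException on failure
    }

    // Simplified response: an HTTP-like status code and a message.
    record Response(int status, String message) {}

    // In-memory stand-ins for the applicant and deposit tables: id -> last interaction.
    final Map<Long, Instant> applicantTable = new HashMap<>();
    final Map<Long, Instant> depositTable = new HashMap<>();

    private final InitialChecksClient client;

    StartScoringSketch(InitialChecksClient client) {
        this.client = client;
    }

    Response startScoring(long applicantId, long depositId) {
        // 1. Persist both entities.
        applicantTable.put(applicantId, Instant.now());
        depositTable.put(depositId, Instant.now());
        try {
            // 2. Call initial-checks and wait for its response.
            client.submit(applicantId, depositId);
            return new Response(200, "Accepted for processing");
        } catch (RuntimeException ex) {
            // 3. On failure, present the error to the caller (status code is an assumption).
            return new Response(500, "Scoring could not be started: " + ex.getMessage());
        } finally {
            // The last interaction is updated in both tables in every case.
            applicantTable.put(applicantId, Instant.now());
            depositTable.put(depositId, Instant.now());
        }
    }

    public static void main(String[] args) {
        StartScoringSketch ok = new StartScoringSketch((a, d) -> { });
        System.out.println(ok.startScoring(1L, 10L).status());

        StartScoringSketch failing = new StartScoringSketch((a, d) -> {
            throw new IllegalStateException("initial-checks unavailable");
        });
        System.out.println(failing.startScoring(2L, 20L).status());
    }
}
```

The `finally` block mirrors the rule that the last interaction is updated regardless of the outcome.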

- `initial-checks`

  - This service runs a complex flow with many checks on the applicant and the deposit.

  - First, it accepts an HTTP request from the `start-scoring` service and saves the data to its own database, which has a single table holding `applicantId`, `depositId` and `createdAt`.

  - It then splits the request into 2 parts: applicant and deposit. 2 separate Thread Pool Executors are started, and a chain of checks assesses each of the 2 entities. In the current version these checks only write a `log` entry and serve as a template (plus a random case in which a REJECT decision is made during the checks). Each of the 2 flows has a `FlowContext` variable that is passed along the flow and keeps all the required data about the applicant or deposit.

  - If some check is triggered, it uses a method in the `CheckAction` abstract class, which in turn calls another `@Service` that sends a request to the `final-checks` service, bypassing all AI model services (a FeignClient is used here). `final-checks` then takes over: it persists the data and sends a request to the required system.

  - If all checks pass, the data is sent to 2 different RabbitMQ queues using a producer: one for the applicant and one for the deposit.
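As a rough illustration of the `initial-checks` flow, the sketch below runs an applicant flow and a deposit flow on 2 separate thread pools. It is a simplification under assumptions: checks are modelled as hypothetical `Predicate<FlowContext>` values (returning `true` means the check was triggered), and 2 in-memory lists stand in for the RabbitMQ producer and the Feign call to `final-checks`. The sketch waits for both flows only so that the result can be printed.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Predicate;

// Hypothetical sketch: two flows, each on its own thread pool.
class InitialChecksSketch {

    // Carries the data of one flow along its checks (simplified).
    record FlowContext(String flowName, long entityId) {}

    // Stand-ins for the RabbitMQ producer and the Feign call to final-checks.
    final List<String> queueMessages = new CopyOnWriteArrayList<>();
    final List<String> finalChecksRequests = new CopyOnWriteArrayList<>();

    // Runs the checks in order; a check returning true means it was triggered.
    void runFlow(FlowContext ctx, List<Predicate<FlowContext>> checks) {
        for (Predicate<FlowContext> check : checks) {
            if (check.test(ctx)) {
                // A triggered check bypasses the model services entirely.
                finalChecksRequests.add(ctx.flowName() + ":" + ctx.entityId() + ":REJECT");
                return;
            }
        }
        // No check was triggered: hand the entity over to the model service queue.
        queueMessages.add(ctx.flowName() + ":" + ctx.entityId());
    }

    void process(long applicantId, long depositId,
                 List<Predicate<FlowContext>> applicantChecks,
                 List<Predicate<FlowContext>> depositChecks) {
        ExecutorService applicantPool = Executors.newFixedThreadPool(2);
        ExecutorService depositPool = Executors.newFixedThreadPool(2);
        try {
            CompletableFuture<Void> applicantFlow = CompletableFuture.runAsync(
                    () -> runFlow(new FlowContext("applicant", applicantId), applicantChecks),
                    applicantPool);
            CompletableFuture<Void> depositFlow = CompletableFuture.runAsync(
                    () -> runFlow(new FlowContext("deposit", depositId), depositChecks),
                    depositPool);
            // Wait for both flows so the demo output is deterministic.
            CompletableFuture.allOf(applicantFlow, depositFlow).join();
        } finally {
            applicantPool.shutdown();
            depositPool.shutdown();
        }
    }

    public static void main(String[] args) {
        InitialChecksSketch service = new InitialChecksSketch();
        List<Predicate<FlowContext>> passing = List.of(ctx -> false);
        List<Predicate<FlowContext>> rejecting = List.of(ctx -> false, ctx -> true);
        service.process(1L, 2L, passing, rejecting);
        System.out.println(service.queueMessages);
        System.out.println(service.finalChecksRequests);
    }
}
```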

- `applicant-models`

  - This service is responsible for scoring the applicant using AI models.

  - It has a Rabbit listener (consumer) that listens to a specific queue.

  - When new data arrives, it kicks off scoring with the models. The current version does not contain any AI models yet.

  - Once scoring is done and a decision is made, the data is sent to the Kafka topic dedicated to applicants.

- `deposit-models`

  - This service is responsible for scoring the deposit using AI models.

  - It also has a Rabbit listener (consumer) that listens to a specific queue.

  - When new data arrives, it kicks off scoring with the models. The current version does not contain any AI models yet.

  - Once scoring is done and a decision is made, the data is sent to the Kafka topic dedicated to deposits.
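Both model services follow the same consume, score, publish pattern, which the sketch below illustrates without any broker. Everything here is an assumption made for illustration: a `BlockingQueue` stands in for the RabbitMQ queue, a plain list for the Kafka topic, and the `model` function is a placeholder, since the current version has no AI models.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Function;

// Hypothetical sketch of a model service: consume, score, publish.
class ModelServiceSketch {

    enum Decision { ACCEPT, REJECT, MANUAL }

    // Message published after scoring (simplified).
    record ScoredMessage(long entityId, Decision decision) {}

    private final BlockingQueue<Long> inboundQueue;   // stand-in for the RabbitMQ queue
    private final List<ScoredMessage> outboundTopic;  // stand-in for the Kafka topic
    private final Function<Long, Decision> model;     // placeholder for the AI model

    ModelServiceSketch(BlockingQueue<Long> inboundQueue,
                       List<ScoredMessage> outboundTopic,
                       Function<Long, Decision> model) {
        this.inboundQueue = inboundQueue;
        this.outboundTopic = outboundTopic;
        this.model = model;
    }

    // Drains everything currently in the queue and publishes one decision per message.
    int consumeAvailable() {
        int handled = 0;
        Long entityId;
        while ((entityId = inboundQueue.poll()) != null) {
            outboundTopic.add(new ScoredMessage(entityId, model.apply(entityId)));
            handled++;
        }
        return handled;
    }

    public static void main(String[] args) {
        BlockingQueue<Long> queue = new LinkedBlockingQueue<>();
        List<ScoredMessage> topic = new ArrayList<>();
        ModelServiceSketch service = new ModelServiceSketch(queue, topic, id -> Decision.ACCEPT);
        queue.add(42L);
        service.consumeAvailable();
        System.out.println(topic);
    }
}
```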

- `final-checks`

  - In this service, Kafka listeners consume new data from 2 topics: one for applicants and one for deposits.

  - When new data arrives, it is persisted to the database.

  - Important: the application uses a natural join here instead of a traditional foreign key, because the 2 topics are unrelated and it would be much more difficult and resource-consuming to use ids.

  - A scheduled service runs at a configured interval and selects data from the database based on the Sent status. A lock is applied so that no race condition occurs in which the same data is sent twice. There are 2 main outcomes:

    - the decision is ACCEPT or REJECT: the data is sent to the system that handles decisions

    - the decision is MANUAL: the data is sent to a fraud analyst

  - It also has an endpoint for the `initial-checks` service, which receives requests when an application has failed some check in that service. In this case the data is persisted with a REJECT decision.

    - Important: as mentioned earlier, there are 2 separate tables, which the scheduled service JOINs using a natural join.
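The scheduled dispatch in `final-checks` could look roughly like the sketch below. It rests on several assumptions that the description does not confirm: the 2 tables are modelled as maps sharing a hypothetical `applicationId` key (standing in for the natural-join column), the rule for combining the 2 decisions is invented for the example, and an in-process `ReentrantLock` stands in for whatever lock the service actually applies.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical sketch of the scheduled job in final-checks.
class FinalChecksSketch {

    enum Decision { ACCEPT, REJECT, MANUAL }

    // Stand-ins for the 2 tables, keyed by an assumed shared applicationId.
    final Map<String, Decision> applicantDecisions = new ConcurrentHashMap<>();
    final Map<String, Decision> depositDecisions = new ConcurrentHashMap<>();
    // Stand-in for the Sent status column.
    final Set<String> sent = ConcurrentHashMap.newKeySet();
    // Stand-ins for the 2 downstream targets.
    final List<String> decisionSystem = new ArrayList<>();
    final List<String> fraudAnalyst = new ArrayList<>();

    private final ReentrantLock lock = new ReentrantLock();

    // Assumed rule: REJECT wins over MANUAL, MANUAL wins over ACCEPT.
    static Decision combine(Decision applicant, Decision deposit) {
        if (applicant == Decision.REJECT || deposit == Decision.REJECT) return Decision.REJECT;
        if (applicant == Decision.MANUAL || deposit == Decision.MANUAL) return Decision.MANUAL;
        return Decision.ACCEPT;
    }

    // Body of the scheduled job: join both tables on the key and send unsent rows once.
    int dispatchUnsent() {
        if (!lock.tryLock()) {
            return 0; // another run is still in progress, skip this tick
        }
        try {
            int dispatched = 0;
            for (Map.Entry<String, Decision> row : applicantDecisions.entrySet()) {
                String key = row.getKey();
                Decision deposit = depositDecisions.get(key);
                if (deposit == null || sent.contains(key)) {
                    continue; // no matching deposit row yet, or already sent
                }
                Decision finalDecision = combine(row.getValue(), deposit);
                if (finalDecision == Decision.MANUAL) {
                    fraudAnalyst.add(key);
                } else {
                    decisionSystem.add(key + ":" + finalDecision);
                }
                sent.add(key);
                dispatched++;
            }
            return dispatched;
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) {
        FinalChecksSketch job = new FinalChecksSketch();
        job.applicantDecisions.put("app-1", Decision.ACCEPT);
        job.depositDecisions.put("app-1", Decision.MANUAL);
        System.out.println(job.dispatchUnsent() + " dispatched, analyst queue = " + job.fraudAnalyst);
    }
}
```

With several service instances an in-process lock is not sufficient, so a database-level or distributed lock would be needed there.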

- Below is the ER diagram of the databases used by the microservices.

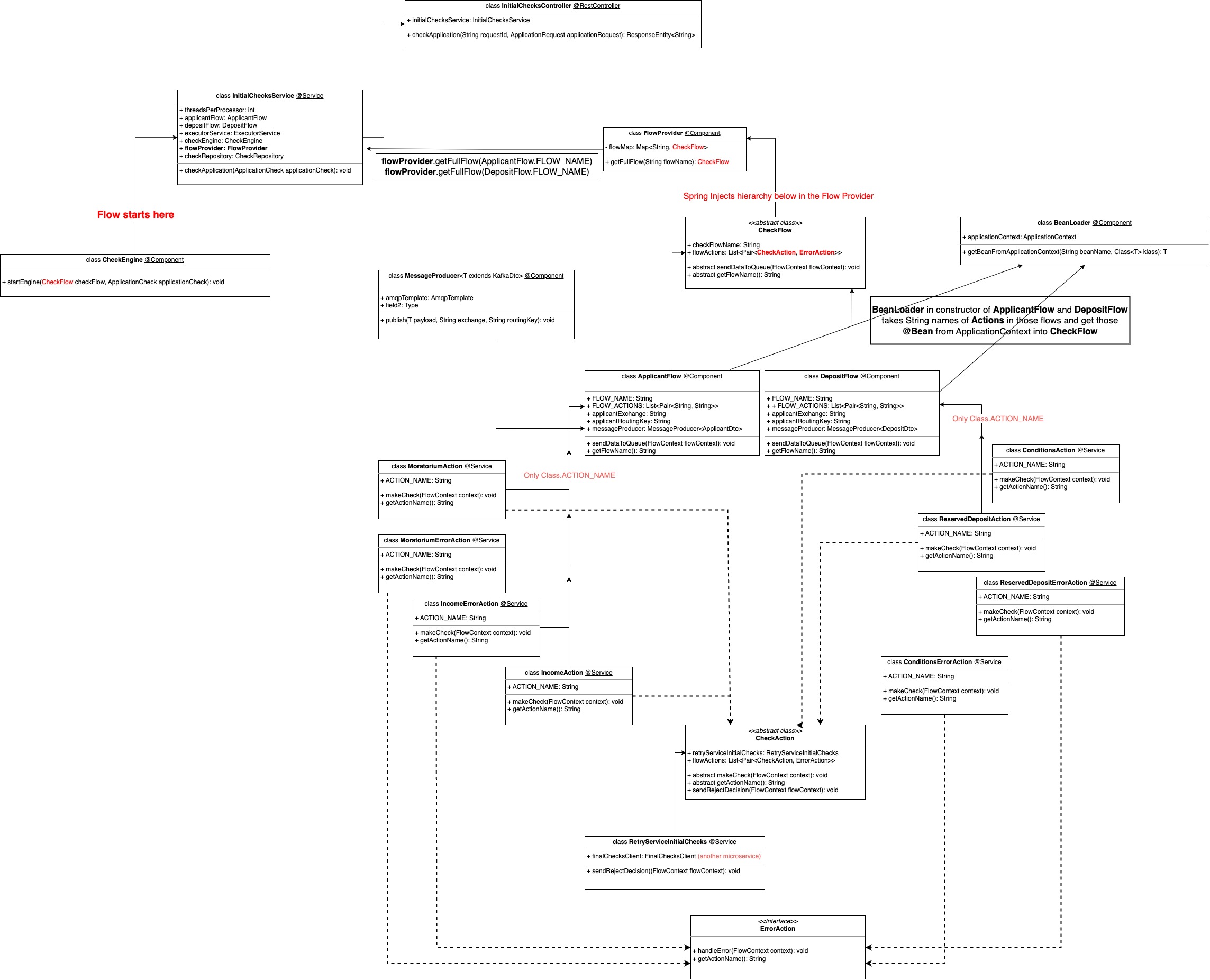

- The class UML diagram depicts how the classes in the flow depend on each other.

- Everything starts with `InitialChecksController`. It accepts a request from the `StartScoring` service and persists it in a dedicated table.

- Then `InitialChecksService` kicks in and obtains 2 separate flows for applicant and deposit: `ApplicantFlow` and `DepositFlow`, which share one parent, `CheckFlow`:

  - Each flow holds the String names of its Actions and ErrorActions. The constructor of each flow uses a `BeanLoader` class that accepts the String name of a class and a class type (here I use the parent types `CheckAction` and `ErrorAction`) and fetches the matching beans from the Spring ApplicationContext (these actions are `@Service` beans). The loaded actions are then passed to `CheckFlow`, where they are attached to `flowName` and `flowActions`, which are obtained from the ApplicationContext.

  - Afterwards, `CheckFlow` is autowired into `FlowProvider` via the `CheckFlow` parent type, so its children `ApplicantFlow` and `DepositFlow` are injected because they are `@Component` classes. This activates the chain above.

- More about the `CheckAction` hierarchy: the `CheckAction` abstract class and the `ErrorAction` interface both have children in the hierarchy. For now, they can be found in `com.initialchecks.process.flow.checkactions`.

  - Each child of `CheckAction` contains checks that verify the applicant or deposit. If some check is triggered, it uses the `RetryServiceInitialChecks` `@Service` to make a REST request to the `Final-Checks` microservice.

  - If no check is triggered, a message is produced to the applicant and deposit queues in RabbitMQ.

- `CheckEngine` iterates over the `CheckAction` children and runs each action via the abstract method declared in the abstract class.

  - If an error is caught, `CheckEngine` switches to the corresponding error action.
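The name-based lookup performed by `BeanLoader` can be sketched as follows. This is not the project's class: a plain map stands in for the Spring ApplicationContext, and `AgeCheck` is a hypothetical action invented for the example. In a Spring application the same lookup is available through `ApplicationContext.getBean(String name, Class<T> requiredType)`.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of resolving actions by String name and expected type.
class BeanLoaderSketch {

    // Simplified parent type of all checks.
    static abstract class CheckAction {
        abstract String getActionName();
    }

    // Hypothetical concrete check used only for the demo.
    static class AgeCheck extends CheckAction {
        @Override
        String getActionName() {
            return "ageCheck";
        }
    }

    // Stand-in for the Spring ApplicationContext: bean name -> bean instance.
    private final Map<String, Object> context = new LinkedHashMap<>();

    void register(String name, Object bean) {
        context.put(name, bean);
    }

    // Looks up one bean by name and verifies it has the expected type.
    <T> T load(String name, Class<T> type) {
        Object bean = context.get(name);
        if (bean == null) {
            throw new IllegalArgumentException("No bean named " + name);
        }
        if (!type.isInstance(bean)) {
            throw new IllegalArgumentException(
                    "Bean " + name + " is not of type " + type.getSimpleName());
        }
        return type.cast(bean);
    }

    // Resolves a list of names in order, as a flow constructor might do.
    <T> List<T> loadAll(List<String> names, Class<T> type) {
        List<T> beans = new ArrayList<>();
        for (String name : names) {
            beans.add(load(name, type));
        }
        return beans;
    }

    public static void main(String[] args) {
        BeanLoaderSketch loader = new BeanLoaderSketch();
        loader.register("ageCheck", new AgeCheck());
        List<CheckAction> actions = loader.loadAll(List.of("ageCheck"), CheckAction.class);
        System.out.println(actions.get(0).getActionName());
    }
}
```

Keeping only names in the flow definition means a flow can be reordered or extended without changing the code that runs it.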

- Example of flow iteration with error handling:

```java
// Run every check in order; on failure, log and delegate to the paired error action.
for (Pair<CheckAction, ErrorAction> actionPair : actions) {
    final CheckAction action = actionPair.getFirst();
    final ErrorAction errorAction = actionPair.getSecond();
    try {
        action.makeCheck(flowContext);
    } catch (RuntimeException ex) {
        // The trailing exception argument is logged together with its stack trace.
        log.error("Error while processing flow = {}, action = {}",
                flowContext.getFlowName(),
                action.getActionName(),
                ex
        );
        errorAction.handleError(flowContext);
    }
}
```
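For experimenting with this loop outside the project, a self-contained variant might look like the sketch below. The types are minimal stand-ins, not the project's classes: `ActionPair` replaces `Pair`, `FlowContext` only records events, `System.err` replaces the logger, and `PassingCheck` and `FailingCheck` are hypothetical actions.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, self-contained variant of the check loop with stand-in types.
class CheckEngineSketch {

    // Minimal context that records what happened during the flow.
    static class FlowContext {
        final String flowName;
        final List<String> events = new ArrayList<>();

        FlowContext(String flowName) {
            this.flowName = flowName;
        }
    }

    static abstract class CheckAction {
        abstract void makeCheck(FlowContext ctx);

        String getActionName() {
            return getClass().getSimpleName();
        }
    }

    interface ErrorAction {
        void handleError(FlowContext ctx);
    }

    // Stand-in for Pair<CheckAction, ErrorAction>.
    record ActionPair(CheckAction action, ErrorAction errorAction) {}

    static class PassingCheck extends CheckAction {
        @Override
        void makeCheck(FlowContext ctx) {
            ctx.events.add(getActionName() + " ok");
        }
    }

    static class FailingCheck extends CheckAction {
        @Override
        void makeCheck(FlowContext ctx) {
            throw new IllegalStateException("simulated failure");
        }
    }

    // Same control flow as the loop above: a failure triggers the paired
    // error action, and the iteration then continues with the next pair.
    static void run(FlowContext ctx, List<ActionPair> actions) {
        for (ActionPair pair : actions) {
            try {
                pair.action().makeCheck(ctx);
            } catch (RuntimeException ex) {
                System.err.printf("Error while processing flow = %s, action = %s: %s%n",
                        ctx.flowName, pair.action().getActionName(), ex.getMessage());
                pair.errorAction().handleError(ctx);
            }
        }
    }

    public static void main(String[] args) {
        FlowContext ctx = new FlowContext("applicant");
        run(ctx, List.of(
                new ActionPair(new FailingCheck(), c -> c.events.add("error handled")),
                new ActionPair(new PassingCheck(), c -> c.events.add("not reached"))));
        System.out.println(ctx.events);
    }
}
```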