Paper link: Smartforms

Initiation, monitoring, and evaluation of development programmes can involve field-based data collection about project activities. Collecting this data on digital devices is not always feasible, however: field-based cadre may be unable to afford smartphones and tablets, or may lack the necessary training and capacity building. Paper-based data collection, with the paper forms digitized automatically through OCR (Optical Character Recognition) and OMR (Optical Mark Recognition) techniques, has been argued to be more appropriate in several contexts. We contribute a large dataset of handwritten digits, together with deep-learning-based models and methods built using this data, that are effective in real-world environments. We demonstrate the deployment of these tools in a maternal and child health and nutrition awareness project that uses IVR (Interactive Voice Response) systems to provide awareness information to rural women who are members of SHGs (Self Help Groups) in north India. Paper forms were used to collect the phone numbers of SHG members at scale; these forms were digitized using our OCR tools, and the numbers were used to place almost 4 million phone calls.

Handwritten Digit Dataset - ✅

Code and pretrained models - ✅

Library for designing new forms - ❌ (coming soon!)

End-to-end code for homography-based ROI extraction and recognition - ❌ (coming soon!)

- python >= 3.8 (pandas 1.4.2 requires Python 3.8+)
- tensorflow >= 2.0
- tensorflow-addons
- numpy==1.19.5
- pandas==1.4.2
- Pillow (PIL)
- OpenCV (opencv-python)

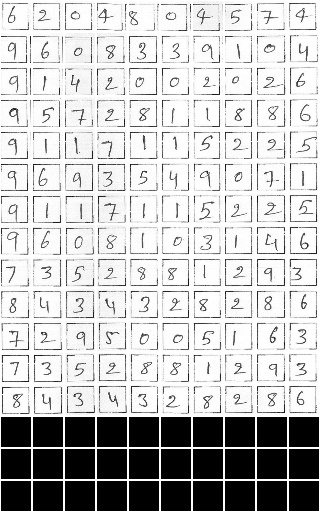

The dataset is organized as grids of 16 × 10 cells, each cell holding one handwritten digit. Each cell is 32 × 32 pixels: the digit image occupies the central 30 × 30 pixels and is surrounded by a 1-pixel white (255) border. A cell is empty if no digit was written in it.
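A minimal NumPy sketch of cutting a grid image into per-digit crops under this layout. It assumes a single-channel (grayscale) image of exactly 16 rows × 10 columns of 32 × 32 cells with no outer margin; the orientation (16 rows rather than 16 columns), the helper names `split_grid` and `is_empty`, and the emptiness threshold are hypothetical choices for illustration, not the repository's code. A grid image could be loaded as such an array with `np.array(Image.open(path).convert("L"))` from Pillow.

```python
import numpy as np

CELL = 32    # cell size in pixels, per the dataset description
BORDER = 1   # white (255) border around each 30x30 digit


def split_grid(grid: np.ndarray, rows: int = 16, cols: int = 10) -> np.ndarray:
    """Cut a grayscale grid image into per-cell 30x30 digit crops.

    Hypothetical: assumes `rows` x `cols` cells, read row by row, and no
    margin around the grid. Returns an array of shape (rows * cols, 30, 30).
    """
    expected = (rows * CELL, cols * CELL)
    if grid.shape != expected:
        raise ValueError(f"expected shape {expected}, got {grid.shape}")
    # (rows*CELL, cols*CELL) -> (rows, CELL, cols, CELL) -> (rows, cols, CELL, CELL)
    cells = grid.reshape(rows, CELL, cols, CELL).swapaxes(1, 2)
    # Strip the 1-pixel border to recover the 30x30 digit image.
    digits = cells[:, :, BORDER:CELL - BORDER, BORDER:CELL - BORDER]
    size = CELL - 2 * BORDER
    return digits.reshape(rows * cols, size, size)


def is_empty(digit: np.ndarray, threshold: int = 250) -> bool:
    """Hypothetical heuristic: a cell is empty if no pixel is darker than `threshold`."""
    return not bool(np.any(digit < threshold))
```

For example, a blank 512 × 320 grid with one dark pixel at (40, 40) yields 160 crops of shape (30, 30), of which only crop 11 (row 1, column 1) is non-empty. A real scan would likely need a noise-tolerant emptiness test rather than a single pixel threshold.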

Fig.2 - Single Digit Dataset

Download the datasets from here: Gdrive

Clone the repository:

```shell
git clone https://github.com/Smartforms2022/Smartforms.git
```

Download the dataset from the above link and put all the files in the data folder.

Move into the `Single_Digit_Recognition` directory:

```shell
cd Single_Digit_Recognition
```

Train the model by running `train.sh`. This script specifies the location of the image folder, the ground-truth file, the train/val/test split file, and the directory in which to save the weights; modify these paths as necessary. If `None` is given for the split file, the code generates a new train/val/test split. Training runs in two stages: `train_embedding.py` first trains the embedding model, and `train_classifier.py` then trains the classifier, receiving the embedding weights (`embedding.h5`) through `--weight_path`:

```shell
python ./code/train_embedding.py \
  --image_folder_path=./data/form2 \
  --output_path=./weights/form2 \
  --ground_truth_path=./data/form2_gt.txt \
  --split_path=./data/form2_split3.pkl

python ./code/train_classifier.py \
  --image_folder_path=./data/form2 \
  --output_path=./weights/form2 \
  --ground_truth_path=./data/form2_gt.txt \
  --split_path=./data/form2_split3.pkl \
  --weight_path=./weights/form2/embedding.h5
```
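When no split file is supplied, a fresh train/val/test split has to be generated. The sketch below shows one straightforward way to do that with a seeded random permutation and to persist it with `pickle`. The dictionary layout, the 80/10/10 default proportions, and the function names `make_split` and `save_split` are assumptions; the repository's own `.pkl` split files may be structured differently.

```python
import pickle

import numpy as np


def make_split(n_samples: int, val_frac: float = 0.1, test_frac: float = 0.1,
               seed: int = 0) -> dict:
    """Randomly partition sample indices 0..n_samples-1 into train/val/test.

    Hypothetical format: a dict mapping split name -> list of sample indices.
    """
    if val_frac < 0 or test_frac < 0 or val_frac + test_frac >= 1:
        raise ValueError("val_frac and test_frac must be >= 0 and sum to < 1")
    rng = np.random.default_rng(seed)  # fixed seed -> reproducible split
    idx = rng.permutation(n_samples)
    n_test = int(round(n_samples * test_frac))
    n_val = int(round(n_samples * val_frac))
    return {
        "test": idx[:n_test].tolist(),
        "val": idx[n_test:n_test + n_val].tolist(),
        "train": idx[n_test + n_val:].tolist(),
    }


def save_split(split: dict, path: str) -> None:
    """Write the split to disk so later runs reuse the same partition."""
    with open(path, "wb") as f:
        pickle.dump(split, f)
```

Fixing the seed makes the split reproducible, so models trained in separate runs are evaluated on the same held-out samples.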

Run `test.sh` to evaluate the trained model. Weights for the trained models are included in the `weights` folder; modify the paths in this script as necessary.

```shell
python ./code/test.py \
  --image_folder_path=./data/form2 \
  --weight_path=./weights/form2/classifier1.h5 \
  --ground_truth_path=./data/form2_gt.txt \
  --split_path=./data/form2_split3.pkl
```
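As an illustration of what evaluating a digit classifier involves, the sketch below computes overall digit accuracy, a confusion matrix, and a stricter field-level accuracy, in which a multi-digit field such as a phone number counts as correct only if every digit is. These metrics and the function names are assumptions for illustration and are not necessarily what `test.py` reports; the 10-class label space (digits 0 to 9) is also assumed, so a separate class for empty cells would need `num_classes=11`.

```python
import numpy as np


def evaluate(y_true, y_pred, num_classes: int = 10):
    """Return (accuracy, confusion matrix) for integer class labels.

    cm[i, j] counts samples whose true label is i and predicted label is j.
    """
    y_true = np.asarray(y_true, dtype=np.int64)
    y_pred = np.asarray(y_pred, dtype=np.int64)
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)  # unbuffered add handles repeated pairs
    accuracy = float(np.trace(cm) / cm.sum())
    return accuracy, cm


def field_accuracy(true_fields, pred_fields) -> float:
    """Fraction of fields (rows of digits) where every digit is predicted correctly."""
    t = np.asarray(true_fields)
    p = np.asarray(pred_fields)
    return float(np.mean(np.all(t == p, axis=1)))
```

Field-level accuracy is the more demanding measure for a use case like collecting phone numbers: a single misread digit makes the whole number unusable, so it is always at most the digit-level accuracy.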

The figure below shows 2D UMAP visualizations of the embeddings obtained from the Softmax-based and Triplet-loss-based models:

Fig.3 - 2D Visualization of Embeddings
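For reference, the textbook triplet margin loss pulls an anchor embedding toward a positive (same digit) and pushes it away from a negative (different digit) until the squared-distance gap exceeds a margin. The NumPy sketch below computes it for a batch of precomputed triplets; the margin of 0.2, the function name, and the absence of any triplet mining are assumptions, and this is not necessarily the loss formulation or mining strategy used in `train_embedding.py`.

```python
import numpy as np


def triplet_loss(anchor, positive, negative, margin: float = 0.2) -> float:
    """Mean triplet margin loss over a batch of embeddings of shape (batch, dim).

    loss = mean(max(0, ||a - p||^2 - ||a - n||^2 + margin))
    """
    a, p, n = (np.asarray(x, dtype=np.float64) for x in (anchor, positive, negative))
    d_pos = np.sum((a - p) ** 2, axis=1)  # squared distance anchor -> positive
    d_neg = np.sum((a - n) ** 2, axis=1)  # squared distance anchor -> negative
    # Triplets where the negative is already farther by at least `margin` contribute 0.
    return float(np.mean(np.maximum(0.0, d_pos - d_neg + margin)))
```

Because satisfied triplets contribute nothing, this objective shapes the embedding space into well-separated per-digit clusters, which is the kind of structure a 2D UMAP projection makes visible.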