Yin Yihang, Qingzhong Wang, Siyu Huang, Haoyi Xiong, Xiang Zhang

Please check our arXiv version (arXiv:2109.10259) for the full paper with supplementary material. We also provide our poster in this repo. Our oral presentation video at AAAI-2022 can be found on YouTube, both as a brief introduction and as the full presentation.

rdkit

pytorch 1.10.0

pytorch_geometric 2.0.2

$ python download_dataset.py

There are many settings for semi-supervised learning; you can switch to other experiments by changing the --exp flag. Node attribute augmentation is disabled by default; you can enable it by setting the --add_mask flag to True.
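As a hedged illustration of how these flags combine, the Python sketch below assembles the argument list for one main.py run. The flag names and default values are copied from the example command in this README. The helper name build_main_command is hypothetical, and passing --add_mask=True as a string value assumes main.py parses that form, which the README implies but does not show.

```python
import shlex


def build_main_command(exp="joint_cl_exp", dataset="MUTAG", semi_split=10,
                       save=None, epochs=30, batch_size=128, lr=0.01,
                       add_mask=False):
    """Return the argument list for one main.py run (hypothetical helper).

    Defaults mirror the example semi-supervised command in this README.
    """
    cmd = [
        "python", "main.py",
        f"--exp={exp}",                # selects the experiment setting
        f"--semi_split={semi_split}",
        f"--dataset={dataset}",
        f"--save={save or exp}",       # reuse the experiment name if no save name is given
        f"--epochs={epochs}",
        f"--batch_size={batch_size}",
        f"--lr={lr}",
    ]
    if add_mask:
        # Node attribute augmentation is off by default; assumed to accept "True".
        cmd.append("--add_mask=True")
    return cmd


# Print the default command as a single shell-ready string.
print(shlex.join(build_main_command()))
```

With the defaults, the printed string reproduces the example command that follows; passing add_mask=True appends the augmentation flag.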

$ python main.py --exp=joint_cl_exp --semi_split=10 --dataset=MUTAG --save=joint_cl_exp --epochs=30 --batch_size=128 --lr=0.01

You can use the given script to run multiple experiments; you may need to adjust hyper-parameters such as batch_size to make it runnable on your device.
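The contents of run.sh are not shown here. As a hypothetical alternative for a single configuration, the sketch below retries a run with a halved batch_size whenever it exits with a non-zero status, which is one simple way to find a batch size that fits on a given device. The helpers run_with_backoff and run_main are illustrative names, not part of this repo, and run_main reuses the flags from the example command above.

```python
import subprocess
from typing import Callable, Optional


def run_with_backoff(run: Callable[[int], int], batch_size: int = 128,
                     min_batch_size: int = 8) -> Optional[int]:
    """Call run(batch_size), halving batch_size after each non-zero exit code.

    Returns the batch size that succeeded, or None if every size down to
    min_batch_size failed.
    """
    while batch_size >= min_batch_size:
        if run(batch_size) == 0:
            return batch_size
        batch_size //= 2  # halve and retry
    return None


def run_main(batch_size: int) -> int:
    # Hypothetical wrapper: same flags as the README example, only batch_size varies.
    cmd = ["python", "main.py", "--exp=joint_cl_exp", "--semi_split=10",
           "--dataset=MUTAG", "--save=joint_cl_exp", "--epochs=30",
           f"--batch_size={batch_size}", "--lr=0.01"]
    return subprocess.run(cmd).returncode
```

Calling run_with_backoff(run_main) tries 128, then 64, 32, 16 and 8 in turn. Any non-zero exit triggers a retry, not only out-of-memory errors, and the learning rate is left unchanged, so results at a smaller batch size may not be directly comparable to runs at the original batch_size.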

$ sh run.sh

$ sh un_exp.sh

$ cd transfer

$ wget http://snap.stanford.edu/gnn-pretrain/data/chem_dataset.zip

$ unzip chem_dataset.zip

$ rm -rf dataset/*/processed

$ sh run_chem.sh

If you find this work helpful, please kindly cite our paper.

@article{yin2021autogcl,

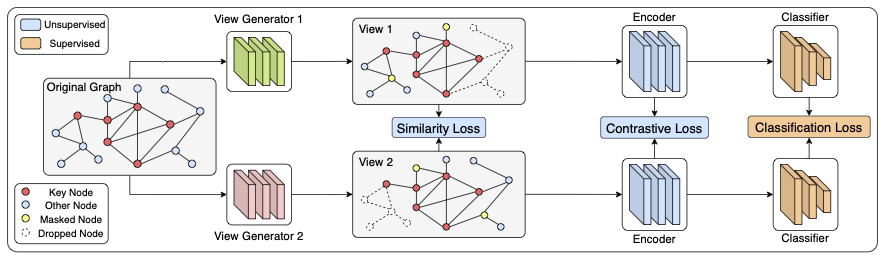

title={AutoGCL: Automated Graph Contrastive Learning via Learnable View Generators},

author={Yin, Yihang and Wang, Qingzhong and Huang, Siyu and Xiong, Haoyi and Zhang, Xiang},

journal={arXiv preprint arXiv:2109.10259},

year={2021}

}