Event-Driven-Stock-Market-Prediction 1.0

Description

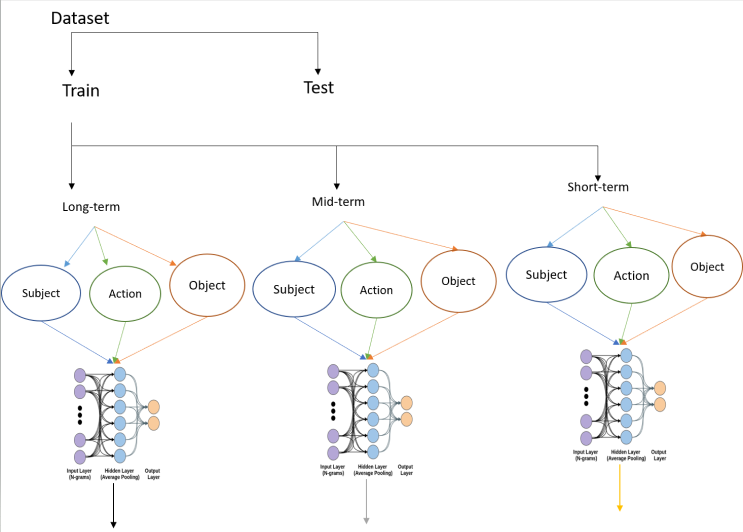

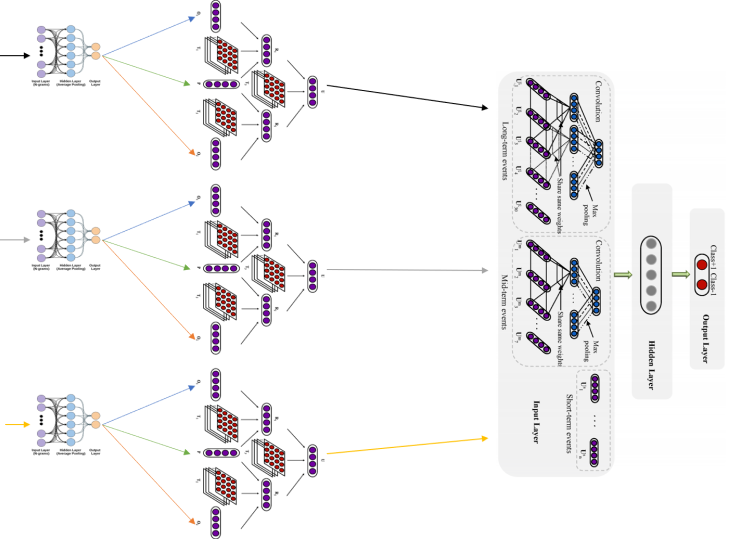

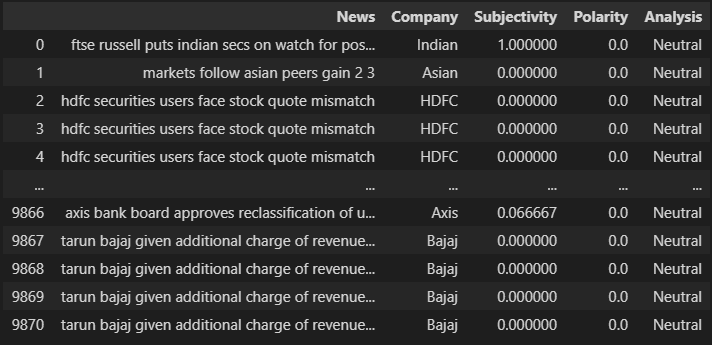

- This project is a system that predicts stock price movements from the events occurring day by day. Events are extracted from the online archive sections of newspapers. Each extracted headline is then divided into three parts: actor, relation, and object. After some preprocessing and generation of the word vectors, these parts are passed to a novel neural tensor network (NTN), which computes the entity-relation representation. The representations are then processed by a deep convolutional neural network with a max-pooling layer, and the result is classified into one of two classes: positive or negative.
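The split into actor, relation, and object can be pictured with a small sketch. It is not taken from this repository: the `EventTriple` class, the `average_vector` helper, the toy vectors, and the example headline are all made up for illustration, and averaging the word vectors of each part is an assumption about how a multi-word part could be reduced to one vector. A real FastText model would supply the vectors in place of the dictionary.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass
class EventTriple:
    """A headline split into its three parts (hypothetical container)."""
    actor: str
    relation: str
    obj: str


def average_vector(phrase: str, vectors: Dict[str, Sequence[float]], dim: int) -> List[float]:
    """Average the vectors of the known words in a phrase (zeros if none are known)."""
    known = [vectors[word] for word in phrase.lower().split() if word in vectors]
    if not known:
        return [0.0] * dim
    return [sum(vec[i] for vec in known) / len(known) for i in range(dim)]


# Toy 2-dimensional vectors, invented for this example only.
toy_vectors = {
    "tata": [1.0, 0.0],
    "motors": [0.0, 1.0],
    "acquires": [0.5, 0.5],
    "startup": [0.2, 0.8],
}

event = EventTriple(actor="Tata Motors", relation="acquires", obj="startup")
actor_vec = average_vector(event.actor, toy_vectors, dim=2)
relation_vec = average_vector(event.relation, toy_vectors, dim=2)
object_vec = average_vector(event.obj, toy_vectors, dim=2)
print(actor_vec, relation_vec, object_vec)
```

The three resulting vectors are the kind of input the NTN step would consume.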

Badges

Visuals

- Dataset --> Triplet Extraction --> Word Embeddings (FastText model)

- Word Embeddings --> NTN model --> Convolutional model
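The NTN step in the pipeline above can be sketched as a bilinear tensor layer. This is a minimal illustration in plain Python, not the repository's model: the function names, the parameter layout, and the toy weights are hypothetical. The composition order (actor with relation, relation with object, then the two results together) is one common arrangement and is assumed here rather than confirmed by this README.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]
Matrix = Sequence[Sequence[float]]
Params = Tuple[Sequence[Matrix], Sequence[Vector], Vector]  # (tensor, weights, bias)


def bilinear_tensor_layer(e1: Vector, e2: Vector, tensor: Sequence[Matrix],
                          weights: Sequence[Vector], bias: Vector) -> List[float]:
    """out[k] = tanh(e1^T tensor[k] e2 + weights[k] . [e1; e2] + bias[k])."""
    joined = list(e1) + list(e2)
    out = []
    for slice_k, w_k, b_k in zip(tensor, weights, bias):
        bilinear = sum(e1[i] * slice_k[i][j] * e2[j]
                       for i in range(len(e1)) for j in range(len(e2)))
        linear = sum(w * x for w, x in zip(w_k, joined))
        out.append(math.tanh(bilinear + linear + b_k))
    return out


def event_embedding(actor: Vector, relation: Vector, obj: Vector,
                    p1: Params, p2: Params, p3: Params) -> List[float]:
    """Assumed composition: (actor, relation) and (relation, object), then both results."""
    r1 = bilinear_tensor_layer(actor, relation, *p1)
    r2 = bilinear_tensor_layer(relation, obj, *p2)
    return bilinear_tensor_layer(r1, r2, *p3)


# Toy parameters for 2-dimensional inputs and 2 output units (illustrative only).
identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [[0.0, 0.0], [0.0, 0.0]]
params: Params = ([identity, zeros], [[0.0] * 4, [0.0] * 4], [0.0, 0.5])

embedding = event_embedding([1.0, 0.0], [1.0, 0.0], [0.0, 1.0], params, params, params)
print(embedding)
```

In a trained model the three layers would have separate learned parameters; the shared toy parameters here only keep the example short.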

Setup

- Backend

- This repository contains the backend code. For the front end and its installation process, click here.

-

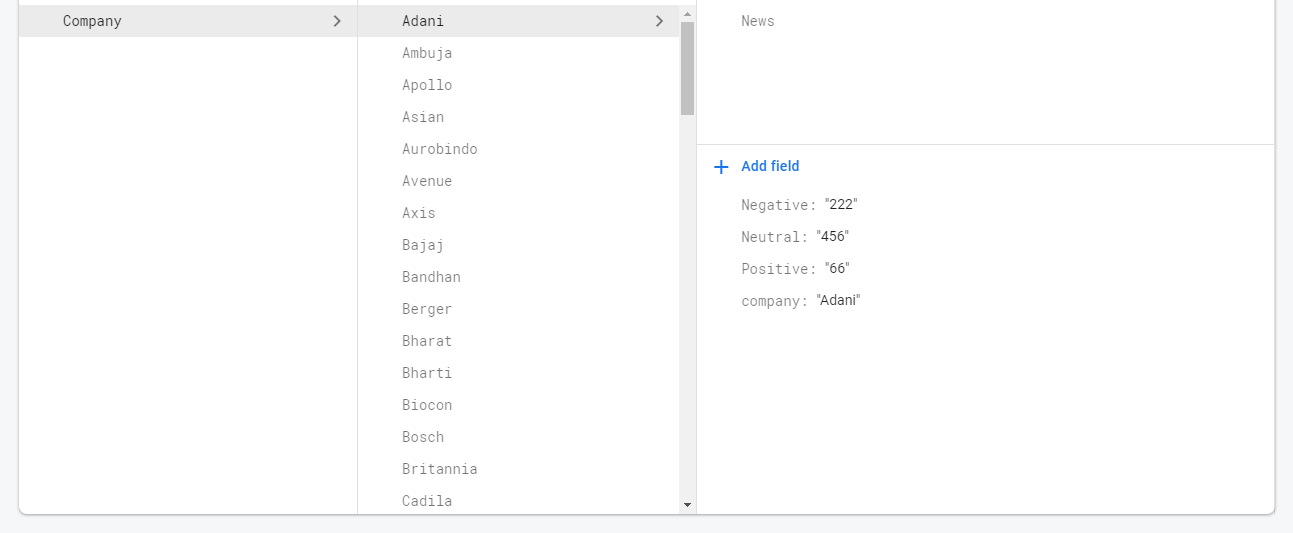

To set up the backend, I used Google Firebase on both sides (backend and front end). The predicted data for news and tweets is pushed to Firebase using the [firebase admin](https://firebase.google.com/docs/database/admin/start#python) package; the link leads to the setup documentation for firebase admin and its queries.

-

If you are new to Firebase, first register or sign up at Google Firebase with the email address you want, then go to the console and create a project. Follow the database structure and flow shown below so that the code works easily:

    # Imports needed
    import firebase_admin
    from firebase_admin import credentials
    from firebase_admin import db

    # Fetch the service account key JSON file contents
    cred = credentials.Certificate('path/to/serviceAccountKey.json')

    # Initialize the app with a service account, granting admin privileges
    firebase_admin.initialize_app(cred, {
        'databaseURL': 'https://databaseName.firebaseio.com'
    })

    # As an admin, the app has access to read and write all data, regardless of Security Rules
    ref = db.reference('restricted_access/secret_document')
    print(ref.get())
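Once the app is initialized as above, pushing predictions could look roughly like the sketch below. The `predictions` path, the record fields (`stock`, `source`, `label`), and both helper functions are hypothetical and not taken from this repository; only `child()` and `set()` on a database reference are firebase admin calls. Realtime Database keys may not contain `.`, `$`, `#`, `[`, `]`, or `/`, so the stock name is cleaned before it is used as a key.

```python
import re
from typing import Any, Dict, Iterable


def safe_key(name: str) -> str:
    """Replace characters that Realtime Database keys may not contain."""
    return re.sub(r"[.$#\[\]/]", "_", name.strip())


def push_predictions(ref: Any, records: Iterable[Dict[str, Any]]) -> int:
    """Write each record under ref/<stock name>; returns the number written.

    `ref` is expected to behave like a firebase_admin db.Reference
    (it only needs child() and set()).
    """
    count = 0
    for record in records:
        ref.child(safe_key(record["stock"])).set(record)
        count += 1
    return count


# Hypothetical usage, after firebase_admin.initialize_app(...) has run:
#   from firebase_admin import db
#   push_predictions(db.reference("predictions"), [
#       {"stock": "Example Ltd.", "source": "news", "label": "positive"},
#   ])
```

Keeping the reference as a parameter means the helper can be tried with a stand-in object before any real credentials are configured.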

Frameworks/Methods used

- Beautiful Soup (bs4) - web scraping tool

- NTN model for action - for getting the entity-relation representation

- Convolution model - for training on the representation and using it to predict sentiment
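The convolution and max-pooling idea behind the last item can be illustrated in plain Python. This is only a sketch under assumptions: the window width, the kernels, the output weights, and the function names are made up, and the repository's actual network is not shown in this README. Each kernel slides over a sequence of event vectors, the largest response per kernel is kept (max-pooling over time), and a logistic output maps the pooled values to one of the two classes.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def conv1d(events: Sequence[Vector], kernel: Sequence[Vector], bias: float = 0.0) -> List[float]:
    """Slide a window of len(kernel) events over the sequence; one value per position.

    Assumes len(events) >= len(kernel).
    """
    width = len(kernel)
    out = []
    for start in range(len(events) - width + 1):
        total = bias
        for offset in range(width):
            total += sum(w * x for w, x in zip(kernel[offset], events[start + offset]))
        out.append(total)
    return out


def classify(events: Sequence[Vector], kernels: Sequence[Sequence[Vector]],
             out_weights: Vector, out_bias: float = 0.0) -> str:
    """Max-pool each kernel's responses, then apply a logistic output unit."""
    pooled = [max(conv1d(events, kernel)) for kernel in kernels]
    score = sum(w * p for w, p in zip(out_weights, pooled)) + out_bias
    probability = 1.0 / (1.0 + math.exp(-score))
    return "positive" if probability >= 0.5 else "negative"


# Toy sequence of three 2-dimensional event vectors and one width-2 kernel.
toy_events = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
toy_kernel = [[1.0, 0.0], [0.0, 1.0]]
print(conv1d(toy_events, toy_kernel))
print(classify(toy_events, [toy_kernel], out_weights=[1.0]))
```

A trained model would learn the kernels and output weights from labelled data; the fixed values here only make the arithmetic easy to follow.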

- Frontend

- The front end is hosted in a separate repo; to visit it, click here.

-

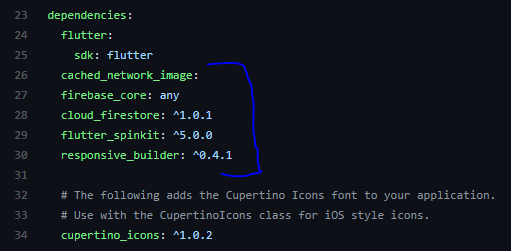

Flutter was used for development. Flutter is a UI framework; if this is your first Flutter project, the following resource will help you get started:

- For help getting started with Flutter, view the online documentation, which offers tutorials, samples, guidance on mobile development, and a full API reference.

To set things up:

- Install Flutter, if it is not already installed, using this.

- After installation, open the command prompt and type `flutter create project-name`.

- Copy the lib folder from the repo (Predictor `./lib/`) to your new project, and also import the dependencies from pubspec.yaml.

- After copying, fetch the dependencies by running `flutter pub get` in the terminal, or click Packages get in IntelliJ or Android Studio.

- For web deployment with Flutter, follow this documentation.

- To connect the app to the Firebase console, follow this.

- Last but not least, find some awesome queries here.

For more details regarding Flutter, please contact me at myiotproduct@gmail.com.

Do check out my other Flutter projects:

- Connect apps - EnginnerApp UserApp AdminApp

Usage

- First, run the top_stocks.ipynb file to get the names of the top Indian stocks listed on the BSE and NSE. The following snippet from that file shows the page on which web scraping is performed:

      link = "https://money.rediff.com/companies/market-capitalisation"
      dayres = requests.get(link)
      daysoup1 = bs4.BeautifulSoup(dayres.text, 'html.parser')

- A new file named topL.csv is created, containing the scraped names of the top stocks.

- Next, execute extract_news.py to extract the news for the companies in the top list.

- Alternatively, run analysis.ipynb directly to process the tweets for prediction.

- Finally, run predicted.ipynb to pass the data to Firebase. (Please make the needed changes to the firebase_admin setup before running this file.)
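The topL.csv step in the list above can be sketched as follows. This is not the notebook's code: it assumes the company names have already been scraped into a list, and the `write_top_list` helper and the `company` column name are hypothetical. It uses only the standard `csv` module, trims stray whitespace, and drops duplicates and empty entries before writing.

```python
import csv
from typing import Iterable, List


def write_top_list(names: Iterable[str], path: str = "topL.csv") -> List[str]:
    """Write cleaned, de-duplicated company names to a one-column CSV file."""
    cleaned: List[str] = []
    for name in names:
        name = " ".join(name.split())  # collapse runs of whitespace
        if name and name not in cleaned:
            cleaned.append(name)
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        writer.writerow(["company"])  # hypothetical column name
        writer.writerows([name] for name in cleaned)
    return cleaned


# Hypothetical usage with placeholder names:
#   write_top_list(["  Alpha  Ltd ", "Beta Ltd", "Alpha Ltd"])
```

The returned list preserves the order in which the names were scraped, so it can be passed straight to the news-extraction step.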

Support

-

Live demo @ Predictor.

- Contact through email

Road-map

-

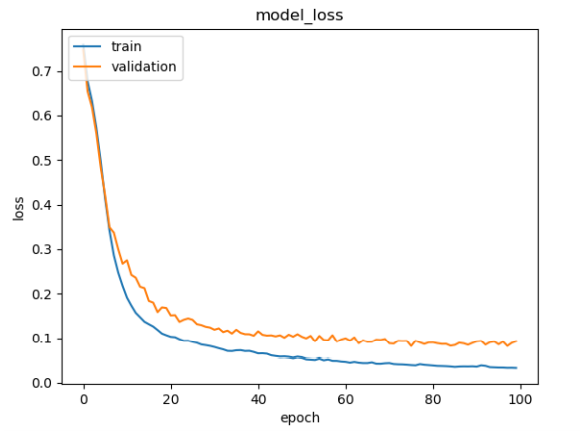

With the help of the NTN and CNN, I built two models in chronological order. The first model used just the raw word embeddings for sentiment analysis. For the second, aiming for more efficiency, I converted the word embeddings into event embeddings using the FastText model and the NTN model.

- Just for the sake of display, I created a prototype website containing the information on the predicted stocks.

- The comparison of word-embedding-based models and event-embedding-based models supports our previous assertion that events are better features for stock market prediction than terms.

Project Status

- I will complete my graduation first, after which I will redesign the structure of the whole project and then pass it on to the community, with many improvements in mind.