Multi-Granularity Hierarchical Attention Fusion Networks for Reading Comprehension and Question Answering

If you test the performance on SQuAD, please let me know the final score. You only need to modify coca_reader.py so that it reads the SQuAD dataset.

Paper: http://www.aclweb.org/anthology/P18-1158

Allennlp: https://github.com/allenai/allennlp

First, install AllenNLP and make sure you have downloaded the ELMo and GloVe files. The versions used are listed in config/seperate_slqa.json. You can also load ELMo by URL; see the AllenNLP tutorials for help.

cd SLQA

mkdir elmo

mkdir glove

Then, to train the model, run:

allennlp train config/seperate_slqa.json -s output_dir --include-package coca-qa

To modify the model parameters, edit config/seperate_slqa.json.

Cleaning up the unused config files and further improvements are still pending.

For the performance on SQuAD1.1 and further improvements, please see the issues page.

- Rewrite the model using AllenNLP. It appears to run successfully; I'm waiting for the performance results.

- Add a simple flow layer; its config file is slqa_h.json in the config directory.

- The performance does not seem good enough yet.

- The `text_field_embedder` receives several token embedders and concatenates their outputs in an arbitrary order. So if we `split` the concatenated output, we can't be sure that the 1024-dimensional part is the output of `elmo_token_embedder`. As a result, I split it into three separate `text_field_embedder`s.

- The paper writes the same fuse function for every fusion step, but I think the fuse layers for the passage, question, and self-aligned passage representations should use different weights and should not share gradients. So I use three different fuse layers.

- Change the self-attention function from `D = AA^T` to `D = AWA^T`.
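The reasoning behind using three fuse layers instead of one can be illustrated with a small sketch. It follows the paper's gated fuse function as we read it: `m = tanh(W_f [x; y; x*y; x-y] + b_f)`, `g = sigmoid(W_g [x; y; x*y; x-y] + b_g)`, `Fuse(x, y) = g*m + (1-g)*x`. The class name `FuseLayer`, the random initialization, and the use of plain Python lists instead of framework tensors are assumptions for illustration; this is not the code in this repository.

```python
import math
import random


class FuseLayer:
    """Hypothetical gated fusion layer (illustrative sketch, not the repo's code).

    m   = tanh(W_f [x; y; x*y; x-y] + b_f)
    g   = sigmoid(W_g [x; y; x*y; x-y] + b_g)
    out = g * m + (1 - g) * x
    """

    def __init__(self, dim, seed):
        rng = random.Random(seed)
        # Each instance owns its own weights, so instances never share parameters
        # (and, in a framework such as PyTorch, never share gradients).
        self.w_f = [[rng.uniform(-0.1, 0.1) for _ in range(4 * dim)] for _ in range(dim)]
        self.w_g = [[rng.uniform(-0.1, 0.1) for _ in range(4 * dim)] for _ in range(dim)]
        self.b_f = [0.0] * dim
        self.b_g = [0.0] * dim

    @staticmethod
    def _affine(w, b, v):
        # Matrix-vector product plus bias: W v + b.
        return [sum(w_ij * v_j for w_ij, v_j in zip(row, v)) + b_i for row, b_i in zip(w, b)]

    def __call__(self, x, y):
        # Build the 4*dim feature vector [x; y; x*y; x-y].
        feats = x + y + [a * c for a, c in zip(x, y)] + [a - c for a, c in zip(x, y)]
        m = [math.tanh(v) for v in self._affine(self.w_f, self.b_f, feats)]
        g = [1.0 / (1.0 + math.exp(-v)) for v in self._affine(self.w_g, self.b_g, feats)]
        # The gate g decides how much of the fused vector m replaces the input x.
        return [g_i * m_i + (1.0 - g_i) * x_i for g_i, m_i, x_i in zip(g, m, x)]


# Three independent fuse layers: passage, question, and self-aligned passage.
fuse_passage = FuseLayer(dim=4, seed=0)
fuse_question = FuseLayer(dim=4, seed=1)
fuse_self_aligned = FuseLayer(dim=4, seed=2)

x = [0.5, -0.2, 0.1, 0.3]  # toy input representation
y = [0.1, 0.4, -0.3, 0.2]  # toy attended representation
fused_p = fuse_passage(x, y)
fused_q = fuse_question(x, y)  # same inputs, different weights, different output
```

With a single shared `FuseLayer`, all three fusion steps would update the same `w_f` and `w_g`; separate instances let each step learn its own gating.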

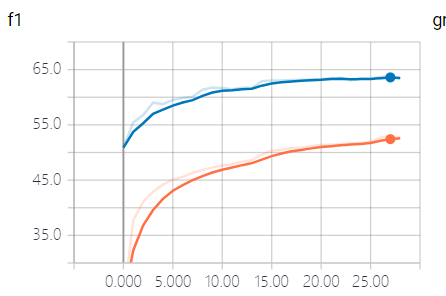
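The change of the self-attention score from `D = AA^T` to `D = AWA^T` can be sketched as follows, assuming `A` is an n×d matrix whose rows are token representations and `W` is a trainable d×d matrix. The helper names, the toy values, and the row-wise softmax step are hypothetical and only illustrate the two score functions.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def dot_self_scores(a):
    """Original score matrix D = A A^T (n x n, always symmetric)."""
    return matmul(a, transpose(a))


def bilinear_self_scores(a, w):
    """Bilinear score matrix D = A W A^T (n x n); W stands in for learned weights."""
    return matmul(matmul(a, w), transpose(a))


def row_softmax(d):
    """Normalize each row of D into attention weights over the passage tokens."""
    out = []
    for row in d:
        peak = max(row)  # subtract the max for numerical stability
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


# Toy passage with 3 tokens and 2 features per token.
A = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W = [[2.0, 0.5], [0.0, 1.0]]  # hypothetical "learned" weights

scores = bilinear_self_scores(A, W)
# scores == [[2.0, 0.5, 2.5], [0.0, 1.0, 1.0], [2.0, 1.5, 3.5]]
attention = row_softmax(scores)
```

With `W` equal to the identity, the bilinear form reduces to the original `AA^T`. A learned `W` lets the model reweight and mix feature dimensions before comparing tokens, and the resulting scores need not be symmetric (here `scores[0][1]` is 0.5 while `scores[1][0]` is 0.0).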

I think this will be the final version, since I don't know how to reach the performance reported in the paper, where the model does better than BiDAF with self-attention and ELMo. The final F1 score on CoQA is 61.879, whereas BiDAF++ can reach 65. Note that I didn't use any previous questions or answers. The performance with the conversation history might be good enough, but I have no time to test it now.

- The module that predicts whether the answer is yes, no, or neither is not good enough, and this may hurt the prediction of the start and end scores, so it needs to be fixed. I am considering using a bilinear function of the question vector and the learned self-attended passage vector to generate the answer-choice logits.

- Try to improve the performance.

- Add more hand-crafted features.

- Test the performance with information from previous turns.

- Test performance on SQuAD1.1.
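The planned bilinear answer-choice classifier from the to-do item on yes/no prediction can be sketched as follows. This assumes a pooled question vector `q`, a pooled self-attended passage vector `p`, and one learned matrix `W_k` plus bias `b_k` per choice `k`, giving `logit_k = q^T W_k p + b_k`. The label set, function names, and toy weights are hypothetical; this is a plan in this README, not implemented code.

```python
import math

CHOICES = ("yes", "no", "neither")  # hypothetical label set


def bilinear_logit(q, w, p, b):
    """Compute q^T W p + b for one answer choice."""
    wp = [sum(w_ij * p_j for w_ij, p_j in zip(row, p)) for row in w]
    return sum(q_i * v for q_i, v in zip(q, wp)) + b


def answer_choice_logits(q, p, weights, biases):
    """One bilinear score per choice; weights[k] is a len(q) x len(p) matrix."""
    return [bilinear_logit(q, w, p, b) for w, b in zip(weights, biases)]


def softmax(logits):
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy example: 2-dim question vector, 3-dim passage vector.
q = [1.0, -1.0]
p = [0.5, 0.0, 2.0]
weights = [
    [[1.0, 0.0, 0.0], [0.0, 0.0, 0.0]],   # "yes"
    [[0.0, 0.0, 0.0], [0.0, 0.0, -1.0]],  # "no"
    [[0.0, 1.0, 0.0], [0.0, 0.0, 0.0]],   # "neither"
]
biases = [0.0, 0.0, 0.0]

logits = answer_choice_logits(q, p, weights, biases)  # [0.5, 2.0, 0.0]
probs = softmax(logits)
prediction = CHOICES[probs.index(max(probs))]  # "no"
```

In PyTorch, `torch.nn.Bilinear(d_q, d_p, 3)` computes the same form for all three choices at once, which would keep this classifier separate from the span start/end scoring.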