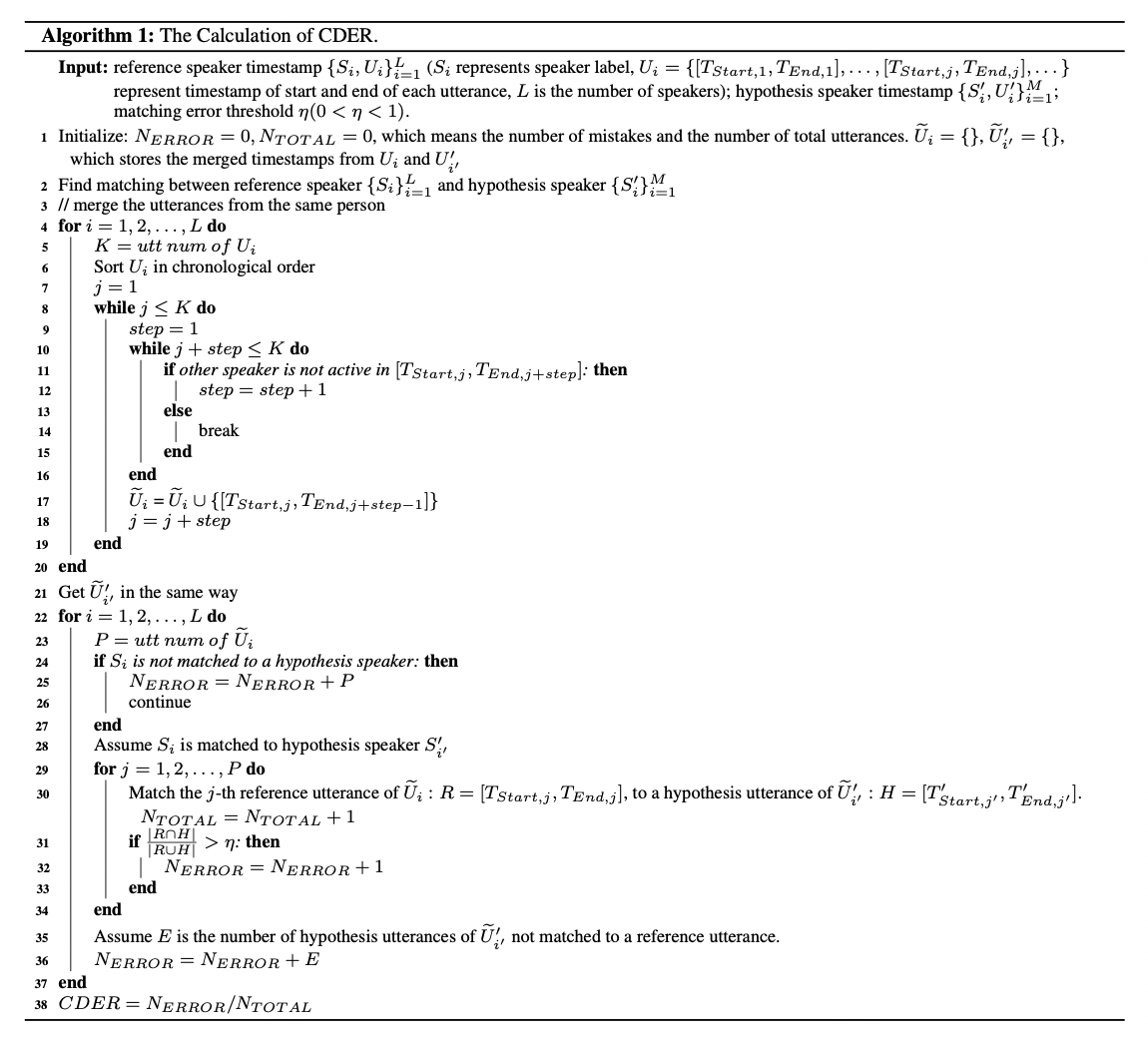

DER reasonably evaluates the overall performance of a speaker diarization system at the level of time duration. In real conversations, however, short segments can carry vital information. A duration-based metric barely reflects how well such short segments are recognized. Our basic idea is that, for each speaker, every type of error should be reflected equally in the final metric, regardless of the length of the spoken sentence. We therefore evaluate speaker diarization at the sentence (utterance) level in conversational scenarios, and adopt the Conversational DER (CDER) for this purpose.
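To make the motivation concrete, here is a toy sketch that contrasts a duration-weighted error with an utterance-level error. It is not the official CDER algorithm. It assumes each reference utterance is simply marked correct or incorrect. The `Utterance` class, its fields, and the example durations are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    speaker: str
    duration: float  # seconds
    correct: bool    # hypothetical flag: was this utterance attributed to the right speaker?


def duration_weighted_error(utts):
    """Fraction of total speech time that is wrong (DER-like weighting)."""
    total = sum(u.duration for u in utts)
    wrong = sum(u.duration for u in utts if not u.correct)
    return wrong / total


def utterance_level_error(utts):
    """Fraction of utterances that are wrong: every utterance counts once."""
    return sum(1 for u in utts if not u.correct) / len(utts)


utts = [
    Utterance("A", 20.0, True),
    Utterance("B", 0.8, False),  # short reply, misattributed
    Utterance("A", 15.0, True),
    Utterance("B", 1.2, False),  # another short reply, misattributed
]
print(f"{duration_weighted_error(utts):.3f}")  # 0.054
print(f"{utterance_level_error(utts):.3f}")    # 0.500
```

Both short replies of speaker B are lost. The duration-weighted score hardly notices, while the utterance-level score counts half of the conversation turns as wrong.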

Both the reference and the hypothesis (system output) must be saved in RTTM (Rich Transcription Time Marked) format.
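The sketch below illustrates the input format. It reads the `SPEAKER` records of an RTTM file using the standard NIST field layout: type, file ID, channel, onset, duration, orthography, speaker type, speaker name, confidence, and lookahead. The `parse_rttm` helper, the `session1` file ID, and the two example lines are hypothetical. None of them are part of `score.py`.

```python
from typing import Iterable, List, NamedTuple


class Segment(NamedTuple):
    file_id: str
    start: float  # seconds
    end: float    # seconds
    speaker: str


def parse_rttm(lines: Iterable[str]) -> List[Segment]:
    """Parse the SPEAKER records of an RTTM file into Segment tuples."""
    segments = []
    for line in lines:
        fields = line.split()
        if not fields or fields[0] != "SPEAKER":
            continue  # skip blank lines and other RTTM record types
        file_id = fields[1]
        onset, duration = float(fields[3]), float(fields[4])
        speaker = fields[7]
        segments.append(Segment(file_id, onset, onset + duration, speaker))
    return segments


# Made-up example records, not taken from MagicData-RAMC.
example = """SPEAKER session1 1 0.00 3.50 <NA> <NA> spk1 <NA> <NA>
SPEAKER session1 1 3.50 0.70 <NA> <NA> spk2 <NA> <NA>"""

for seg in parse_rttm(example.splitlines()):
    print(seg)
```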

```
python3 score.py -s hypothesis_rttm_path -r reference_rttm_path
```

The scorer requires `numpy` and `pyannote.core`.

To calculate CDER (Conversational Diarization Error Rate), we first merge consecutive utterances from the same speaker. For example, consider the following case:

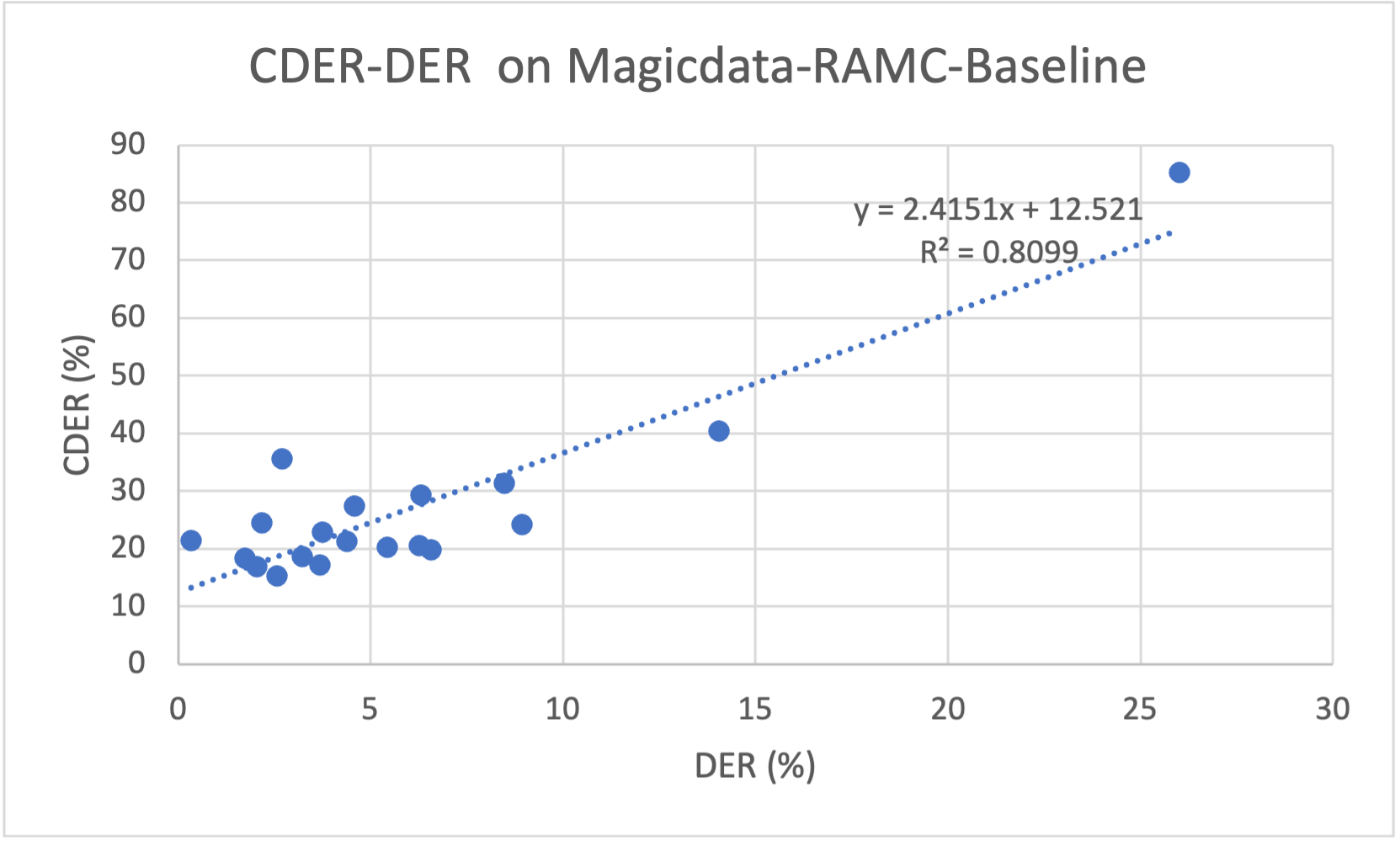
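Here is a minimal sketch of the merge step. It assumes that "merge" means joining time-consecutive segments of the same speaker into one utterance, with no gap threshold. `merge_consecutive` is a hypothetical helper, and the actual `score.py` may handle gaps and overlapping speech differently.

```python
from typing import List, Tuple

Segment = Tuple[float, float, str]  # (start, end, speaker)


def merge_consecutive(segments: List[Segment]) -> List[Segment]:
    """Join runs of time-ordered segments that share the same speaker."""
    merged: List[Segment] = []
    for start, end, spk in sorted(segments):  # sort by start time
        if merged and merged[-1][2] == spk:
            # Same speaker as the previous segment: extend it.
            prev_start, prev_end, _ = merged[-1]
            merged[-1] = (prev_start, max(prev_end, end), spk)
        else:
            # Speaker change: start a new utterance.
            merged.append((start, end, spk))
    return merged


ref = [(0.0, 2.0, "A"), (2.1, 4.0, "A"), (4.0, 4.5, "B"), (4.6, 6.0, "A")]
print(merge_consecutive(ref))
# [(0.0, 4.0, 'A'), (4.0, 4.5, 'B'), (4.6, 6.0, 'A')]
```

After merging, the short turn of speaker B stays a separate utterance. Under a sentence-level metric, it therefore carries the same weight as either of the longer turns of speaker A.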

We use CDER to evaluate the speaker diarization results of our MagicData-RAMC baseline:

```
python3 score.py -s ./magicdata_rttm_pre -r ./rttm_gt_test
```

```
CTS-CN-F2F-2019-11-15-1421 CDER = 0.198
CTS-CN-F2F-2019-11-15-1422 CDER = 0.356
CTS-CN-F2F-2019-11-15-1423 CDER = 0.242
CTS-CN-F2F-2019-11-15-1426 CDER = 0.314
CTS-CN-F2F-2019-11-15-1428 CDER = 0.246
CTS-CN-F2F-2019-11-15-1434 CDER = 0.173
CTS-CN-F2F-2019-11-15-1447 CDER = 0.169
CTS-CN-F2F-2019-11-15-1448 CDER = 0.206
CTS-CN-F2F-2019-11-15-1449 CDER = 0.213
CTS-CN-F2F-2019-11-15-1452 CDER = 0.187
CTS-CN-F2F-2019-11-15-1458 CDER = 0.293
CTS-CN-F2F-2019-11-15-1461 CDER = 0.214
CTS-CN-F2F-2019-11-15-1463 CDER = 0.184
CTS-CN-F2F-2019-11-15-1468 CDER = 0.154
CTS-CN-F2F-2019-11-15-1469 CDER = 0.230
CTS-CN-F2F-2019-11-15-1470 CDER = 0.275
CTS-CN-F2F-2019-11-15-1473 CDER = 0.203
CTS-CN-F2F-2019-11-15-1475 CDER = 0.852
CTS-CN-F2F-2019-11-15-1477 CDER = 0.404
Avg CDER : 0.269
```

The relationship between CDER and DER on the MagicData-RAMC baseline diarization results is shown in the accompanying figure.
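The reported average is consistent with an unweighted mean over the 19 sessions, where each session counts once regardless of its length. The sketch below checks this by parsing the per-session lines of the baseline output. `parse_scores` and its regular expression are hypothetical helpers, not part of `score.py`.

```python
import re
from statistics import mean

# Matches lines such as "CTS-CN-F2F-2019-11-15-1421 CDER = 0.198";
# the "Avg CDER : ..." summary line uses ":" and is deliberately not matched.
LINE_RE = re.compile(r"^(\S+)\s+CDER\s*=\s*([0-9.]+)$")


def parse_scores(text):
    """Map session ID -> CDER from score.py's per-session output lines."""
    scores = {}
    for line in text.splitlines():
        m = LINE_RE.match(line.strip())
        if m:
            scores[m.group(1)] = float(m.group(2))
    return scores


output = """CTS-CN-F2F-2019-11-15-1421 CDER = 0.198
CTS-CN-F2F-2019-11-15-1422 CDER = 0.356
CTS-CN-F2F-2019-11-15-1423 CDER = 0.242
CTS-CN-F2F-2019-11-15-1426 CDER = 0.314
CTS-CN-F2F-2019-11-15-1428 CDER = 0.246
CTS-CN-F2F-2019-11-15-1434 CDER = 0.173
CTS-CN-F2F-2019-11-15-1447 CDER = 0.169
CTS-CN-F2F-2019-11-15-1448 CDER = 0.206
CTS-CN-F2F-2019-11-15-1449 CDER = 0.213
CTS-CN-F2F-2019-11-15-1452 CDER = 0.187
CTS-CN-F2F-2019-11-15-1458 CDER = 0.293
CTS-CN-F2F-2019-11-15-1461 CDER = 0.214
CTS-CN-F2F-2019-11-15-1463 CDER = 0.184
CTS-CN-F2F-2019-11-15-1468 CDER = 0.154
CTS-CN-F2F-2019-11-15-1469 CDER = 0.230
CTS-CN-F2F-2019-11-15-1470 CDER = 0.275
CTS-CN-F2F-2019-11-15-1473 CDER = 0.203
CTS-CN-F2F-2019-11-15-1475 CDER = 0.852
CTS-CN-F2F-2019-11-15-1477 CDER = 0.404
Avg CDER : 0.269"""

scores = parse_scores(output)
print(len(scores), f"{mean(scores.values()):.3f}")  # 19 0.269
```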

If you use CDER in your paper, please consider citing our upcoming CDER description paper (to appear at ISCSLP 2022).

If you use MagicData-RAMC dataset in your research, please kindly consider citing our paper:

```
@article{yang2022open,
  title={Open Source MagicData-RAMC: A Rich Annotated Mandarin Conversational (RAMC) Speech Dataset},
  author={Yang, Zehui and Chen, Yifan and Luo, Lei and Yang, Runyan and Ye, Lingxuan and Cheng, Gaofeng and Xu, Ji and Jin, Yaohui and Zhang, Qingqing and Zhang, Pengyuan and others},
  journal={arXiv preprint arXiv:2203.16844},
  year={2022}
}
```

If you have any questions, please contact us. You can open an issue on GitHub or email us.

| Author | Email |
|---|---|
| Gaofeng Cheng | chenggaofeng@hccl.ioa.ac.cn |
| Yifan Chen | chenyifan@hccl.ioa.ac.cn |
| Runyan Yang | yangrunyan@hccl.ioa.ac.cn |
| Qingxuan Li | liqx20@mails.tsinghua.edu.cn |
