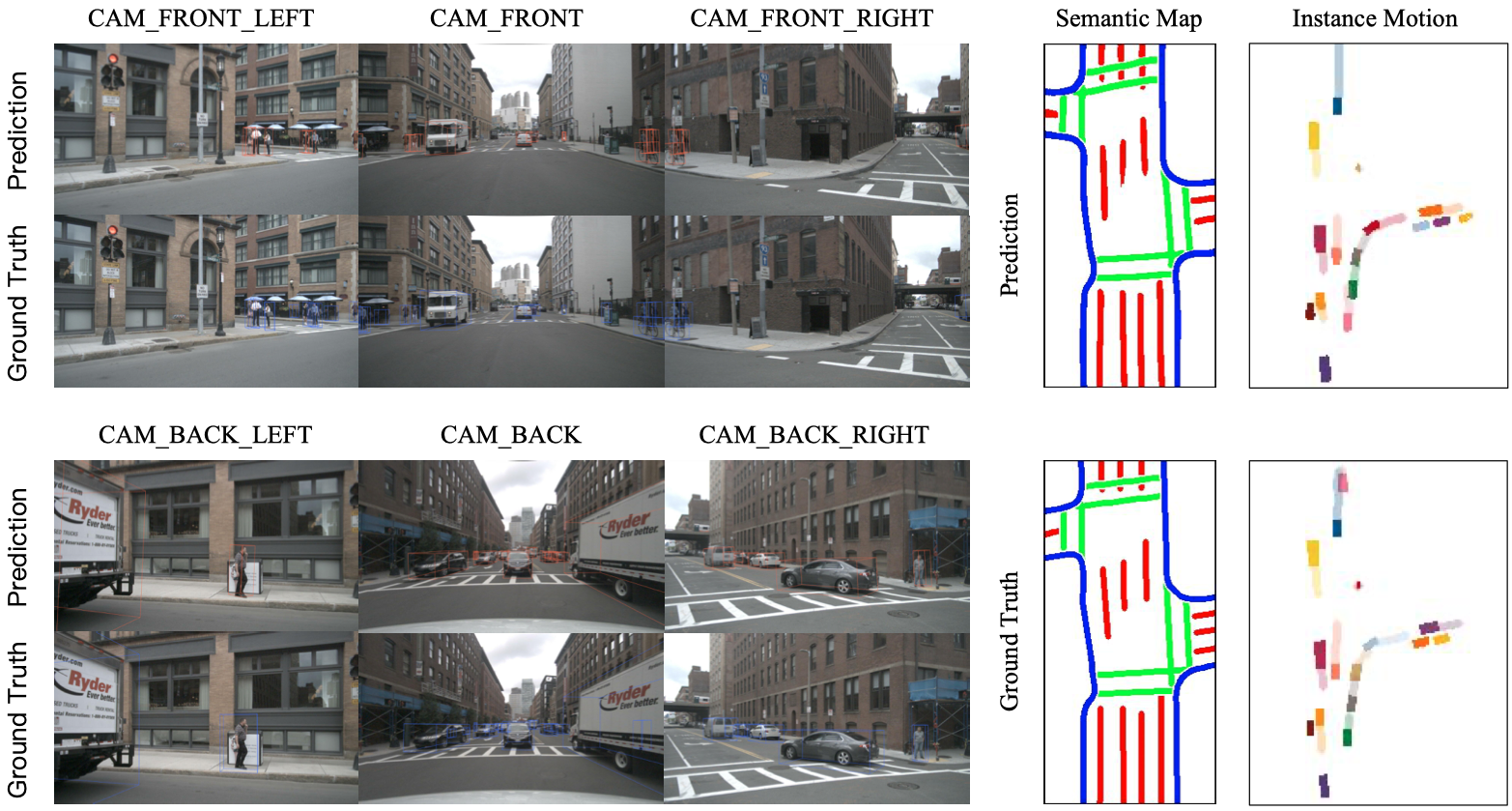

This is the official implementation of the paper "BEVerse: Unified Perception and Prediction in Birds-Eye-View for Vision-Centric Autonomous Driving".

- 2022.07.20: We release the code and models of BEVerse.

| Method | mAP | NDS | IoU (Map) | IoU (Motion) | VPQ | Model |
|---|---|---|---|---|---|---|
| BEVerse-Tiny | 32.1 | 46.6 | 48.7 | 38.7 | 33.3 | Google Drive |
| BEVerse-Small | 35.2 | 49.5 | 51.7 | 40.9 | 36.1 | Google Drive |

See installation for environment setup and data_preparation for preparing the nuScenes dataset.

See getting_started for training, evaluation, and visualization of BEVerse.

This project is mainly based on the following open-source projects: open-mmlab, BEVDet, HDMapNet, Fiery.

If this work is helpful for your research, please consider citing the following BibTeX entries.

    @article{zhang2022beverse,
      title={BEVerse: Unified Perception and Prediction in Birds-Eye-View for Vision-Centric Autonomous Driving},
      author={Zhang, Yunpeng and Zhu, Zheng and Zheng, Wenzhao and Huang, Junjie and Huang, Guan and Zhou, Jie and Lu, Jiwen},
      journal={arXiv preprint arXiv:2205.09743},
      year={2022}
    }

    @article{huang2021bevdet,
      title={BEVDet: High-performance Multi-camera 3D Object Detection in Bird-Eye-View},
      author={Huang, Junjie and Huang, Guan and Zhu, Zheng and Yun, Ye and Du, Dalong},
      journal={arXiv preprint arXiv:2112.11790},
      year={2021}
    }