🎉 NEW: We now support YOLOv10! Train and detect with the latest YOLO version. 🎉

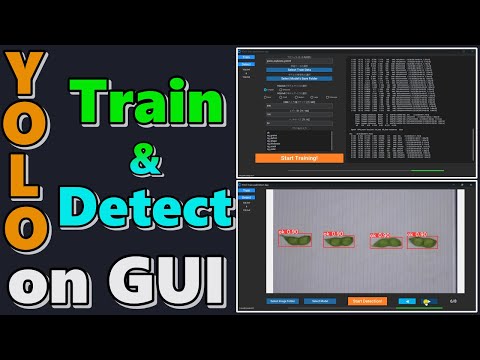

This application is a user-friendly GUI tool built with PyTorch, the Ultralytics library, and CustomTkinter. It lets you train YOLOv8 and YOLOv9 models and use the trained models to perform object detection on images, videos, and webcam feeds. The detection results can be saved for further analysis.

Please watch the instructional video (in English) on YouTube for a walkthrough of how to operate the app.

To install with pip and a virtual environment:

- Clone this repository:

`git clone https://github.com/SpreadKnowledge/YOLO_train_detection_GUI.git`

- Navigate to the project directory:

`cd YOLO_train_detection_GUI`

- Create a virtual environment:

`python -m venv venv`

- Activate the virtual environment:

- For Windows:

`venv\Scripts\activate`

- For macOS and Linux:

`source venv/bin/activate`

- Install the required dependencies:

`pip install -r requirements.txt`

Alternatively, to install with Anaconda:

- Clone this repository:

`git clone https://github.com/SpreadKnowledge/YOLO_train_detection_GUI.git`

- Navigate to the project directory:

`cd YOLO_train_detection_GUI`

- Create a new Anaconda environment:

`conda create --name yolo-app python=3.12`

- Activate the Anaconda environment:

`conda activate yolo-app`

- Install the required dependencies:

`pip install -r requirements.txt`

Before training your YOLO model, you need to prepare the training data in YOLO format. Each image needs a corresponding text file with the same base name (for example, `dog01.jpg` and `dog01.txt`) containing its object annotations, one object per line, in the following format:

`<class_id> <x_center> <y_center> <width> <height>`

- `<class_id>`: integer class ID of the object, starting from 0.
- `<x_center>`, `<y_center>`: floating-point center coordinates of the bounding box, divided by the image width and height respectively (so each lies between 0 and 1).
- `<width>`, `<height>`: floating-point width and height of the bounding box, divided by the image width and height respectively (so each lies between 0 and 1).
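If your annotation tool exports boxes as pixel corners, a conversion along these lines produces the normalized format described above. This is a minimal sketch, not part of the app: `to_yolo_line` and `parse_yolo_line` are hypothetical helper names, and six decimal places is an arbitrary formatting choice.

```python
def to_yolo_line(class_id: int, x_min: float, y_min: float, x_max: float, y_max: float,
                 img_w: int, img_h: int) -> str:
    """Convert a pixel-space box given by its corners into one YOLO annotation line."""
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError("box must lie inside the image and have a positive size")
    # Box center and size in pixels, each divided by the image width or height.
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


def parse_yolo_line(line: str) -> tuple:
    """Parse one annotation line and check that every coordinate is normalized."""
    parts = line.split()
    if len(parts) != 5:
        raise ValueError(f"expected 5 fields, got {len(parts)}: {line!r}")
    class_id = int(parts[0])
    x_c, y_c, w, h = (float(p) for p in parts[1:])
    if not all(0.0 <= v <= 1.0 for v in (x_c, y_c, w, h)):
        raise ValueError(f"coordinates must be between 0 and 1: {line!r}")
    return class_id, x_c, y_c, w, h
```

For example, a box from (100, 50) to (300, 250) in a 640x480 image becomes `0 0.312500 0.312500 0.312500 0.416667`.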

Place the image files and their corresponding annotation text files in the same directory.
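Before selecting a folder in the Train tab, a quick check that every image has its label file can catch missing annotations early (an image without a label file is typically treated as containing no objects). A minimal sketch: the set of image extensions is an assumption (the app may accept others), and `find_unlabeled_images` is a hypothetical helper.

```python
from pathlib import Path

# Image extensions to look for; an assumption, adjust to match your dataset.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}


def find_unlabeled_images(data_dir: str) -> list:
    """Return the names of images in data_dir that have no same-named .txt file."""
    missing = []
    for image in sorted(Path(data_dir).iterdir()):
        if image.is_file() and image.suffix.lower() in IMAGE_EXTENSIONS:
            # dog01.jpg is expected to sit next to dog01.txt in the same folder.
            if not image.with_suffix(".txt").is_file():
                missing.append(image.name)
    return missing
```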

To run the YOLO Train and Detect App, execute the following command:

`python main.py`

In the Train tab, you can train your own YOLO model:

- Enter a project name (alphanumeric only).

- Select the directory containing your training data (images and annotation text files).

- Choose the directory where you want to save the trained model.

- Select the model size for YOLOv9 (Compact or Enhanced) or YOLOv8 (Nano, Small, Medium, Large, or ExtraLarge).

- Specify the input image size for the network, in pixels (e.g., 640).

- Set the number of epochs for training (e.g., 100).

- Enter the batch size for training (e.g., 16).

- Input the class names, one per line, in the provided text box.

- Click the "Start Training!" button to begin the training process.
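The Train tab's inputs map naturally onto the Ultralytics training API. The sketch below is an assumption about how such a GUI could work, not the app's actual code: the class names become a dataset YAML, and the model size selects a pretrained checkpoint. The `CHECKPOINTS` mapping, the YAML file name, and both helper names are hypothetical; `YOLO(...).train(...)` with `data`, `epochs`, `imgsz`, `batch`, `project`, and `name` is the standard Ultralytics training call.

```python
import json
from pathlib import Path

# Hypothetical mapping from the GUI's model-size choices to Ultralytics
# pretrained checkpoints; the app's real mapping may differ.
CHECKPOINTS = {
    "YOLOv8 Nano": "yolov8n.pt",
    "YOLOv8 Small": "yolov8s.pt",
    "YOLOv8 Medium": "yolov8m.pt",
    "YOLOv8 Large": "yolov8l.pt",
    "YOLOv8 ExtraLarge": "yolov8x.pt",
    "YOLOv9 Compact": "yolov9c.pt",
    "YOLOv9 Enhanced": "yolov9e.pt",
}


def build_dataset_yaml(data_dir: str, class_names_text: str) -> str:
    """Turn the class-name text box (one name per line) into dataset YAML text."""
    names = [line.strip() for line in class_names_text.splitlines() if line.strip()]
    if not names:
        raise ValueError("enter at least one class name")
    lines = [
        # json.dumps yields a quoted string that YAML reads back unchanged.
        f"path: {json.dumps(str(Path(data_dir).resolve()))}",
        # Images and labels share one folder, so train and val both point at it
        # (reusing the training images for validation is a simplification).
        "train: .",
        "val: .",
        "names:",
    ]
    # Quoting each name keeps values such as "no" from being read as booleans.
    lines += [f"  {i}: {json.dumps(name)}" for i, name in enumerate(names)]
    return "\n".join(lines) + "\n"


def start_training(project_name: str, data_dir: str, save_dir: str, model_size: str,
                   imgsz: int, epochs: int, batch: int, class_names_text: str):
    """Write the dataset YAML and launch Ultralytics training (sketch)."""
    from ultralytics import YOLO  # lazy import; requires the ultralytics package

    out = Path(save_dir)
    out.mkdir(parents=True, exist_ok=True)
    yaml_path = out / f"{project_name}_dataset.yaml"
    yaml_path.write_text(build_dataset_yaml(data_dir, class_names_text), encoding="utf-8")
    model = YOLO(CHECKPOINTS[model_size])  # downloads the checkpoint if not cached
    return model.train(data=str(yaml_path), epochs=epochs, imgsz=imgsz,
                       batch=batch, project=str(out), name=project_name)
```

With the example values from the list above, a call such as `start_training("demo", "data", "runs", "YOLOv8 Nano", 640, 100, 16, "cat\ndog")` would write its results under `runs/demo`.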

Note: Make sure to provide all the required information, or the training process will not start.
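The required-information check in the note above can be sketched as a function that collects every problem at once, so the user sees them together rather than one at a time. This is a minimal sketch: the function name, the messages, and treating every GUI entry as a string are assumptions, not the app's actual behavior.

```python
import re


def training_input_problems(project_name: str, data_dir: str, save_dir: str,
                            imgsz: str, epochs: str, batch: str,
                            class_names_text: str) -> list:
    """Collect every problem with the Train tab inputs; [] means ready to train."""
    problems = []
    if not re.fullmatch(r"[A-Za-z0-9]+", project_name or ""):
        problems.append("project name must be alphanumeric")
    if not data_dir:
        problems.append("select a training data directory")
    if not save_dir:
        problems.append("select a save directory")
    # Entry widgets return text, so the numeric fields are checked as strings.
    for label, value in (("input size", imgsz), ("epochs", epochs), ("batch size", batch)):
        text = (value or "").strip()
        if not (re.fullmatch(r"[0-9]+", text) and int(text) > 0):
            problems.append(f"{label} must be a positive integer")
    if not (class_names_text or "").strip():
        problems.append("enter at least one class name")
    return problems
```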

In the Image/Video tab, you can perform object detection on images or videos:

- Select the folder containing the images or videos you want to process.

- Choose the trained YOLO model file (.pt) for detection.

- Click the "Start Detection!" button to initiate the detection process.

- The detection results will be displayed in the application window.

- Use the navigation buttons (◀ and ▶) to browse through the processed images or video frames.
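The Image/Video workflow can be sketched in three parts: gather the media files in the chosen folder, run the `.pt` model on each with Ultralytics' `predict`, and keep an index for the ◀ and ▶ buttons. This is a sketch under stated assumptions: the extension lists, the wrap-around behavior of the buttons, the output folder name, and all helper names are hypothetical, and the app may behave differently.

```python
from pathlib import Path

# Assumed extension lists; adjust them to the formats you actually use.
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}
VIDEO_EXTS = {".mp4", ".avi", ".mov", ".mkv"}


def collect_media(folder: str) -> list:
    """Sorted image and video files directly inside folder (not recursive)."""
    wanted = IMAGE_EXTS | VIDEO_EXTS
    return sorted(p for p in Path(folder).iterdir()
                  if p.is_file() and p.suffix.lower() in wanted)


class ResultBrowser:
    """Current position in the processed results, moved by the ◀ and ▶ buttons."""

    def __init__(self, items):
        self.items = list(items)
        if not self.items:
            raise ValueError("nothing to browse")
        self.index = 0

    def next_item(self):
        # ▶: step forward, wrapping from the last item back to the first.
        self.index = (self.index + 1) % len(self.items)
        return self.items[self.index]

    def prev_item(self):
        # ◀: step back, wrapping from the first item to the last.
        self.index = (self.index - 1) % len(self.items)
        return self.items[self.index]


def run_detection(folder: str, model_path: str, out_dir: str) -> None:
    """Run a trained model on every media file and save annotated copies."""
    from ultralytics import YOLO  # lazy import; requires the ultralytics package

    model = YOLO(model_path)
    for media in collect_media(folder):
        # stream=True yields results one frame at a time, keeping memory low for videos.
        for _ in model.predict(source=str(media), save=True, project=out_dir,
                               name="detections", exist_ok=True, stream=True):
            pass
```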

Note: Ensure that you have selected the correct folders and model file, or the detection process will not work.
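A pre-flight check of the kind the note above implies might look like this, assuming the model is an Ultralytics `.pt` checkpoint; `check_detection_inputs` is a hypothetical name. Because `Path("")` refers to the current directory, empty selections are tested explicitly instead of being passed to `Path`.

```python
from pathlib import Path


def check_detection_inputs(media_folder: str, model_file: str) -> list:
    """Return a list of problems with the selected folder and model file."""
    problems = []
    # Guard empty strings first: Path("") would silently mean the current directory.
    if not media_folder or not Path(media_folder).is_dir():
        problems.append("media folder not found")
    if not model_file or not Path(model_file).is_file():
        problems.append("model file not found")
    elif Path(model_file).suffix.lower() != ".pt":
        problems.append("model file should be a .pt checkpoint")
    return problems
```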

In the Camera tab, you can perform real-time object detection using a webcam:

- Select the trained YOLO model file (.pt) for detection.

- Choose the directory where you want to save the detection results.

- Enter the camera ID (e.g., 0 for the default webcam).

- Click the "START" button to begin the real-time detection.

- The live camera feed with object detection will be displayed in the application window.

- Press the "ENTER" key to capture and save the current frame and its detection results.

- Click the "STOP" button to stop the real-time detection.
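The camera workflow resembles a standard OpenCV capture loop. This sketch assumes `opencv-python` and `ultralytics` are installed and uses an OpenCV window plus the `q` key in place of the app's START and STOP buttons; pressing Enter saves the annotated frame and its YOLO-format labels. The timestamped file naming and the function names are hypothetical; `Results.plot()` and `Results.save_txt()` are Ultralytics methods.

```python
from datetime import datetime
from pathlib import Path

ENTER_KEYS = {10, 13}  # Enter may be reported as CR (13) or LF (10)
QUIT_KEY = ord("q")    # stands in for the app's STOP button in this sketch


def capture_paths(save_dir: str, when: datetime) -> tuple:
    """Timestamped image and label paths for one captured frame."""
    stem = when.strftime("capture_%Y%m%d_%H%M%S_%f")
    base = Path(save_dir)
    return base / f"{stem}.jpg", base / f"{stem}.txt"


def run_camera(model_path: str, save_dir: str, camera_id: int = 0) -> None:
    """Live detection loop; Enter saves the annotated frame and its labels."""
    import cv2                    # lazy import; requires opencv-python
    from ultralytics import YOLO  # lazy import; requires ultralytics

    Path(save_dir).mkdir(parents=True, exist_ok=True)
    model = YOLO(model_path)
    cap = cv2.VideoCapture(camera_id)
    if not cap.isOpened():
        raise RuntimeError(f"cannot open camera {camera_id}")
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            result = model(frame, verbose=False)[0]
            annotated = result.plot()  # BGR image with the boxes drawn on it
            cv2.imshow("YOLO camera", annotated)
            key = cv2.waitKey(1) & 0xFF
            if key in ENTER_KEYS:
                img_path, txt_path = capture_paths(save_dir, datetime.now())
                cv2.imwrite(str(img_path), annotated)
                result.save_txt(str(txt_path))  # YOLO-format labels for this frame
            elif key == QUIT_KEY:
                break
    finally:
        cap.release()
        cv2.destroyAllWindows()
```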

Note: Make sure that you have selected the correct model file and save directory, and entered a valid camera ID, or the real-time detection will not function properly.
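If you are unsure which camera ID to enter, probing a few IDs with OpenCV is a common approach. A minimal sketch: the probe range, the helper names, and the injectable `opener` parameter (which lets the function be exercised without a camera attached) are design assumptions, not features of the app.

```python
from typing import Callable, Optional


def _opencv_opener(camera_id: int) -> bool:
    """Try to open a camera with OpenCV and release it immediately."""
    import cv2  # lazy import; requires opencv-python

    cap = cv2.VideoCapture(camera_id)
    try:
        return cap.isOpened()
    finally:
        cap.release()


def find_camera_ids(max_id: int = 5,
                    opener: Optional[Callable[[int], bool]] = None) -> list:
    """Return the IDs in range(max_id) that the opener reports as usable."""
    opener = opener or _opencv_opener
    return [i for i in range(max_id) if opener(i)]


def parse_camera_id(text: str) -> int:
    """Validate the Camera ID field: a non-negative integer such as "0"."""
    cleaned = text.strip()
    if not cleaned.isdecimal():
        raise ValueError(f"camera ID must be a non-negative integer, got {text!r}")
    return int(cleaned)
```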