Experience RAG in just a few minutes with free LLM inference on NVIDIA GPUs!

Follow steps 1 to 5 below, then run this Colab notebook.

Step 1. Go to the Try NVIDIA NIM APIs page, then choose Login at the top right to log in or register (a corporate email address is recommended).

Step 2. On the home page, select one of the available models (e.g., llama3-70b-instruct).

Step 3. Generate an API key for the model and save it; we will use this key later to run inference against the NVIDIA cloud-hosted LLM:

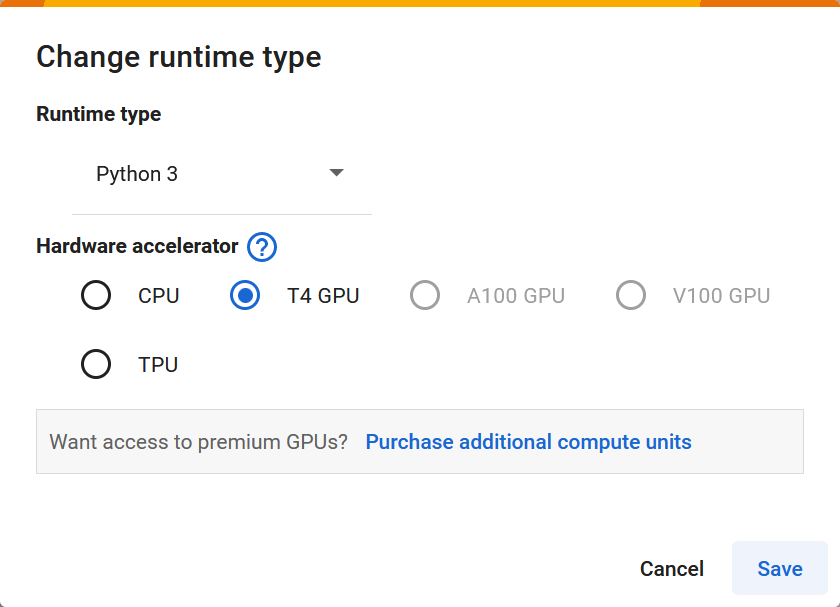

Step 4. Open Colab and select Runtime > Change runtime type > T4 GPU.

Step 5. Paste your API key into the cell at the beginning of the notebook, then run the cell to access the model on NGC.
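
To illustrate how the key from Step 3 is used, here is a minimal sketch of a chat request built with the Python standard library only. It is not the notebook's actual cell. The endpoint URL, the model id `meta/llama3-70b-instruct`, and the helper names `build_chat_request` and `ask` are assumptions made for illustration; check the model's page for the exact values.

```python
import json
import urllib.request

# Assumptions (not stated in the steps above): an OpenAI-style chat endpoint
# and this model id. Verify both on the model's page before relying on them.
BASE_URL = "https://integrate.api.nvidia.com/v1"
MODEL = "meta/llama3-70b-instruct"


def build_chat_request(api_key, prompt, model=MODEL):
    """Build (but do not send) a chat-completion request. Hypothetical helper."""
    if not api_key:
        raise ValueError("API key is empty; paste the key generated in Step 3")
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 64,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # The key is sent as a bearer token in the Authorization header.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(api_key, prompt):
    """Send the request and return the model's reply text. Hypothetical helper."""
    req = build_chat_request(api_key, prompt)
    with urllib.request.urlopen(req, timeout=30) as resp:
        body = json.load(resp)
    # Assumes an OpenAI-style response layout.
    return body["choices"][0]["message"]["content"]
```

Calling `ask(your_key, "What is RAG?")` sends the request over the network, so it needs a valid key and internet access. Keeping the key in a variable set once at the top of the notebook, as Step 5 describes, avoids repeating it in later cells.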