This is the code for the FedPG-BR framework presented in the paper:

Flint Xiaofeng Fan, Yining Ma, Zhongxiang Dai, Wei Jing, Cheston Tan and Kian Hsiang Low. "Fault-Tolerant Federated Reinforcement Learning with Theoretical Guarantee." In 35th Conference on Neural Information Processing Systems (NeurIPS-21), Dec 6-14, 2021.

The experimental results in the published paper were obtained on a computing server with the following configuration: Ubuntu 18.04 with a 14-core (28-thread) Intel(R) Core(TM) i9-10940X CPU @ 3.30GHz and 64 GB of memory. Our experiments can be reproduced on a moderate machine with no GPUs. The only tricky part of setting up the experiments might be the versions of mujoco and mujoco-py. If you have difficulties with the setup, feel free to open an issue specifying the dependencies of your environment and the command you ran.

This paper provides the theoretical ground to study the sample efficiency of Federated Reinforcement Learning with respect to the number of participating agents, accounting for Byzantine agents. Specifically, we aim to answer the following questions:

- how can a better policy be learned when sampling capability is restricted (e.g., limited samples)?

- does the setup of federated learning guarantee the sample efficiency improvement of reinforcement learning agents?

- how does this improvement correlate with the number of participating agents?

- what happens if some agents turn into faulty agents (i.e., Byzantine agents)?

- FedPG-BR is a federated version of policy gradient that runs SCSG (Stochastically Controlled Stochastic Gradient; see the SCSG paper) optimization

- SCSG enables a refined control over the PG estimation variance

- a gradient-based probabilistic Byzantine-filter to remove or reduce the effects of Byzantine agents

- its empirical success in RL problems relies on variance-reduced estimation of the policy gradient
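To give a feel for what a gradient-based Byzantine filter does, here is a minimal sketch. It is a simplified illustration and not the filter from the paper or from this repo: the function name `filter_gradients` and the fixed `threshold` radius are assumptions made for the example, whereas the paper derives its thresholds from a variance bound. The idea shown is only that gradients agreeing with a majority of agents are kept and averaged.

```python
import numpy as np


def filter_gradients(gradients, threshold):
    """Average the gradients that lie close to a majority of the others.

    Simplified illustration only: `threshold` is a hypothetical fixed radius,
    not the variance-based bound used in the paper.
    """
    grads = np.asarray(gradients, dtype=float)      # shape (K, d)
    k = grads.shape[0]
    # Pairwise Euclidean distances between the agents' gradient estimates.
    diffs = grads[:, None, :] - grads[None, :, :]
    dists = np.linalg.norm(diffs, axis=-1)          # shape (K, K)
    # Keep an agent if strictly more than half of all agents
    # (itself included) are within `threshold` of it.
    close_counts = (dists <= threshold).sum(axis=1)
    keep = close_counts > k / 2
    if not keep.any():
        raise ValueError("no gradient agrees with a majority of agents")
    return grads[keep].mean(axis=0), keep


rng = np.random.default_rng(0)
honest = 1.0 + 0.01 * rng.standard_normal((7, 4))   # 7 honest agents near 1.0
byzantine = 50.0 * rng.standard_normal((3, 4))      # 3 random-noise agents
aggregate, kept = filter_gradients(np.vstack([honest, byzantine]), threshold=0.5)
print(kept)
print(aggregate)
```

With 7 honest and 3 noisy agents, the honest gradients cluster tightly and pass the majority test, while the noisy ones are isolated and dropped before averaging.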

- Python 3.7

- PyTorch 1.5.0

- numpy

- tensorboard

- tqdm

- sklearn

- matplotlib

- OpenAI Gym

- Box2d [for running experiments on LunarLander environment]

- mujoco150

- mujoco-py 1.50.1.68 [for running experiments on HalfCheetah environment]

The versions listed above are the mujoco and mujoco-py versions we used in this repo. We did not test our code on newer versions of mujoco.

$ conda create -n FedPG-BR pytorch=1.5.0

$ conda activate FedPG-BR

Please then follow the instructions here to set up mujoco and install mujoco-py. Please download the legacy version of mujoco (mujoco150), as we did not test with the latest version of mujoco. Proceed once you have ensured that mujoco_py has been successfully installed.

Alternatively, you can skip the installation of mujoco and mujoco-py. You can still run our code with CartPole (tested), LunarLander (tested) and other similar environments (not tested).
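If you are unsure which of the two paths applies to your machine, a small check like the following may help. It is a sketch that is not part of this repo: the helper names `module_available` and `default_env_name` are made up for illustration, and the check only tells you whether a package can be found, not whether mujoco_py builds and runs correctly.

```python
import importlib.util


def module_available(name):
    """Return True if the top-level module `name` can be located."""
    return importlib.util.find_spec(name) is not None


def default_env_name():
    """Suggest HalfCheetah-v2 when mujoco_py is found, else CartPole-v1."""
    return "HalfCheetah-v2" if module_available("mujoco_py") else "CartPole-v1"


# Report a few of the dependencies listed above without importing them.
for module in ("torch", "numpy", "gym", "mujoco_py"):
    status = "found" if module_available(module) else "missing"
    print(f"{module}: {status}")
print("suggested --env_name:", default_env_name())
```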

Then proceed with

$ pip install -r requirements.txt

To check your installation, run

python run.py --env_name HalfCheetah-v2 --FedPG_BR --num_worker 10 --num_Byzantine 0 --log_dir ./logs_HalfCheetah --multiple_run 10 --run_name HalfCheetah_FedPGBR_W10B0

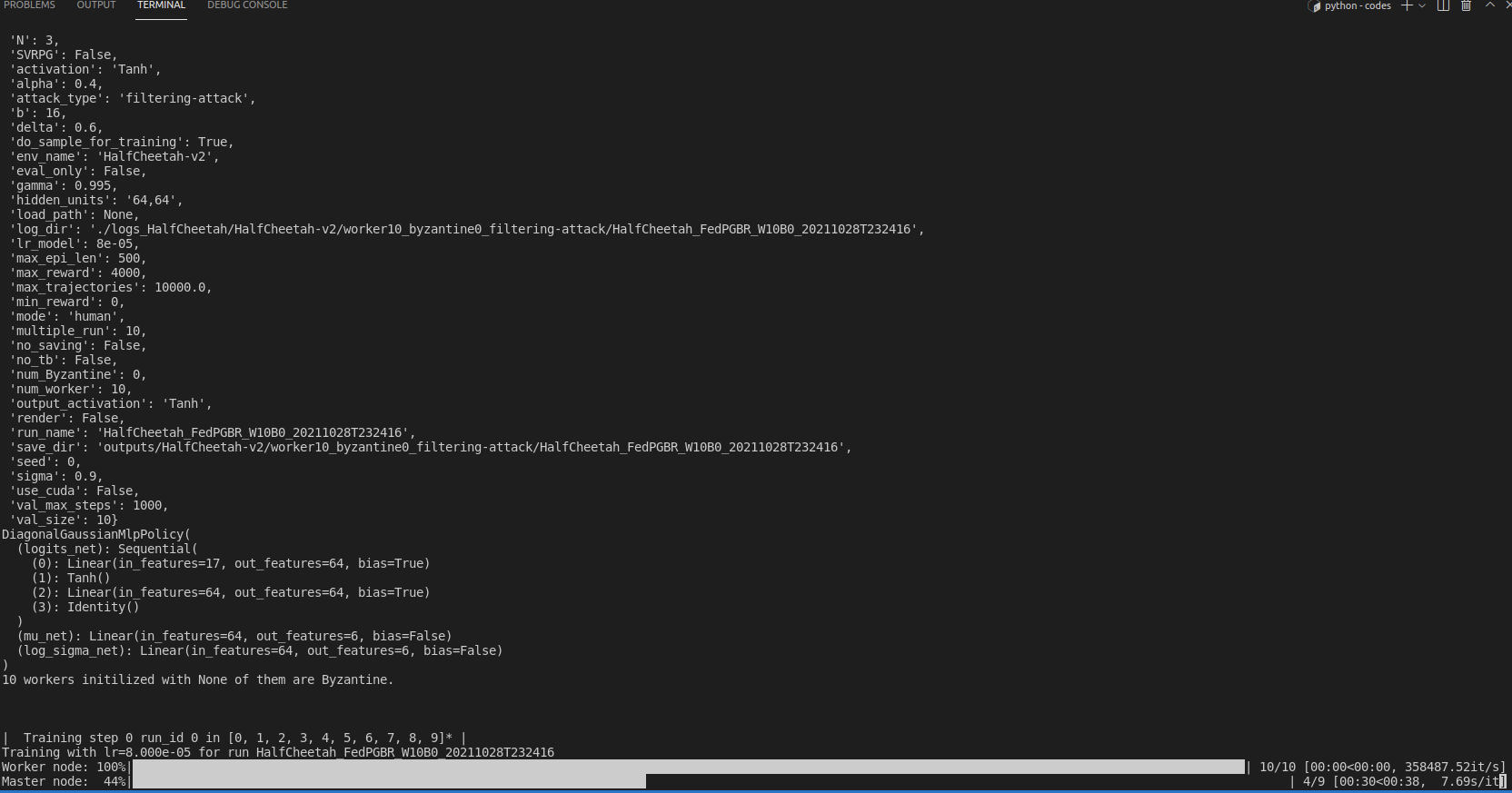

If terminal returns messages similar to those shown below, then your installation is all good.

If you do not have mujoco installed, you can switch the environment to CartPole by running:

python run.py --env_name CartPole-v1 --FedPG_BR --num_worker 10 --num_Byzantine 0 --log_dir ./logs_CartPole --multiple_run 10 --run_name CartPole_FedPGBR_W10B0

To reproduce the results of FedPG-BR (K = 10) in Figure 1 of our paper for the HalfCheetah task, run the following command:

python run.py --env_name HalfCheetah-v2 --FedPG_BR --num_worker 10 --num_Byzantine 0 --log_dir ./logs_HalfCheetah --multiple_run 10 --run_name HalfCheetah_FedPGBR_W10B0

To reproduce the results of FedPG-BR (K = 10, B = 3) in Figure 2 of our paper, where 3 Byzantine agents are Random Noise in the HalfCheetah task environment, run the following command:

python run.py --env_name CartPole-v1 --FedPG_BR --num_worker 10 --num_Byzantine 3 --attack_type random-noise --log_dir ./logs_Cartpole --multiple_run 10 --run_name Cartpole_FedPGBR_W10B3

Replace --FedPG_BR with --SVRPG for the results of SVRPG in the same experiment.
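When running both methods in the same experiment, it can be convenient to generate the command lines rather than edit them by hand. The sketch below is not part of this repo. It only assembles strings from the flags shown in this README; the helper name `build_command` and the `Cartpole_SVRPG_W10B3` run name are assumptions made for illustration.

```python
import shlex


def build_command(env_name, method, num_worker, num_byzantine,
                  log_dir, multiple_run, run_name, attack_type=None):
    """Assemble a `python run.py ...` command from flags shown in this README."""
    if method not in ("FedPG_BR", "SVRPG"):
        raise ValueError(f"unsupported method: {method}")
    args = ["python", "run.py", "--env_name", env_name, f"--{method}",
            "--num_worker", str(num_worker),
            "--num_Byzantine", str(num_byzantine)]
    if attack_type is not None:
        args += ["--attack_type", attack_type]
    args += ["--log_dir", log_dir, "--multiple_run", str(multiple_run),
             "--run_name", run_name]
    # Quote each argument so the result can be pasted into a shell.
    return " ".join(shlex.quote(a) for a in args)


# Print one command per method for the same Byzantine experiment.
for method, tag in (("FedPG_BR", "FedPGBR"), ("SVRPG", "SVRPG")):
    print(build_command("CartPole-v1", method, 10, 3, "./logs_Cartpole", 10,
                        f"Cartpole_{tag}_W10B3", attack_type="random-noise"))
```

The first printed line matches the FedPG-BR command above, and the second differs only in the method flag and the run name.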

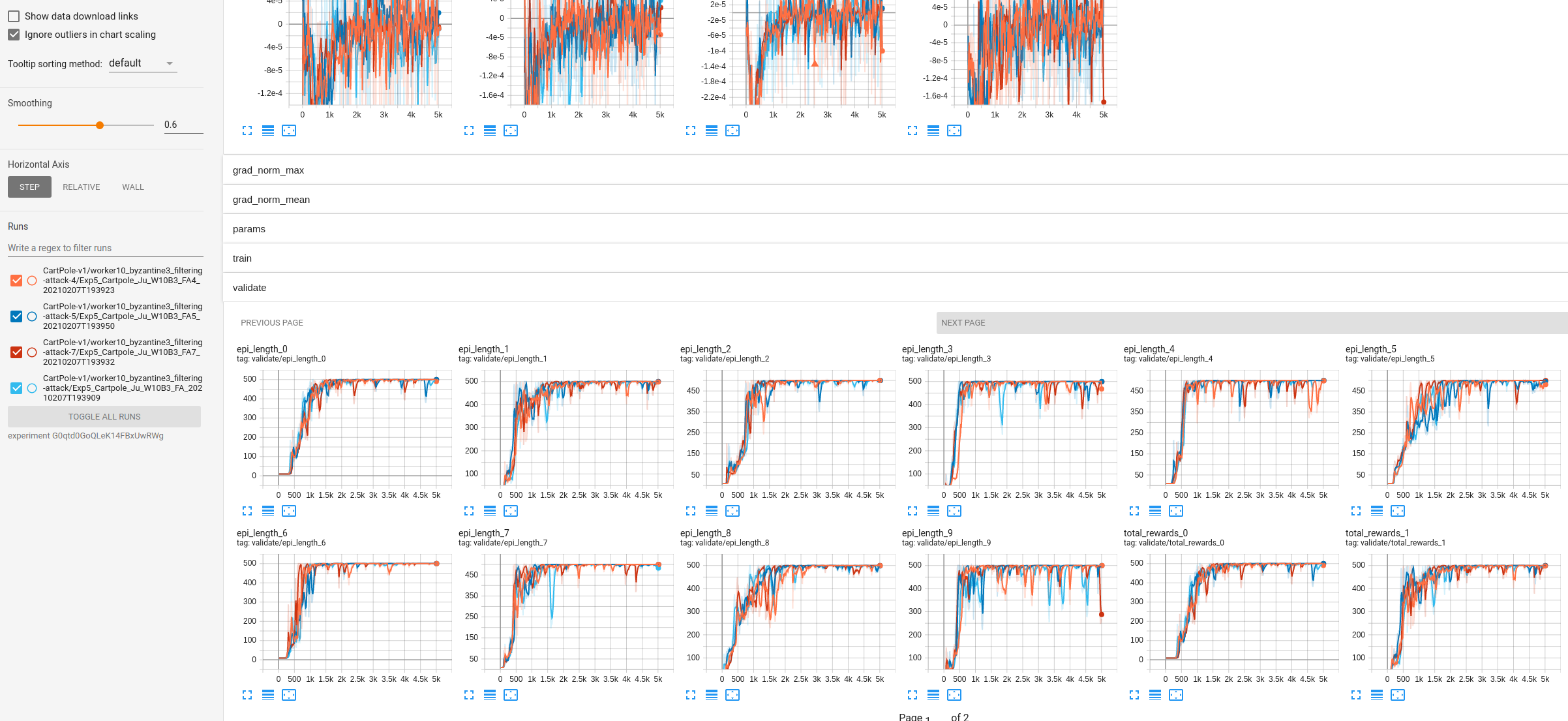

All results, including all statistics, are logged into the directory passed to --log_dir and can be visualized in TensorBoard, e.g.,

tensorboard --logdir logs_Cartpole/ --host localhost --port 8008

You will be able to visualize different stats of each run there.

To visualize the behavior of the learnt policy, run the experiment in evaluation mode with the rendering option on. For example:

$ python run.py --env_name CartPole-v1 --FedPG_BR --eval_only --render --load_path PATH_TO_THE_SAVED_POLICY_MODEL

See the video file ./FedPG-BR_demo_edited.mp4

This research/project is supported by A\*STAR under its RIE2020 Advanced Manufacturing and Engineering (AME) Industry Alignment Fund – Pre Positioning (IAF-PP) (Award A19E4a0101) and its A\*STAR Computing and Information Science Scholarship (ACIS) awarded to Flint Xiaofeng Fan. Wei Jing is supported by Alibaba Innovative Research (AIR) Program.

@inproceedings{fan2021faulttolerant,
  title={Fault-Tolerant Federated Reinforcement Learning with Theoretical Guarantee},
  author={Flint Xiaofeng Fan and Yining Ma and Zhongxiang Dai and Wei Jing and Cheston Tan and Bryan Kian Hsiang Low},
  booktitle={Advances in Neural Information Processing Systems},
  editor={A. Beygelzimer and Y. Dauphin and P. Liang and J. Wortman Vaughan},
  year={2021},
  url={https://openreview.net/forum?id=ospGnpuf6L}
}