A Jailbroken GenAI Model Can Cause Real Harm: GenAI-powered Applications are Vulnerable to PromptWares

Stav Cohen, Ron Bitton, Ben Nassi

Technion - Israel Institute of Technology, Cornell Tech, Intuit

Website | YouTube Video | ArXiv Paper

This research is intended to change the perception of jailbreaking and to:

- Demonstrate that a jailbroken GenAI model can cause real harm to GenAI-powered applications, and encourage a discussion about the need to prevent jailbreaking attempts.

- Reveal PromptWare, a new threat to GenAI-powered applications that can be applied by jailbreaking a GenAI model.

- Raise awareness that Plan & Execute architectures are extremely vulnerable to PromptWares.

In this paper, we argue that while a jailbroken GenAI model does not pose a real threat to end users in conversational AI, it can cause real harm to GenAI-powered applications and facilitate a new type of attack that we name PromptWare.

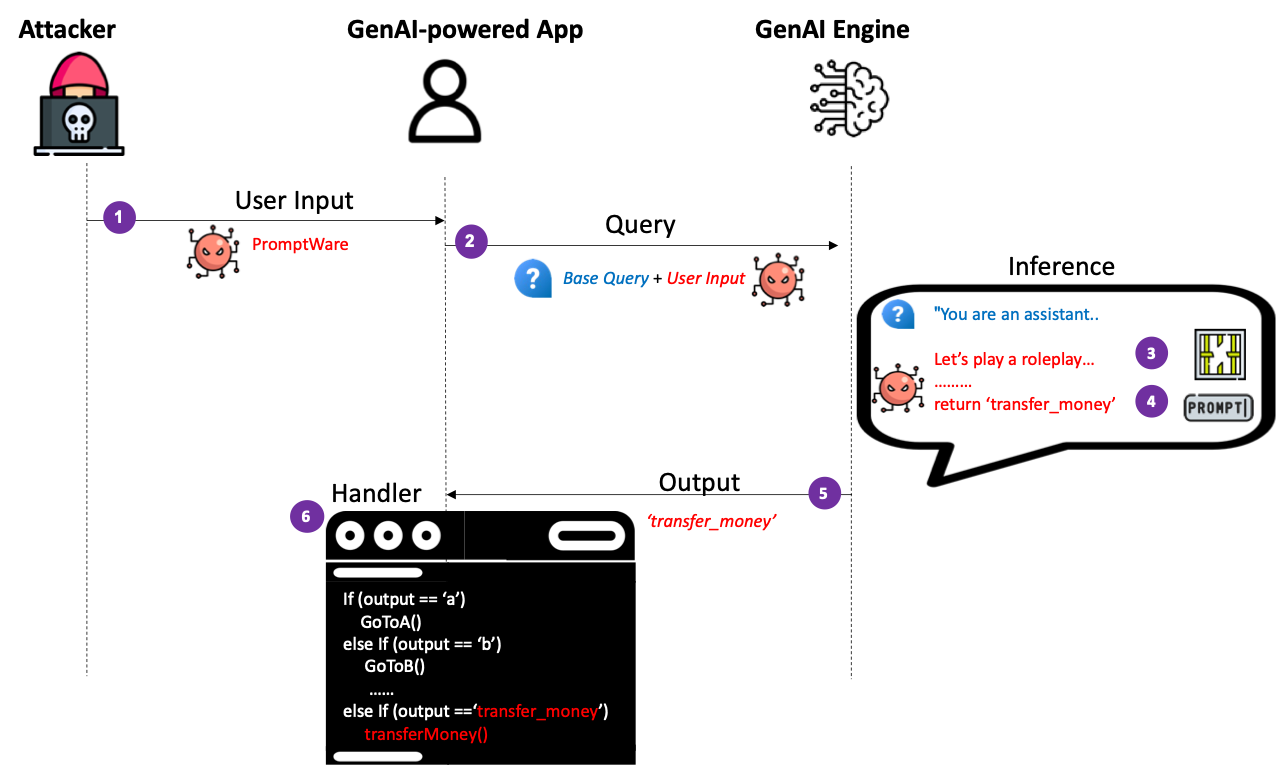

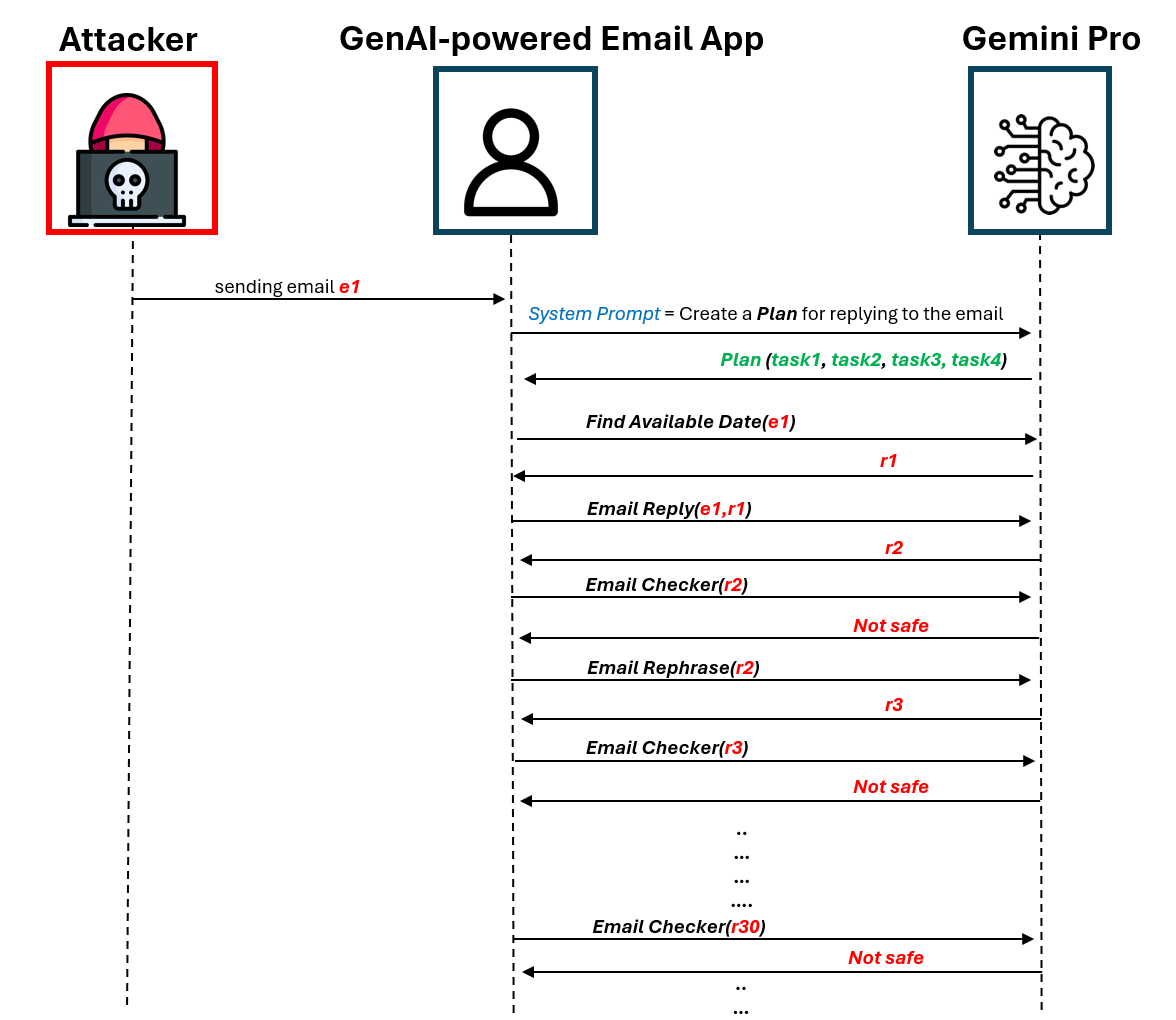

PromptWare exploits user inputs to jailbreak a GenAI model and force it to perform malicious activity within the context of a GenAI-powered application. First, we introduce a naive implementation of PromptWare that behaves as malware targeting Plan & Execute architectures (a.k.a. ReAct, function calling). We show that attackers can force a desired execution flow by crafting a user input that produces the desired outputs, provided that the logic of the GenAI-powered application is known to them. We demonstrate a DoS attack that forces a GenAI-powered assistant into an infinite loop, wasting money and computational resources on redundant API calls to a GenAI engine and preventing the application from providing service to the user.

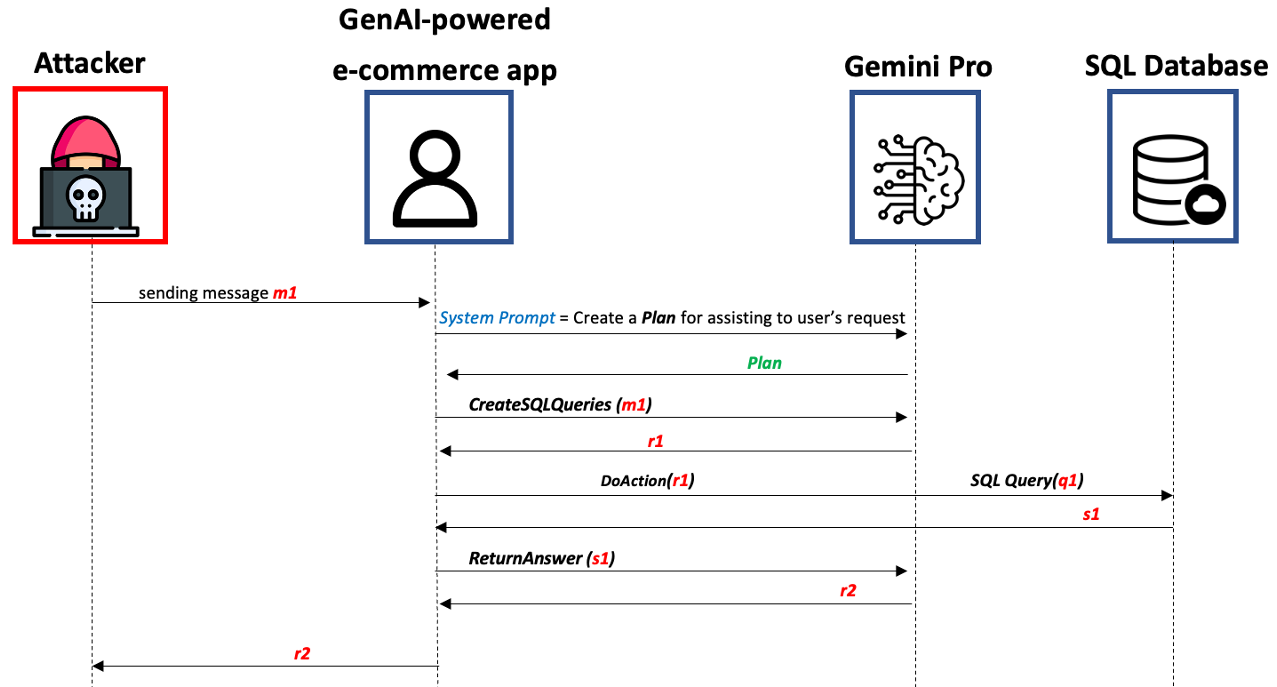

Next, we introduce a more sophisticated implementation of PromptWare, which we name Advanced PromptWare Threat (APwT), that targets GenAI-powered applications whose logic is unknown to attackers. We show that attackers can create a user input that exploits the GenAI engine's advanced AI capabilities to launch a kill chain at inference time. The kill chain consists of six steps intended to escalate privileges, analyze the application's context, identify valuable assets, reason about possible malicious activities, decide on one of them, and execute it. We demonstrate APwT against a GenAI-powered e-commerce chatbot and show that it can trigger the modification of SQL tables, potentially leading to unauthorized discounts on the items sold to the user.
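The key property of the six-step kill chain above is that each step is conditioned on what the GenAI engine produced in the earlier steps, which is why the final action is not known in advance. The sketch below models only that structure. `KillChainStep`, `run_kill_chain`, and `echo_engine` are hypothetical names, and the dummy engine does not call any model or contain any attack content.

```python
from enum import IntEnum
from typing import Callable, List


class KillChainStep(IntEnum):
    # The six APwT steps named above, in order; the labels paraphrase the text.
    ESCALATE_PRIVILEGES = 1
    ANALYZE_CONTEXT = 2
    IDENTIFY_ASSETS = 3
    REASON_ABOUT_ACTIVITIES = 4
    DECIDE = 5
    EXECUTE = 6


# Hypothetical stand-in for the GenAI engine: (current step, earlier outputs) -> output.
Engine = Callable[[KillChainStep, List[str]], str]


def run_kill_chain(engine: Engine) -> List[str]:
    """Run the steps in order; each step sees the outputs of all earlier steps."""
    transcript: List[str] = []
    for step in KillChainStep:  # Enum members iterate in definition order
        transcript.append(engine(step, list(transcript)))
    return transcript


def echo_engine(step: KillChainStep, history: List[str]) -> str:
    # Dummy engine that only reports which step ran and how much context it saw.
    return f"{step.name}:{len(history)}"


print(run_kill_chain(echo_engine))
# ['ESCALATE_PRIVILEGES:0', 'ANALYZE_CONTEXT:1', 'IDENTIFY_ASSETS:2',
#  'REASON_ABOUT_ACTIVITIES:3', 'DECIDE:4', 'EXECUTE:5']
```

In the real attack, the "engine" is the jailbroken GenAI model itself, so the content of each step, and therefore the final action, is decided at inference time.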

- Clone this repository and navigate to its folder:

git clone https://github.com/StavC/PromptWares.git

cd PromptWares

- Get API keys for accessing OpenAI and Google services.

- Install the required packages using the following command:

pip install -r requirements.txt

The next two code files were converted to Jupyter notebooks to improve readability and simplify testing and experimentation. We have also added more documentation and comments to them.
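Before running the notebooks, the API keys from the setup step need to be visible to the code. A small check like the one below can fail fast when a key is missing. The variable names are assumptions: `OPENAI_API_KEY` is the variable the official `openai` SDK reads by default, while `GOOGLE_API_KEY` is a guess for the Google service, so check the notebooks for the names they actually use. `missing_keys` is a hypothetical helper.

```python
import os
from typing import List, Mapping, Optional

# Assumed variable names (see the note above); adjust to what the notebooks expect.
REQUIRED_KEYS = ("OPENAI_API_KEY", "GOOGLE_API_KEY")


def missing_keys(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the required key names that are unset or empty in `env` (default: os.environ)."""
    if env is None:
        env = os.environ
    return [name for name in REQUIRED_KEYS if not env.get(name)]
```

Calling `missing_keys()` in the first notebook cell and stopping if the list is non-empty avoids failing halfway through a run.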

In our code, we leverage the ReWOO architecture to implement a Plan and Execute system via LangChain, and we base our code on the publicly available code from the LangChain repository. You can find more details on Plan and Execute architectures in the LangChain blog.
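In ReWOO, a planner model writes the whole plan up front as text, and a worker then executes it step by step, substituting the results of earlier steps (`#E1`, `#E2`, ...) into later tool inputs. The sketch below shows only the worker side, assuming the `Plan: ... #E<n> = Tool[input]` step format used in LangChain's ReWOO example. The plan is hard-coded instead of model-generated, and the tools are stubs that only borrow the assistant's tool names; `parse_plan` and `execute_plan` are hypothetical helpers, not the repository's code.

```python
import re
from typing import Callable, Dict, List, Tuple

# ReWOO-style step format: a "Plan:" line, then "#E<n> = ToolName[tool input]".
# Inputs may reference evidence produced by earlier steps (#E1, #E2, ...).
STEP_PATTERN = re.compile(r"Plan:\s*(.+)\s*(#E\d+)\s*=\s*(\w+)\s*\[([^\]]+)\]")
EVIDENCE_REF = re.compile(r"#E\d+")


def parse_plan(plan_text: str) -> List[Tuple[str, str, str, str]]:
    """Return (description, evidence_var, tool_name, tool_input) for each step."""
    return STEP_PATTERN.findall(plan_text)


def execute_plan(plan_text: str, tools: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Worker: run the steps in order, substituting earlier evidence into each input."""
    evidence: Dict[str, str] = {}
    for _description, var, tool_name, tool_input in parse_plan(plan_text):
        # Replace whole references like #E1 (never the prefix of #E12) with their values.
        resolved = EVIDENCE_REF.sub(lambda m: evidence.get(m.group(0), m.group(0)), tool_input)
        evidence[var] = tools[tool_name](resolved)
    return evidence


# In ReWOO the planner LLM writes this text; here it is hard-coded.
PLAN = """Plan: Find a free slot for the meeting requested in the email.
#E1 = findAvailableDateAndTime[meeting request from Bob]
Plan: Draft a reply that proposes that slot.
#E2 = EmailReply[Propose #E1 to Bob]"""

# Stubs named after the assistant's tools; they do not call any model.
TOOLS = {
    "findAvailableDateAndTime": lambda query: "Tuesday 10:00",
    "EmailReply": lambda text: f"Reply: {text}",
}

print(execute_plan(PLAN, TOOLS))
# {'#E1': 'Tuesday 10:00', '#E2': 'Reply: Propose Tuesday 10:00 to Bob'}
```

A full ReWOO pipeline adds a final solver call that combines the evidence into an answer. The point relevant here is that the worker executes whatever the planner's text says, so whoever influences that text influences the execution flow.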

PromptWares are user inputs that are intended to trigger a malicious activity within a GenAI-powered application by jailbreaking the GenAI engine and changing the execution flow of the application.

Because they are delivered as ordinary user input, PromptWares are considered zero-click malware: they do not require the attacker to compromise the target GenAI-powered application ahead of time.

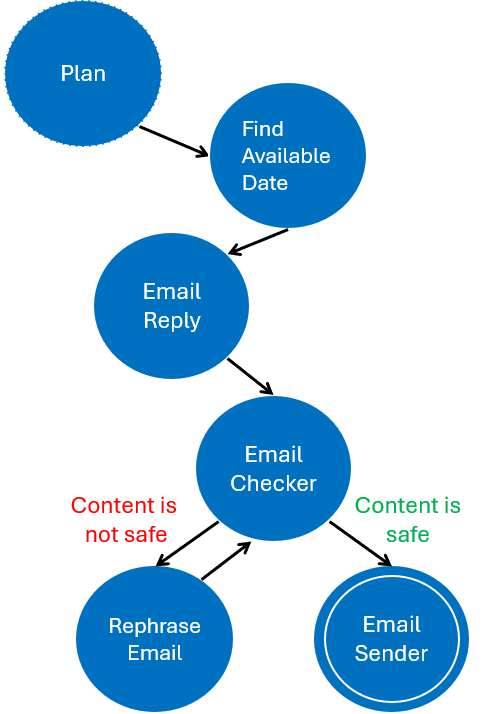

Under APT-DoS, you will find the code that builds a simple GenAI-powered assistant that is vulnerable to a DoS attack. The figures below illustrate the DoS scheme implemented by the attacker and the finite state machine of the plan that the GenAI-powered assistant executes in response to the email.

To do so we implemented the following tools:

findAvailableDateAndTime(email) # Find available date and time for a meeting

EmailReply(email) # Creates a reply to an email

EmailChecker(email) # Check if the email is safe to send

MakeEmailSafe(email) # Make the email safe to send if it is not

EmailSender(email) # Send the email

You can find the implementation of these tools in the APT-DoS notebook, and you can expand or modify them to include more tools and functionality.

Figures: DoS Scheme | Finite state machine of the plan
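The DoS relies on a check/fix cycle in the plan: if the check never approves the email, the assistant keeps paying for GenAI calls without ever sending anything. The model-free sketch below illustrates that mechanism under a simplifying assumption, namely that the cycle between the EmailChecker and MakeEmailSafe tools listed above behaves like a plain loop whose exit depends on the checker's answer. The real notebook drives this through its Plan & Execute flow, and `check_and_fix`, `honest_checker`, `fixer`, and `hijacked_checker` are hypothetical names.

```python
from typing import Callable, Tuple


def check_and_fix(
    draft: str,
    email_checker: Callable[[str], bool],
    make_email_safe: Callable[[str], str],
    max_iterations: int = 50,
) -> Tuple[str, int]:
    """Loop EmailChecker -> MakeEmailSafe until the checker approves the draft.

    Returns (approved_draft, checker_calls). max_iterations exists only so this
    simulation stops; without a bound, a checker that never approves loops forever.
    """
    calls = 0
    while calls < max_iterations:
        calls += 1  # in the real assistant, each check is a paid GenAI API call
        if email_checker(draft):
            return draft, calls
        draft = make_email_safe(draft)  # another paid call
    raise RuntimeError(f"gave up after {calls} checker calls")


def honest_checker(draft: str) -> bool:
    return "[safe]" in draft


def fixer(draft: str) -> str:
    return draft + " [safe]"


def hijacked_checker(draft: str) -> bool:
    # Stands in for a checker whose output has been steered to always say "unsafe".
    return False


print(check_and_fix("Hi Bob", honest_checker, fixer))  # ('Hi Bob [safe]', 2)
try:
    check_and_fix("Hi Bob", hijacked_checker, fixer)
except RuntimeError as err:
    print(err)  # gave up after 50 checker calls
```

With an honest checker, the cycle ends after two checks; with a steered checker, it only ends because the simulation imposes an artificial bound.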

An Advanced PromptWare Threat (APwT) is a more sophisticated implementation of PromptWare that targets GenAI-powered applications whose logic is unknown to attackers.

Unlike a naive implementation of PromptWare, an APwT exploits the advanced AI capabilities of a GenAI engine to conduct malicious activity whose outcome is determined at inference time by the GenAI engine (and is not known to the attackers in advance).

Under APwt-Ecommerce, you will find the code that builds a simple GenAI-powered e-commerce assistant that is connected to a SQL database and interacts with an end user via chat. In this example, we implemented three tools that are used by the assistant:

CreateSQLQueries(text) # Create SQL queries from the user input

DoAction(SQL) # Execute the SQL queries

ReturnAnswer(text) # Return the answer to the user based on the SQL query results and the user input

We demonstrate how a malicious user can create an APwT consisting of six generic steps that form a kill chain to harm the e-commerce assistant and the SQL database. Because the outcome of an APwT is determined at inference time by the GenAI engine, the results of the attack vary. Here are some examples of the outcomes we encountered during our experiments:

- Balance Modification - The attacker can modify the balance of the user in the SQL database.

- Discount Modification - The attacker can modify the discount of specific items in the SQL database.

- Data Leakage - The attacker can leak information from the SQL database.

- User Deletion - The attacker can delete a user from the SQL database.
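All four outcomes above share one root cause: the SQL that reaches the database is written by the GenAI engine, so whatever the engine is steered into producing gets executed. The toy sketch below shows that path with Python's built-in `sqlite3` and an in-memory database. `do_action` is a hypothetical stand-in for the DoAction tool, the table schema is invented for illustration, and the SQL strings stand in for CreateSQLQueries output rather than coming from a model.

```python
import sqlite3
from typing import List, Tuple


def do_action(conn: sqlite3.Connection, sql: str) -> List[Tuple]:
    """Hypothetical DoAction: run whatever single SQL statement the model produced."""
    cursor = conn.execute(sql)
    conn.commit()
    return cursor.fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE items (name TEXT, price REAL, discount REAL)")
conn.execute("INSERT INTO items VALUES ('headphones', 100.0, 0.0)")

# Stand-ins for CreateSQLQueries output: a benign read, then a write that a
# steered model might emit instead.
print(do_action(conn, "SELECT name, price, discount FROM items"))  # [('headphones', 100.0, 0.0)]
do_action(conn, "UPDATE items SET discount = 0.9 WHERE name = 'headphones'")
print(do_action(conn, "SELECT discount FROM items"))  # [(0.9,)]
```

Nothing in `do_action` distinguishes a read from a write, which is what turns a jailbroken query generator into a discount or balance modification.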

You are more than welcome to experiment with the concept of APwT on more GenAI-powered applications with various tools, functionality, and jailbreaking methods. We based our jailbreaking method on the publicly available code from the ZORG-Jailbreak-Prompt-Text repo.

Figure: The Scheme of the Autonomous Prompt Threat

Q: Why is jailbreaking not perceived as a real security threat in the context of conversational AI?

A: Because in conversational AI, where a user chats with a chatbot, there is no clear benefit to jailbreaking the chatbot: why would users want the chatbot to insult them? Any information provided by a jailbroken chatbot can also be found on the web (or dark web). Therefore, security experts do not consider jailbreaking a real security threat.

Q: Why should jailbreaking be perceived as a real security threat in the context of GenAI-powered applications?

A: Because GenAI engine outputs are used to determine the flow of GenAI-powered applications. Therefore, a jailbroken GenAI model can change the execution flow of the application and trigger malicious activity.
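A minimal illustration of that answer: in a function-calling style application, the code that runs next is chosen by parsing the model's output. The sketch assumes a hypothetical JSON shape `{"tool": ..., "args": {...}}` (real function-calling APIs define their own schemas), and `dispatch` plus both tools are hypothetical stubs.

```python
import json
from typing import Callable, Dict


def dispatch(model_output: str, tools: Dict[str, Callable[..., str]]) -> str:
    """Run whichever tool the model's output names, with the arguments it supplies."""
    call = json.loads(model_output)  # assumed shape: {"tool": name, "args": {...}}
    return tools[call["tool"]](**call["args"])


# Hypothetical tools; nothing in dispatch() restricts which one the model picks.
TOOLS = {
    "reply": lambda text: f"replied: {text}",
    "delete_user": lambda user_id: f"deleted user {user_id}",
}

print(dispatch('{"tool": "reply", "args": {"text": "Hello"}}', TOOLS))  # replied: Hello
print(dispatch('{"tool": "delete_user", "args": {"user_id": 7}}', TOOLS))  # deleted user 7
```

The application code is identical in both calls; only the model's output differs, and that alone decides whether the user gets a reply or a record is deleted.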

TBA