A minimal deep learning library with a limited feature set, assembled as the final project for the Artificial Intelligence course I took in my third year @FMI.

Took inspiration from the book neuralnetworksanddeeplearning, a blog series on neural networks, and my own experience with how pytorch NNs are implemented.

I called it "Fake" as a joke, knowing it can't be taken seriously when compared with libraries used as "industry standards" (like pytorch, which I'm going to reference here).

- Linear layer

- activation functions

  - Sigmoid

  - ReLU (at least the math says so)

  - LeakyReLU

  - Tanh

- Loss functions

  - MSE

- optimizer

  - SGD

- saving / loading models

- MNIST dataloader

- cross entropy loss & softmax (I'm not really sure the math is correct)

- dropout layer

- convolution layers

- pooling layers

- batch normalization layers

- Adam optimizer

- standardized dataloader (the current one most likely works only on that precise Kaggle CSV format)

- preprocessing wrappers

- multithreading

- compatibility layer for loading external models
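Since the cross entropy and softmax math is flagged above as uncertain, one way to gain confidence is a numerical gradient check. The block below is a minimal NumPy sketch, not this library's code: the names `softmax`, `cross_entropy`, and `numerical_grad` are hypothetical. It assumes one-hot targets and relies on the standard result that the gradient of mean cross entropy with respect to the logits is `(softmax(logits) - targets) / batch_size`.

```python
import numpy as np


def softmax(logits):
    # Subtract the row-wise max before exponentiating to avoid overflow.
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def cross_entropy(logits, targets):
    """Mean cross entropy over the batch and its gradient w.r.t. the logits.

    logits:  (batch, classes) raw scores
    targets: (batch, classes) one-hot labels
    """
    n = logits.shape[0]
    shifted = logits - logits.max(axis=1, keepdims=True)
    # log-softmax computed directly, so log(0) never appears
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    loss = -np.sum(targets * log_probs) / n
    grad = (softmax(logits) - targets) / n
    return loss, grad


def numerical_grad(logits, targets, eps=1e-6):
    # Central differences, one logit at a time (slow, only for checking).
    grad = np.zeros_like(logits)
    for idx in np.ndindex(*logits.shape):
        plus, minus = logits.copy(), logits.copy()
        plus[idx] += eps
        minus[idx] -= eps
        grad[idx] = (cross_entropy(plus, targets)[0]
                     - cross_entropy(minus, targets)[0]) / (2 * eps)
    return grad


rng = np.random.default_rng(0)
logits = rng.normal(size=(4, 10))
targets = np.eye(10)[rng.integers(0, 10, size=4)]  # random one-hot labels

loss, grad = cross_entropy(logits, targets)
max_err = np.abs(grad - numerical_grad(logits, targets)).max()
print(f"loss: {loss:.4f}, max gradient error: {max_err:.2e}")
```

If the analytic and numerical gradients disagree by more than a tiny amount, the derivation (or the code) has a mistake somewhere.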

It would be an understatement to say that I underestimated the amount of work needed not only to write, but also to understand what I was writing. In the end, I stuck with what I managed to understand and pushed to deliver a complete package that can be used for a proper demo.

- Understanding backpropagation.

- Getting backpropagation to work. There were a lot of issues with the matrix multiplications not aligning properly.

- Figuring out that I was getting bad results because I wasn't following standard practices (normalizing input data, normalizing initial weights and biases)

- Small issues, which are hard to debug due to the complex nature of such a system

- ReLU doesn't seem to perform too well (I hoped it would 💔)
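The shape issues and the normalization practices from the list above can be illustrated with a small NumPy sketch. This is not the library's actual code: the `Linear` class, the `standardize` helper, and the `1 / sqrt(n_in)` weight scale are assumptions chosen for illustration. Weights are stored as `(n_in, n_out)`, matching the `(784 x 100)` notation used in this README.

```python
import numpy as np


class Linear:
    """Fully connected layer y = x @ W + b, with x of shape (batch, n_in)."""

    def __init__(self, n_in, n_out, rng):
        # Assumed init: zero-mean weights scaled by 1/sqrt(n_in), zero biases.
        self.W = rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_in, n_out))
        self.b = np.zeros(n_out)

    def forward(self, x):
        assert x.shape[1] == self.W.shape[0], "input width must equal n_in"
        self.x = x  # cached for the backward pass
        return x @ self.W + self.b

    def backward(self, grad_out):
        # grad_out has the same shape as the forward output: (batch, n_out)
        assert grad_out.shape == (self.x.shape[0], self.W.shape[1])
        self.dW = self.x.T @ grad_out   # (n_in, batch) @ (batch, n_out)
        self.db = grad_out.sum(axis=0)  # (n_out,)
        return grad_out @ self.W.T      # (batch, n_out) @ (n_out, n_in)


def standardize(x):
    # Shift to zero mean and scale to unit variance over the whole array.
    return (x - x.mean()) / (x.std() + 1e-8)


rng = np.random.default_rng(0)
pixels = rng.integers(0, 256, size=(32, 784)).astype(np.float64)  # fake images
x = standardize(pixels)

layer = Linear(784, 100, rng)
out = layer.forward(x)
grad_in = layer.backward(np.ones_like(out))  # dummy upstream gradient

print(out.shape, layer.dW.shape, layer.db.shape, grad_in.shape)
```

The useful invariant is that every gradient has the same shape as the thing it is a gradient of: `dW` matches `W`, `db` matches `b`, and the returned gradient matches the layer input.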

Comparing with similar implementations in pytorch, I noticed minimal computational overhead and negligible performance differences.

For a model with:

- layers:

  - (784 x 100) @ Sigmoid

  - (100 x 10)

- MSE loss

- 50 epochs training

- SGD optimizer with 0.1 learning rate

| The Fake One | The real deal |
|---|---|
| Time: 6m40s | Time: 5m41s |
| Acc: 93.63% | Acc: 97.36% |

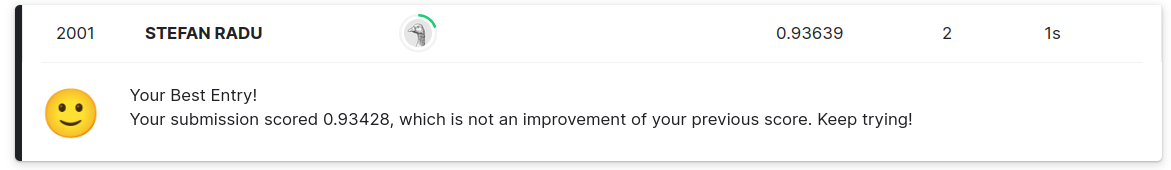

- With a kaggle submission for this model I landed on the exact position of my birth year (which is totally intended).

From my understanding, a similar network using the ReLU activation should perform better, yet in my case it performed really poorly and caused me all sorts of issues (overflows, NaN values, etc.) ⚙️

| The Fake One | The real deal |
|---|---|
| Time: 29s | Time: 20s |
| Acc: 84.21% | Acc: 96.59% |
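One common source of overflows with ReLU is the scale of the initial weights, because ReLU (unlike Sigmoid or Tanh) does not bound its output. Whether that is what happened here is only a guess. The sketch below is a hypothetical NumPy experiment, not code from this library: it pushes random inputs through a stack of ReLU layers (the layer sizes are arbitrary) and compares weights drawn with standard deviation 1 against He initialization, which uses `sqrt(2 / n_in)`.

```python
import numpy as np


def relu(z):
    return np.maximum(0.0, z)


def activation_scale(sizes, std_for, x, rng):
    """Mean absolute activation after each ReLU layer for a given init rule.

    std_for maps a layer's input width to the std used to draw its weights.
    """
    scales = []
    a = x
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        W = rng.normal(0.0, std_for(n_in), size=(n_in, n_out))
        a = relu(a @ W)
        scales.append(float(np.abs(a).mean()))
    return scales


rng = np.random.default_rng(0)
x = rng.random((64, 784))      # stand-in for pixels scaled to [0, 1)
sizes = [784, 100, 100, 100]   # illustrative stack, not the model above

naive = activation_scale(sizes, lambda n_in: 1.0, x, rng)
he = activation_scale(sizes, lambda n_in: np.sqrt(2.0 / n_in), x, rng)

print("std = 1 init:", [round(s, 2) for s in naive])
print("He init:     ", [round(s, 2) for s in he])
```

With standard deviation 1 the activations grow by roughly an order of magnitude per layer, while the He-scaled ones stay at about the same size. Large activations feed large gradients into SGD, which is one way to end up with overflows and NaN values.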

I ran the following in order to assess how much time it would take for similar networks to achieve similar performance. The results speak for themselves.

We can observe a minimal computational overhead and a negligible performance difference between my Fake ML Library and pytorch.

For a model with:

- layers:

  - (784 x 100) @ Tanh

  - (100 x 100) @ Tanh

  - (100 x 100) @ Tanh

  - (100 x 10) @ Tanh

- SGD optimizer

| The Fake One | The real deal |
|---|---|
| MSE loss | Cross Entropy Loss |
| 0.001 learning rate | 0.1 learning rate |
| Time: 9m40s | Time: 30s |
| Acc: 94.07% | Acc: 95.21% |
| 50 epochs | 5 epochs |

Epoch 055 -> loss: 0.1524; acc: 0.9371 | val_loss: 0.1528; val_acc: 0.9407 | elasped time: 9m40s

vs

Epoch [5/5], Step [921/938], Loss: 0.8425 | Accuracy on the 10000 test images: 95.21 %
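To put the timings from the three comparisons on a common footing, the durations can be converted to seconds and divided. The snippet below is a small stdlib-only sketch; the helper name `to_seconds` is made up for this example, and the numbers are copied from the tables. For the first two comparisons it assumes both sides trained for the same number of epochs, so total times are compared directly. In the last comparison the epoch counts differ (50 vs 5), so time per epoch is compared instead.

```python
import re


def to_seconds(duration):
    """Convert strings such as '6m40s' or '29s' to a number of seconds."""
    match = re.fullmatch(r"(?:(\d+)m)?(\d+)s", duration)
    if match is None:
        raise ValueError(f"unrecognized duration: {duration!r}")
    minutes, seconds = match.groups()
    return int(minutes or 0) * 60 + int(seconds)


# Same settings on both sides: compare total training time.
sigmoid_ratio = to_seconds("6m40s") / to_seconds("5m41s")
relu_ratio = to_seconds("29s") / to_seconds("20s")

# Different epoch counts (50 vs 5): compare seconds per epoch instead.
fake_per_epoch = to_seconds("9m40s") / 50
torch_per_epoch = to_seconds("30s") / 5
tanh_ratio = fake_per_epoch / torch_per_epoch

print(f"Sigmoid model: {sigmoid_ratio:.2f}x")          # 1.17x
print(f"ReLU model:    {relu_ratio:.2f}x")             # 1.45x
print(f"Tanh model:    {tanh_ratio:.2f}x per epoch")   # 1.93x per epoch
```

The per-epoch figure for the Tanh model is only a rough indicator, since the two runs also differ in loss function and learning rate.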

ashwins blog

convnetjs

3b1b nn playlist

nnadl

understanding back propagation

cross entropy & softmax

pytorch code for comparison

There might have been other resources I've missed. 🥲

Although the performance of the ReLU activation function in my tests was as bad as it gets, the real Relu compensated for it and helped me push through with this project.

thanks Relu. i am forever grateful