An example of how to train a control policy using Ray RLlib and the EnergyPlus Python API.

Requires Python 3.8+, EnergyPlus 9.3+
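The requirements above can be partly checked from Python before going further. The following is a minimal sketch, not part of the project: `check_environment` is a hypothetical helper name, and it assumes the EnergyPlus install folder is already on `PYTHONPATH` so that the `pyenergyplus` package can be located. It only checks the Python version and whether the package is findable; it does not verify the EnergyPlus version itself.

```python
import importlib.util
import sys

MIN_PYTHON = (3, 8)  # minimum Python version stated in the requirements


def check_environment(module_name="pyenergyplus"):
    """Hypothetical helper: report whether the basic requirements look satisfied.

    Checks the interpreter version and whether `module_name` can be located
    on the current import path (nothing is actually imported).
    """
    spec = importlib.util.find_spec(module_name)
    return {
        "python_ok": sys.version_info >= MIN_PYTHON,
        "energyplus_api_found": spec is not None,
    }


if __name__ == "__main__":
    print(check_environment())
```

If `energyplus_api_found` is `False`, the most likely cause is that the EnergyPlus folder has not been added to `PYTHONPATH`.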

Look for a pre-built Docker image in packages and follow the instructions to pull it.

Alternatively, build the Docker image in the docker/ folder:

docker build -t rllib-energyplus .

Run the container:

docker run --rm --name rllib-energyplus -it rllib-energyplus

Inside the container, run the experiment:

cd /root/rllib-energyplus

python3 rllibenergyplus/run.py --idf model.idf --epw LUX_LU_Luxembourg.AP.065900_TMYx.2004-2018.epw --framework torch

Edit requirements.txt and add the deep learning framework of your choice (TensorFlow or PyTorch):

python3 -m venv env

source env/bin/activate

pip install -r requirements.txt

Add the EnergyPlus folder to the PYTHONPATH environment variable:

export PYTHONPATH="/usr/local/EnergyPlus-23-1-0/:$PYTHONPATH"

Make sure you can import the EnergyPlus API by printing its version number:

$ python3 -c 'from pyenergyplus.api import EnergyPlusAPI; print(EnergyPlusAPI.api_version())'

0.2python3 rllibenergyplus/run.py \

--idf /path/to/model.idf \

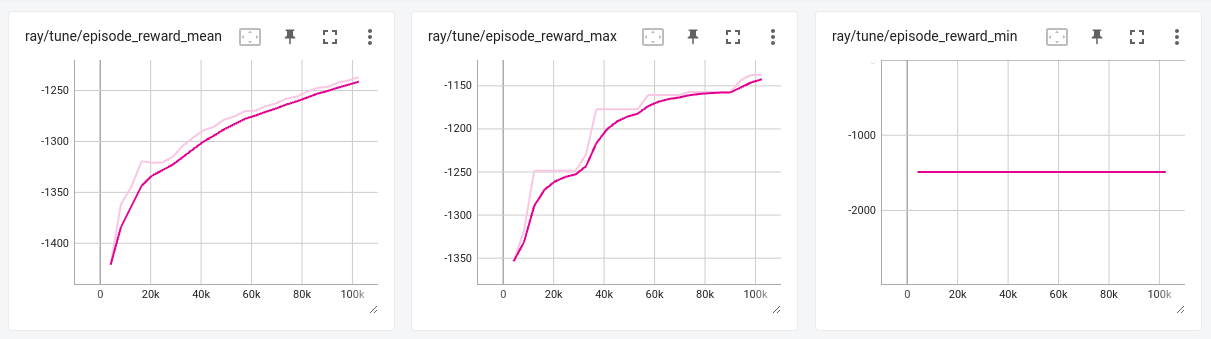

--epw /path/to/LUX_LU_Luxembourg.AP.065900_TMYx.2004-2018.epwExample of episode reward stats obtained training with PPO, 1e5 timesteps, 2 workers, with default parameters + LSTM, short E+ run period (2 first weeks of January). Experiment took ~20min.

TensorBoard is installed with the requirements.

To track an experiment running in a Docker container, the container must be started with the --network host parameter.

Start tensorboard with:

tensorboard --logdir ~/ray_results --bind_all
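If TensorBoard starts but shows no data, it can help to confirm that the log directory actually contains event files. The sketch below is an illustration only: `find_event_dirs` is a hypothetical helper, and it relies on TensorBoard event files being named `events.out.tfevents.*`. It lists every directory under the log directory that holds at least one such file.

```python
from pathlib import Path


def find_event_dirs(logdir):
    """Hypothetical helper: return sorted directories under `logdir`
    that contain at least one TensorBoard event file."""
    root = Path(logdir).expanduser()
    if not root.is_dir():
        # Nothing has been logged yet, or the path is wrong.
        return []
    # A set removes duplicates when one directory holds several event files.
    return sorted({p.parent for p in root.rglob("events.out.tfevents.*")})


if __name__ == "__main__":
    # Same log directory as in the tensorboard command above.
    for run_dir in find_event_dirs("~/ray_results"):
        print(run_dir)
```

An empty result suggests the experiment has not written any results yet, or that it is logging to a different directory than the one passed to `--logdir`.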