This repository implements a contextual topic identification model. The model combines LDA probabilistic topic assignments with pre-trained sentence embeddings from BERT/RoBERTa. The analysis is conducted on a dataset of game reviews from the Steam platform.

Product reviews strongly influence people's choices, especially in online shopping, and popular products accumulate huge numbers of them. However, many platforms lack a satisfactory system for categorizing reviews by what the reviewers are actually talking about. Steam, for example, has a carefully designed review system with many features, but it still offers no way to categorize reviews by their semantic meaning.

Therefore, we provide a topic identification procedure that combines both bag-of-words and contextual information to explore potential semantically meaningful categories in the ocean of Steam reviews.

Clone the repo

git clone https://github.com/Stveshawn/contextual_topic_identification.git

cd contextual_topic_identification

and make sure you have the dataset in the data folder (you can specify the path in the bash script later).

To run the model and get the trained model objects and visualizations, run the bash script in your terminal

sudo bash docker_build_run.sh

The results will be saved in the docs folder under the corresponding model id (Method_Year_Month_Day_Hour_Minute_Second).
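As an illustration of the model id pattern, here is a minimal sketch of how such an id could be formed. The helper name `make_model_id` is hypothetical, and the zero-padded date fields are an assumption; the repository's own code may format the id differently.

```python
from datetime import datetime


def make_model_id(method, when=None):
    """Build an id shaped like Method_Year_Month_Day_Hour_Minute_Second.

    Hypothetical helper: zero-padded fields are an assumption, not
    something the README specifies.
    """
    when = when or datetime.now()
    return f"{method}_{when:%Y_%m_%d_%H_%M_%S}"


# Example with a fixed timestamp so the result is reproducible.
example_id = make_model_id("LDA_BERT", datetime(2020, 1, 2, 3, 4, 5))
print(example_id)
```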

Four parameters can be specified in the bash script

- samp_size: number of reviews used in the model
- method={"TFIDF", "LDA", "BERT", "LDA_BERT"}: method for the topic model
- ntopic: number of topics
- fpath=/contextual_topic_identification/data/steam_reviews.csv: file path to the csv data

To run a test case on the sampled test data, do

sudo bash test.sh

The dataset (Steam review dataset) is published on Kaggle and covers ~480K reviews for 46 best-selling video games on Steam.

To run the model successfully, download this dataset (Kaggle authentication required) and place it in the data folder (or specify your own file path in the bash script).

To identify the potential topics of the target documents, traditional approaches are

- Latent Dirichlet Allocation
- Embedding + Clustering

Although LDA generally works well for topic modeling tasks, it struggles with short documents, which contain little text to model, and with documents that do not discuss topics coherently. Relying only on bag-of-words information also limits it.

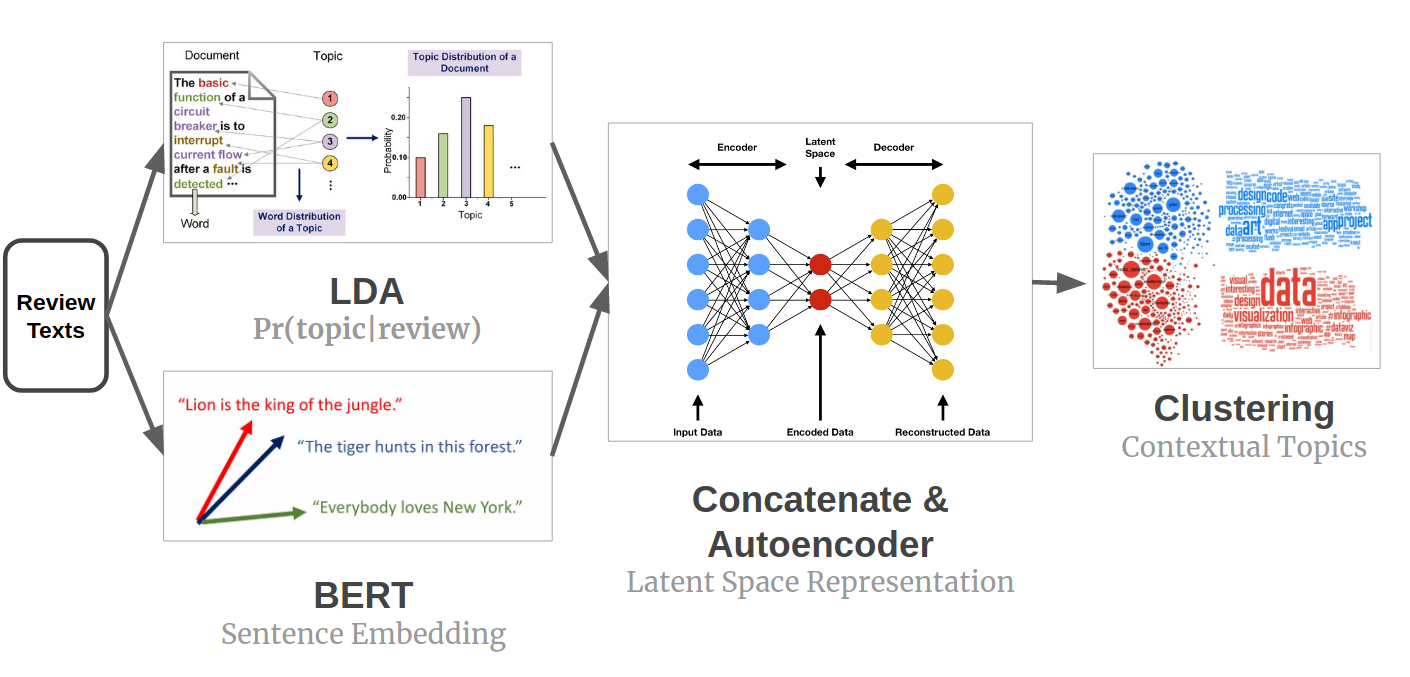

The contextual topic identification model leverages both bag-of-words and contextual information by including both the LDA topic probabilities and the sentence embeddings. The model is as follows

where we

- take the information from LDA probabilistic topic assignment ($v_1$) and sentence embeddings ($v_2$)
- concatenate $\lambda * v_1$ and $v_2$ to get $v_{full}$
- learn the latent space representation $v_{latent}$ of $v_{full}$ by autoencoder
- implement clustering on the latent space representations.
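The concatenation step can be sketched in a few lines. This is a minimal illustration, not the repository's code: the function name `build_full_vector`, the toy vectors, and the value of `lam` are all assumptions chosen for the example. The autoencoder and clustering steps are omitted.

```python
def build_full_vector(v1, v2, lam):
    """Return v_full: lam * v1 concatenated with v2.

    v1  -- LDA topic probabilities for one document
    v2  -- sentence embedding for the same document
    lam -- weight that scales the LDA part relative to the embedding
    """
    return [lam * p for p in v1] + list(v2)


# Toy inputs (hypothetical values, far smaller than real vectors).
v1 = [0.7, 0.2, 0.1]           # 3 topics
v2 = [0.5, -1.0, 0.25, 2.0]    # 4-dimensional "embedding"
lam = 10.0                     # hypothetical weight

v_full = build_full_vector(v1, v2, lam)
print(len(v_full))  # dimension = number of topics + embedding size
```

Because topic probabilities lie in [0, 1] while embedding components are unbounded, the weight $\lambda$ controls how much the LDA part contributes to distances in the combined space.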

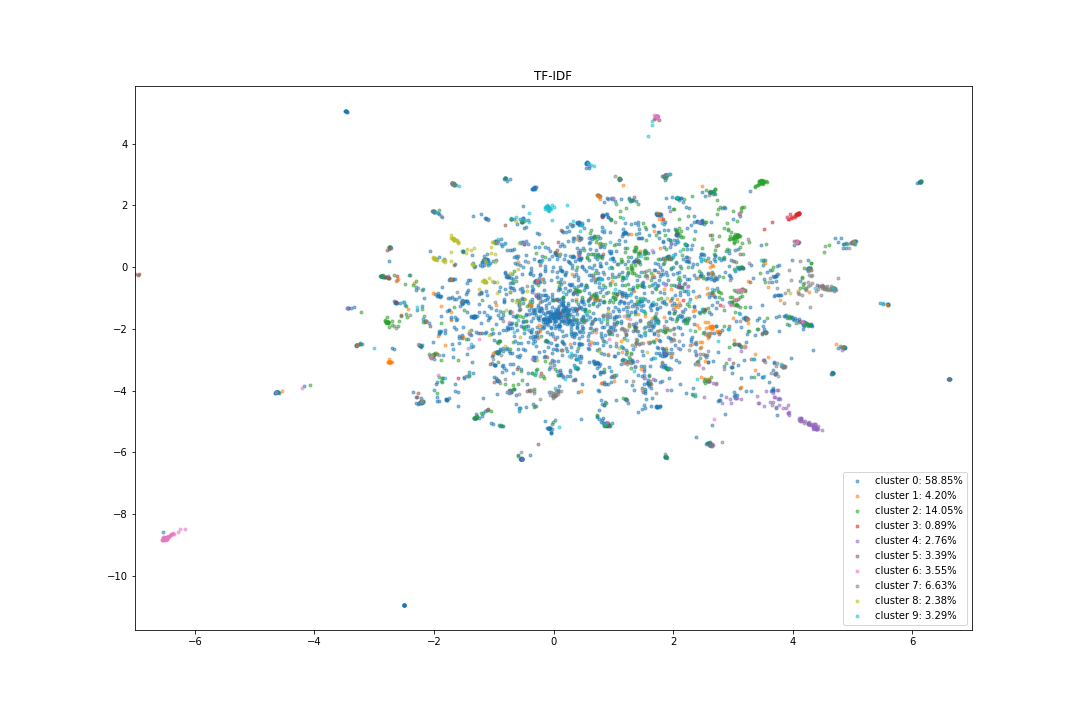

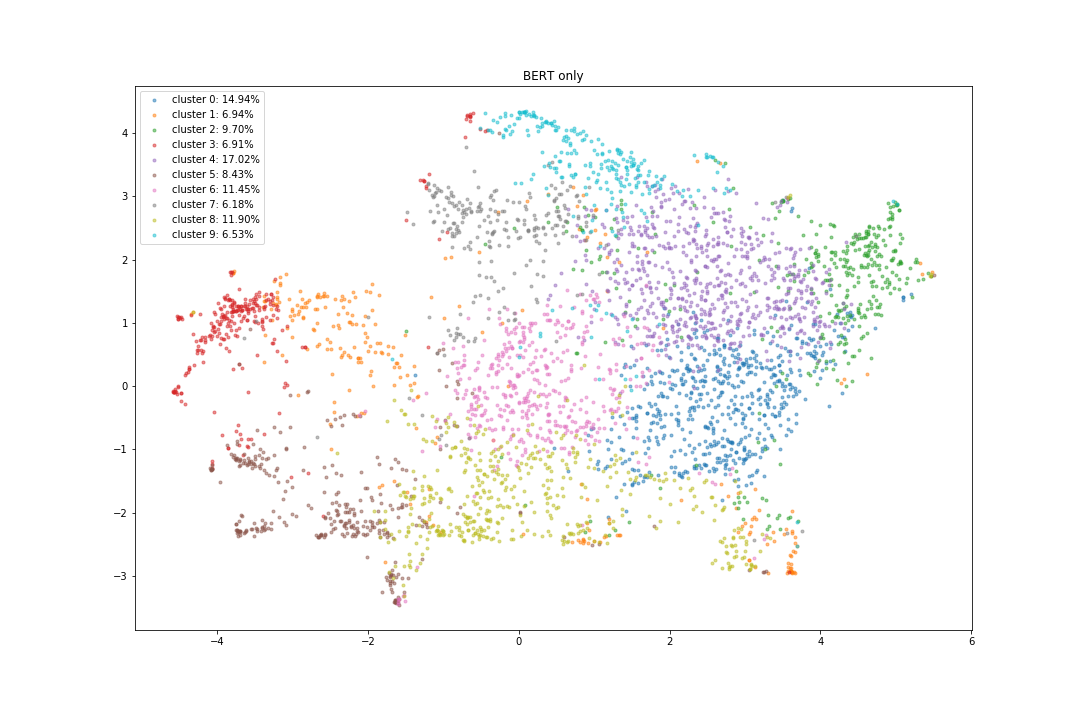

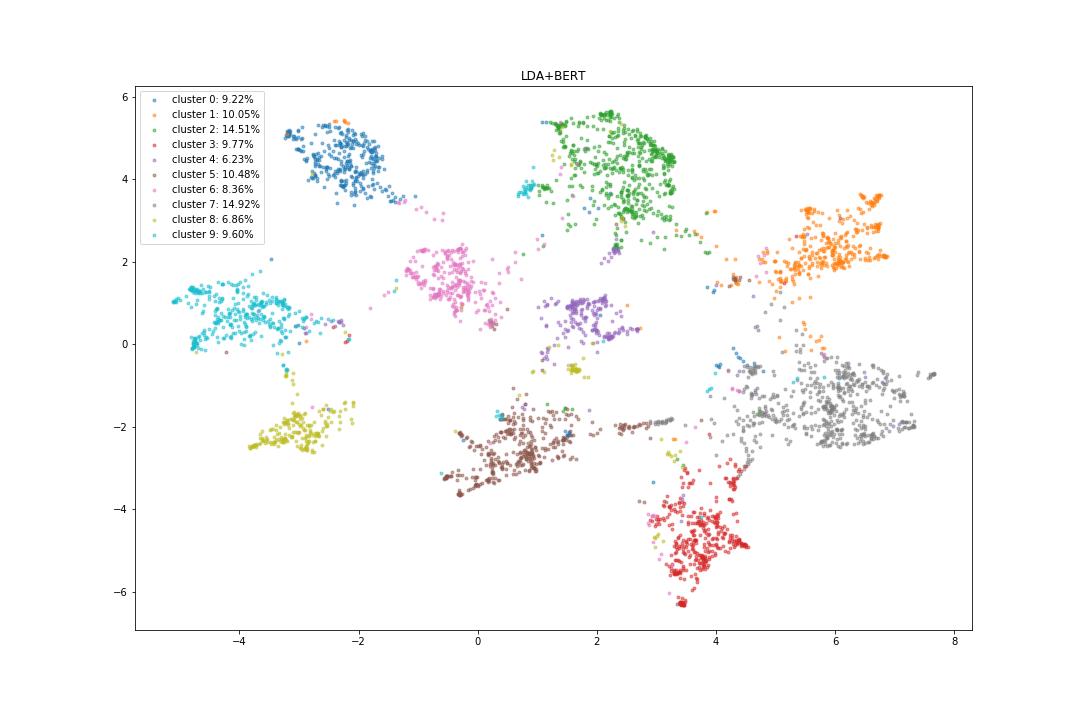

Visualizations (2D UMAP) of clustering results with different vectorization methods (TF-IDF, BERT, LDA_BERT) with n_topic=10

Evaluation of different topic identification models with n_topic=10

| Metric\Method | TF-IDF + Clustering | LDA | BERT + Clustering | LDA_BERT + Clustering |
|---|---|---|---|---|
| C_Umass | -2.161 | -5.233 | -4.368 | -3.394 |
| CV | 0.538 | 0.482 | 0.547 | 0.551 |
| Silhouette score | 0.025 | / | 0.063 | 0.234 |
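For readers unfamiliar with the silhouette score reported above, the following is a minimal sketch of its standard definition: for each point, `a` is the mean distance to the other points in its own cluster, `b` is the smallest mean distance to any other cluster, and the point's coefficient is `(b - a) / max(a, b)`. Euclidean distance and the toy data are assumptions for illustration; in practice a library routine such as scikit-learn's `silhouette_score` would normally be used, and this sketch is not the repository's evaluation code.

```python
import math


def silhouette_score(points, labels):
    """Mean silhouette coefficient over all points (Euclidean distance)."""
    clusters = {}
    for idx, lab in enumerate(labels):
        clusters.setdefault(lab, []).append(idx)
    if len(clusters) < 2:
        raise ValueError("silhouette needs at least two clusters")

    total = 0.0
    for i, lab in enumerate(labels):
        own = clusters[lab]
        if len(own) == 1:
            continue  # singleton cluster contributes 0 by convention
        # a: mean distance to the other members of the same cluster
        a = sum(math.dist(points[i], points[j]) for j in own if j != i) / (len(own) - 1)
        # b: smallest mean distance to the members of any other cluster
        b = min(
            sum(math.dist(points[i], points[j]) for j in members) / len(members)
            for other, members in clusters.items()
            if other != lab
        )
        denom = max(a, b)
        if denom > 0:
            total += (b - a) / denom
    return total / len(points)


# Two well-separated pairs of points (toy data).
pts = [(0.0, 0.0), (0.0, 1.0), (10.0, 0.0), (10.0, 1.0)]
good = silhouette_score(pts, [0, 0, 1, 1])  # clusters match the geometry
bad = silhouette_score(pts, [0, 1, 0, 1])   # clusters cut across it
print(good > bad)
```

Values near 1 indicate compact, well-separated clusters, values near 0 indicate overlapping clusters, and negative values indicate points that sit closer to another cluster than to their own.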

- Sentence Transformers: Sentence Embeddings using BERT / RoBERTa / DistilBERT / ALBERT / XLNet with PyTorch
- SymSpell: 1 million times faster through Symmetric Delete spelling correction algorithm
- Gensim: Topic Modelling in Python