PyTorch implementation of the paper "MVHuman: Tailoring 2D Diffusion with Multi-view Sampling For Realistic 3D Human Generation", arXiv 2024.

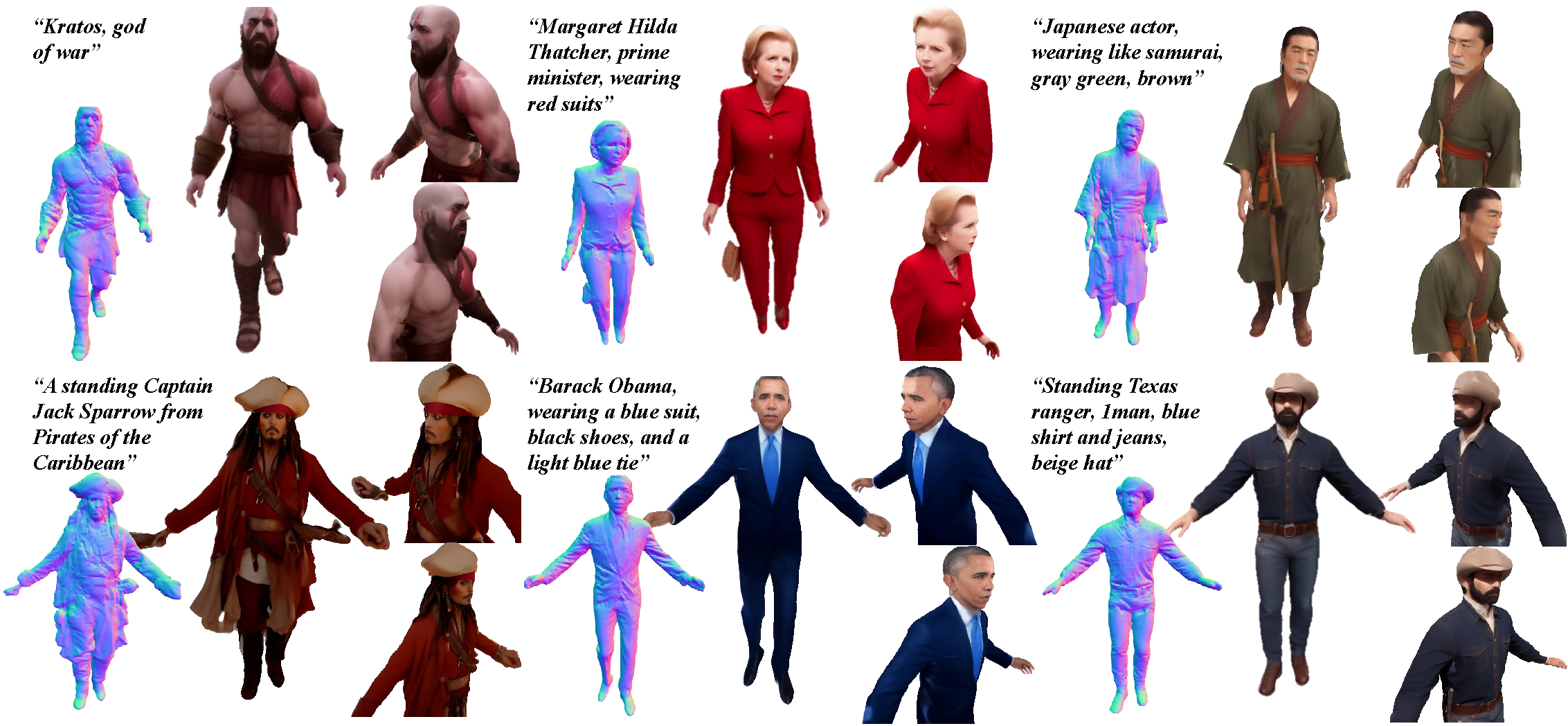

MVHuman: Tailoring 2D Diffusion with Multi-view Sampling For Realistic 3D Human Generation

Suyi Jiang, Haimin Luo, Haoran Jiang, Ziyu Wang, Jingyi Yu, Lan Xu

git clone git@github.com:SuezJiang/MVHuman.git

cd MVHuman

conda create -n mvhuman python==3.8.16

conda activate mvhuman

conda install pytorch==1.13.1 torchvision==0.14.1 torchaudio==0.13.1 pytorch-cuda=11.7 -c pytorch -c nvidia

pip install -r requirements.txt

Tested on Ubuntu with RTX 3090.
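To confirm that the installed packages match the versions pinned in the conda command above, a quick check along these lines may help. This is a minimal sketch: `EXPECTED` simply mirrors the pinned versions, and `check_versions` is a hypothetical helper that is not part of this repository. It assumes the packages expose standard Python package metadata under the names `torch`, `torchvision`, and `torchaudio`.

```python
from importlib import metadata

# Versions copied from the conda install command above.
EXPECTED = {
    "torch": "1.13.1",
    "torchvision": "0.14.1",
    "torchaudio": "0.13.1",
}


def check_versions(expected):
    """Return {package: (installed_version_or_None, matches_expected)}."""
    report = {}
    for name, wanted in expected.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        # Some builds carry a local suffix such as "+cu117"; strip it before comparing.
        ok = installed is not None and installed.split("+")[0] == wanted
        report[name] = (installed, ok)
    return report


if __name__ == "__main__":
    for name, (installed, ok) in check_versions(EXPECTED).items():
        print(f"{name}: installed={installed} expected={EXPECTED[name]} ok={ok}")
```

A package reported as `installed=None` was not found in the active environment, which usually means `conda activate mvhuman` was skipped.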

The data directory contains two example scenes: data/4_views is a simplified scene with 4 full-body views, and data/16_views is a complete scene with 8 full-body and 8 upper-body views.

You can paste your Hugging Face token in TOKEN.

You can set the diffuser cache directory in config/config.py (change the cache_dir).

For the 16_views case,

$ python pipeline_multiview.py config=./config/deg_45_16view.yaml

For the 4_views case,

$ python pipeline_multiview.py config=./config/deg_90s_4view.yaml

Results will be written to {case_dir}/results.
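To run either example from a script rather than typing the commands by hand, a small wrapper like the one below could be used. It is only a sketch: `CONFIGS`, `build_command`, and `run_case` are hypothetical names introduced here, the config paths are copied from the commands above, and the script is assumed to be launched from the repository root.

```python
import subprocess
import sys

# Config paths copied from the commands above.
CONFIGS = {
    "16_views": "./config/deg_45_16view.yaml",
    "4_views": "./config/deg_90s_4view.yaml",
}


def build_command(case):
    """Build the argument list that launches the pipeline for one example case."""
    return [sys.executable, "pipeline_multiview.py", f"config={CONFIGS[case]}"]


def run_case(case):
    """Run the pipeline for one case; raises CalledProcessError if it fails."""
    subprocess.run(build_command(case), check=True)


# Example (run from the repository root):
# run_case("4_views")
```

Using `sys.executable` keeps the child process in the same Python environment as the caller, so the pipeline runs inside the activated `mvhuman` environment.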

The data layout follows a structure similar to nerfstudio's.
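For orientation, the nerfstudio convention stores camera poses in a JSON file with a top-level `frames` list, where each entry has a `file_path` and a 4x4 `transform_matrix`. The sketch below reads such a file. The file name `transforms.json` and the exact keys are assumptions based on nerfstudio's format, and `load_frames` is a hypothetical helper; the files shipped in data may differ in detail.

```python
import json
from pathlib import Path


def load_frames(case_dir, filename="transforms.json"):
    """Return a list of (image_path, 4x4 camera-to-world matrix) pairs.

    Assumes the nerfstudio convention: a top-level "frames" list whose entries
    carry "file_path" and "transform_matrix". The file name and keys used by
    this repository may differ.
    """
    case_dir = Path(case_dir)
    with open(case_dir / filename) as f:
        meta = json.load(f)
    frames = []
    for frame in meta["frames"]:
        matrix = frame["transform_matrix"]
        # Reject anything that is not a 4x4 nested list.
        if len(matrix) != 4 or any(len(row) != 4 for row in matrix):
            raise ValueError(f"expected a 4x4 matrix for {frame['file_path']}")
        frames.append((case_dir / frame["file_path"], matrix))
    return frames
```

Calling `load_frames("data/4_views")` would then give one entry per view, provided the scene follows the assumed layout.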

@article{jiang2024mvhuman,

title={MVHuman: Tailoring 2D Diffusion with Multi-view Sampling For Realistic 3D Human Generation},

author={Jiang, Suyi and Luo, Haimin and Jiang, Haoran and Wang, Ziyu and Yu, Jingyi and Xu, Lan},

journal={arXiv preprint},

year={2024}

}