ETDS: Equivalent Transformation and Dual Stream Network Construction for Mobile Image Super-Resolution (CVPR 2023)

By Jiahao Chao, Zhou Zhou, Hongfan Gao, Jiali Gong, Zhengfeng Yang*, Zhenbing Zeng, Lydia Dehbi (*Corresponding author).

This is the official PyTorch implementation of Equivalent Transformation and Dual Stream Network Construction for Mobile Image Super-Resolution (paper / supp).

PureConvSR, the previous version of ETDS, won third place in the Mobile AI & AIM: Real-Time Image Super-Resolution Challenge (website / report).

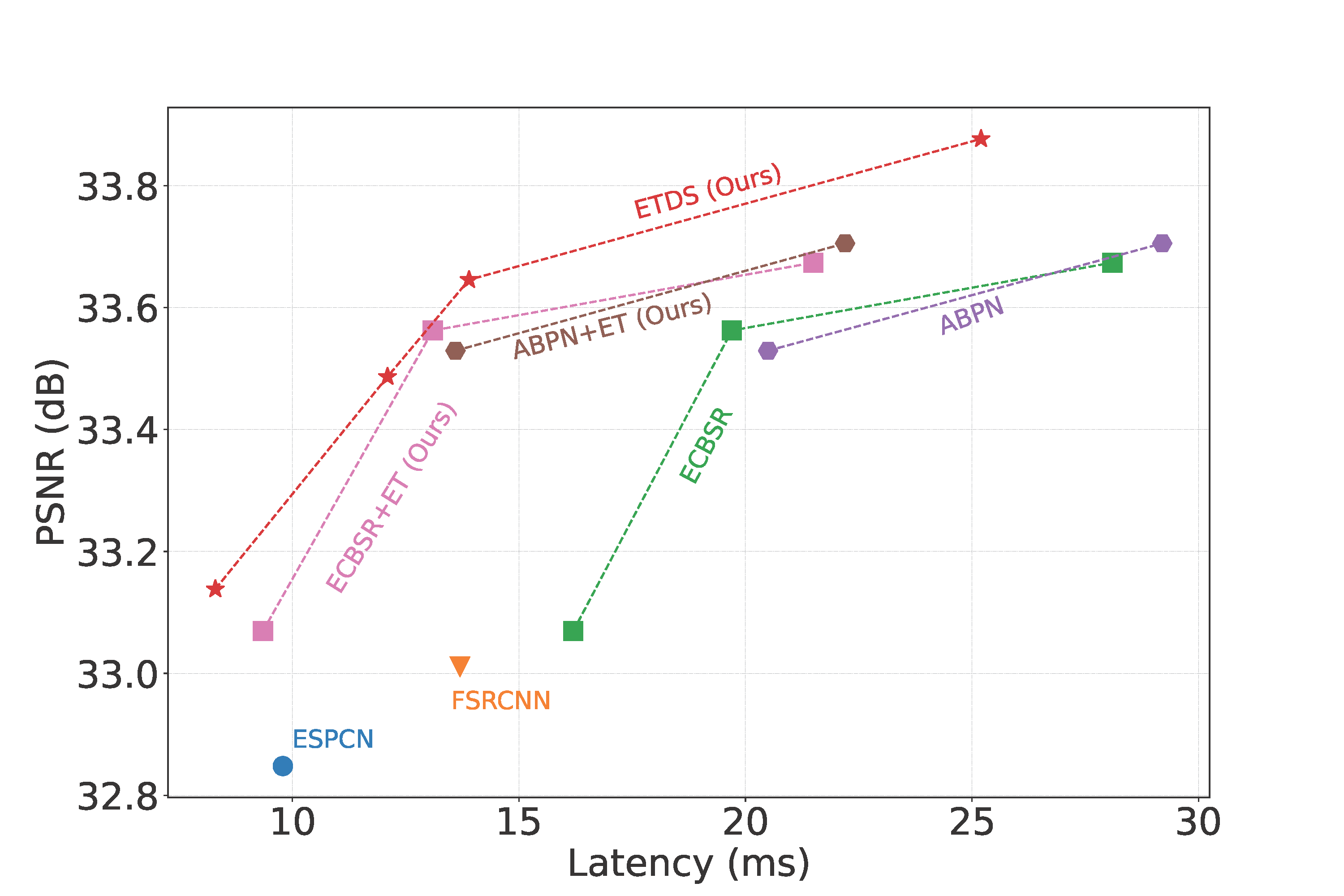

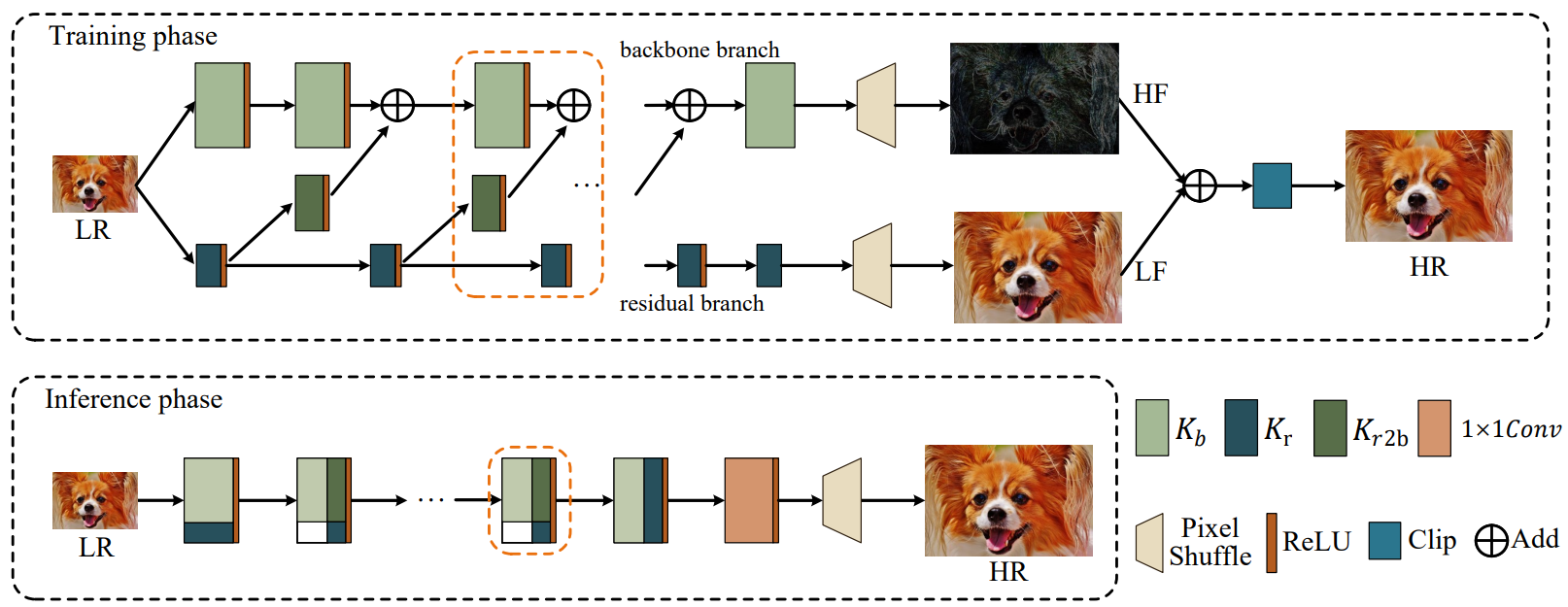

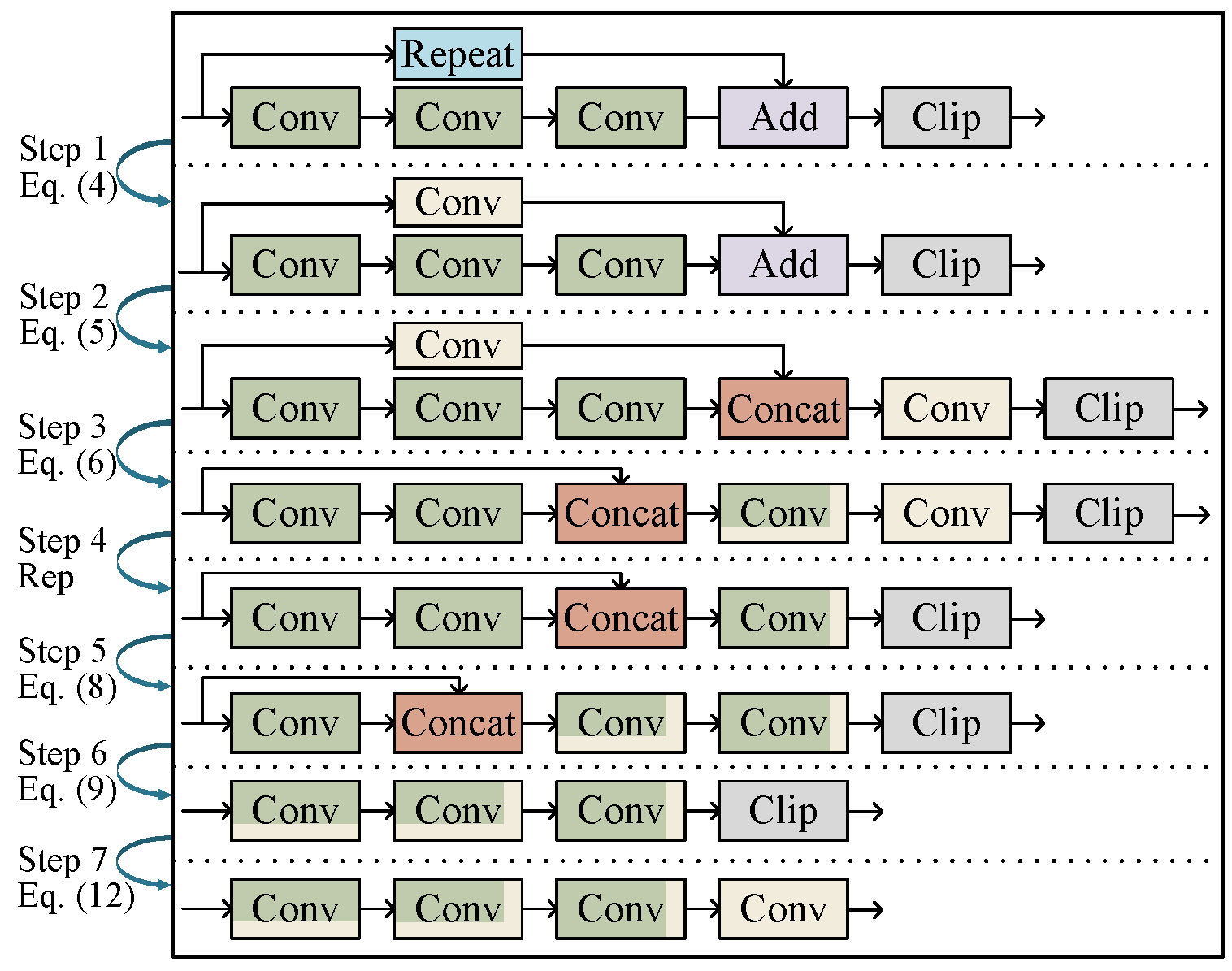

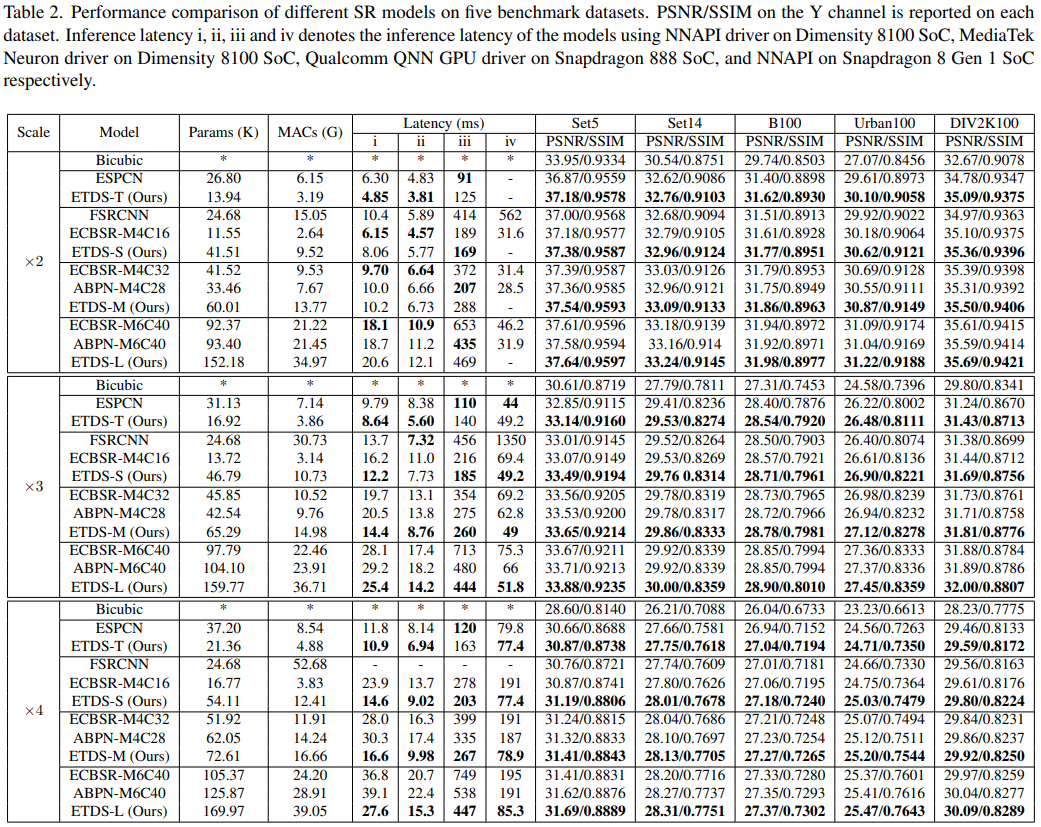

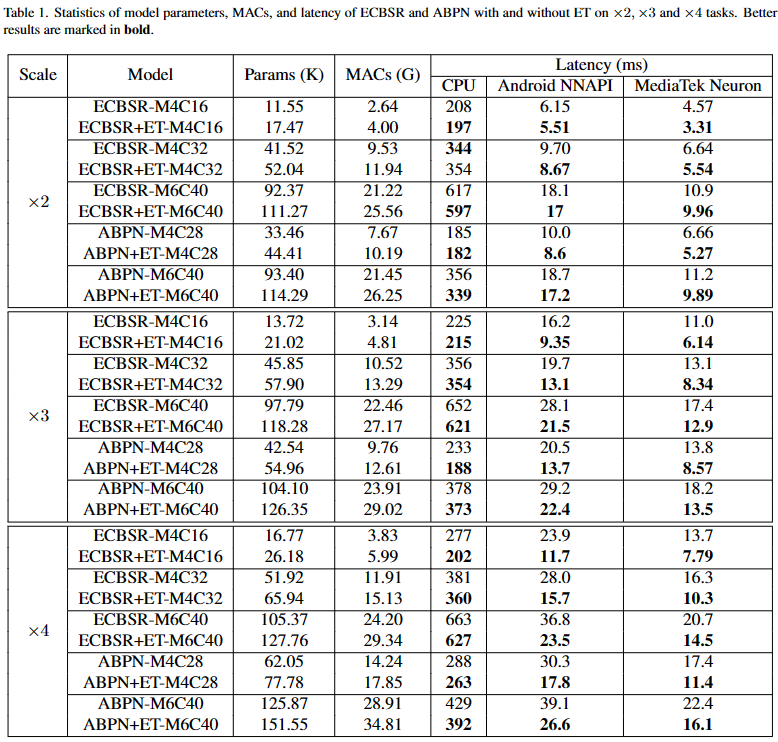

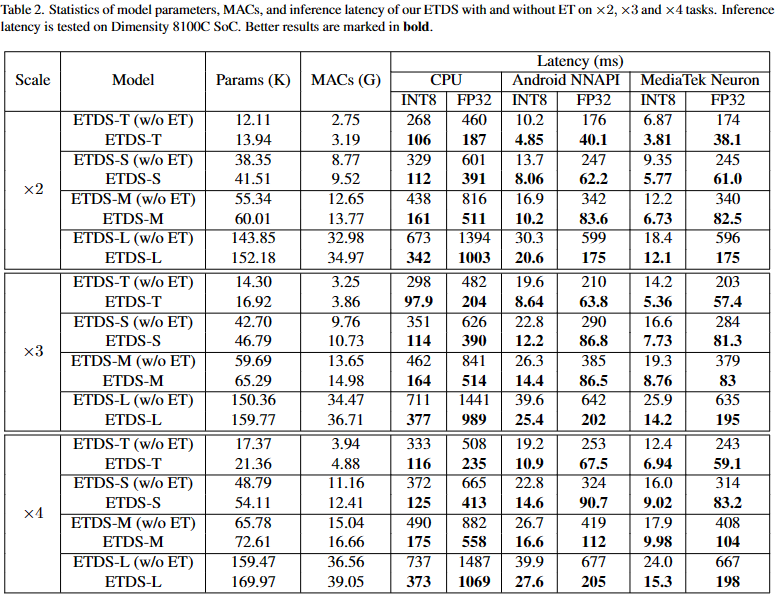

In recent years, there has been an increasing demand for real-time super-resolution networks on mobile devices. To address this issue, many lightweight super-resolution models have been proposed. However, these models still contain time-consuming components that increase inference latency, which limits their real-world applications on mobile devices. In this paper, we propose a novel model based on Equivalent Transformation and Dual Stream network construction (ETDS) for single image super-resolution. The ET method transforms time-consuming operators into time-friendly ones, such as convolution and ReLU, on mobile devices. Then, a dual stream network is designed to alleviate the redundant parameters yielded by the ET and to enhance the feature extraction ability. Taking full advantage of the ET and the dual stream network structure, we develop the efficient SR model ETDS for mobile devices. The experimental results demonstrate that our ETDS achieves superior inference speed and reconstruction quality compared to prior lightweight SR methods on mobile devices.

Like BasicSR, we put the training and validation data under the datasets folder. You can download the data in the way described by BasicSR. Note, however, that our data paths differ slightly from BasicSR's, so please adjust the data paths in the configuration files (all configuration files are under the configs folder).

We also provide a script for downloading the DIV2K dataset under the scripts/datasets/DIV2K folder. You can download the DIV2K dataset as follows:

    bash scripts/datasets/DIV2K/build.sh

Finally, the structure of the dataset is as follows:

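    datasets/
    ├── DIV2K
    │   ├── train
    │   │   ├── HR
    │   │   │   ├── original
    │   │   │   │   ├── 0001.png
    │   │   │   │   ├── 0002.png
    │   │   │   │   ├── ...
    │   │   │   │   ├── 0800.png
    │   │   │   ├── subs
    │   │   │   │   ├── 0001_s001.png
    │   │   │   │   ├── 0001_s002.png
    │   │   │   │   ├── ...
    │   │   │   │   ├── 0800_s040.png
    │   │   ├── LR
    │   │   │   ├── bicubic
    │   │   │   │   ├── X2
    │   │   │   │   │   ├── original
    │   │   │   │   │   │   ├── 0001x2.png
    │   │   │   │   │   │   ├── 0002x2.png
    │   │   │   │   │   │   ├── ...
    │   │   │   │   │   │   ├── 0800x2.png
    │   │   │   │   │   ├── subs
    │   │   │   │   │   │   ├── 0001_s001.png
    │   │   │   │   │   │   ├── 0001_s002.png
    │   │   │   │   │   │   ├── ...
    │   │   │   │   │   │   ├── 0800_s040.png
    │   │   │   │   ├── X3...
    │   │   │   │   ├── X4...
    │   ├── valid...
    ├── Set5
    │   ├── GTmode12
    │   │   ├── baby.png
    │   │   ├── bird.png
    │   │   ├── butterfly.png
    │   │   ├── head.png
    │   │   ├── woman.png
    │   ├── original...
    │   ├── LRbicx2...
    │   ├── LRbicx3...
    │   ├── LRbicx4...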
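Before starting a long training run, it can help to confirm that the DIV2K training folders are where the configuration files expect them. The following is a minimal sketch, not part of this repository: the helper names `expected_div2k_train_dirs` and `missing_dirs` are hypothetical, and it assumes that the elided X3 and X4 folders mirror the X2 layout shown above.

```python
from pathlib import Path


def expected_div2k_train_dirs(root, scales=(2, 3, 4)):
    """List the DIV2K training directories implied by the layout above.

    Assumption: X3 and X4 contain the same `original` and `subs`
    subfolders as X2 (the listing above elides them).
    """
    train = Path(root) / "DIV2K" / "train"
    dirs = [train / "HR" / "original", train / "HR" / "subs"]
    for scale in scales:
        lr = train / "LR" / "bicubic" / f"X{scale}"
        dirs += [lr / "original", lr / "subs"]
    return dirs


def missing_dirs(root, scales=(2, 3, 4)):
    """Return the expected directories that do not exist under `root`."""
    return [d for d in expected_div2k_train_dirs(root, scales) if not d.is_dir()]


if __name__ == "__main__":
    for path in missing_dirs("datasets"):
        print(f"missing: {path}")
```

Run from the repository root, this prints one line per missing folder and prints nothing when the layout is complete.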

train.py is the entry file for the training phase. You can find the description of train.py in the BasicSR repository. The training command is as follows:

    python train.py -opt {configs-path}.yml

where {configs-path} is the path to the configuration file. All training configuration files are under the configs/train folder. Logs, checkpoints, and other files generated during training are saved in the experiments/{name} folder, where {name} is the value of the name option in the configuration file.
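To make the relationship between a configuration file and its output folder concrete, here is a small sketch that derives the experiments/{name} path from a config. It is illustrative only: the helper names are hypothetical, it assumes the config has an unindented `name:` line (as BasicSR-style YAML files do), and it deliberately avoids a YAML dependency, so it is not a general YAML parser.

```python
from pathlib import Path


def read_experiment_name(config_text):
    """Return the value of the top-level `name:` key of a config.

    Only unindented lines are considered, so nested keys such as a
    network's own `name:` entry are ignored.
    """
    for line in config_text.splitlines():
        if line.startswith("name:"):
            value = line.split(":", 1)[1]
            # Drop a trailing comment, then surrounding spaces and quotes.
            return value.split("#", 1)[0].strip().strip("'\"")
    raise ValueError("no top-level 'name:' key found")


def experiment_dir(config_text, root="experiments"):
    """Folder where training outputs are saved, per the description above."""
    return Path(root) / read_experiment_name(config_text)


def train_command(config_path):
    """Argument list equivalent to `python train.py -opt <config>`."""
    return ["python", "train.py", "-opt", config_path]
```

The argument list returned by `train_command` can be passed to `subprocess.run` if you want to launch several training runs from a script.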

ETDS during training is a dual stream network, and it can be converted into a plain model through converter.py, as follows:

    python converter.py --input {input-model-path}.pth --output {output-model-path}.pth

where {input-model-path}.pth is the path to the pre-trained model, and {output-model-path}.pth is where the converted model will be saved.
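For readers new to the idea of rewriting a network into an equivalent plain one, the toy example below may help build intuition. It is not taken from converter.py and does not reproduce the ET described in the paper. It only shows, for a single-channel 1D convolution with zero padding, that a residual add `conv(x) + x` equals one convolution whose center weight is increased by 1. All function names are hypothetical.

```python
def conv1d_same(x, kernel):
    """Single-channel 1D convolution (cross-correlation) with zero padding.

    `kernel` must have odd length; the output has the same length as `x`.
    """
    radius = len(kernel) // 2
    out = []
    for i in range(len(x)):
        total = 0.0
        for j, weight in enumerate(kernel):
            idx = i + j - radius
            if 0 <= idx < len(x):  # positions outside the input count as zero
                total += weight * x[idx]
        out.append(total)
    return out


def fold_identity(kernel):
    """Absorb a skip connection into the kernel by adding 1 to its center."""
    folded = list(kernel)
    folded[len(kernel) // 2] += 1.0
    return folded


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0, 4.0]
    kernel = [0.5, -1.0, 0.25]
    with_residual = [c + v for c, v in zip(conv1d_same(x, kernel), x)]
    plain = conv1d_same(x, fold_identity(kernel))
    # The two outputs agree up to floating-point rounding.
    print(max(abs(a - b) for a, b in zip(with_residual, plain)))
```

The multi-channel 2D case handled by the actual converters is more involved; see the paper and converter.py for the real procedure.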

The code for converting ECBSR and ABPN to plain models is in converter_ecbsr_et.py and converter_abpn_et.py, respectively.

Our pretrained models after conversion are in the experiments/pretrained_models folder.

The validation command is as follows:

    python test.py -opt {configs-path}.yml

where {configs-path} is the path to the configuration file. All test configuration files are under the configs/test folder. The validation results are saved in the results folder.

This repository builds on BasicSR's code framework and extends it. The core files of this repository are listed below, in descending order of importance:

- core/archs/ir/ETDS/arch.py : ETDS architecture

- converter.py : Convert ETDS to plain model using the Equivalent Transformation technique.

- converter_abpn_et.py : Convert ABPN to plain model using the Equivalent Transformation technique.

- converter_ecbsr_et.py : Convert the ECBSR to a model with less latency using the Equivalent Transformation technique.

- train.py : Model training pipeline

- test.py : Model testing pipeline

- core/models/ir/etds_model.py : An implementation of the model interface for pipeline calls.

- validation/reparameterization.py : Verify the correctness of the description of reparameterization in the appendix.

This project is released under the Apache 2.0 license.

    @InProceedings{Chao_2023_CVPR,
        author    = {Chao, Jiahao and Zhou, Zhou and Gao, Hongfan and Gong, Jiali and Yang, Zhengfeng and Zeng, Zhenbing and Dehbi, Lydia},
        title     = {Equivalent Transformation and Dual Stream Network Construction for Mobile Image Super-Resolution},
        booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
        month     = {June},
        year      = {2023},
        pages     = {14102-14111}
    }