This is the PyTorch demo code for our CVPR 2019 paper:

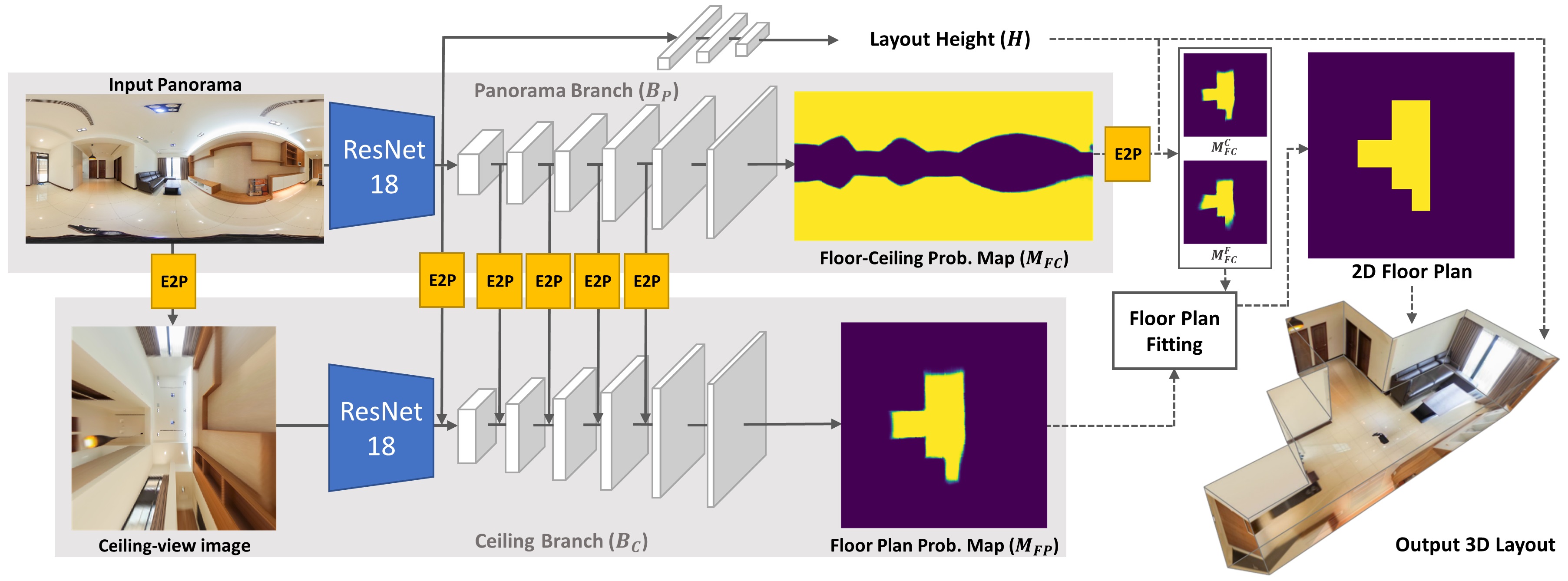

DuLa-Net: A Dual-Projection Network for Estimating Room Layouts from a Single RGB Panorama (arXiv, Project)

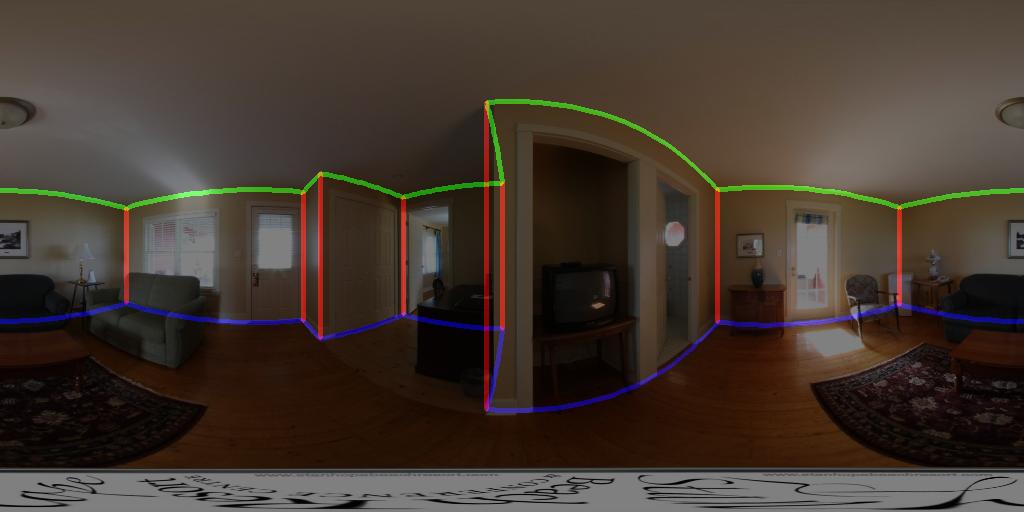

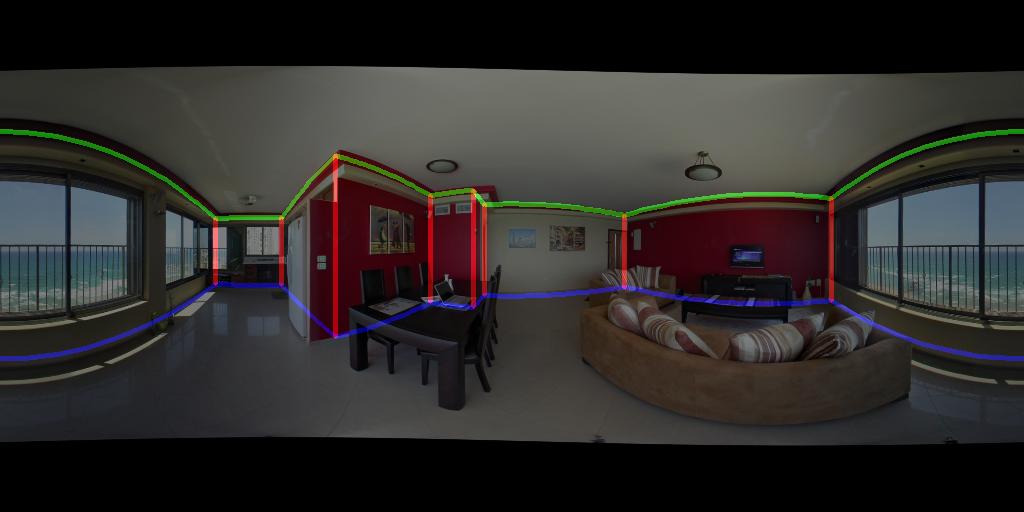

With this repo, you can estimate the 3D room layout from a single indoor RGB panorama. For more details, please refer to the paper or the project page.

- Python 3

- PyTorch (CUDA >= 8.0)

- OpenCV-Python

- Pillow / scikit-image
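Before running the demo, it can help to confirm that the packages above are importable. The snippet below is a minimal sketch, not part of this repo: the mapping from package names to import names (`torch`, `cv2`, `PIL`, `skimage`) and the helper `check_requirements` are assumptions for illustration. It only reports what is found, since the list above does not say whether Pillow and scikit-image are both required or interchangeable.

```python
import importlib.util

# Hypothetical mapping: requirement name -> top-level module it is imported as.
REQUIRED_MODULES = {
    "PyTorch": "torch",
    "OpenCV-Python": "cv2",
    "Pillow": "PIL",
    "scikit-image": "skimage",
}


def check_requirements(modules=REQUIRED_MODULES):
    """Return {requirement name: True if its module can be found, else False}.

    Uses importlib.util.find_spec, so nothing is actually imported.
    """
    return {name: importlib.util.find_spec(module) is not None
            for name, module in modules.items()}


if __name__ == "__main__":
    status = check_requirements()
    for name, found in status.items():
        print(f"{name}: {'found' if found else 'MISSING'}")
    if status["PyTorch"]:
        import torch
        # Reports whether PyTorch can see a CUDA device on this machine.
        print("CUDA available:", torch.cuda.is_available())
```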

First, please download the pretrained models and copy them to ./Model/ckpt/.
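To double-check that the checkpoints landed in the right place, a small helper like the one below can list them. This is a hypothetical sketch: it assumes the checkpoints use the `.pkl` extension, as `res50_realtor.pkl` in the backbone example does, and that the script is run from the repo root.

```python
from pathlib import Path

# Checkpoint directory relative to the repo root, as described above.
CKPT_DIR = Path("Model") / "ckpt"


def list_checkpoints(ckpt_dir=CKPT_DIR):
    """Return the sorted names of .pkl files in ckpt_dir ([] if the dir is missing)."""
    ckpt_dir = Path(ckpt_dir)
    if not ckpt_dir.is_dir():
        return []
    return sorted(p.name for p in ckpt_dir.glob("*.pkl"))


if __name__ == "__main__":
    found = list_checkpoints()
    print(found if found else f"No .pkl checkpoints found in {CKPT_DIR}")
```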

The pretrained models are trained on our Realtor360 dataset with different backbone networks.

The input panorama should already be aligned with the Manhattan World. We recommend using PanoBasic in MATLAB or the Python implementation here; these tools can pre-process the panorama to align it.

Then use the command below to load the pretrained model and predict the 3D layout:

```
python demo.py --input figs\001.jpg
```

To use a different backbone network (the default is resnet18), pass the backbone name and its checkpoint:

```
python demo.py --input figs\001.jpg --backbone resnet50 --ckpt Model\ckpt\res50_realtor.pkl
```
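If you have several aligned panoramas, you could call the demo once per image from a script. The sketch below is only an illustration: `demo_command` and `run_all` are hypothetical helpers, and it uses only the `--input`, `--backbone`, and `--ckpt` flags shown above. It assumes each `demo.py` call handles one image and that the images are `.jpg` files in `figs`. Building paths with `pathlib` avoids hard-coding the Windows-style backslashes used in the commands above.

```python
import subprocess
import sys
from pathlib import Path


def demo_command(image, backbone=None, ckpt=None):
    """Build the demo.py argument list using only the documented flags."""
    cmd = [sys.executable, "demo.py", "--input", str(image)]
    if backbone is not None:
        cmd += ["--backbone", backbone]
    if ckpt is not None:
        cmd += ["--ckpt", str(ckpt)]
    return cmd


def run_all(image_dir="figs", pattern="*.jpg", **kwargs):
    """Run demo.py once per matching image; raises on the first failing run."""
    for image in sorted(Path(image_dir).glob(pattern)):
        subprocess.run(demo_command(image, **kwargs), check=True)


if __name__ == "__main__":
    # Same backbone and checkpoint as the resnet50 example above.
    run_all(backbone="resnet50",
            ckpt=Path("Model") / "ckpt" / "res50_realtor.pkl")
```

Omitting `backbone` and `ckpt` falls back to whatever defaults `demo.py` uses (resnet18, per the text above).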

The Realtor360 dataset cannot currently be made publicly available due to legal privacy issues. Please refer to the MatterportLayout dataset (coming soon), which resembles Realtor360 in all aspects.