This repository is the official implementation of "Meta-TTS: Meta-Learning for Few-shot Speaker Adaptive Text-to-Speech".

| multi-task learning | meta learning |
|---|---|

This is how I built my environment; yours does not need to be exactly the same:

- Sign up for Comet.ml, find your workspace and API key at www.comet.ml/api/my/settings, and fill them in `config/comet.py`. The Comet logger is used throughout the train/val/test stages.
- Check my training logs here.

- [Optional] Install pyenv for Python version control and switch to Python 3.8.6:

```bash
# After downloading and installing pyenv:
pyenv install 3.8.6
pyenv local 3.8.6
```

- [Optional] Install pyenv-virtualenv as a pyenv plugin for a clean virtual environment:

```bash
# After installing pyenv-virtualenv:
pyenv virtualenv meta-tts
pyenv activate meta-tts
```

- Install the requirements:

```bash
pip install -r requirements.txt
```

First, download LibriTTS and VCTK and change the paths in `config/LibriTTS/preprocess.yaml` and `config/VCTK/preprocess.yaml`. Then run the following preparation steps:

```bash
python3 prepare_align.py config/LibriTTS/preprocess.yaml
python3 prepare_align.py config/VCTK/preprocess.yaml
```

Alignments for LibriTTS are provided here, and alignments for VCTK are provided here.

Unzip the files into `preprocessed_data/LibriTTS/TextGrid/` and `preprocessed_data/VCTK/TextGrid/`, respectively.
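A minimal Python sketch of the unzip step, assuming the downloaded archives are saved locally. The archive filenames and the `extract_alignments` helper are hypothetical placeholders; use whatever names your downloads actually have. Each archive is extracted into the `TextGrid/` directory that the preprocessing script expects:

```python
import zipfile
from pathlib import Path

# Hypothetical archive names: replace them with the files you actually downloaded.
ARCHIVES = {
    "LibriTTS": Path("LibriTTS_TextGrid.zip"),
    "VCTK": Path("VCTK_TextGrid.zip"),
}


def extract_alignments(archive: Path, corpus: str,
                       root: Path = Path("preprocessed_data")) -> Path:
    """Unzip one alignment archive into preprocessed_data/<corpus>/TextGrid/."""
    target = root / corpus / "TextGrid"
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(target)
    return target


if __name__ == "__main__":
    for corpus, archive in ARCHIVES.items():
        print(f"{archive} -> {extract_alignments(archive, corpus)}")
```

Check the layout after extraction: if an archive contains its own top-level folder, the `.TextGrid` files may end up one level deeper than the preprocessing script expects.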

Then run the preprocessing script:

```bash
python3 preprocess.py config/LibriTTS/preprocess.yaml

# Copy stats from LibriTTS to VCTK so that pitch/energy normalization uses the same statistics.
cp preprocessed_data/LibriTTS/stats.json preprocessed_data/VCTK/

python3 preprocess.py config/VCTK/preprocess.yaml
```

To train the models in the paper, run this command:

```bash
python3 main.py -s train \
    -p config/preprocess/<corpus>.yaml \
    -m config/model/base.yaml \
    -t config/train/base.yaml config/train/<corpus>.yaml \
    -a config/algorithm/<algorithm>.yaml
```

To reproduce our results, use 8 V100 GPUs for the meta-learning models and 1 V100 GPU for the baseline models. Otherwise, you may need to tune the gradient accumulation step (`grad_acc_step`) in `config/train/base.yaml` to obtain the correct meta batch size.
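The relationship between the number of GPUs, `grad_acc_step`, and the meta batch size can be sketched as follows. This assumes the usual data-parallel arithmetic (meta batch size = tasks per GPU per step × number of GPUs × `grad_acc_step`); the helper name and the reference values below are hypothetical, so check `config/train/base.yaml` for the actual settings.

```python
def grad_acc_step_for(target_meta_batch: int, tasks_per_gpu: int, num_gpus: int) -> int:
    """Return the grad_acc_step that keeps the meta batch size at target_meta_batch.

    Assumes: meta batch size = tasks_per_gpu * num_gpus * grad_acc_step.
    """
    per_step = tasks_per_gpu * num_gpus
    if target_meta_batch % per_step != 0:
        raise ValueError(
            f"meta batch size {target_meta_batch} is not divisible by "
            f"{tasks_per_gpu} task(s)/GPU x {num_gpus} GPU(s)"
        )
    return target_meta_batch // per_step


# Hypothetical reference setup: 8 GPUs, 1 task per GPU, grad_acc_step = 1.
reference_meta_batch = 1 * 8 * 1
for gpus in (8, 4, 2, 1):
    print(gpus, "GPU(s) -> grad_acc_step =", grad_acc_step_for(reference_meta_batch, 1, gpus))
```

Under these assumptions, halving the GPU count doubles the required `grad_acc_step`, so a single-GPU run would need 8 accumulation steps to match the 8-GPU meta batch.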

Note that each GPU has its own random seed, so even if the meta batch size is the same, using a different number of GPUs is equivalent to using a different random seed.
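To illustrate why the GPU count acts like a seed change, here is a minimal simulation assuming each process seeds its own generator as `base_seed + rank`. This is a common convention used here only for illustration; the repository's actual seeding scheme may differ, and `sample_tasks` is a hypothetical helper.

```python
import random


def sample_tasks(base_seed: int, num_gpus: int, tasks_per_gpu: int, num_speakers: int = 100):
    """Simulate one meta batch: each rank samples speaker ids with its own seed."""
    batch = []
    for rank in range(num_gpus):
        rng = random.Random(base_seed + rank)  # hypothetical per-rank seeding
        batch.extend(rng.randrange(num_speakers) for _ in range(tasks_per_gpu))
    return batch


# Same meta batch size (8 tasks), different GPU counts.
print(sample_tasks(base_seed=0, num_gpus=8, tasks_per_gpu=1))
print(sample_tasks(base_seed=0, num_gpus=1, tasks_per_gpu=8))
```

Both runs are individually reproducible, but they draw different task sequences, which is why results obtained with a different number of GPUs should be compared like runs with a different seed.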

After training, you can find your checkpoints under `output/ckpt/<corpus>/<project_name>/<experiment_key>/checkpoints/`, where the project name is set in `config/comet.py`.
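A small sketch for locating checkpoints in that layout. The helper names are hypothetical, and the default pattern assumes checkpoints are saved as `*.ckpt` files (the PyTorch Lightning default extension); adjust the pattern if your files are named differently.

```python
from pathlib import Path
from typing import Optional


def checkpoint_dir(corpus: str, project_name: str, experiment_key: str,
                   root: Path = Path("output/ckpt")) -> Path:
    """Build output/ckpt/<corpus>/<project_name>/<experiment_key>/checkpoints/."""
    return root / corpus / project_name / experiment_key / "checkpoints"


def latest_checkpoint(ckpt_dir: Path, pattern: str = "*.ckpt") -> Optional[Path]:
    """Return the most recently modified file matching pattern, or None if none exist."""
    if not ckpt_dir.is_dir():
        return None
    candidates = sorted(ckpt_dir.glob(pattern), key=lambda p: p.stat().st_mtime)
    return candidates[-1] if candidates else None
```

The `.name` of the returned path corresponds to the `<checkpoint_file_name>` placeholder used for inference, assuming that argument expects a bare file name.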

To run inference with a trained model, use:

```bash
python3 main.py -s test \
    -p config/preprocess/<corpus>.yaml \
    -m config/model/base.yaml \
    -t config/train/base.yaml config/train/<corpus>.yaml \
    -a config/algorithm/<algorithm>.yaml \
    -e <experiment_key> -c <checkpoint_file_name>
```

The results will be written to `output/result/<corpus>/<experiment_key>/<algorithm>/`.
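If you launch many runs, it can help to assemble these commands programmatically. The sketch below only rebuilds the argument lists of the train and test commands in this README (the helper names are hypothetical, and it assumes the corresponding `config/preprocess/<corpus>.yaml`, `config/train/<corpus>.yaml`, and `config/algorithm/<algorithm>.yaml` files exist for the values you pass). The resulting list can be handed to `subprocess.run`.

```python
import shlex
from typing import List, Optional


def main_py_args(stage: str, corpus: str, algorithm: str,
                 experiment_key: Optional[str] = None,
                 checkpoint: Optional[str] = None) -> List[str]:
    """Assemble the main.py command line for the train or test stage."""
    args = [
        "python3", "main.py", "-s", stage,
        "-p", f"config/preprocess/{corpus}.yaml",
        "-m", "config/model/base.yaml",
        "-t", "config/train/base.yaml", f"config/train/{corpus}.yaml",
        "-a", f"config/algorithm/{algorithm}.yaml",
    ]
    if stage == "test":
        if experiment_key is None or checkpoint is None:
            raise ValueError("the test stage needs both experiment_key (-e) and checkpoint (-c)")
        args += ["-e", experiment_key, "-c", checkpoint]
    return args


def result_dir(corpus: str, experiment_key: str, algorithm: str) -> str:
    """Inference output location: output/result/<corpus>/<experiment_key>/<algorithm>/."""
    return f"output/result/{corpus}/{experiment_key}/{algorithm}/"


# Print a command instead of running it; pass the list to subprocess.run(...) to execute.
print(shlex.join(main_py_args("train", "LibriTTS", "<algorithm>")))
```

Building an argument list (rather than a single shell string) avoids quoting problems with the `<...>` placeholders and paths containing spaces.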

Note: The evaluation code has not been fully refactored yet. `cd evaluation/` and check the `README.md` there.

Note: The checkpoints were produced with an older version of the code and might not be compatible with the current code. We will fix this in the future.

Since our code uses the Comet logger, you might need to create a dummy experiment by running:

```python
from comet_ml import Experiment

experiment = Experiment()
```

Then put the checkpoint files under `output/ckpt/LibriTTS/<project_name>/<experiment_key>/checkpoints/`.
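A sketch of the checkpoint placement step, assuming the downloaded checkpoints are `*.ckpt` files in a local folder. The experiment key of the dummy experiment can be read with `experiment.get_key()` from `comet_ml`; the helper below (a hypothetical name) only copies files into the expected directory, so it runs without Comet installed.

```python
import shutil
from pathlib import Path
from typing import List


def install_pretrained(src_dir: Path, project_name: str, experiment_key: str,
                       corpus: str = "LibriTTS", root: Path = Path("output/ckpt")) -> List[Path]:
    """Copy *.ckpt files into output/ckpt/<corpus>/<project_name>/<experiment_key>/checkpoints/."""
    dest = root / corpus / project_name / experiment_key / "checkpoints"
    dest.mkdir(parents=True, exist_ok=True)
    copied = []
    for ckpt in sorted(src_dir.glob("*.ckpt")):
        # copy2 also preserves file metadata such as modification time.
        copied.append(Path(shutil.copy2(ckpt, dest / ckpt.name)))
    return copied


# Usage (requires comet_ml and a configured API key):
#   from comet_ml import Experiment
#   key = Experiment().get_key()
#   install_pretrained(Path("downloaded_ckpts"), "<project_name>", key)
```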

You can download pretrained models here.

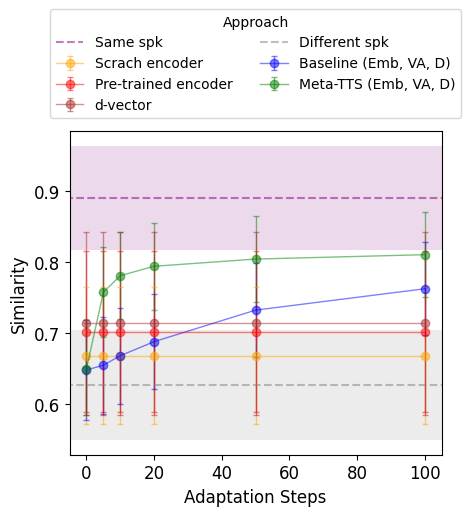

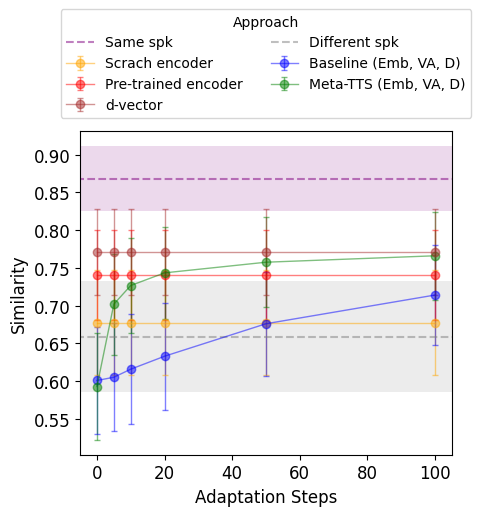

| Corpus | LibriTTS | VCTK |

|---|---|---|

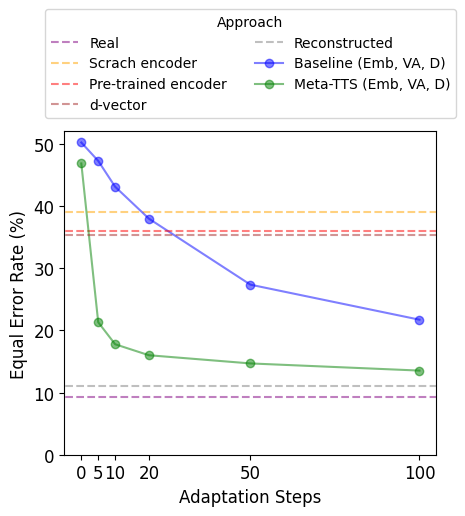

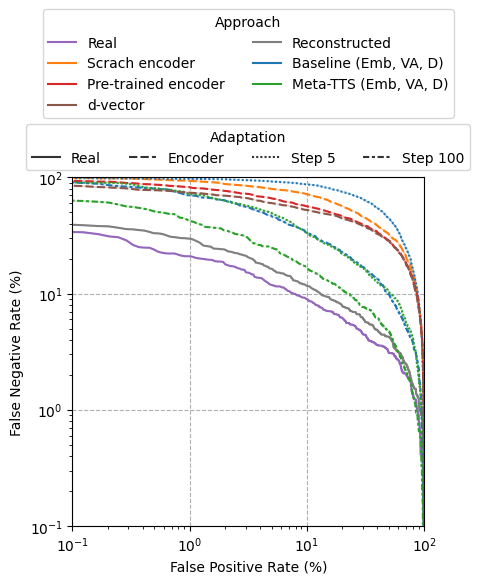

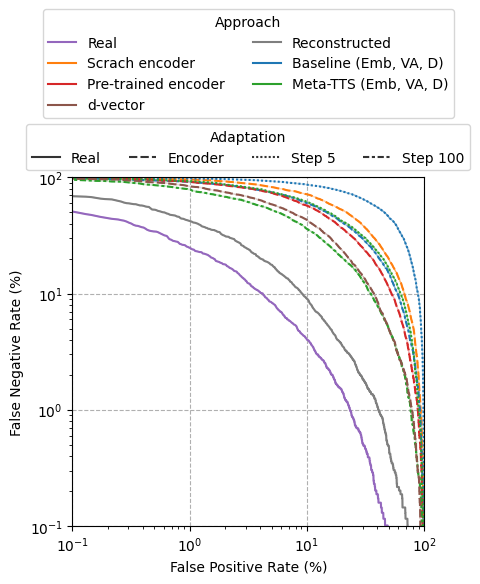

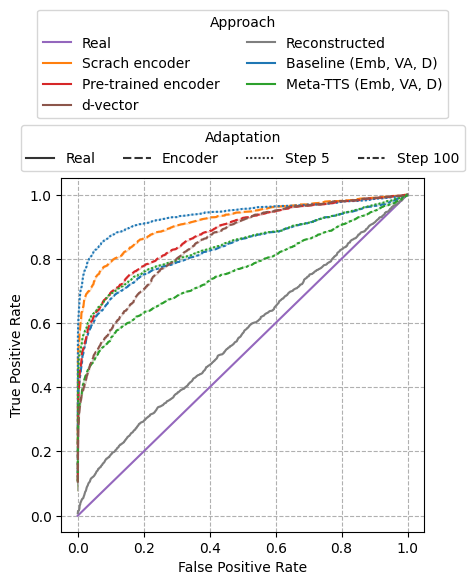

| Speaker Similarity |  |

|

| Speaker Verification |   |

|

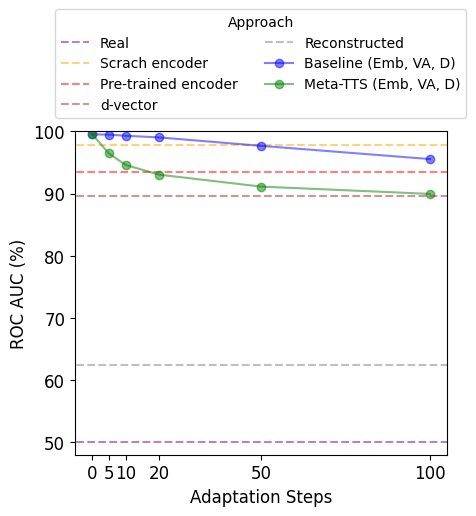

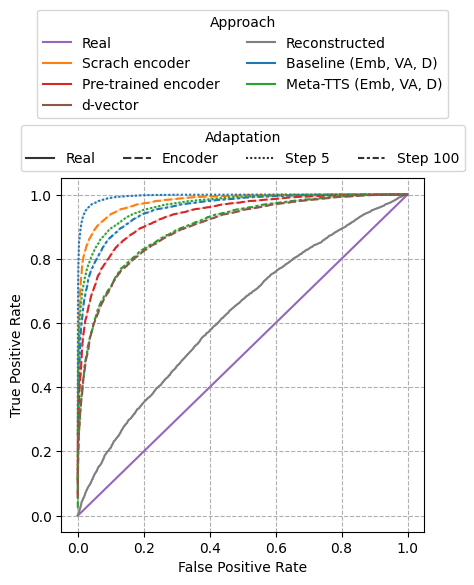

| Synthesized Speech Detection |   |

|