This is the official PyTorch implementation of "Searching for BurgerFormer with Micro-Meso-Macro Space Design" (ICML 2022).

Requirements:

- PyTorch 1.8.0
- timm 0.4.12
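You can quickly check that your environment matches these pinned versions with the sketch below. It is a hypothetical helper that is not part of this repository. It uses only the standard library (`importlib.metadata`, Python 3.8+) and assumes the packages are installed under the distribution names `torch` and `timm`.

```python
from importlib import metadata

# Versions listed in this README (assumed distribution names: "torch", "timm").
PINNED = {"torch": "1.8.0", "timm": "0.4.12"}


def check_pinned_versions(pins):
    """Return {package: (expected, found)} for every package that does not match.

    `found` is None when the package is not installed at all.
    Hypothetical helper, not part of the BurgerFormer code base.
    """
    mismatches = {}
    for name, expected in pins.items():
        try:
            found = metadata.version(name)
        except metadata.PackageNotFoundError:
            found = None
        # Ignore a local build tag such as "+cu111" that CUDA wheels of torch may carry.
        if found is None or found.split("+")[0] != expected:
            mismatches[name] = (expected, found)
    return mismatches


if __name__ == "__main__":
    problems = check_pinned_versions(PINNED)
    for name, (expected, found) in problems.items():
        print(f"{name}: expected {expected}, found {found or 'not installed'}")
    if not problems:
        print("All pinned versions match.")
```

Newer versions may also work. A mismatch reported here only means the environment differs from the tested setup.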

Pre-trained checkpoints are available on Google Drive and Baidu Yun. Place them in the `.checkpoints/` folder.

Note: the access code for Baidu Yun is `gvfl`.
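The sketch below shows one way to locate a downloaded checkpoint and extract its weights before loading them into a model. The helper names `find_checkpoints` and `unwrap_state_dict` are hypothetical. The checkpoint layout is also an assumption: weights may be nested under a `state_dict` or `model` key, and keys may carry a `module.` prefix from distributed training. Check it against the released files and `script/test.sh`.

```python
from pathlib import Path

CHECKPOINT_DIR = Path(".checkpoints")  # folder name as given in this README


def find_checkpoints(folder=CHECKPOINT_DIR, suffixes=(".pth", ".pt", ".tar")):
    """List checkpoint-like files in `folder`, sorted by name (hypothetical helper)."""
    folder = Path(folder)
    if not folder.is_dir():
        return []
    return sorted(p for p in folder.iterdir() if p.is_file() and p.suffix in suffixes)


def unwrap_state_dict(checkpoint):
    """Return a flat parameter dict from a loaded checkpoint dict.

    Assumption (not confirmed by this repo): weights are either the top-level
    dict or nested under "state_dict" / "model", and keys may start with the
    "module." prefix that DataParallel/DistributedDataParallel adds.
    """
    for key in ("state_dict", "model"):
        if isinstance(checkpoint.get(key), dict):
            checkpoint = checkpoint[key]
            break
    prefix = "module."
    return {(k[len(prefix):] if k.startswith(prefix) else k): v
            for k, v in checkpoint.items()}


# Example usage (requires PyTorch and a model built with this repo's own code):
# import torch
# ckpt = torch.load(find_checkpoints()[0], map_location="cpu")
# model.load_state_dict(unwrap_state_dict(ckpt))
```

Loading with `map_location="cpu"` lets you inspect the weights on a machine without a GPU.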

To evaluate a pre-trained BurgerFormer model on ImageNet, run:

`bash script/test.sh`

To retrain a BurgerFormer model on ImageNet, run:

`bash script/train.sh`

- Searching Code

Please cite our paper if you find anything helpful.

@InProceedings{yang2022burgerformer,

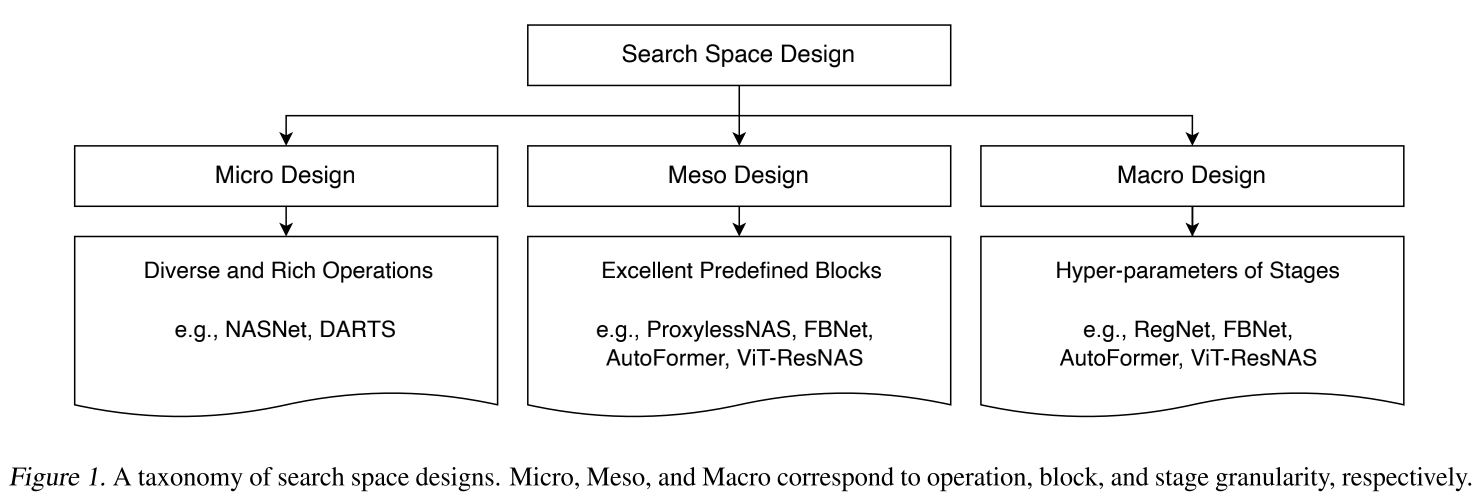

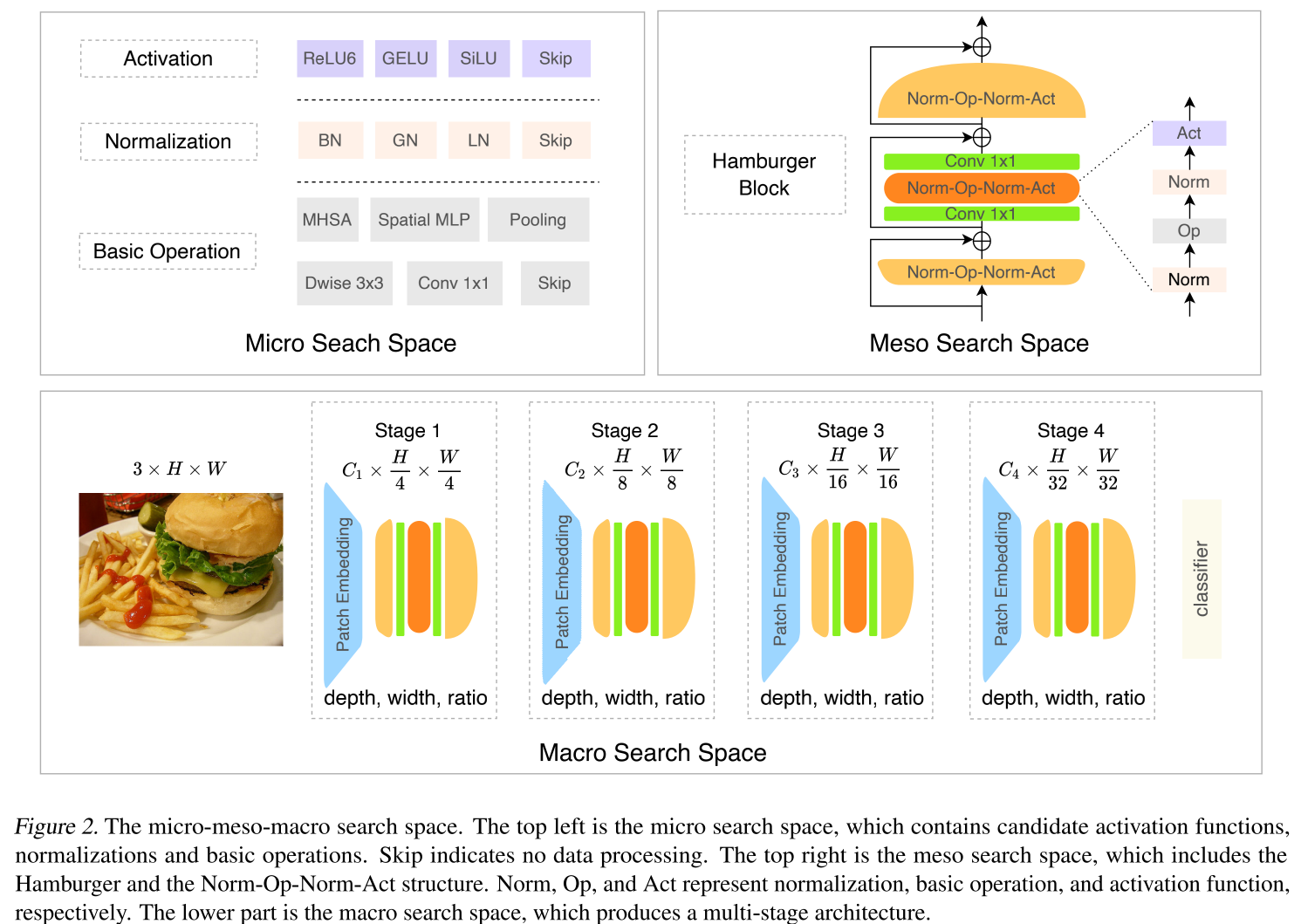

title={Searching for BurgerFormer with Micro-Meso-Macro Space Design},

author={Yang, Longxing and Hu, Yu and Lu, Shun and Sun, Zihao and Mei, Jilin and Han, Yinhe and Li, Xiaowei},

booktitle={ICML},

year={2022}

}

This code is heavily based on poolformer, ViT-ResNAS, pytorch-image-models and mmdetection. Many thanks to their authors for their contributions.