BiomedGPT is built on OFA but is pre-trained and fine-tuned on multi-modal, multi-task biomedical datasets. Dataset details are given in datasets.md. If you have any questions, feel free to contact us or open an issue.

Breaking News! 💥 :

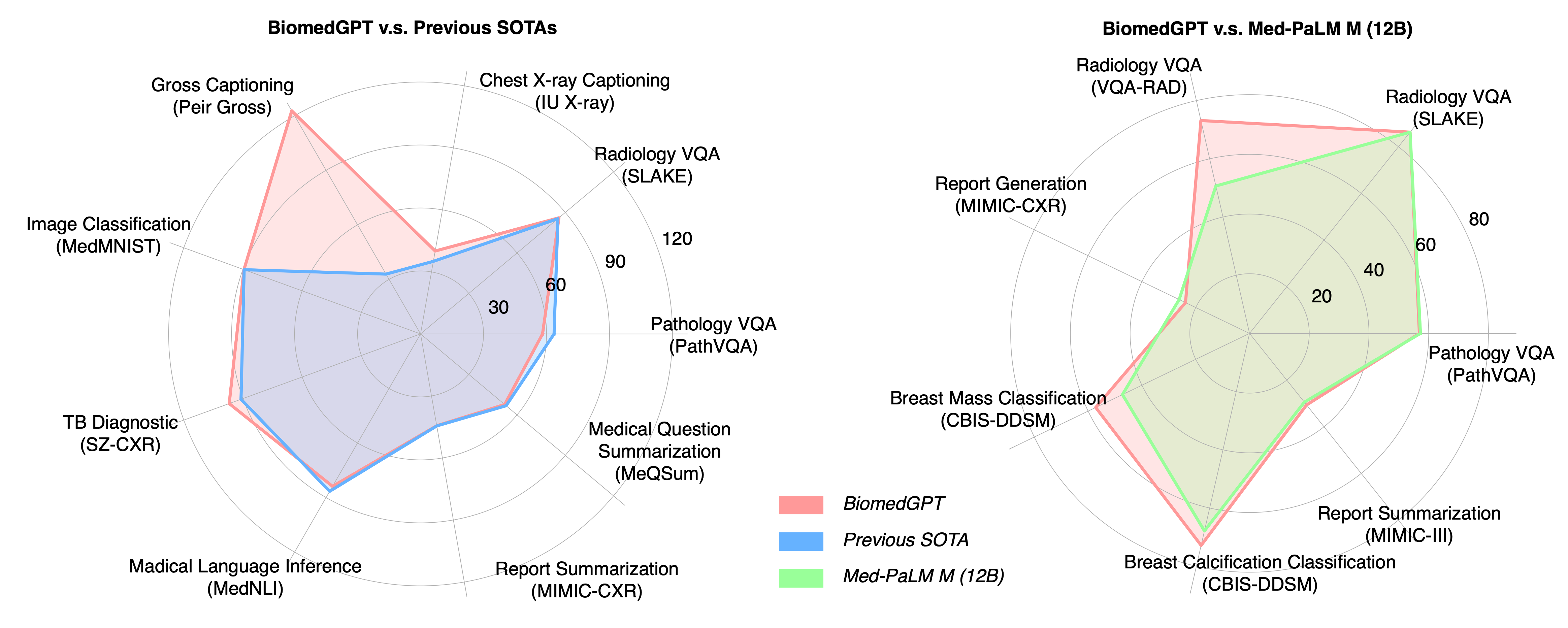

We have updated the fine-tuning recipes to match or surpass the performance of prior state-of-the-art models, including Med-PaLM M (12B) and GPT-4V. For more details, please refer to the updated preprint. The accompanying snapshot compares these results.

- [ ] We're updating our codebase and will soon release the latest SOTA checkpoints for various downstream tasks.

- [ ] Efforts are underway to translate our code from fairseq to Hugging Face, simplifying usage for users.

We provide pretrained checkpoints of BiomedGPT (Dropbox), which can be put in the scripts/ folder for further development. For fine-tuned checkpoints, please refer to checkpoints.md.

I recently received a reminder regarding potential policy issues associated with releasing the model checkpoints, due to the fact that not all pretraining / downstream datasets are fully open-sourced. I need to review the relevant policies and licenses to ensure the legality of sharing the weights. Once I can confirm this, I will enable downloading the checkpoints. Thank you for your patience.

git clone https://github.com/taokz/BiomedGPT

conda env create -f biomedgpt.yml

python -m pip install pip==21.2.4

pip install fairseq

We provide the preprocessing, pretraining, fine-tuning and inference scripts in the scripts/ folder. You can follow the directory layout below:

BiomedGPT/

├── checkpoints/

├── datasets/

│ ├── pretraining/

│ ├── finetuning/

│ └── ...

├── scripts/

│ ├── preprocess/

│ │ ├── pretraining/

│ │ └── finetuning/

│ ├── pretrain/

│ ├── vqa/

│ └── ...

└── ...
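As a minimal sketch of how the layout above could be checked before running any script, the helper below lists which of the named folders are missing and can create the data-side folders. The function names `missing_dirs` and `create_data_dirs` are hypothetical and not part of the repository, and the sketch assumes that `checkpoints/` and `datasets/` are populated by the user while `scripts/` ships with the clone. The `...` entries in the tree are deliberately left out because their contents are not specified.

```python
from pathlib import Path

# Sub-directories named in the layout above; the "..." entries are omitted
# because the README does not spell them out.
LAYOUT = [
    "checkpoints",
    "datasets/pretraining",
    "datasets/finetuning",
    "scripts/preprocess/pretraining",
    "scripts/preprocess/finetuning",
    "scripts/pretrain",
    "scripts/vqa",
]


def missing_dirs(repo_root):
    """Return the layout entries that do not exist under repo_root."""
    root = Path(repo_root)
    return [d for d in LAYOUT if not (root / d).is_dir()]


def create_data_dirs(repo_root):
    """Create only the non-script folders (assumed to be user-populated)."""
    root = Path(repo_root)
    for d in LAYOUT:
        if not d.startswith("scripts/"):
            (root / d).mkdir(parents=True, exist_ok=True)
```

Calling `missing_dirs("BiomedGPT")` from the parent directory of the clone returns an empty list when every named folder is in place.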

Please follow datasets.md to prepare the pretraining datasets, which consist of four TSV files in ./datasets/pretraining/: vision_language.tsv, text.tsv, image.tsv and detection.tsv.
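Before launching pretraining, it may help to confirm that the four TSV files are actually in place. The following is a small sketch under that assumption; `check_pretraining_files` is a hypothetical helper, not something provided by the repository, and it only tests that each file exists and is non-empty, without validating the TSV columns.

```python
from pathlib import Path

# File names taken from the paragraph above.
PRETRAIN_TSVS = ("vision_language.tsv", "text.tsv", "image.tsv", "detection.tsv")


def check_pretraining_files(data_dir="./datasets/pretraining"):
    """Map each expected TSV name to True if it exists and is non-empty."""
    base = Path(data_dir)
    status = {}
    for name in PRETRAIN_TSVS:
        path = base / name
        status[name] = path.is_file() and path.stat().st_size > 0
    return status
```

For example, `[n for n, ok in check_pretraining_files().items() if not ok]` gives the names that still need to be prepared.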

cd scripts/pretrain

bash pretrain_tiny.sh

Feel free to modify the hyperparameters in the bash script to suit your requirements or for ablation studies.

We provide run scripts for fine-tuning and inference. Log files are written during execution. Before fine-tuning or inference, please refer to

Visual Question Answering

cd scripts/vqa

bash train_vqa_rad_beam.sh  # for fine-tuning

bash evaluate_vqa_rad_beam.sh  # for inference

Image Captioning

cd scripts/caption

bash train_peir_gross.sh  # for fine-tuning

bash evaluate_peir_gross.sh  # for inference

Text Summarization

cd scripts/text_sum

bash train_meqsum.sh  # for fine-tuning

bash evaluate_meqsum.sh  # for inference

Natural Language Inference

cd scripts/mednli

bash train_mednli.sh  # for fine-tuning

bash evaluate_mednli.sh  # for inference

Image Classification

cd scripts/image_cls

bash train_medmnist.sh  # for fine-tuning: a template; set different hyperparameters for each MedMNIST dataset if required

bash evaluate_medmnist.sh  # for inference: a template
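The per-task commands above all follow the same pattern: change into the task folder, then run either the training or the evaluation script. A possible way to drive them from Python is sketched below. The `TASKS` table simply restates the folder and script names listed above; the short task keys and the helpers `build_command` and `run_task` are hypothetical and not part of the repository.

```python
import subprocess
from pathlib import Path

# (directory, fine-tuning script, inference script), copied from the commands above.
TASKS = {
    "vqa": ("scripts/vqa", "train_vqa_rad_beam.sh", "evaluate_vqa_rad_beam.sh"),
    "caption": ("scripts/caption", "train_peir_gross.sh", "evaluate_peir_gross.sh"),
    "text_sum": ("scripts/text_sum", "train_meqsum.sh", "evaluate_meqsum.sh"),
    "mednli": ("scripts/mednli", "train_mednli.sh", "evaluate_mednli.sh"),
    "image_cls": ("scripts/image_cls", "train_medmnist.sh", "evaluate_medmnist.sh"),
}


def build_command(task, mode):
    """Return (working_dir, argv) for a task; mode is "train" or "eval"."""
    if task not in TASKS:
        raise KeyError(f"unknown task: {task}")
    if mode not in ("train", "eval"):
        raise ValueError("mode must be 'train' or 'eval'")
    directory, train_script, eval_script = TASKS[task]
    script = train_script if mode == "train" else eval_script
    return directory, ["bash", script]


def run_task(task, mode, repo_root="."):
    """Run the script from inside its own folder, mirroring `cd` then `bash`."""
    directory, argv = build_command(task, mode)
    return subprocess.run(argv, cwd=Path(repo_root) / directory, check=True)
```

With this sketch, `run_task("vqa", "train")` executed from the repository root is intended to be equivalent to the two VQA fine-tuning commands shown above. Running the scripts from their own folder is kept on purpose, since they may rely on relative paths.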

If you use the BiomedGPT model or our code in a publication, please cite 🤗:

@misc{zhang2023biomedgpt,

title={BiomedGPT: A Unified and Generalist Biomedical Generative Pre-trained Transformer for Vision, Language, and Multimodal Tasks},

author={Kai Zhang and Jun Yu and Zhiling Yan and Yixin Liu and Eashan Adhikarla and Sunyang Fu and Xun Chen and Chen Chen and Yuyin Zhou and Xiang Li and Lifang He and Brian D. Davison and Quanzheng Li and Yong Chen and Hongfang Liu and Lichao Sun},

year={2023},

eprint={2305.17100},

archivePrefix={arXiv},

primaryClass={cs.CL}

}