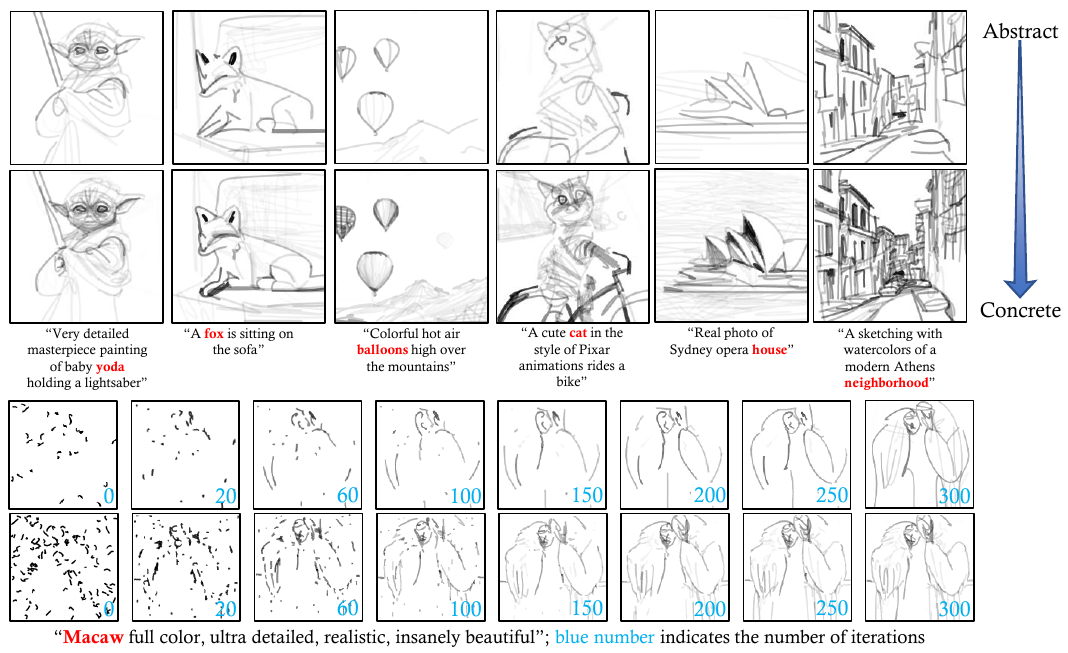

This repository contains the official implementation of the NeurIPS 2023 paper "DiffSketcher: Text Guided Vector Sketch Synthesis through Latent Diffusion Models", which generates high-quality vector sketches from text prompts. Our project page can be found here.

- [10/2023] We released the DiffSketcher code.

- [10/2023] We released the VectorFusion code.

- [10/2023] Thanks to @camenduru, DiffSketcher-colab has been released.

- Add a webUI demo.

- Add support for colorful results and oil painting.

Create a new conda environment:

conda create --name diffsketcher python=3.10

conda activate diffsketcher

Install pytorch and the following libraries:

conda install pytorch==1.13.1 torchvision==0.14.1 torchaudio==0.13.1 pytorch-cuda=11.6 -c pytorch -c nvidia

pip install omegaconf BeautifulSoup4

pip install opencv-python scikit-image matplotlib visdom wandb

pip install triton numba

pip install numpy scipy timm scikit-fmm einops

pip install accelerate transformers safetensors datasets

Install CLIP:

pip install ftfy regex tqdm

pip install git+https://github.com/openai/CLIP.git

Install diffusers:

pip install diffusers==0.20.2

Install xformers (requires python=3.10):

conda install xformers -c xformers

Install diffvg:

git clone https://github.com/BachiLi/diffvg.git

cd diffvg

git submodule update --init --recursive

conda install -y -c anaconda cmake

conda install -y -c conda-forge ffmpeg

pip install svgwrite svgpathtools cssutils torch-tools

python setup.py install

Preview:

Script:

python run_painterly_render.py \

-c diffsketcher.yaml \

-eval_step 10 -save_step 10 \

-update "token_ind=4 num_paths=96 sds.warmup=1000 num_iter=1500" \

-pt "a photo of Sydney opera house" \

-respath ./workdir/sydney_opera_house \

-d 8019 \

--download

- -c, a.k.a. --config: configuration file, stored in DiffSketcher/config/.

- -eval_step: the interval, in steps, between evaluations (evaluating too frequently lengthens the run).

- -save_step: the interval, in steps, between saving results (saving too frequently lengthens the run).

- -update: edits hyper-parameters of the configuration file from the command line, so you don't need to create a new yaml.

- -pt, a.k.a. --prompt: text prompt.

- -respath, a.k.a. --results_path: the folder to save results.

- -d, a.k.a. --seed: random seed.

- --download: download models from huggingface automatically when you first run them.
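To illustrate what an `-update` string expresses, here is a minimal sketch of how space-separated `key=value` pairs with dotted keys could be turned into nested overrides and merged into a configuration. It is not taken from the repository: `parse_update`, `apply_overrides`, and the default values in `base` are hypothetical, and the actual script may parse the string differently.

```python
import ast


def parse_update(update: str) -> dict:
    """Turn 'a=1 b.c=2' into {'a': 1, 'b': {'c': 2}} (hypothetical helper)."""
    overrides: dict = {}
    for item in update.split():
        key, _, raw = item.partition("=")
        try:
            value = ast.literal_eval(raw)  # ints, floats, True/False
        except (ValueError, SyntaxError):
            value = raw  # anything else stays a plain string
        node = overrides
        *parents, leaf = key.split(".")
        for name in parents:
            node = node.setdefault(name, {})
        node[leaf] = value
    return overrides


def apply_overrides(config: dict, overrides: dict) -> dict:
    """Recursively merge overrides into a copy of config (hypothetical helper)."""
    merged = dict(config)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = apply_overrides(merged[key], value)
        else:
            merged[key] = value
    return merged


# Hypothetical defaults, standing in for values loaded from the yaml file.
base = {
    "token_ind": 1,
    "num_paths": 128,
    "num_iter": 2000,
    "sds": {"warmup": 2000, "guidance_scale": 7.5},
}

overrides = parse_update("token_ind=4 num_paths=96 sds.warmup=1000 num_iter=1500")
config = apply_overrides(base, overrides)
print(config)
```

Only the keys named in the string change; in this sketch the untouched `sds.guidance_scale` entry keeps its default.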

crucial:

- -update "token_ind=2" indicates the index of cross-attn maps to init strokes.

- -update "num_paths=96" indicates the number of strokes.
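When choosing `token_ind`, it can help to list the prompt's words next to candidate indices. The sketch below rests on two assumptions that are not stated above: that there is one cross-attention map per prompt token, and that index 0 belongs to the tokenizer's start-of-text token. It also approximates tokenization by splitting on whitespace, whereas a real tokenizer may split a word into several tokens, so treat the output as a rough guide. `indexed_tokens` is a hypothetical helper.

```python
def indexed_tokens(prompt: str) -> list[tuple[int, str]]:
    """Pair each whitespace-separated word with an assumed token index."""
    # Assumption: index 0 is the start-of-text token, words follow from index 1.
    tokens = ["<start>"] + prompt.lower().split()
    return list(enumerate(tokens))


for index, token in indexed_tokens("a photo of Sydney opera house"):
    print(index, token)
```

Under these assumptions, `token_ind=4` would correspond to the word "sydney" in the example prompt.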

optional:

- -npt, a.k.a. --negative_prompt: negative text prompt.

- -mv, a.k.a. --make_video: make a video of the rendering process (this takes much longer).

- -frame_freq, a.k.a. --video_frame_freq: controls the video frame frequency.

- Note: download the U2Net model and place it in the checkpoint/ dir if xdog_intersec=True.

- Add enable_xformers=True in -update to enable xformers for a speed-up.

- Add gradient_checkpoint=True in -update to use gradient checkpointing when VRAM is limited.

Preview:

Script:

python run_painterly_render.py \

-c diffsketcher-width.yaml \

-eval_step 10 -save_step 10 \

-update "token_ind=4 num_paths=48 num_iter=500" \

-pt "a photo of Sydney opera house" \

-respath ./workdir/sydney_opera_house \

-d 8019 \

--download

Check run.md for more scripts.
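For repeated runs (different seeds, prompts, or stroke counts), the command can be assembled in Python instead of edited by hand. The following is a minimal sketch that uses only the flags shown above; `build_command` is a hypothetical helper, not part of the repository, and it only prints the command rather than launching it.

```python
import shlex


def build_command(
    config: str,
    prompt: str,
    results_path: str,
    seed: int,
    updates: dict,
    eval_step: int = 10,
    save_step: int = 10,
    download: bool = True,
) -> list[str]:
    """Assemble the run_painterly_render.py invocation as an argument list."""
    # The -update flag takes one space-separated string of key=value pairs.
    update_str = " ".join(f"{key}={value}" for key, value in updates.items())
    cmd = [
        "python", "run_painterly_render.py",
        "-c", config,
        "-eval_step", str(eval_step),
        "-save_step", str(save_step),
        "-update", update_str,
        "-pt", prompt,
        "-respath", results_path,
        "-d", str(seed),
    ]
    if download:
        cmd.append("--download")
    return cmd


cmd = build_command(
    config="diffsketcher-width.yaml",
    prompt="a photo of Sydney opera house",
    results_path="./workdir/sydney_opera_house",
    seed=8019,
    updates={"token_ind": 4, "num_paths": 48, "num_iter": 500},
)
print(shlex.join(cmd))  # shell-quoted, ready to paste into a terminal
```

To launch the run from the repository root, the same list can be passed to `subprocess.run(cmd, check=True)`.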

The project is built based on the following repository:

We gratefully thank the authors for their wonderful works.

If you use this code for your research, please cite the following work:

@inproceedings{xing2023diffsketcher,

title={DiffSketcher: Text Guided Vector Sketch Synthesis through Latent Diffusion Models},

author={Xing, Ximing and Wang, Chuang and Zhou, Haitao and Zhang, Jing and Yu, Qian and Xu, Dong},

booktitle={Advances in Neural Information Processing Systems (NeurIPS)},

year={2023}

}

This work is licensed under the MIT License.