ReferIt3D: Neural Listeners for Fine-Grained 3D Object Identification in Real-World Scenes [ECCV 2020 (Oral)]

Created by: Panos Achlioptas, Ahmed Abdelreheem, Fei Xia, Mohamed Elhoseiny, Leonidas Guibas

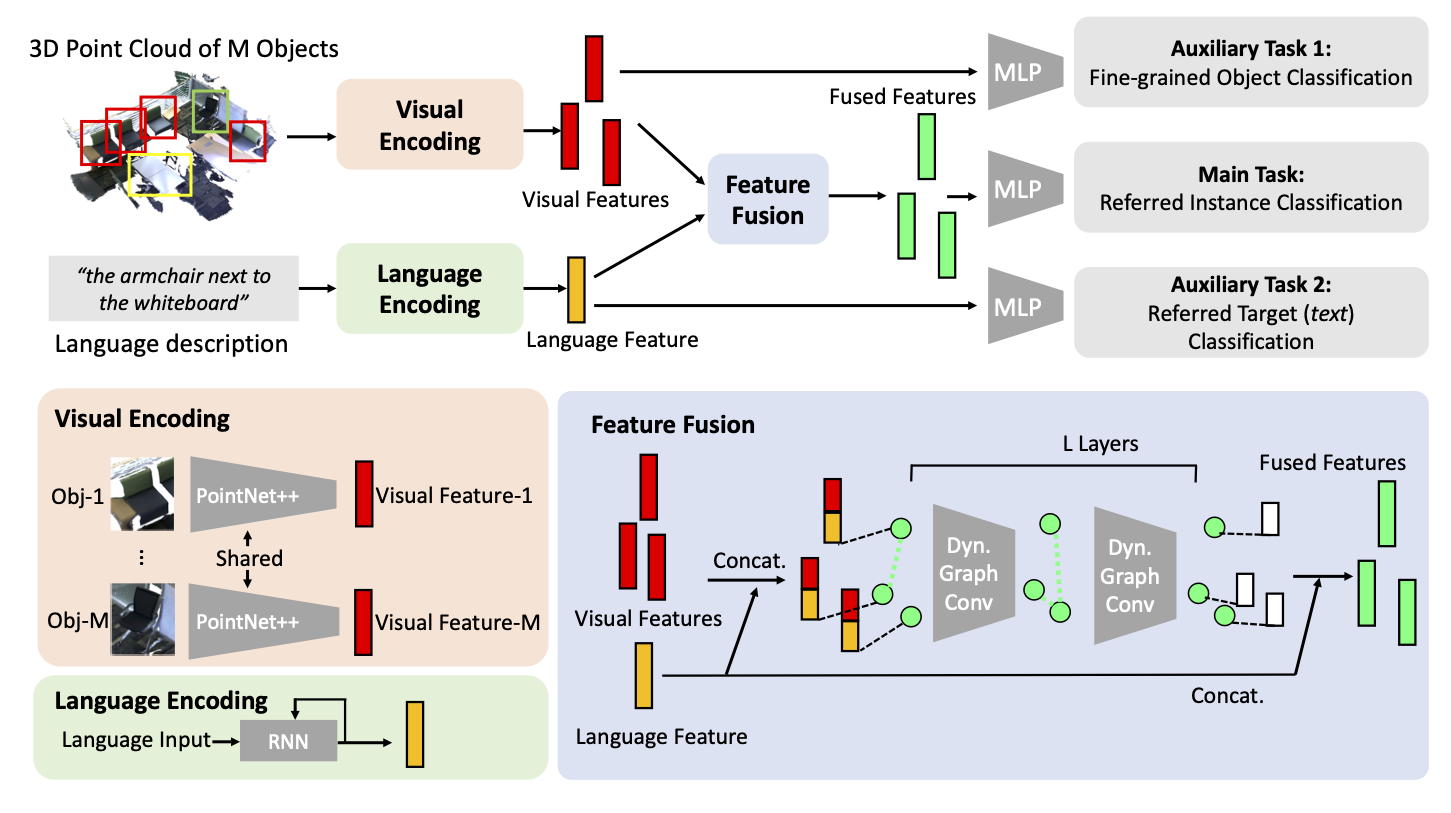

This work is based on our ECCV-2020 paper. There, we proposed the novel task of identifying a 3D object in a real-world scene given discriminative language, created two relevant datasets (Nr3D and Sr3D) and proposed a 3D neural listener (ReferIt3DNet) for solving this task. The bulk of the provided code serves the training and evaluation of ReferIt3DNet on our data. For more information, please visit our project's webpage.

- Python 3.x with numpy, pandas, matplotlib (and a few other common packages; please see setup.py)

- PyTorch 1.x

Our code is tested with Python 3.6.9, PyTorch 1.4 and CUDA 10.0 on Ubuntu 14.04.
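You can compare a machine against the tested setup above with a small helper like the following. This is a sketch, not part of the referit3d package. `collect_environment` and `report` are hypothetical names. The helper only reports the Python version and the PyTorch and CUDA versions that PyTorch itself was built with (via `torch.__version__` and `torch.version.cuda`). It does not check the system CUDA toolkit or gcc.

```python
import importlib
import platform

# Versions this README reports as tested; used only for a friendly comparison.
TESTED = {"python": "3.6.9", "torch": "1.4", "cuda": "10.0"}


def collect_environment():
    """Return the Python, PyTorch and PyTorch-build CUDA versions.

    Entries are None when a component is missing (PyTorch not installed,
    or a CPU-only PyTorch build for which torch.version.cuda is None).
    """
    info = {"python": platform.python_version(), "torch": None, "cuda": None}
    try:
        torch = importlib.import_module("torch")
    except ImportError:
        return info
    info["torch"] = torch.__version__
    info["cuda"] = torch.version.cuda
    return info


def report(info):
    # Print one line per component, comparing by version prefix (e.g. "1.4" vs "1.4.0").
    for key, tested in TESTED.items():
        found = info.get(key)
        if found is None:
            status = "not found"
        elif found.startswith(tested):
            status = "matches tested version"
        else:
            status = "differs from tested version"
        print(f"{key:>6}: {found} (tested: {tested}) -> {status}")


if __name__ == "__main__":
    report(collect_environment())
```

A version that differs from the tested one is not necessarily a problem. It only marks where to look first if something fails.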

- (Recommended) Create a new Anaconda environment with the following commands:

conda create -n referit3d_env python=3.6.9 cudatoolkit=10.0

conda activate referit3d_env

conda install pytorch torchvision -c pytorch

- Install the referit3d Python package using:

cd referit3d

pip install -e .

- To use a PointNet++ visual encoder, you need to compile its CUDA layers:

Note: this compilation also requires gcc 5.4 or later.

cd external_tools/pointnet2

python setup.py install

First, you must download the train/val scans of ScanNet if you do not have them locally. Please refer to the ScanNet Dataset for more details.
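Before preprocessing, you may want to confirm which scans are present locally. The sketch below assumes the usual ScanNet layout: one folder per scan, named like `scene0000_00`, under a `scans/` directory. The default required file suffix `_vh_clean_2.ply` is an assumption, so change `required_suffixes` to the files your pipeline actually reads. `find_scans` is a hypothetical helper and not part of referit3d.

```python
import re
from pathlib import Path

# ScanNet scan ids look like "scene0000_00": scene number, then scan number.
SCAN_ID = re.compile(r"^scene\d{4}_\d{2}$")


def find_scans(scans_dir, required_suffixes=("_vh_clean_2.ply",)):
    """Return (complete, incomplete) sorted lists of scan ids under scans_dir.

    A scan is "complete" when every f"{scan_id}{suffix}" file exists in its
    folder. The default suffix is an assumption; adjust it to your needs.
    """
    complete, incomplete = [], []
    for folder in sorted(Path(scans_dir).iterdir()):
        # Skip stray files and folders that are not named like a scan.
        if not folder.is_dir() or not SCAN_ID.match(folder.name):
            continue
        has_all = all(
            (folder / f"{folder.name}{suffix}").is_file()
            for suffix in required_suffixes
        )
        (complete if has_all else incomplete).append(folder.name)
    return complete, incomplete
```

For example, `find_scans("/path/to/scannet/scans")` returns the scans that are ready alongside those that are missing files. The path is a placeholder.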

- Nr3D: you can download Nr3D here (10.7MB)

- Sr3D / Sr3D+: you can download Sr3D/Sr3D+ here (19MB / 20MB)
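After downloading, it can help to sanity-check a CSV before training. The sketch below uses only the standard library. The column names `scan_id` and `utterance` are assumptions about the CSV header, so verify them against your file. `summarize_referit3d_csv` is a hypothetical helper.

```python
import csv
from collections import Counter


def summarize_referit3d_csv(f, scan_col="scan_id", text_col="utterance"):
    """Summarize a ReferIt3D-style CSV read from an open text file.

    The column names are assumptions; check your CSV header first.
    Returns (number of non-empty utterances, Counter of utterances per scan).
    """
    reader = csv.DictReader(f)
    missing = {scan_col, text_col} - set(reader.fieldnames or [])
    if missing:
        raise KeyError(f"columns not found in CSV header: {sorted(missing)}")
    per_scan = Counter()
    n_utterances = 0
    for row in reader:
        # Count only rows that actually carry an utterance.
        if row[text_col].strip():
            per_scan[row[scan_col]] += 1
            n_utterances += 1
    return n_utterances, per_scan
```

To use it, open the downloaded file with `open(path, newline="")` and pass in the file object. Calling `per_scan.most_common(5)` then lists the scans with the most utterances.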

Since Sr3D is a synthetic dataset, you can change its generation hyper-parameters to create a version customized to your needs; please see referit3d/data_generation/sr3d/.
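To illustrate what "synthetic" means here, the toy function below produces a template utterance for a single spatial relation ("closest") from object centroids. It is a hypothetical illustration only, not the generator in referit3d/data_generation/sr3d/. The template wording and the `min_margin` threshold are made up to show the kind of hyper-parameter such a generator can expose.

```python
import math


def _distance(p, q):
    # Euclidean distance between two 3D points.
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def closest_reference(objects, target_class, anchor_class, min_margin=0.1):
    """Toy template-based reference (hypothetical; not the Sr3D generator).

    objects: list of (object_id, class_name, (x, y, z)) centroids.
    Returns (target_id, utterance), or None when the reference would be
    ill-posed or too ambiguous under the made-up min_margin threshold.
    """
    anchors = [o for o in objects if o[1] == anchor_class]
    targets = [o for o in objects if o[1] == target_class]
    # Require a unique anchor and at least one same-class distractor.
    if len(anchors) != 1 or len(targets) < 2:
        return None
    anchor_xyz = anchors[0][2]
    ranked = sorted(targets, key=lambda o: _distance(o[2], anchor_xyz))
    gap = _distance(ranked[1][2], anchor_xyz) - _distance(ranked[0][2], anchor_xyz)
    if gap < min_margin:
        return None  # the two closest candidates are too close to call
    return ranked[0][0], f"the {target_class} that is closest to the {anchor_class}"
```

Raising `min_margin` yields fewer but less ambiguous references. Thresholds of this kind are what you would tune when generating a custom synthetic version.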

- To train on either the Nr3D or the Sr3D dataset, use the following commands:

cd referit3d/scripts/

python train_referit3d.py -scannet-file the_processed_scannet_file -referit3D-file dataset_file.csv --log-dir dir_to_log --n-workers 4

Feel free to change the number of workers to match your number of CPUs and your RAM size.
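If you launch many runs, you can assemble the command programmatically. The sketch below uses only the flags spelled out in this README: training, joint training with `--augment-with-sr3d`, and `--mode evaluate` with `--resume-path` and `--batch-size`. It does not validate them against the script's actual argument parser. `build_command` and `to_shell` are hypothetical helpers. Capping the default worker count at 4 is an arbitrary choice that mirrors the examples.

```python
import os
import shlex


def build_command(scannet_file, referit3d_file, mode="train", log_dir=None,
                  resume_path=None, sr3d_file=None, n_workers=None, batch_size=None):
    """Assemble a train_referit3d.py argument list from the README's flags."""
    if mode not in ("train", "evaluate"):
        raise ValueError(f"unsupported mode: {mode!r}")
    if n_workers is None:
        # Match the CPU count, capped at the README's example value of 4.
        n_workers = min(os.cpu_count() or 1, 4)
    cmd = ["python", "train_referit3d.py"]
    if mode == "evaluate":
        cmd += ["--mode", "evaluate"]
    # Note: these two flags use a single leading dash, exactly as in the README.
    cmd += ["-scannet-file", scannet_file, "-referit3D-file", referit3d_file]
    if log_dir is not None:
        cmd += ["--log-dir", log_dir]
    if resume_path is not None:
        cmd += ["--resume-path", resume_path]
    if sr3d_file is not None:
        cmd += ["--augment-with-sr3d", sr3d_file]
    cmd += ["--n-workers", str(n_workers)]
    if batch_size is not None:
        cmd += ["--batch-size", str(batch_size)]
    return cmd


def to_shell(cmd):
    # Quote each argument so the printed command can be pasted into a shell.
    return " ".join(shlex.quote(arg) for arg in cmd)
```

You can print the resulting list with `to_shell`, or run it directly with `subprocess.run(cmd, cwd="referit3d/scripts", check=True)`.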

- To train on Nr3D jointly with Sr3D, add the following argument:

--augment-with-sr3d sr3d_dataset_file.csv

- To evaluate on either the Nr3D or the Sr3D dataset, use the following commands:

cd referit3d/scripts/

python train_referit3d.py --mode evaluate -scannet-file the_processed_scannet_file -referit3D-file dataset_file.csv --resume-path the_path_to_the_best_model.pth --n-workers 4 --batch-size 64

- To evaluate a jointly trained model, add the following argument to the above command:

--augment-with-sr3d sr3d_dataset_file.csv

You can download pretrained ReferIt3DNet models on Nr3D and Sr3D here. Please extract the zip file and then copy the extracted folder into the referit3d/log folder. You can then run the following command to evaluate:

cd referit3d/scripts

python train_referit3d.py --mode evaluate -scannet-file path_to_keep_all_points_00_view_with_global_scan_alignment.pkl -referit3D-file path_to_corresponding_csv.csv --resume-path checkpoints/best_model.pth
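The extract-and-copy step can also be scripted. The sketch below assumes the download is a regular zip archive and that checkpoints are named `best_model.pth`, as in the command above. It searches the archive listing rather than assuming a fixed folder name, because the archive's internal layout is not described here. `install_pretrained` is a hypothetical helper and not part of the referit3d package.

```python
import zipfile
from pathlib import Path


def install_pretrained(zip_path, log_root="referit3d/log", checkpoint_name="best_model.pth"):
    """Extract a pretrained-model zip into log_root and list its checkpoints.

    Returns the sorted paths (under log_root) of every extracted file named
    checkpoint_name. Only use this on archives from a source you trust.
    """
    log_root = Path(log_root)
    log_root.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as zf:
        zf.extractall(log_root)
        names = zf.namelist()
    return sorted(log_root / name for name in names if Path(name).name == checkpoint_name)
```

Each returned path is a candidate value for `--resume-path`.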

We wish to aggregate and highlight results from different approaches tackling the problem of fine-grained 3D object identification via language. If you use either of our datasets with a new method, please let us know so we can add your method and its results to our benchmark-aggregating page.

@article{achlioptas2020referit_3d,
  title={ReferIt3D: Neural Listeners for Fine-Grained 3D Object Identification in Real-World Scenes},
  author={Achlioptas, Panos and Abdelreheem, Ahmed and Xia, Fei and Elhoseiny, Mohamed and Guibas, Leonidas},
  journal={16th European Conference on Computer Vision (ECCV)},
  year={2020}
}

The code is licensed under the MIT license (see LICENSE.md for details).