### WAN Infrastructure and Connectivity Manager

WICM is an implementation of the ETSI MANO defined WIM (WAN Infrastructure Manager) for the T-Nova project.

The WIM is described in ETSI GS NFV-MAN 001 V1.1.1 as:

Interface with the underlying Network Controllers to request virtual connectivity services. In such a case, the VIMs/WIMs interface with the Network Controllers over the Nf-Vi reference point. There could be multiple Network Controllers under a VIM (for example, if different virtual network partitioning techniques are used within the domain); in this case, the VIM is responsible to request virtual networks from each underlying Network Controller and setup the interworking function between them. This acknowledges the fact that there might be existing Network Controllers in the NFVI already deployed and in use for connectivity prior to the instantiation of the VIM/NFVO.

Establish the connectivity services directly by configuring the forwarding tables of the underlying Network Functions, hence becoming the Network Controller part of the VIM (although it is not explicitly shown in the figure). In such a case, the VIM directly controls some elements, for example, a software switch (a.k.a. vSwitch). This could also be the case if there are Network Functions that do not fall within the Administrative Domain of a Network Controller.

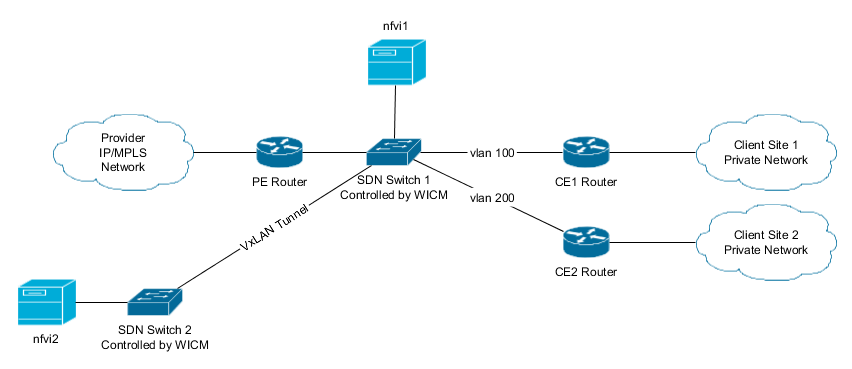

WICM in T-Nova enables clients to acquire and use virtual network functions as a service. It is responsible for redirecting traffic from a client into one or several NFVI-PoPs by means of SDN switches. The switches are connected using VxLAN tunnels when direct cable connections are unavailable.

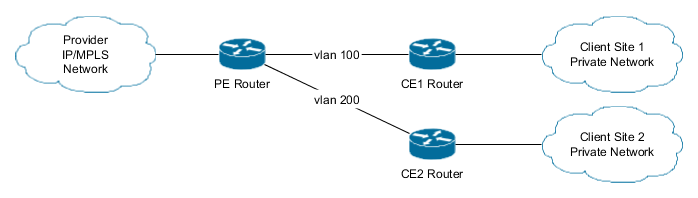

Consider the base scenario for an IP/MPLS VPN:

PE stands for Provider Edge and CE for Client Edge. Client Site 1 has vlan 100 for its attachment circuit to the PE router, while Client Site 2 has vlan 200.

Client 1 would like to use some virtual network functions offered by its network provider, available at the NFVI-PoPs nfvi1 and nfvi2. nfvi1 is close to client 1, while nfvi2 is farther away. Which one to use is negotiated between the client and the provider; both can be used. In this example, traffic from client site 1 to the Internet goes first to nfvi1, while traffic coming in from the Internet goes first to nfvi2 and then to nfvi1.

To redirect the traffic from client 1 to the NFVI-PoPs we will use an SDN switch:

Now the orchestrator may request WICM to redirect traffic from client 1 into the NFVI-PoPs. WICM is also in charge of configuring the SDN switches to send processed packets on to their original destination.

This request is done in a two-step process:

- The orchestrator requests WICM to reserve VLANs for each of the NFVI-PoPs that are going to be used for the client (3 VLANs in this case).
- WICM answers with one VLAN per NFVI-PoP per direction requested. These VLANs are now reserved for that client.
- The orchestrator instantiates the respective services on the correct VLANs so that they receive the redirected traffic. Then, once the VNFs are ready, it requests WICM to start redirecting traffic.
- Traffic redirection is enabled!

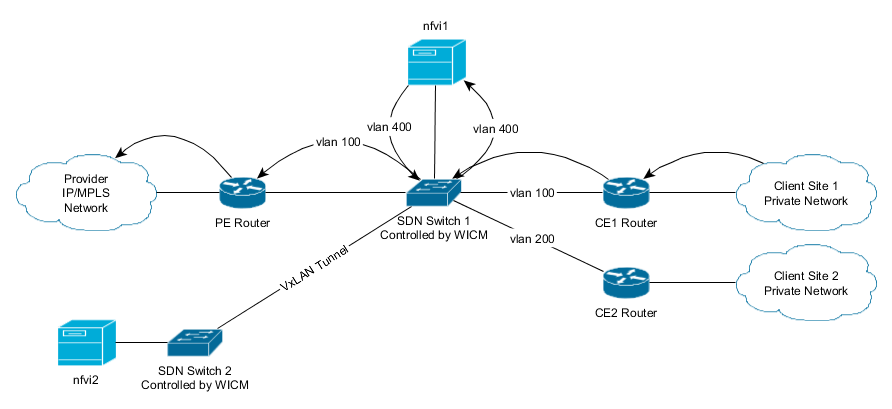

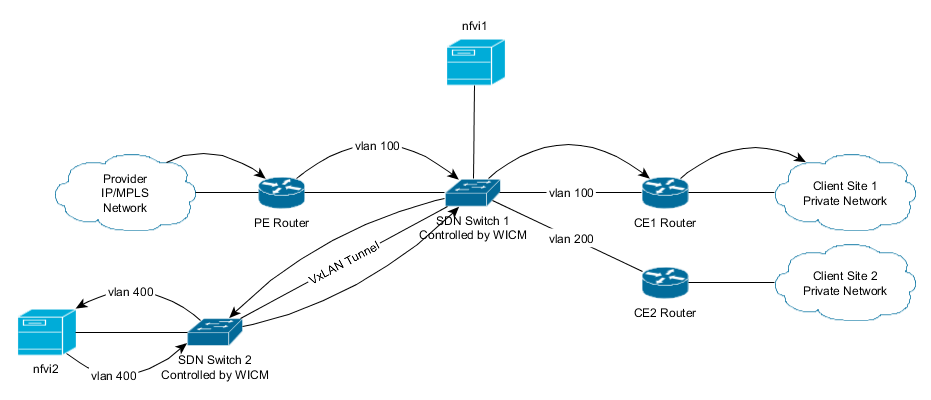

#### For example, if WICM reserved VLAN 400@nfvi1 for direction ce->pe and VLAN 400@nfvi2 for direction pe->ce, the traffic would flow like this:

The whole process is transparent to both the PE and CE routers involved, which only ever see VLAN 100. This ensures compatibility with legacy networks at minimum cost.

The SDN switches rewrite the VLAN tags, ensuring that packets enter both the routers and the NFVI-PoPs with the correct VLANs.
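The tag rewriting described above can be traced with a few lines of Python. This is only a sketch: the function `trace_vlans` and the location labels are hypothetical, and it assumes that each NFVI-PoP hands a packet back on the same VLAN it received it on, which the text does not state explicitly.

```python
def trace_vlans(edge_vlan, hops):
    """Return the (location, vlan) sequence a packet sees in one direction.

    edge_vlan: VLAN on the CE and PE attachment circuits (100 in the text).
    hops: ordered (nfvi_id, reserved_vlan) pairs for this direction.
    Assumption: each NFVI-PoP returns the packet on the VLAN it arrived on.
    """
    trace = [("ingress router", edge_vlan)]
    for nfvi_id, vlan in hops:
        # The SDN switch rewrites the tag before forwarding to the NFVI-PoP.
        trace.append((nfvi_id, vlan))
    # The final rewrite restores the edge VLAN, so routers never see others.
    trace.append(("egress router", edge_vlan))
    return trace


# ce->pe direction from the example: VLAN 400 reserved at nfvi1.
for location, vlan in trace_vlans(100, [("nfvi1", 400)]):
    print(f"{location}: vlan {vlan}")
```

Both ends of the trace carry VLAN 100, which is why the redirection stays invisible to the CE and PE routers.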

One NFVI-PoP can be shared by several clients, as long as VLANs are available.
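How VLANs might be handed out per NFVI-PoP can be modeled with a toy allocator. The sketch below is not WICM's code: the `VlanPool` class, its method names, the 400-499 range and the second service `service2` are all assumptions made for illustration.

```python
class VlanPool:
    """Toy VLAN allocator for a single NFVI-PoP (illustrative only)."""

    def __init__(self, first=400, last=499):  # the range is an assumption
        self.free = list(range(first, last + 1))
        self.reserved = {}  # vlan -> (ns_instance_id, direction)

    def reserve(self, ns_instance_id, direction):
        """Reserve the lowest free VLAN for one service and direction."""
        if not self.free:
            raise RuntimeError("no VLANs available on this NFVI-PoP")
        vlan = self.free.pop(0)
        self.reserved[vlan] = (ns_instance_id, direction)
        return vlan

    def release(self, ns_instance_id):
        """Return every VLAN held by a service to the free list."""
        held = [v for v, (owner, _) in self.reserved.items()
                if owner == ns_instance_id]
        for vlan in held:
            del self.reserved[vlan]
        self.free = sorted(self.free + held)


pools = {"nfvi1": VlanPool(), "nfvi2": VlanPool()}
# One VLAN per NFVI-PoP per direction, as in the two-step process.
print(pools["nfvi1"].reserve("service1", "ce_pe"))  # 400
print(pools["nfvi2"].reserve("service1", "pe_ce"))  # 400
print(pools["nfvi1"].reserve("service1", "pe_ce"))  # 401
# A second service sharing nfvi1 simply gets the next free VLAN.
print(pools["nfvi1"].reserve("service2", "ce_pe"))  # 402
```

Each pool is independent, so the same VLAN number can be in use at two different NFVI-PoPs at once.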

WICM uses OpenDaylight plus the VTN (Virtual Tenant Network) plugin to control the SDN switches and handle the low level redirection details.

WICM uses MariaDB or MySQL for persistence.

WICM is provided as a self-contained docker-compose file incorporating WICM, OpenDaylight and MariaDB. To run it, execute the following in the WICM folder:

    docker-compose build
    docker-compose up -d

Then you will need to initialize the database:

    curl -X DELETE WICM_IP:12891/reset_db

In a production deployment this option should be disabled.

WICM will be available on port 12891.

To build WICM on its own, use the wicm.dockerfile found in the WICM directory. Make sure to update the configuration file (wicm.ini).

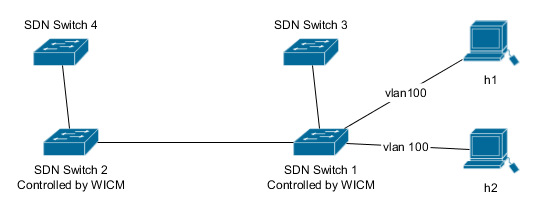

To test WICM you can use the provided Vagrantfile (demo/Vagrantfile). It will create a virtual machine containing WICM and a simple test network:

Switches 3 and 4 simulate the NFVI-PoPs; they are pre-programmed and not controlled by WICM. Hosts h1 and h2 simulate the CE and PE, respectively.

To start the demo, run the following in the demo directory:

    vagrant up

Once the machine is up, it can be accessed with:

    vagrant ssh

Enter the Mininet console and try a ping from h1 to h2:

    sudo screen -rx

    mininet> h1 ping h2
    PING 10.0.0.2 (10.0.0.2) 56(84) bytes of data.
    From 10.0.0.1 icmp_seq=1 Destination Host Unreachable
    From 10.0.0.1 icmp_seq=2 Destination Host Unreachable
    From 10.0.0.1 icmp_seq=3 Destination Host Unreachable

The ping fails because the NAP (Network Access Point) is not yet registered. To register it, issue the following command on the virtual machine:

    curl -X POST localhost:12891/nap \
      -H "Content-type: application/json" \
      -d '{"nap":{"mkt_id":"c1_nap1","client_mkt_id":"c1","switch":"openflow:1","ce_port":1,"pe_port":2,"ce_transport":{"type":"vlan","vlan_id":100},"pe_transport":{"type":"vlan","vlan_id":100}}}'

The payload in a more readable format:

    {
      "nap": {
        "mkt_id": "c1_nap1",
        "client_mkt_id": "c1",
        "switch": "openflow:1",
        "ce_port": 1,
        "pe_port": 2,
        "ce_transport": {
          "type": "vlan",
          "vlan_id": 100
        },
        "pe_transport": {
          "type": "vlan",
          "vlan_id": 100
        }
      }
    }

This means that client c1 has a NAP identified by c1_nap1 on SDN switch openflow:1. The CE is connected to port 1 of the switch with VLAN 100, and the PE is connected to port 2, also with VLAN 100. Only VLANs are supported at the moment. The id fields are assigned and used by the orchestrator (TeNOR).
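For scripted setups, the same registration could be issued from Python instead of curl. The following is a minimal sketch using only the standard library; the helper names `build_nap_payload` and `post_json` are hypothetical, and only the endpoint, header and field names come from the curl command above.

```python
import json
import urllib.request


def build_nap_payload(nap_id, client_id, switch, ce_port, pe_port,
                      ce_vlan, pe_vlan):
    """Build the JSON body for POST /nap (only VLAN transport is supported)."""
    return {
        "nap": {
            "mkt_id": nap_id,
            "client_mkt_id": client_id,
            "switch": switch,
            "ce_port": ce_port,
            "pe_port": pe_port,
            "ce_transport": {"type": "vlan", "vlan_id": ce_vlan},
            "pe_transport": {"type": "vlan", "vlan_id": pe_vlan},
        }
    }


def post_json(url, payload):
    """POST a JSON payload and return (HTTP status, response body as text)."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.status, response.read().decode("utf-8")


# Build and display the payload; nothing is sent at this point.
payload = build_nap_payload("c1_nap1", "c1", "openflow:1", 1, 2, 100, 100)
print(json.dumps(payload, indent=2))
```

With the demo running, `post_json("http://localhost:12891/nap", payload)` would send the registration. The response format of this endpoint is not described here, so the sketch returns the body as plain text.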

This allows WICM to configure the SDN switch so that the client site can access the Internet. Later, this will be the point where the redirection to the NFVI-PoP(s) occurs.

Now the ping will work.

    mininet> h1 ping h2
    PING 10.0.0.2 (10.0.0.2) 56(84) bytes of data.
    64 bytes from 10.0.0.2: icmp_seq=1 ttl=64 time=1.95 ms
    64 bytes from 10.0.0.2: icmp_seq=2 ttl=64 time=0.183 ms
    64 bytes from 10.0.0.2: icmp_seq=3 ttl=64 time=0.261 ms

Now that basic connectivity is up, redirection is the next step. First we register the NFVI-PoPs, in this case switches s3 and s4, which will circulate the packets in the assigned VLANs:

    curl -X POST localhost:12891/nfvi \
      -H "Content-type: application/json" \
      -d '{"nfvi":{"mkt_id":"nfvi1","switch":"openflow:1","port":3}}'

    curl -X POST localhost:12891/nfvi \
      -H "Content-type: application/json" \
      -d '{"nfvi":{"mkt_id":"nfvi2","switch":"openflow:2","port":1}}'

The payload of the first request in a more readable format:

    {
      "nfvi": {
        "mkt_id": "nfvi1",
        "switch": "openflow:1",
        "port": 3
      }
    }

This means that NFVI-PoP nfvi1 is available on SDN switch openflow:1, port 3. The NFVI-PoP has no client associated because it belongs to the service provider and may hold VNFs for several clients. The payload for the second request is similar.

We are now ready to start redirecting traffic from NAP c1_nap1 to the NFVI-PoPs. The first step of the process is the reservation request:

    curl -X POST localhost:12891/vnf-connectivity \
      -H "Content-type: application/json" \
      -d '{"service":{"ns_instance_id":"service1","client_mkt_id":"c1","nap_mkt_id":"c1_nap1","ce_pe":["nfvi1","nfvi2"],"pe_ce":["nfvi1"]}}'

The payload in a more readable format:

    {
      "service": {
        "ns_instance_id": "service1",
        "client_mkt_id": "c1",
        "nap_mkt_id": "c1_nap1",
        "ce_pe": ["nfvi1", "nfvi2"],
        "pe_ce": ["nfvi1"]
      }
    }

This requests a redirection from c1->nfvi1->nfvi2->Internet and from the Internet->nfvi1->c1.
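The relation between the `ce_pe` and `pe_ce` lists and the resulting paths can be made explicit with a small helper. This is a sketch for illustration only: `describe_chain` is a hypothetical name, and it assumes that each list gives the NFVI-PoPs in the order they are traversed, as the description above suggests.

```python
def describe_chain(request):
    """Render both directions of a vnf-connectivity request as hop chains."""
    service = request["service"]
    client = service["client_mkt_id"]
    # ce->pe: client first, then the listed NFVI-PoPs, then the Internet.
    upstream = "->".join([client] + service["ce_pe"] + ["Internet"])
    # pe->ce: the reverse direction uses its own, independent list.
    downstream = "->".join(["Internet"] + service["pe_ce"] + [client])
    return upstream, downstream


request = {
    "service": {
        "ns_instance_id": "service1",
        "client_mkt_id": "c1",
        "nap_mkt_id": "c1_nap1",
        "ce_pe": ["nfvi1", "nfvi2"],
        "pe_ce": ["nfvi1"],
    }
}
for chain in describe_chain(request):
    print(chain)
```

Because the two directions are listed separately, the return path does not have to mirror the outgoing one.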

Once we make this request to WICM it returns:

    {
      "allocated": {
        "pe_ce": [
          {
            "nfvi_id": "nfvi1",
            "transport": {
              "type": "vlan",
              "vlan_id": 400
            }
          },
          {
            "nfvi_id": "nfvi2",
            "transport": {
              "type": "vlan",
              "vlan_id": 400
            }
          }
        ],
        "ns_instance_id": "service1",
        "ce_pe": [
          {
            "nfvi_id": "nfvi1",
            "transport": {
              "type": "vlan",
              "vlan_id": 401
            }
          }
        ]
      }
    }

This means that VLAN 400@nfvi1 for direction ce->pe and VLANs 400@nfvi2 and 401@nfvi1 for direction pe->ce are reserved for the request service1. Keep in mind that at this point no redirection is occurring. The VLANs are reserved so that TeNOR can instantiate the VNFs at nfvi1/nfvi2 correctly, ready to receive the traffic on the assigned VLANs.
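An orchestrator-side script would typically turn this response into a lookup of reserved VLANs per direction. The sketch below shows one way to do that; `reserved_vlans` is a hypothetical helper, and the sample is a trimmed, hand-written response in the same shape as the one shown above, not captured output.

```python
import json


def reserved_vlans(response_text):
    """Map each direction to its ordered (nfvi_id, vlan_id) reservations."""
    allocated = json.loads(response_text)["allocated"]
    result = {}
    for direction in ("ce_pe", "pe_ce"):
        result[direction] = [
            (hop["nfvi_id"], hop["transport"]["vlan_id"])
            for hop in allocated.get(direction, [])
        ]
    return result


# Trimmed sample: a single pe->ce reservation, no ce->pe entries.
sample = """
{
  "allocated": {
    "ns_instance_id": "service1",
    "ce_pe": [],
    "pe_ce": [
      {"nfvi_id": "nfvi2", "transport": {"type": "vlan", "vlan_id": 400}}
    ]
  }
}
"""
print(reserved_vlans(sample))
```

The VNFs for each direction can then be attached to the VLAN returned for their NFVI-PoP before redirection is enabled.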

Once the VNFs are ready, TeNOR requests WICM to start the traffic redirection. As mentioned before, the NFVI-PoPs are already configured, so the redirection can be enabled immediately:

    curl -X PUT localhost:12891/vnf-connectivity/service1

To confirm that packets are passing through nfvi1 while h1 is pinging h2, run the following on the virtual machine:

    watch sudo ovs-ofctl -Oopenflow13 dump-flows s3

Notice that the n_packets counters increase once the redirection is up.
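The same check can be automated by summing the `n_packets` counters from two successive dumps. The sketch below is an illustration only: `total_packets` is a hypothetical helper, and the two sample strings are hand-written stand-ins that keep just the counter field, not real `ovs-ofctl` output.

```python
import re


def total_packets(dump_flows_output):
    """Sum every n_packets=<number> counter found in the given text."""
    counters = re.findall(r"n_packets=(\d+)", dump_flows_output)
    return sum(int(value) for value in counters)


# Hand-written stand-ins for two dumps taken a few seconds apart.
before = "flow A: n_packets=12\nflow B: n_packets=30\n"
after = "flow A: n_packets=15\nflow B: n_packets=33\n"

delta = total_packets(after) - total_packets(before)
print("packets seen between dumps:", delta)
```

A positive difference while h1 is pinging h2 indicates that traffic is reaching the switch; a difference of zero suggests the redirection is not active.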

Finally, to stop the redirection use:

    curl -X DELETE localhost:12891/vnf-connectivity/service1
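The enable and stop calls differ only in their HTTP method, which a short Python sketch makes visible. The helper names (`enable_request`, `stop_request`, `send`) are hypothetical; the URLs and methods are the ones used by the curl commands above. The requests are only built and printed here, not sent.

```python
import urllib.request

BASE_URL = "http://localhost:12891"  # address used by the demo commands


def enable_request(ns_instance_id, base_url=BASE_URL):
    """Build the PUT request that starts redirection for a reserved service."""
    return urllib.request.Request(
        f"{base_url}/vnf-connectivity/{ns_instance_id}", method="PUT"
    )


def stop_request(ns_instance_id, base_url=BASE_URL):
    """Build the DELETE request that stops redirection for a service."""
    return urllib.request.Request(
        f"{base_url}/vnf-connectivity/{ns_instance_id}", method="DELETE"
    )


def send(request):
    """Send a prepared request and return (HTTP status, response body)."""
    with urllib.request.urlopen(request) as response:
        return response.status, response.read().decode("utf-8")


for request in (enable_request("service1"), stop_request("service1")):
    print(request.get_method(), request.full_url)
```

With the demo running, `send(enable_request("service1"))` would correspond to the PUT shown earlier and `send(stop_request("service1"))` to the DELETE.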

Check all NAPs:

    curl localhost:12891/nap

Delete a NAP:

    curl -X DELETE localhost:12891/nap/<NAP_ID>

Check all NFVI-PoPs:

    curl localhost:12891/nfvi

Delete an NFVI-PoP:

    curl -X DELETE localhost:12891/nfvi/<NFVI_ID>

Check all redirections:

    curl localhost:12891/vnf-connectivity