Auto Label Anything is an open-source labeling tool designed to enhance and extend the capabilities of Meta's Segment Anything Model 2 (SAM2). It provides an intuitive interface for efficient video annotation. As the TAVTechnologies R&D Team, we started this side project to speed up annotation for our own projects. Now we want to share it with the community and continue developing it. We plan to add more features and improve the tool with the support of open-source contributors.

- 🎥 Automatically segment videos using SAM2 with just a few clicks.

- 👁️ Real-time visualization of segmentation results.

- 📦 Export labeled data in various computer-vision task formats (bbox, polygon, mask).

- 👥 Support for multiple concurrent users.

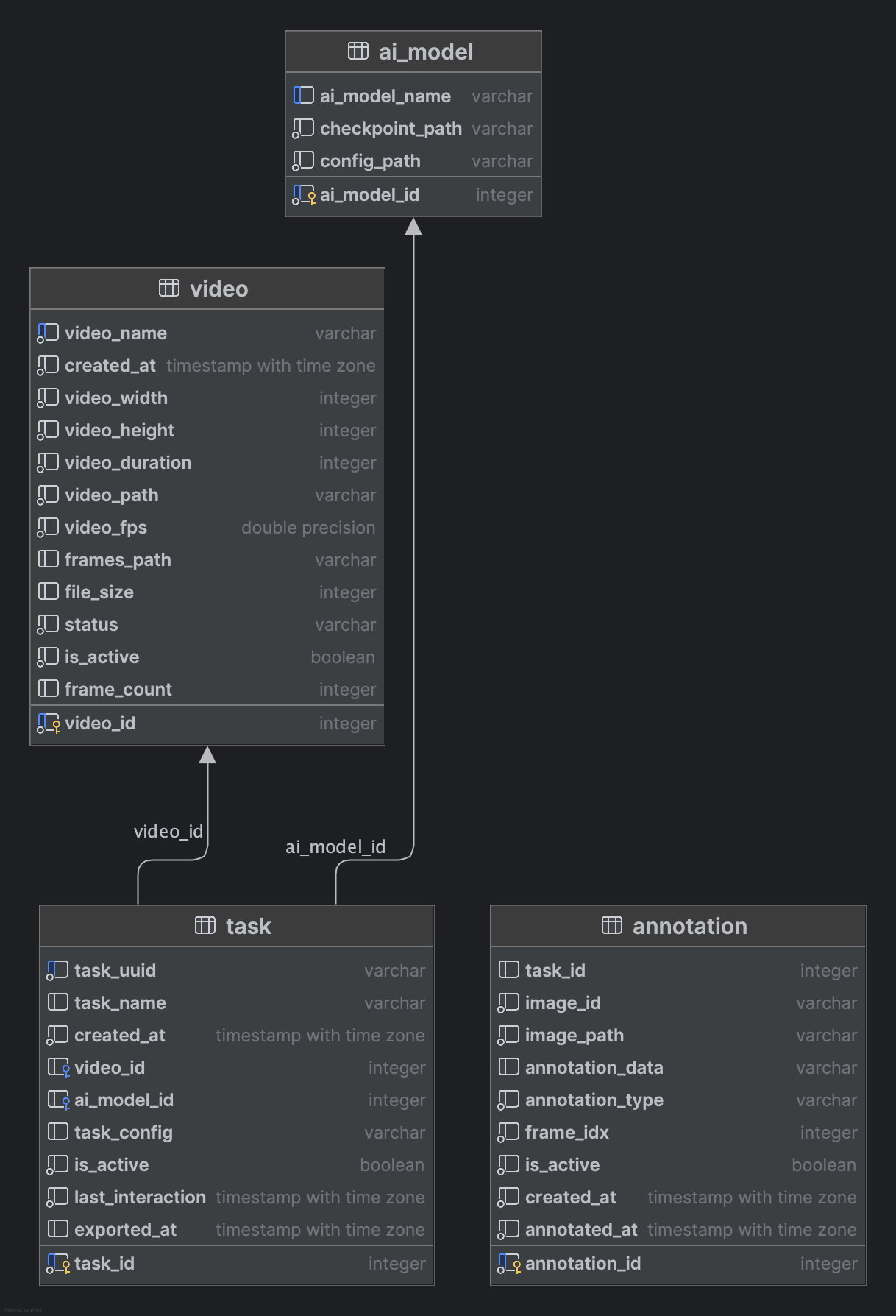

- 🐳 Fully set up with Docker Compose, including Redis and PostgreSQL.

- ⚙️ Parallel processing for multiple segmentation tasks (workers).

Auto Label Anything lets you annotate objects in a video sequence by clicking just a few points on them. The main goal of the software is pre-annotation. If you:

- Need faster pre-annotations on static or large objects

- Want to build quick PoCs by annotating several objects in a video

- Need bbox or polygon annotations for your object detection or segmentation tasks

- And love building a small project from scratch :)

Then Auto Label Anything is the right tool to contribute to or use directly in your workflow.
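To make the relationship between mask and bbox annotations concrete, here is a minimal sketch of how a bounding box can be derived from a binary segmentation mask. It is an illustration only, not the tool's export code: the function name `mask_to_bbox`, the list-of-lists mask representation, and the `(x_min, y_min, width, height)` layout are all assumptions made for this example.

```python
from typing import List, Optional, Tuple

# Rows of 0/1 values; a stand-in for a real mask array (assumption for this sketch).
Mask = List[List[int]]


def mask_to_bbox(mask: Mask) -> Optional[Tuple[int, int, int, int]]:
    """Return (x_min, y_min, width, height) of the nonzero pixels, or None if the mask is empty."""
    # Indices of rows that contain at least one foreground pixel.
    rows = [y for y, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    # Column indices of every foreground pixel.
    cols = [x for row in mask for x, value in enumerate(row) if value]
    x_min, x_max = min(cols), max(cols)
    y_min, y_max = rows[0], rows[-1]
    return (x_min, y_min, x_max - x_min + 1, y_max - y_min + 1)


example = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
print(mask_to_bbox(example))  # (1, 1, 3, 2)
```

The same idea applies per frame and per object: a mask carries the most detail, and a bbox is a lossy summary of it that is often enough for object detection datasets.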

The UI is very similar to Meta's original SAM2 UI. However, ours supports more than three objects (the original is limited to three), along with video selection, export, and selection among predefined SAM2 models.

To start using it, you need to follow these steps:

- Create a new folder under the volume mounted in the docker-compose.yaml file. This folder will store your videos and annotations.

mkdir -p ./autolabel_data/user_videos

- Import the api.json file into Postman, Hoppscotch, or any other API tool to access the API collection.

- Send a POST request to load videos into the system. Use the following endpoint and payload (the path must be accessible from inside the container):

POST http://localhost:8000/api/videos

Content-Type: application/json

{

"video_path": "/path/to/your/video.mp4",

"video_name": "your_video_name"

}

- The video status will be "ready" when the initial video processing is complete. You can check it directly in the database or send another request. Once it is ready, you can start annotating the video.

To check the video status, you can use the following endpoint:

GET http://localhost:8000/api/videos/status/{video_id}- Access the UI by navigating to

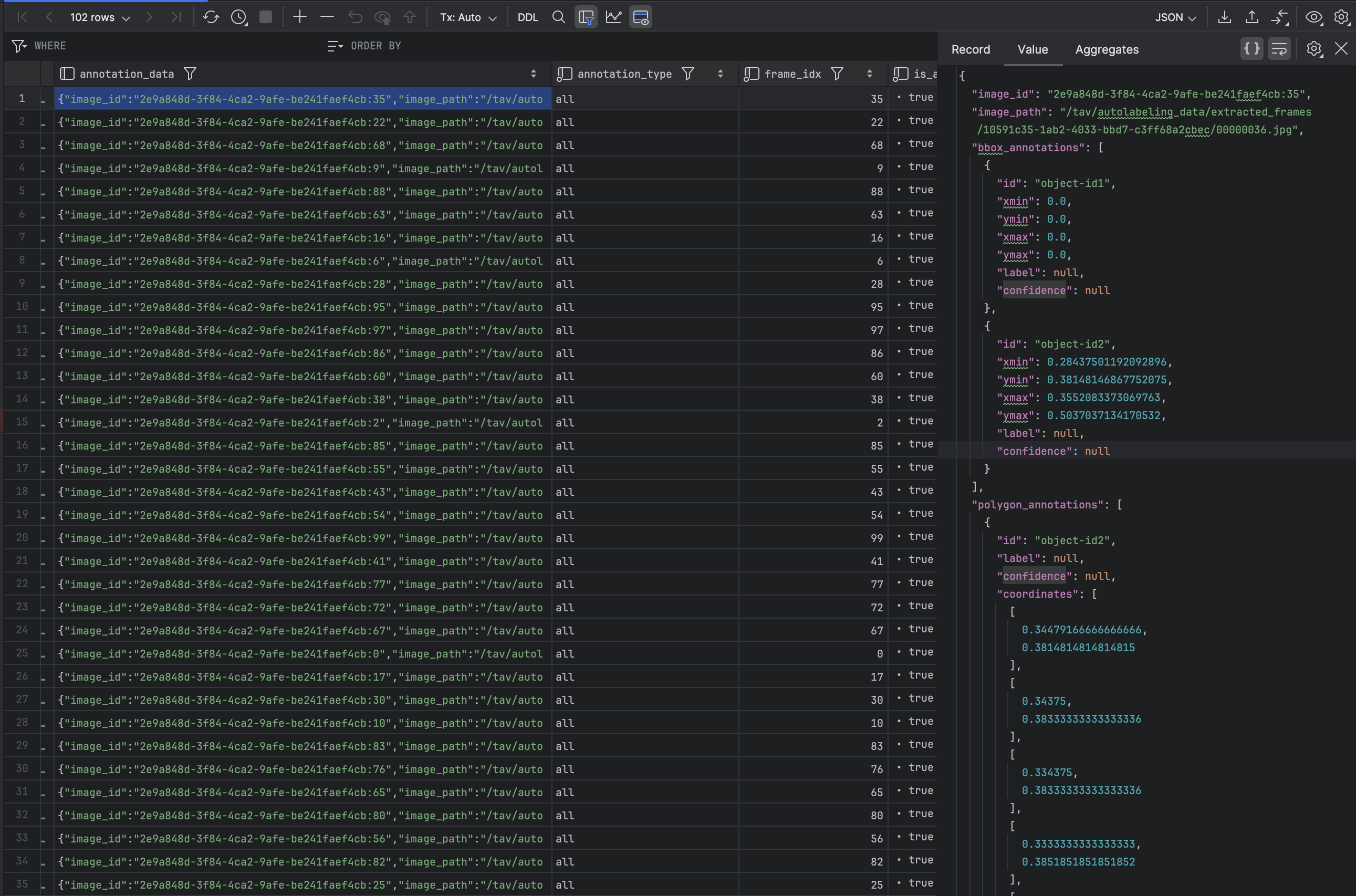

http://localhost:3000in your web browser. You can start the annotation process by selecting the video you uploaded and using the provided tools to annotate objects in the video. - After export, get your annotations from db. (Note: Visualization and UI enabled editing will be added in the future.)
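If you prefer a script over Postman or Hoppscotch, the two API calls from the steps above can be sketched with the Python standard library. Only the endpoints and the request payload come from this README. The helper names are hypothetical, and because the response format is not documented here, the sketch assumes the API answers with JSON and leaves the readiness check to a caller-supplied `is_ready` function.

```python
import json
import time
import urllib.request

BASE_URL = "http://localhost:8000"  # API address used in the steps above


def build_add_video_request(video_path: str, video_name: str) -> urllib.request.Request:
    """Build the POST /api/videos request without sending it."""
    payload = json.dumps({"video_path": video_path, "video_name": video_name}).encode("utf-8")
    return urllib.request.Request(
        f"{BASE_URL}/api/videos",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_status_request(video_id: str) -> urllib.request.Request:
    """Build the GET /api/videos/status/{video_id} request without sending it."""
    return urllib.request.Request(f"{BASE_URL}/api/videos/status/{video_id}", method="GET")


def send(request: urllib.request.Request, timeout: float = 30.0):
    """Send a request and decode the body (assumption: the API responds with JSON)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def wait_until_ready(video_id, is_ready, interval=2.0, attempts=30, fetch=send):
    """Poll the status endpoint until is_ready(body) is true or attempts run out.

    is_ready is supplied by the caller because the response fields are not
    documented in this README.
    """
    for _ in range(attempts):
        body = fetch(build_status_request(video_id))
        if is_ready(body):
            return body
        time.sleep(interval)
    raise TimeoutError(f"video {video_id} was not ready after {attempts} checks")
```

To use it, call `send(build_add_video_request("/path/to/your/video.mp4", "your_video_name"))`, read the video ID from the printed response, and pass it to `wait_until_ready` together with a check that matches the actual status field returned by your deployment.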

This project uses custom NVIDIA/CUDA installations and requires a GPU to run. If you prefer, you can host the service submodule on a cloud provider while running the other components on your local server or PC.

Note: CPU and MPS support will be added later.
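Before building the containers, it can help to confirm that the host actually sees an NVIDIA GPU. The following is a small pre-flight sketch, not part of the project: the function name `list_nvidia_gpus` is hypothetical, and it only checks what `nvidia-smi` reports on the host. It does not verify that Docker is configured to pass the GPU through to containers.

```python
import shutil
import subprocess
from typing import List


def list_nvidia_gpus() -> List[str]:
    """Return GPU names reported by nvidia-smi, or an empty list if none are visible."""
    # nvidia-smi ships with the NVIDIA driver; if it is missing, assume no usable GPU.
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            timeout=10,
            check=True,
        )
    except (subprocess.SubprocessError, OSError):
        # Covers non-zero exit codes, timeouts, and failures to launch the tool.
        return []
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


gpus = list_nvidia_gpus()
print("GPUs:", gpus if gpus else "none detected")
```

An empty result means the host driver is missing or no GPU is visible, in which case hosting the service submodule on a GPU-equipped machine, as described above, is the alternative.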

To use Auto Label Anything, follow these steps:

- Clone the Repository:

# use --recurse-submodules to clone the submodules as well

git clone --recurse-submodules https://github.com/TAVTechnologies-Research/AutoLabelAnything.git

- Navigate to the Project Directory:

cd AutoLabelAnything

- Run the Docker Compose project with default settings:

docker compose up --build # -d if you want to run in detached mode

To configure your database and credentials, follow these steps:

- Update the credentials in ./docker-compose.yaml with your preferred values.

- Create .env files for each project and update them with your credentials:

cp ./autolabel-anything-api/.env.example ./autolabel-anything-api/.env

cp ./autolabel-anything-sam2-services/.env.example ./autolabel-anything-sam2-services/.env

cp ./autolabel-anything-ui/.env.example ./autolabel-anything-ui/.env

Planned features:

- Video upload support.

- UI support for bbox prompt.

- Faster data transfer on UI side using bbox and polygon visualizations.

- Support for more computer vision tasks.

- Support for other open-source labeling tools.

- Support for zero-shot object detection models.

Contributions are always welcome! The project will open to contributions once we release our contribution guidelines and a code of conduct for this, our first community project. Please stay tuned for updates.

This project is licensed under the Apache 2.0 License. See the LICENSE file for details.

Important Note: This project utilizes Meta's Segment Anything Model 2 (SAM2), which is licensed under the Apache 2.0 License. Additionally, PostgreSQL and Redis have their own license agreements. Users who want to use this software must comply with these licenses. TAV Technologies assumes no responsibility for any issues arising from the use of these third-party components. This is not a commercial product, just a side project that uses open-source software to show other use cases and target community needs.

We would like to thank the following contributors for their efforts (in alphabetical order):

Deniz Peker

Gokhan Koc

Ismail Alp Aydemir

Ozgur Kisa

Pursenk Aras

Zana Simsek

Below are the references that were instrumental in developing this project:

- META Segment Anything 2
  - Official SAM2 Repository

- PostgreSQL
  - Official Website

- Redis
  - Official Website

- Demo Video
  - Video Used In Demo

- Helper Websites You Can Use