The official PyTorch implementation of the paper 'Deep Learning-based Time Series Forecasting' by Xiaobao Song, Liwei Deng, Hao Wang*, Yaoan Zhang, Yuxin He and Wenming Cao (*Correspondence). PDF

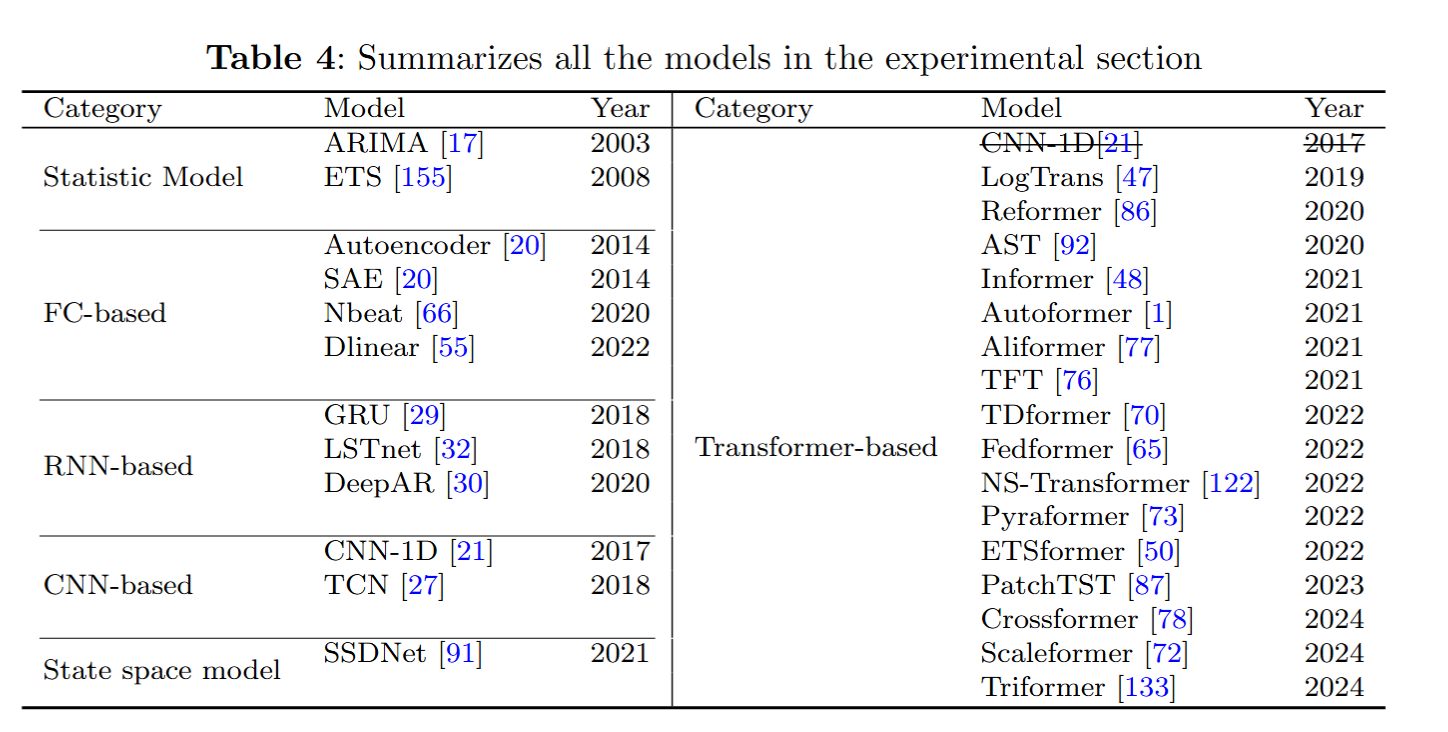

❗️❗️❗️Tips: Due to our carelessness, we incorrectly classified the CNN-1D model under the Transformer-based category in the paper. We sincerely apologize for this mistake.

The following are the baseline models included in this project (continuously updated):

- ARIMA PDF (IEEE Transactions on Power Systems 2003)

- ETS PDF (Journal of statistical software 2008)

- Autoencoder PDF (Neurocomputing 2014)

- SAE PDF (IEEE Transactions on Intelligent Transportation Systems 2014)

- CNN-1D PDF (IEEE Symposium Series on Computational Intelligence 2017)

- TCN PDF Code (ArXiv 2018)

- GRU PDF (Artificial Neural Networks and Machine Learning–ICANN 2018)

- Nbeat PDF (Journal of biomedical informatics 2020)

- LSTnet PDF Code (ACM SIGIR 2018)

- LogTrans PDF (NIPS 2019)

- DeepAR PDF (NIPS 2020)

- AST PDF Code (NIPS 2020)

- Reformer PDF (ICLR 2020)

- SSDNet PDF (IEEE International Conference on Data Mining 2021)

- Informer PDF Code (AAAI 2021)

- Autoformer PDF Code (NIPS 2021)

- Aliformer PDF (ArXiv 2021)

- TFT PDF Code (ArXiv 2021)

- TDformer PDF Code (ArXiv 2022)

- Dlinear PDF (AAAI 2022)

- Fedformer PDF Code (ICML 2022)

- NS-Transformer PDF Code (NIPS 2022)

- Pyraformer PDF Code (ICLR 2022)

- ETSformer PDF Code (ArXiv 2022)

- PatchTST PDF Code (ICLR 2023)

- Crossformer PDF Code (ICLR 2023)

- Scaleformer PDF Code (ICLR 2024)

- Triformer PDF (ArXiv 2024)

- ......

📝Install dependencies [Back to Top]

Install the required packages:

pip install -r requirements.txt

👉Data Preparation [Back to Top]

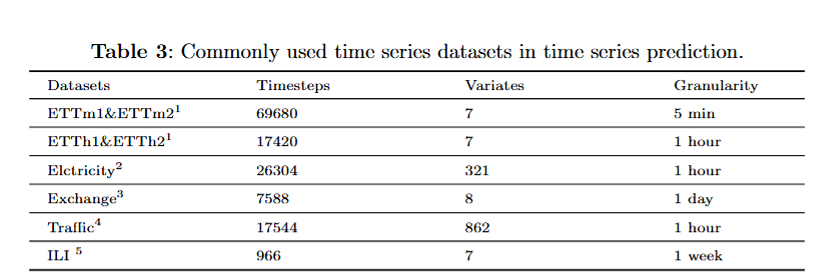

We follow the same settings as previous work. The datasets for all six benchmarks can be obtained from [Autoformer]. The datasets are placed in the datasets folder of our project, with the following directory structure:

\datasets

├─electricity

│

├─ETT-small

│

├─exchange_rate

│

├─illness

│

└─traffic
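Before launching experiments, it can help to confirm that the layout above is in place. The following is a minimal sketch, not part of the project itself: the helper name `missing_dataset_dirs` is hypothetical, and the default root `datasets` assumes the check is run from the project root. The sub-folder names are the ones shown in the tree.

```python
from pathlib import Path

# Sub-folder names taken from the directory tree above.
EXPECTED_DATASET_DIRS = [
    "electricity",
    "ETT-small",
    "exchange_rate",
    "illness",
    "traffic",
]


def missing_dataset_dirs(root="datasets"):
    """Return the expected sub-folders that do not exist under `root`.

    `root` defaults to "datasets", which assumes the current working
    directory is the project root (an assumption, adjust as needed).
    """
    root_path = Path(root)
    return [
        name
        for name in EXPECTED_DATASET_DIRS
        if not (root_path / name).is_dir()
    ]
```

Calling `missing_dataset_dirs()` returns an empty list when every expected folder is present; any names it returns still need to be downloaded and placed under the datasets folder.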

🚀Run Experiment[Back to Top]

All experiment scripts for the benchmarks are provided in the ./scripts folder. To reproduce the results, run the following shell scripts:

./scripts/ETTh1.sh

./scripts/ETTh2.sh

./scripts/ETTm1.sh

./scripts/ETTm2.sh

./scripts/exchange.sh

./scripts/illness.sh

./scripts/traffic.sh

For any questions or feedback, feel free to contact Xiaobao Song or Liwei Deng.
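For the Run Experiment step, the benchmark scripts listed above can also be launched one after another from Python. This is only a sketch under stated assumptions: the helper names `benchmark_commands` and `run_benchmarks` are hypothetical, the scripts are assumed to be runnable with `bash`, and the working directory is assumed to be the project root. The script names are the ones listed above.

```python
import subprocess
from pathlib import Path

# Script names as listed in the Run Experiment section.
BENCHMARK_SCRIPTS = [
    "ETTh1.sh",
    "ETTh2.sh",
    "ETTm1.sh",
    "ETTm2.sh",
    "exchange.sh",
    "illness.sh",
    "traffic.sh",
]


def benchmark_commands(scripts_dir="./scripts"):
    """Build one command per benchmark script (assumes `bash` is available)."""
    return [["bash", str(Path(scripts_dir) / name)] for name in BENCHMARK_SCRIPTS]


def run_benchmarks(scripts_dir="./scripts", dry_run=True):
    """Print each command and, unless `dry_run` is True, execute it.

    With dry_run=False, `check=True` makes the loop stop at the first
    script that exits with a non-zero status.
    """
    for cmd in benchmark_commands(scripts_dir):
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```

`run_benchmarks()` only prints the commands by default; pass `dry_run=False` to actually execute them in sequence.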

If you find this code useful in your research or applications, please kindly cite:

@article{song2024deep,

title={Deep learning-based time series forecasting},

author={Song, Xiaobao and Deng, Liwei and Wang, Hao and Zhang, Yaoan and He, Yuxin and Cao, Wenming},

journal={Artificial Intelligence Review},

volume={58},

number={1},

pages={23},

year={2024},

publisher={Springer}

}

Song, X., Deng, L., Wang, H. et al. Deep learning-based time series forecasting. Artif Intell Rev 58, 23 (2025). https://doi.org/10.1007/s10462-024-10989-8

We express our gratitude to the following members for their contributions to the project, completed under the guidance of Professor Hao Wang:

Xiaobao Song, Liwei Deng, Yaoan Zhang, Junhao Tan, Hongbo Qiu, Xinhe Niu