Semantic image segmentation is the task of assigning every pixel in an image a class label from a predefined set of classes.
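
Concretely, a segmentation network outputs one score per class for every pixel, and the predicted label map is the per-pixel argmax of those scores. Below is a minimal NumPy sketch. It assumes the scores are laid out as `(num_classes, height, width)`, and it uses hand-written toy scores in place of real network output. The helper `scores_to_label_map` is hypothetical.

```python
import numpy as np


def scores_to_label_map(scores: np.ndarray) -> np.ndarray:
    """Collapse (num_classes, H, W) class scores into an (H, W) map of class indices."""
    if scores.ndim != 3:
        raise ValueError("expected scores with shape (num_classes, H, W)")
    # For each pixel, pick the index of the class with the highest score.
    return scores.argmax(axis=0)


# Toy example: 3 classes on a 2x2 image.
scores = np.array([
    [[0.90, 0.10], [0.20, 0.30]],  # class 0
    [[0.05, 0.80], [0.10, 0.30]],  # class 1
    [[0.05, 0.10], [0.70, 0.40]],  # class 2
])
label_map = scores_to_label_map(scores)  # label_map is [[0, 1], [2, 2]]
```
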

In this tutorial, you will:

- Perform inference with 10 well-known semantic segmentors

- Fine-tune semantic segmentors on a custom dataset

- Design and train your own semantic segmentation model

This work is based on MMSegmentation, the OpenMMLab semantic segmentation toolbox and benchmark.

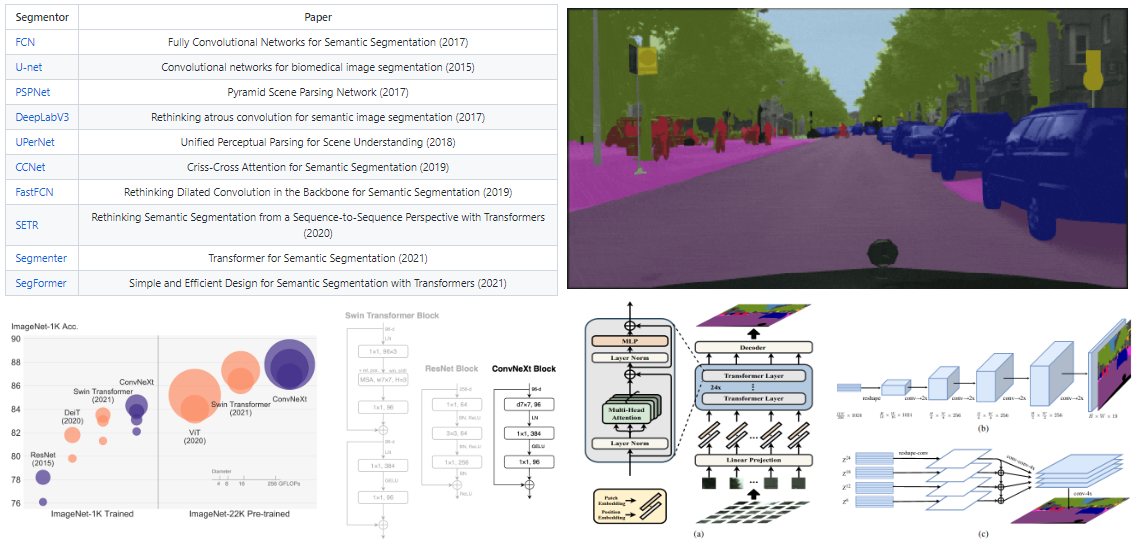

| Segmentor | Paper |
|---|---|
| FCN | Fully Convolutional Networks for Semantic Segmentation (2017) |
| U-Net | Convolutional Networks for Biomedical Image Segmentation (2015) |
| PSPNet | Pyramid Scene Parsing Network (2017) |
| DeepLabV3 | Rethinking Atrous Convolution for Semantic Image Segmentation (2017) |
| UPerNet | Unified Perceptual Parsing for Scene Understanding (2018) |
| CCNet | Criss-Cross Attention for Semantic Segmentation (2019) |
| FastFCN | Rethinking Dilated Convolution in the Backbone for Semantic Segmentation (2019) |
| SETR | Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers (2020) |
| Segmenter | Transformer for Semantic Segmentation (2021) |
| SegFormer | Simple and Efficient Design for Semantic Segmentation with Transformers (2021) |

Here is how to load a pretrained model, perform inference and visualize the results with the MMSegmentation 0.x API:

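```python
from mmseg.apis import inference_segmentor, init_segmentor

# Placeholders: use a matching config/checkpoint pair from the MMSegmentation model zoo
config, checkpoint = 'path/to/config.py', 'path/to/checkpoint.pth'
img, m_name = 'path/to/image.jpg', 'PSPNet'  # input image and a display name for the model
model = init_segmentor(config, checkpoint, device='cuda:0')  # build the model and load weights
result = inference_segmentor(model, img)  # list with one (H, W) label map per input image
model.show_result(img, result, out_file='result.jpg', win_name=m_name)  # save the colored overlay
```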
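
`show_result` draws the predicted mask on top of the input image. The sketch below reproduces the idea in plain NumPy, so it runs without MMSegmentation: each class index is looked up in a color palette and then alpha-blended with the image. It assumes, as in MMSegmentation 0.x, that `result[0]` is an `(H, W)` array of class indices. `overlay_label_map` and `class_pixel_fractions` are hypothetical helpers, not MMSegmentation functions, and the 0.5 opacity default is this sketch's own choice.

```python
import numpy as np


def overlay_label_map(img, label_map, palette, opacity=0.5):
    """Blend a color-coded label map onto an image.

    img: (H, W, 3) uint8 image; label_map: (H, W) class indices;
    palette: (num_classes, 3) colors, one row per class.
    """
    palette = np.asarray(palette, dtype=np.uint8)
    color_seg = palette[label_map]  # (H, W, 3): the color of each pixel's class
    blended = img.astype(np.float64) * (1 - opacity) + color_seg * opacity
    return blended.round().astype(np.uint8)


def class_pixel_fractions(label_map, num_classes):
    """Fraction of pixels assigned to each class."""
    counts = np.bincount(label_map.ravel(), minlength=num_classes)
    return counts / label_map.size


# Toy usage: a flat gray 2x2 image, two classes (black and light gray).
img = np.full((2, 2, 3), 100, dtype=np.uint8)
label_map = np.array([[0, 1], [1, 1]])
out = overlay_label_map(img, label_map, palette=[[0, 0, 0], [200, 200, 200]])
fractions = class_pixel_fractions(label_map, num_classes=2)
# out[0, 0] is [50, 50, 50], out[0, 1] is [150, 150, 150]; fractions is [0.25, 0.75]
```

With a real prediction, `label_map` would be `result[0]` and `img` the loaded input image.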

| Segmentor | Result |
|---|---|
| FCN |  |
| U-Net |  |
| PSPNet |  |
| DeepLabV3 |  |
| UPerNet |  |
| CCNet |  |
| FastFCN |  |
| SETR |  |
| Segmenter |  |
| SegFormer |  |

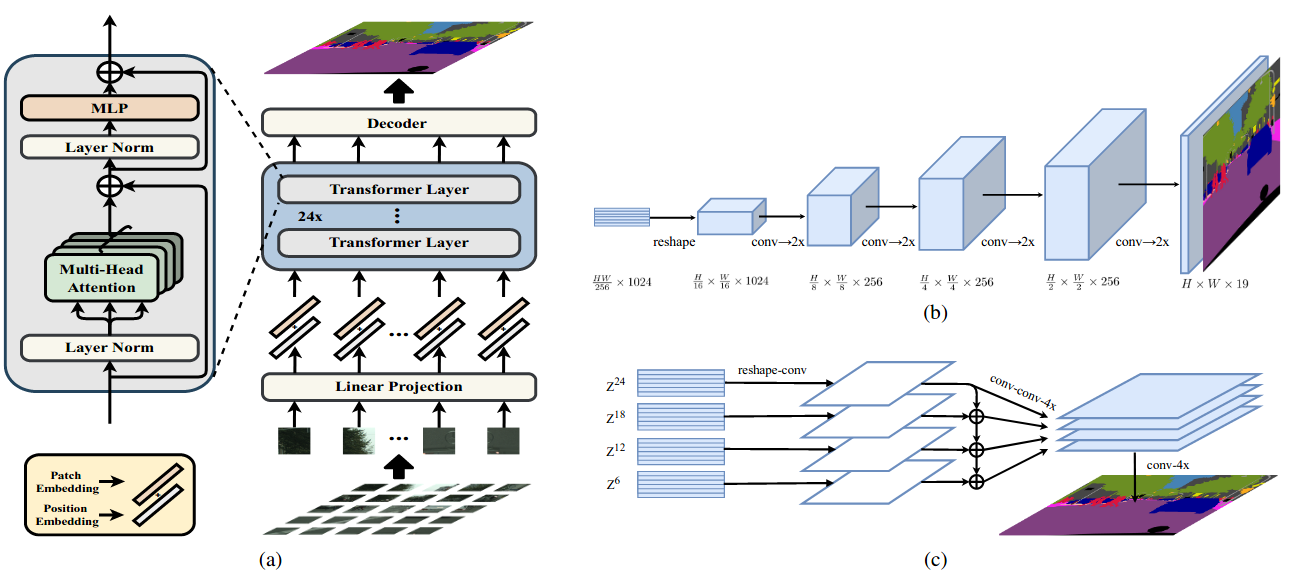

- Select a semantic segmentation model: SETR (SEgmentation TRansformer).

- Add a new dataset class: Scene Understanding Datasets

- Create a config file.

- Conduct training and evaluation.
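
MMSegmentation configs are plain Python files, so creating a config file comes down to assigning dicts. Below is a minimal sketch of the dataset and schedule part of such a config in the 0.x style. The dataset class name `SceneUnderstandingDataset`, the directory names, the class count and the iteration numbers are all hypothetical placeholders. The fragment assumes it sits below a `_base_` line that pulls in a SETR model config.

```python
# Hypothetical MMSegmentation 0.x-style config fragment for fine-tuning on a custom dataset.
# In a real config this sits below a `_base_ = [...]` line that pulls in a SETR model config.
dataset_type = 'SceneUnderstandingDataset'  # placeholder: name the new dataset class is registered under
data_root = 'data/scene_understanding'      # placeholder path
num_classes = 8                             # placeholder: number of classes in the custom dataset

# ImageNet mean/std, a common default for pretrained backbones.
img_norm_cfg = dict(mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)
crop_size = (512, 512)

train_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(type='LoadAnnotations'),
    dict(type='Resize', img_scale=(2048, 512), ratio_range=(0.5, 2.0)),
    dict(type='RandomCrop', crop_size=crop_size, cat_max_ratio=0.75),
    dict(type='RandomFlip', prob=0.5),
    dict(type='PhotoMetricDistortion'),
    dict(type='Normalize', **img_norm_cfg),
    dict(type='Pad', size=crop_size, pad_val=0, seg_pad_val=255),  # 255 = ignored label
    dict(type='DefaultFormatBundle'),
    dict(type='Collect', keys=['img', 'gt_semantic_seg']),
]
test_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(
        type='MultiScaleFlipAug',
        img_scale=(2048, 512),
        flip=False,
        transforms=[
            dict(type='Resize', keep_ratio=True),
            dict(type='Normalize', **img_norm_cfg),
            dict(type='ImageToTensor', keys=['img']),
            dict(type='Collect', keys=['img']),
        ],
    ),
]

data = dict(
    samples_per_gpu=2,
    workers_per_gpu=2,
    train=dict(type=dataset_type, data_root=data_root,
               img_dir='images/train', ann_dir='annotations/train', pipeline=train_pipeline),
    val=dict(type=dataset_type, data_root=data_root,
             img_dir='images/val', ann_dir='annotations/val', pipeline=test_pipeline),
    test=dict(type=dataset_type, data_root=data_root,
              img_dir='images/val', ann_dir='annotations/val', pipeline=test_pipeline),
)

# Only the class count of the decode head changes here; if the base config defines
# auxiliary heads, they need the same num_classes.
model = dict(decode_head=dict(num_classes=num_classes))

load_from = None  # set to a pretrained SETR checkpoint path to fine-tune instead of training from scratch
runner = dict(type='IterBasedRunner', max_iters=20000)
checkpoint_config = dict(by_epoch=False, interval=2000)
evaluation = dict(interval=2000, metric='mIoU')
```

In MMSegmentation 0.x the dataset class itself is typically a `CustomDataset` subclass registered with `@DATASETS.register_module()` under the same name used for `dataset_type`.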

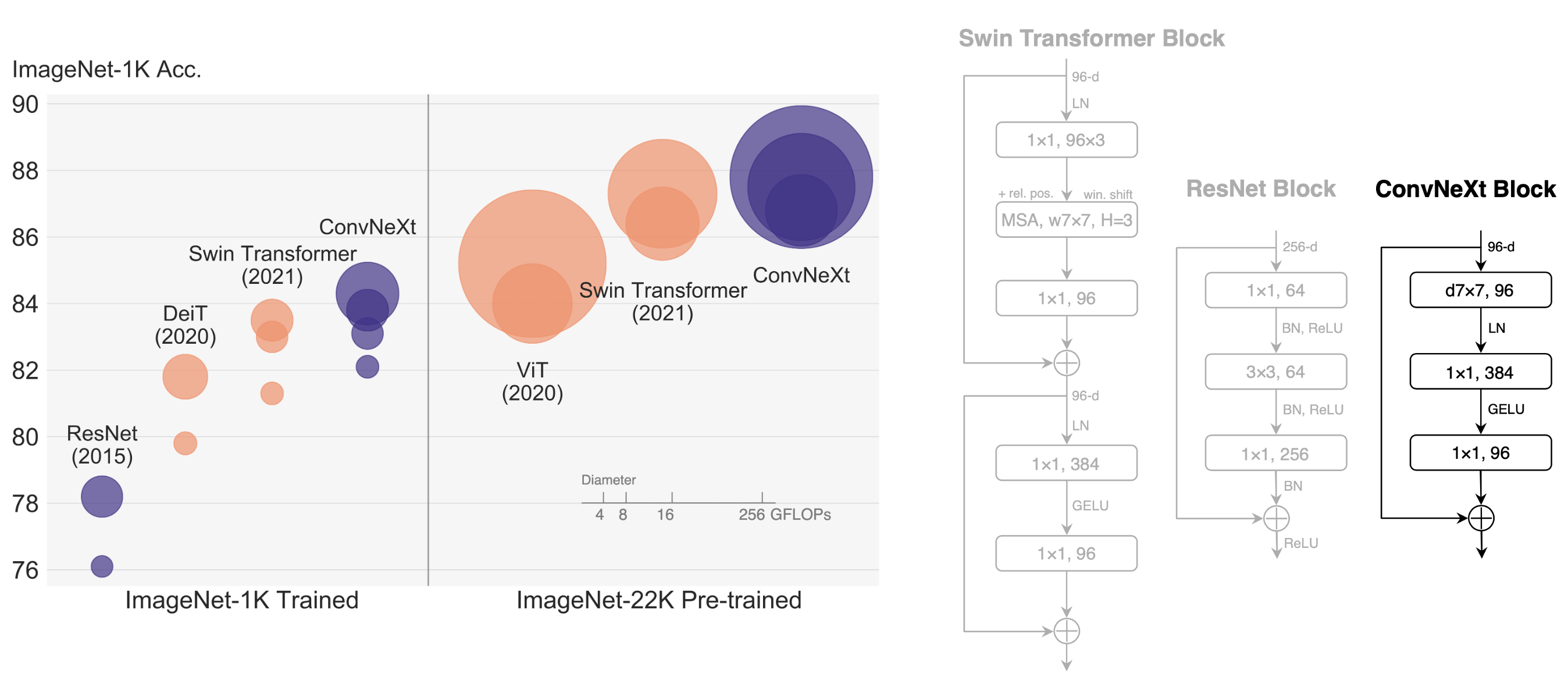

- Select a semantic segmentation model: UPerNet (Unified Perceptual Parsing for Scene Understanding).

- Replace the ResNet backbone with a new one: ConvNeXt (A ConvNet for the 2020s).

- Configure the heads to match the new backbone.

- Add a new dataset class: Scene Understanding Datasets

- Conduct training and evaluation.
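
Swapping the backbone is mostly a config change. The backbone entry is replaced, and the heads' `in_channels` must match the widths of the backbone stages they read. Below is a sketch in MMSegmentation 0.x style. It assumes ConvNeXt is provided by MMClassification and referenced as `mmcls.ConvNeXt`. The stage widths are those reported in the ConvNeXt paper. `num_classes`, `drop_path_rate` and the commented-out checkpoint path are placeholders to adapt.

```python
# Hypothetical MMSegmentation 0.x-style config fragment: UPerNet with a ConvNeXt backbone.
# Assumes ConvNeXt comes from MMClassification and is registered as 'mmcls.ConvNeXt'.
custom_imports = dict(imports='mmcls.models', allow_failed_imports=False)

arch = 'base'
# Output width of each ConvNeXt stage (ConvNeXt paper); the heads must match these.
convnext_channels = {
    'tiny': [96, 192, 384, 768],
    'small': [96, 192, 384, 768],
    'base': [128, 256, 512, 1024],
    'large': [192, 384, 768, 1536],
}
stage_channels = convnext_channels[arch]
num_classes = 150  # placeholder: set to the number of classes in your dataset
norm_cfg = dict(type='SyncBN', requires_grad=True)

model = dict(
    type='EncoderDecoder',
    backbone=dict(
        type='mmcls.ConvNeXt',
        arch=arch,
        out_indices=[0, 1, 2, 3],     # expose all four stages to the decode head
        drop_path_rate=0.4,           # placeholder regularization strength
        layer_scale_init_value=1.0,
        gap_before_final_norm=False,  # keep spatial feature maps instead of pooled vectors
        # init_cfg=dict(type='Pretrained', checkpoint='path/to/convnext.pth', prefix='backbone.'),
    ),
    decode_head=dict(
        type='UPerHead',
        in_channels=stage_channels,   # one entry per backbone stage
        in_index=[0, 1, 2, 3],
        pool_scales=(1, 2, 3, 6),
        channels=512,
        dropout_ratio=0.1,
        num_classes=num_classes,
        norm_cfg=norm_cfg,
        align_corners=False,
        loss_decode=dict(type='CrossEntropyLoss', use_sigmoid=False, loss_weight=1.0),
    ),
    auxiliary_head=dict(
        type='FCNHead',
        in_channels=stage_channels[2],  # the auxiliary head reads the third stage
        in_index=2,
        channels=256,
        num_convs=1,
        concat_input=False,
        dropout_ratio=0.1,
        num_classes=num_classes,
        norm_cfg=norm_cfg,
        align_corners=False,
        loss_decode=dict(type='CrossEntropyLoss', use_sigmoid=False, loss_weight=0.4),
    ),
    train_cfg=dict(),
    test_cfg=dict(mode='whole'),
)
```

Deriving both heads' `in_channels` from a single `stage_channels` list means that switching `arch` to another ConvNeXt variant keeps the decode and auxiliary heads consistent with the backbone.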

Regards!